In the fast-paced world of e-commerce, where data holds the keys to success, developers stand at the center of innovation. Today, we’re starting our adventure by exploring how to dig up valuable information from one of the biggest retail players: Walmart.

With its vast and diverse product offerings and significant online presence, Walmart is a treasure trove of information for data analysts and developers. However, navigating the complexities of data acquisition, analysis, and ethical considerations can be challenging. In this article, we will provide you with a comprehensive roadmap to master the art of web scraping for product analysis, all while staying within the bounds of legality and ethics.

Whether you’re a data scientist, a business owner seeking actionable insights, or simply a curious developer, this article serves as your gateway to understanding the transformative potential of web scraping. We will not only delve into the essential tools and techniques required to crawl Walmart’s digital shelves but also guide you on how to effectively scrape bits of valuable data.

At the core of our project lies Crawlbase, an invaluable web scraping tool that streamlines the process, enhancing your ability to extract essential data from Walmart’s online domain. By the end of this journey, you’ll be equipped not only with the technical expertise to scrape data effectively but also with a profound appreciation for the role data plays in shaping the landscape of e-commerce.

So, get ready to dive in. We’re about to set off on a transformative journey into the world of web scraping and product analysis.

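Table of Contents

I. Understanding Data Analysis and Its Role in E-commerce

II. Project Scope and Flow

III. Setting Up the Environment

IV. Creating an Endpoint

V. Fetching HTML using the Crawling API

VI. Writing a Custom Scraper using Cheerio

VII. Streamline the Scraping Process

VIII. Testing the Flow

IX. Conclusion

X. Frequently Asked Questions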

I. Understanding Data Analysis and Its Role in E-commerce

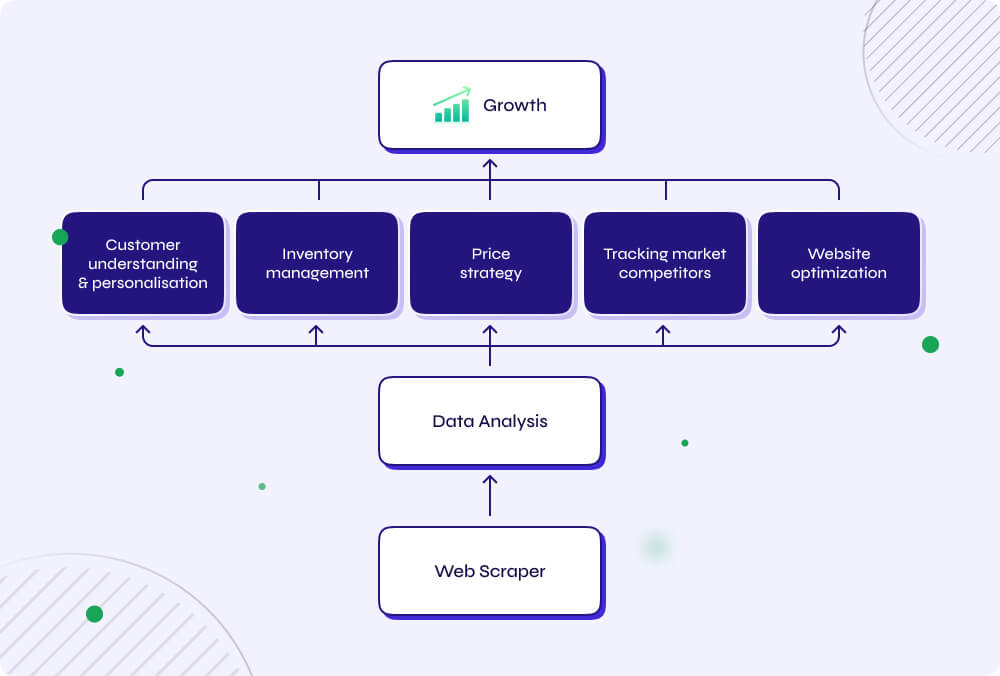

Data analysis is the process of inspecting, cleaning, and interpreting data with the aim of discovering valuable insights, drawing conclusions, and supporting decision-making. In the context of e-commerce, data analysis serves as the compass that guides businesses through the ever-shifting terrain of the digital marketplace.

Why Data Analysis Matters

Customer Understanding: E-commerce businesses deal with a diverse customer base. Data analysis allows them to gain a deeper understanding of their customers’ behaviors, preferences, and purchasing patterns. By analyzing historical transaction data, for example, businesses can identify which products are most popular, who their most valuable customers are, and what marketing strategies resonate the most.

Personalization: Today’s consumers expect a personalized shopping experience. Data analysis can be leveraged to create tailored product recommendations, personalized marketing campaigns, and customized content, increasing customer engagement and loyalty.

Pricing Strategy: The digital marketplace is highly competitive, with prices fluctuating frequently. Data analysis enables e-commerce businesses to monitor competitor pricing, adjust their own pricing strategies in real time, and identify opportunities to offer competitive prices without sacrificing profitability.

Inventory Management: Maintaining optimal inventory levels is a critical aspect of e-commerce operations. Data analysis helps businesses predict demand trends, reducing the risk of overstocking or understocking products. This, in turn, improves cash flow and ensures customers can access products when they want them.

Tracking Market Competitors: Businesses can gain an extensive understanding of their rivals, including their products, pricing strategies, marketing approaches, and customer behavior. This data-oriented analysis allows businesses to make informed decisions, adjust pricing strategies in real time, optimize their product assortments, fine-tune marketing campaigns, and respond proactively to emerging market trends.

Website Optimization: Understanding how customers navigate and interact with your e-commerce website is crucial. Data analysis tools can track user behavior, revealing areas where website optimization can enhance the user experience, increase conversion rates, and reduce bounce rates.

Marketing Effectiveness: E-commerce businesses invest heavily in digital marketing campaigns. Data analysis provides insights into the performance of these campaigns, helping businesses allocate their marketing budgets more effectively and measure the return on investment (ROI) for each channel.

In summary, data analysis is the backbone of a successful e-commerce operation. It enables businesses to make data-driven decisions, adapt to changing market conditions, and create a seamless and personalized shopping experience for their customers.

In the following sections of this blog, we’ll show you how to build your own web scraper to collect data from platforms like Walmart. The scraped data can then feed into effective data analysis, giving your business an advantage in the digital age.

II. Project Scope and Flow

Before we set off on our web scraping journey, it’s essential to understand the scope of this project. In this guide, we’ll focus on crawling product data from Walmart’s Search Engine Results Page (SERP) and building a custom scraper that can be used for e-commerce analysis.

Before diving into the technical aspects, make sure you have the following prerequisites in place:

Basic Knowledge of JavaScript and Node.js: Familiarity with JavaScript and Node.js is essential as we’ll be using these technologies for web scraping and data handling.

Active Crawlbase API Account: You’ll need an active Crawlbase account with valid API credentials. These credentials are necessary to interact with Crawlbase’s web scraping service. Start by signing up for Crawlbase and obtaining your credentials from the account documentation. You will receive 1,000 free requests upon signing up that can be used for this project.

Familiarity with Express.js: While optional, having some knowledge of Express.js can be beneficial if you intend to create an endpoint for receiving scraped data. Express.js will help you set up your server efficiently.

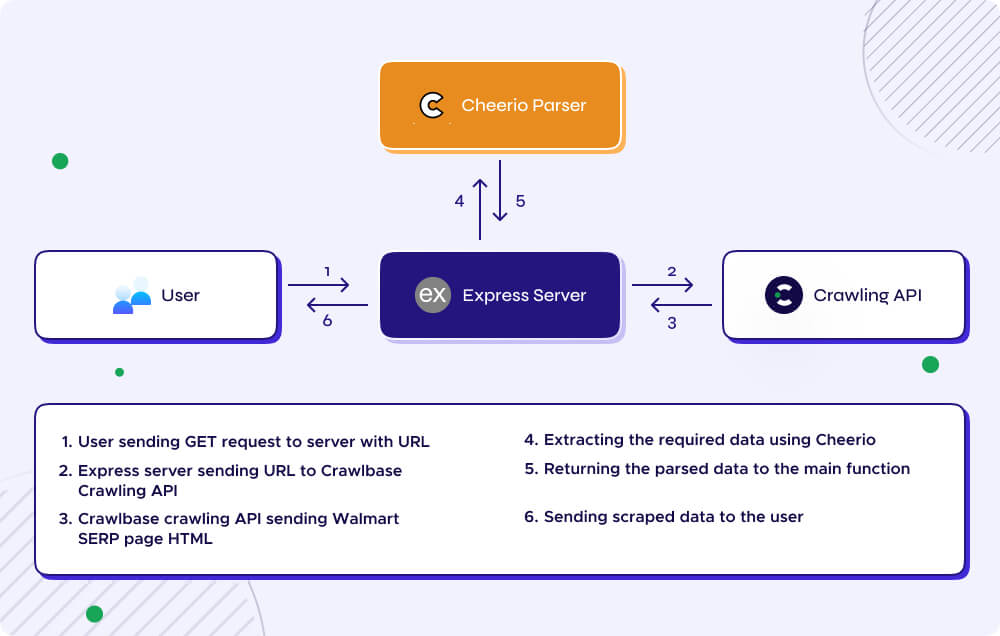

With these prerequisites in place, let us briefly discuss the project flow. It begins by sending a GET request containing a Walmart SERP URL to your Express server. The server, in turn, forwards this URL to the Crawling API, which crawls the Walmart SERP page, retrieving the crucial HTML content.

Once obtained, Cheerio steps in to extract vital product information. This extracted data is then handed back to the main function, ready for further processing. Finally, the scraped data is promptly sent back to the user, completing the seamless project flow, from user input to data delivery.

Now you are well-prepared to follow along with the steps in this guide. Our goal is to provide you with the knowledge and tools needed for seamless web scraping and e-commerce analysis. Let’s get started!

III. Setting Up the Environment

First, make sure Node.js is installed on your development machine. Then create a Node.js project (for example, by running npm init -y in an empty folder). This project will be the foundation for our web scraping environment.

Now, let’s set up your web scraping environment by installing some key dependencies. These tools will help us parse HTML, set up a server for receiving scraped data (if needed), and interact efficiently with web content. Here are the dependencies:

Cheerio Library: Think of Cheerio as your trusty companion for parsing HTML. It’s a powerful library that allows us to extract data from web pages seamlessly.

Express (Optional): If your project requires a server to receive and handle scraped data via an endpoint, you can use Express.js. It’s a versatile framework for setting up web servers.

Crawlbase Library (Optional): To streamline the process of fetching HTML content from websites, you can opt for the Crawlbase library. It’s specifically designed for interacting with the Crawlbase Crawling API, making data retrieval more efficient.

To install these dependencies, simply run the following command in your Node.js project directory:

```bash
npm install express cheerio crawlbase
```

IV. Creating an Endpoint

In this step, you’ll set up an Express.js server and establish a GET route for /scrape. This endpoint serves as the entry point for triggering the web scraping process. When a client sends a GET request to this route, your server will kickstart the scraping operation, retrieve data, and provide a response.

Creating this endpoint proves especially valuable when you intend to offer an API for users or other systems to request real-time scraped data. It grants you control over the timing and methodology of data retrieval, enhancing the versatility and accessibility of your web scraping solution.

Below is an example of how to create a basic Express.js GET route for /scrape:

```javascript
const express = require('express');
```

Save this code as index.js in your Node.js project and run node index.js to start the server.
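Before the route forwards anything, it helps to check that the `url` query parameter is present and actually points at Walmart. The helper below, `getWalmartUrl`, is hypothetical (not part of the original project) and uses Node’s built-in `URL` class; the accepted host names are an assumption, not a Crawlbase requirement.

```javascript
// Hypothetical helper: checks the ?url= query parameter before it is
// forwarded to the Crawling API. The allowed host list is an assumption.
const ALLOWED_HOSTS = new Set(['www.walmart.com', 'walmart.com']);

function getWalmartUrl(query) {
  const raw = query ? query.url : undefined;
  // Express may hand us a string, an array (repeated params), or undefined.
  if (typeof raw !== 'string' || raw.length === 0) {
    return { ok: false, error: 'Missing "url" query parameter' };
  }
  let parsed;
  try {
    parsed = new URL(raw); // throws a TypeError on malformed input
  } catch (err) {
    return { ok: false, error: 'Invalid URL' };
  }
  if (parsed.protocol !== 'https:' || !ALLOWED_HOSTS.has(parsed.hostname)) {
    return { ok: false, error: 'Only https Walmart URLs are accepted' };
  }
  return { ok: true, url: parsed.toString() };
}

// Example call with the same kind of query object Express puts in req.query.
console.log(getWalmartUrl({ url: 'https://www.walmart.com/search?q=iphone+14+pro' }));
```

Inside the route, a failed check can be answered early with `res.status(400).json({ error: check.error })`, so no API request is spent on an unusable URL.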

V. Fetching HTML using the Crawling API

Now that you’ve got your API credentials and your server is all set up with the required dependencies, let’s move on to the next step: using the Crawlbase Crawling API to grab HTML content from Walmart’s SERP page.

Here’s the deal: the Crawling API fetches web pages on your behalf, routing each request through Crawlbase’s proxy network, and returns each page to you as raw HTML.

In this step, we’ll show you how to use the Crawling API. It’s like telling the API, “Hey, can you get me the Walmart web page?” and it goes and gets it for you. We’ll also show you how to authenticate these requests with your API token.
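Under the hood, the Crawling API is an HTTPS endpoint that takes your token and the URL-encoded target page as query parameters. The sketch below calls it directly, assuming the `https://api.crawlbase.com/?token=...&url=...` form from Crawlbase’s documentation and Node 18 or later for the global `fetch`; `YOUR_TOKEN`, `buildCrawlingApiUrl`, and `fetchRawHtml` are placeholders, not names from the Crawlbase library.

```javascript
// Sketch: calling the Crawling API over plain HTTPS instead of the library.
// Assumes Node 18+ (global fetch) and the endpoint form from Crawlbase's docs.
const CRAWLING_API_ENDPOINT = 'https://api.crawlbase.com/';

// Builds the request URL; encoding the target keeps its own ?q=... intact.
function buildCrawlingApiUrl(token, targetUrl) {
  return `${CRAWLING_API_ENDPOINT}?token=${encodeURIComponent(token)}&url=${encodeURIComponent(targetUrl)}`;
}

// Fetches the raw HTML of targetUrl through the Crawling API.
// Only the HTTP status is checked here; real code may inspect more.
async function fetchRawHtml(token, targetUrl) {
  const response = await fetch(buildCrawlingApiUrl(token, targetUrl));
  if (!response.ok) {
    throw new Error(`Crawling API request failed with HTTP ${response.status}`);
  }
  return response.text();
}

console.log(buildCrawlingApiUrl('YOUR_TOKEN', 'https://www.walmart.com/search?q=iphone+14+pro'));
```

The library used in the rest of this guide wraps this same request, so either form should return the same HTML.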

To start, we’ll use the Crawlbase library to make a GET request to the URL specified in the query string (req.query.url). Insert the following lines into the main code:

```javascript
const { CrawlingAPI } = require("crawlbase");
```

Instead of expecting data in the request body, this code uses the URL parameter to specify the Walmart SERP URL to scrape (which we’ll show later using Postman). It then logs the response from the Crawling API to the console and handles errors, responding with an error message and status code 500 if there’s a problem.

Here is the updated code snippet:

```javascript
const express = require('express');
```

Run the server and pass the URL parameter to the route; you should get back the raw HTML of the Walmart page.
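To keep the route handler small, the Crawling API call and its status check can live in a separate function. This sketch assumes, as in the `crawlbase` package README, that a `CrawlingAPI` client’s `get(url)` resolves to a response with `statusCode` and `body`; `fetchHtml` and the `CRAWLBASE_TOKEN` environment variable are hypothetical names. The client is passed in as a parameter, so the logic can be checked with a stand-in object.

```javascript
// Hypothetical wrapper around a Crawlbase CrawlingAPI client, assuming
// get(url) resolves to a response object with `statusCode` and `body`.
// In the server you would create the client with something like:
//   const api = new CrawlingAPI({ token: process.env.CRAWLBASE_TOKEN });
async function fetchHtml(api, url) {
  const response = await api.get(url);
  if (response.statusCode !== 200) {
    // Turn non-200 results into errors so the route can reply with a 500.
    throw new Error(`Crawling API returned status ${response.statusCode}`);
  }
  return response.body;
}

// Inside the /scrape route:
//   try { res.send(await fetchHtml(api, req.query.url)); }
//   catch (error) { res.status(500).send({ error: error.message }); }
```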

The HTML data you get from Crawlbase will be the building blocks for our next steps. We’ll use this raw HTML to find and collect the data we need using Cheerio and some custom tricks we’ll teach you.

By getting this part right, you’ll be all set to gather web data like a pro, and you’ll be ready to dive into the fun stuff – extracting and analyzing the data you’ve collected.

VI. Writing a Custom Scraper using Cheerio

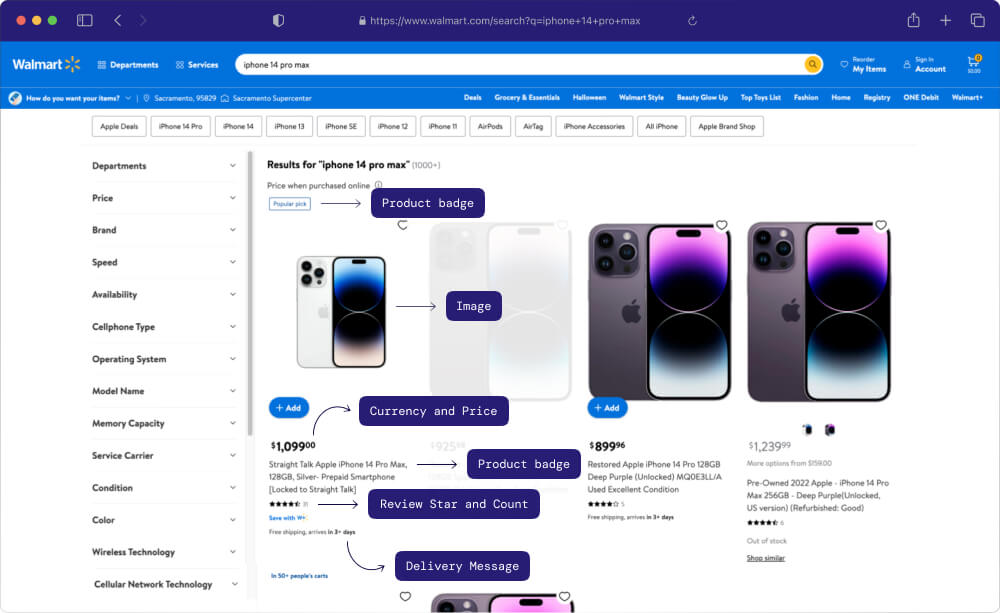

In this step, we’re getting to the heart of web scraping. We want to get some really useful information from a Walmart Search Engine Results Page (SERP) URL, and here’s how we’re going to do it using Cheerio.

Imagine building your own tool to grab exactly what you want from a webpage – that’s what a custom scraper is. Our aim is to pull out important details like product names, prices, and ratings from the Walmart search results.

By creating this custom tool, you get to be the boss of how you want to collect data. We’ll show you how to pick and choose the information you need with selectors. It’s like you’re saying, “Please grab me the titles, prices, and ratings,” and it’ll do just that.

This hands-on approach gives you the power to fine-tune your scraping to match Walmart’s webpage structure perfectly. It ensures you get the data you want quickly and accurately.

```javascript
const $ = cheerio.load(html),
```

Essentially, this code walks through the HTML retrieved from the specified Walmart URL, uses class selectors to locate each product’s data within the page structure, and stores each piece of information in a corresponding variable such as title, images, price, currency, and more.
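Because Walmart’s class names change over time, the following sketch illustrates the pattern rather than reproducing the original selectors: iterate over product containers with Cheerio’s `$(selector).each()`, read fields with `.find()`, `.text()`, and `.attr()`, and split a displayed price such as `$1,099.00` into a currency symbol and a number. Every selector in `SELECTORS` is a hypothetical placeholder to replace after inspecting the live page, and `extractProducts` expects an already loaded `$` from `cheerio.load(html)`.

```javascript
// Placeholder selectors: assumptions, NOT Walmart's real markup.
// Inspect the live search page and replace them before use.
const SELECTORS = {
  item: '.product-card',
  title: '.product-title',
  price: '.product-price',
  image: 'img',
  rating: '.product-rating',
};

// Splits a displayed price such as "Now $1,099.00" into { currency: '$', price: 1099 }.
function parsePrice(text) {
  const match = /([$€£])\s*(\d[\d,]*(?:\.\d+)?)/.exec(text || '');
  if (!match) return { currency: null, price: null };
  return { currency: match[1], price: Number(match[2].replace(/,/g, '')) };
}

// Collects one object per product card from an already loaded Cheerio root,
// e.g. const $ = cheerio.load(html); const products = extractProducts($);
function extractProducts($, selectors = SELECTORS) {
  const products = [];
  $(selectors.item).each((_, el) => {
    const card = $(el);
    const { currency, price } = parsePrice(card.find(selectors.price).first().text());
    products.push({
      title: card.find(selectors.title).first().text().trim(),
      image: card.find(selectors.image).first().attr('src') || null,
      price,
      currency,
      rating: card.find(selectors.rating).first().text().trim() || null,
    });
  });
  return products;
}
```

Keeping price parsing in its own function makes it easy to adjust when the displayed format changes (for example, "Now" prefixes or price ranges) without touching the selector logic.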

VII. Streamline the Scraping Process

In this crucial phase, we bring together all the elements to create a smooth web scraping process.

We begin with the /scrape endpoint, which handles requests on localhost.

Next, we add the code that uses the Crawlbase library, which helps us crawl Walmart search engine results page (SERP) URLs without getting blocked.

Lastly, we add our custom Cheerio scraper, which turns the HTML into a neatly organized, easy-to-read JSON response.

Here’s the complete code:

```javascript
const express = require('express');
```

Once a URL is provided, our server swings into action. This automated process ensures that you can access valuable information from Walmart’s search results in no time, making your experience more efficient and user-friendly.
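Put together, the flow above fits in one route handler. In this sketch, `makeScrapeHandler` and its three injected functions are hypothetical: `validate` stands in for the query check, `fetchHtml` for the Crawling API call, and `parse` for the Cheerio scraper. Injecting them keeps the control flow (400 for a bad URL, 500 for a failed crawl, JSON otherwise) visible on its own.

```javascript
// Hypothetical composition of the /scrape flow: validate -> crawl -> parse -> respond.
// validate(query) returns { ok: true, url } or { ok: false, error };
// fetchHtml(url) resolves to raw HTML; parse(html) returns an array of products.
function makeScrapeHandler({ validate, fetchHtml, parse }) {
  return async (req, res) => {
    const check = validate(req.query);
    if (!check.ok) {
      return res.status(400).json({ error: check.error });
    }
    try {
      const html = await fetchHtml(check.url);
      const products = parse(html);
      return res.json({ url: check.url, count: products.length, products });
    } catch (error) {
      console.error('Scrape failed:', error.message);
      return res.status(500).json({ error: 'Scraping failed' });
    }
  };
}
```

With Express, the handler would be mounted as `app.get('/scrape', makeScrapeHandler({ ... }))`, where `parse` wraps your Cheerio scraper (`cheerio.load(html)` followed by your selectors).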

VIII. Testing the Flow

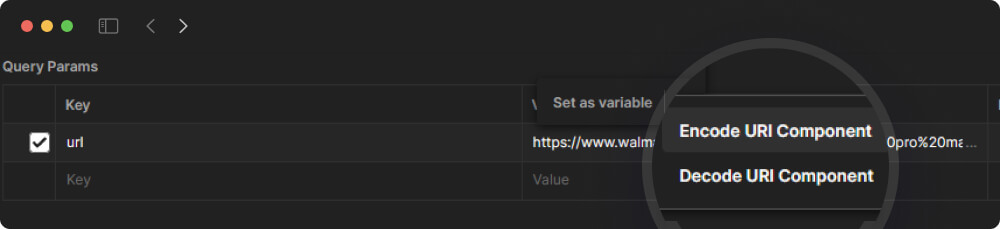

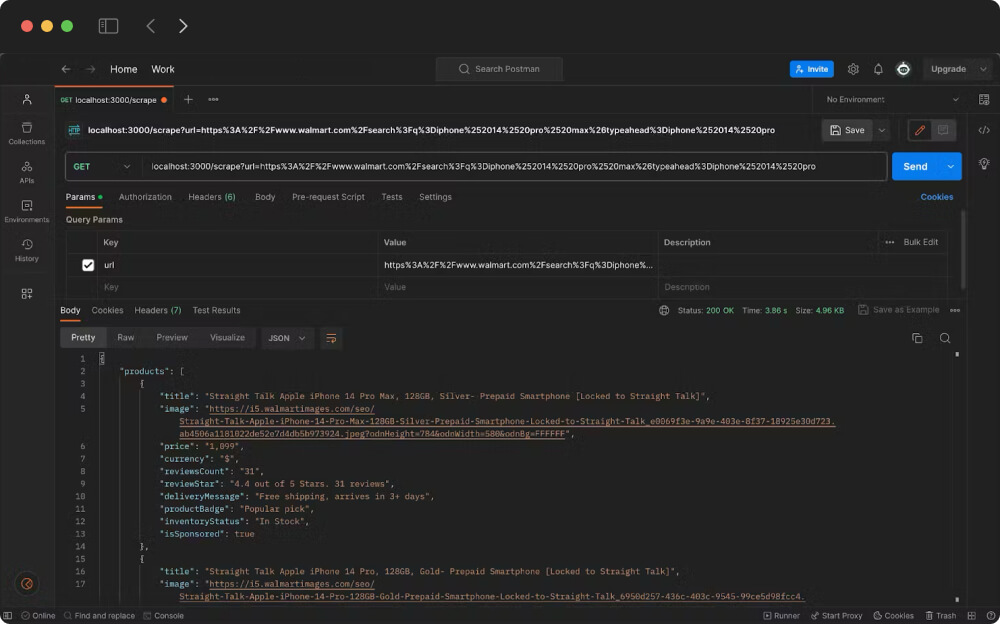

To test our project, we recommend utilizing Postman as it provides a user-friendly graphical interface for making HTTP requests to APIs.

You can send a GET request to the local server, configured on port 3000, at the /scrape endpoint. Include a url query parameter containing the fully encoded target URL.

To encode a URL, you can highlight the URL string in Postman, click the Meatballs menu and select Encode URI Component.

Complete Postman request:

```
http://localhost:3000/scrape?url=https%3A%2F%2Fwww.walmart.com%2Fsearch%3Fq%3Diphone%2B14%2Bpro
```
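Outside Postman, the same encoding is what JavaScript’s built-in `encodeURIComponent` produces. Encoding matters because an unencoded `?` or `&` inside the Walmart URL would be read as a parameter of /scrape itself. This sketch assumes the server from the earlier steps listens on port 3000; `buildScrapeRequest` is a hypothetical helper.

```javascript
// Builds the local /scrape request with the Walmart URL fully encoded, so the
// target's own "?" and "&" are not mistaken for parameters of /scrape.
// Assumes the server from the earlier steps listens on port 3000.
function buildScrapeRequest(targetUrl, endpoint = 'http://localhost:3000/scrape') {
  return `${endpoint}?url=${encodeURIComponent(targetUrl)}`;
}

const requestUrl = buildScrapeRequest('https://www.walmart.com/search?q=iphone+14+pro');
console.log(requestUrl);
// prints http://localhost:3000/scrape?url=https%3A%2F%2Fwww.walmart.com%2Fsearch%3Fq%3Diphone%2B14%2Bpro
```

On Node 18 or later, `await fetch(requestUrl)` sends the same request Postman does.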

So, when you make this GET request in Postman, it will be sent to your local server, and your server, using the Crawlbase library and Cheerio, will scrape the HTML content from the specified Walmart URL and return the relevant data as shown in the JSON response below:

```json
{
```

Now that you have successfully scraped the data, the possibilities are endless. You have the flexibility to scale your project and scrape thousands of Search Engine Results Pages (SERPs) per hour, and you can choose to store this valuable data in a database or securely in the cloud.
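Scaling up usually means running several crawls at once while capping how many are in flight, so you stay within your Crawlbase account’s rate limits. Here is a minimal concurrency limiter in plain JavaScript; `mapWithConcurrency` is a hypothetical helper, and the limit is something to tune for your account, not a recommended value.

```javascript
// Runs worker(item, index) for every item with at most `limit` calls in flight.
// Results keep the order of the input array. If any worker throws, the
// returned promise rejects (other in-flight calls still run to completion).
async function mapWithConcurrency(items, limit, worker) {
  const results = new Array(items.length);
  let next = 0; // shared cursor; safe because JavaScript runs one callback at a time

  async function runner() {
    while (next < items.length) {
      const index = next++;
      results[index] = await worker(items[index], index);
    }
  }

  const runnerCount = Math.max(1, Math.min(limit, items.length));
  await Promise.all(Array.from({ length: runnerCount }, () => runner()));
  return results;
}

// Usage idea (crawlOnePage is your own function that fetches and parses one URL):
//   const pages = await mapWithConcurrency(serpUrls, 3, (url) => crawlOnePage(url));
```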

With the data at your disposal, you can collaborate with your company’s data scientists to formulate strategies that drive your business’s growth and success. The power of web scraping combined with data analysis is now in your hands, empowering you and your team to make impactful decisions to stay relevant in the competitive world of e-commerce.

IX. Conclusion

We’ve taken quite the ride through the world of web scraping and its incredible potential for e-commerce product analysis. From setting up the basics to diving headfirst into data extraction, you’ve seen how this skill can be a game-changer for developers and businesses alike.

Web scraping, done responsibly and ethically, is like your secret sauce for getting ahead, staying in the game, and making smart moves in the fast-paced e-commerce arena. With tools like Crawlbase at your disposal, and the know-how to wield Cheerio like a pro, you’re well on your way to strengthening your e-commerce strategies.

But a word to the wise: With great power comes great responsibility. Always play by the rules, respect website terms, and treat data with the care it deserves.

Armed with the skills and tools from this guide, you’re all set to navigate the competitive e-commerce landscape, make data-driven decisions, and thrive in the digital marketplace.

Thanks for joining us on this adventure through the world of web scraping for e-commerce. Here’s to your success and the game-changing insights you’re about to uncover. Happy scraping!

X. Frequently Asked Questions

Q. How can businesses use data analysis from web scraping to improve their pricing strategies in e-commerce?

Businesses can use web scraping and data analysis to enhance their e-commerce pricing strategies by monitoring competitor prices, implementing dynamic pricing, optimizing prices based on historical data, identifying price elasticity, assessing promotion effectiveness, analyzing abandoned cart data, forecasting demand, strategically positioning themselves in the market, segmenting customers, and conducting A/B tests. These data-driven approaches enable companies to make informed pricing decisions, stay competitive, and maximize revenue while providing value to their customers.
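As one concrete form of competitor price monitoring, two scrapes of the same search can be compared product by product. This sketch assumes each product has a `title` and a numeric `price`; matching on titles is a simplification (a product ID, if you scrape one, is more reliable), and `diffPrices` is a hypothetical name.

```javascript
// Compares two snapshots of scraped products and reports price changes.
// Products are matched by `key` (title by default); new or vanished listings are ignored.
function diffPrices(previous, current, key = (p) => p.title) {
  const before = new Map(previous.map((p) => [key(p), p.price]));
  const changes = [];
  for (const product of current) {
    const k = key(product);
    if (!before.has(k)) continue; // new listing, nothing to compare against
    const oldPrice = before.get(k);
    if (typeof oldPrice !== 'number' || typeof product.price !== 'number') continue;
    if (oldPrice !== product.price) {
      changes.push({
        key: k,
        oldPrice,
        newPrice: product.price,
        // Percentage change rounded to two decimals; null if the old price was 0.
        changePct: oldPrice > 0
          ? Number((((product.price - oldPrice) / oldPrice) * 100).toFixed(2))
          : null,
      });
    }
  }
  return changes;
}
```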

Q. What are the best practices for data storage and management when conducting web scraping for e-commerce product analysis?

Best practices for data storage and management during web scraping for e-commerce product analysis include legal compliance, structured data formats, thorough data cleaning, cloud storage for scalability, regular data backups, encryption for security, access control, version control, defined data retention policies, monitoring and alerts, respectful scraping to avoid IP blocking, documentation of scraping processes, understanding data ownership, and periodic audits. Adhering to these practices ensures data integrity, security, and responsible scraping.
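For the structured-format and retention points, one simple option is JSON Lines: one JSON object per line, each stamped with its source URL and scrape time so later runs can be compared. This sketch uses only Node’s built-in `fs`; the file layout and the `sourceUrl`/`scrapedAt` field names are assumptions.

```javascript
const fs = require('fs');

// Turns scraped products into JSON Lines records with provenance fields.
function toJsonLines(products, sourceUrl, scrapedAt = new Date()) {
  if (products.length === 0) return '';
  return products
    .map((p) => JSON.stringify({ ...p, sourceUrl, scrapedAt: scrapedAt.toISOString() }))
    .join('\n') + '\n';
}

// Appends one run's records, so each scrape adds to the history instead of overwriting it.
function appendSnapshot(filePath, products, sourceUrl) {
  fs.appendFileSync(filePath, toJsonLines(products, sourceUrl));
}
```

Appending plain text keeps each run cheap to write and easy to load later into a database or a data-analysis tool.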

Q. If the search results span multiple pages, how do I scrape the next pages using Crawlbase?

To scrape multiple pages of search results with Crawlbase, you’ll need to use Walmart’s pagination structure. Walmart typically adds a “page” parameter to its search URLs to navigate through result pages. Here’s an example:

- https://www.walmart.com/search?q=iphone%2014%20pro%20max&typeahead=iphone%2014%20pro

- https://www.walmart.com/search?q=iphone+14+pro+max&typeahead=iphone+14+pro&page=2

- https://www.walmart.com/search?q=iphone+14+pro+max&typeahead=iphone+14+pro&page=3

By modifying the “page” parameter in the URL, you can access subsequent pages of search results. With the Crawling API, this means sending each page URL as a separate request and running the same Cheerio scraper on every response.
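The page URLs above can be generated rather than typed. This sketch uses the built-in `URL` and `URLSearchParams` APIs; `buildPageUrls` is hypothetical, and the number of pages a search actually has is something you would need to read from the first page or discover by stopping when a page returns no products.

```javascript
// Builds the URLs for pages 1..pageCount of a Walmart search by setting ?page=N.
// Page 1 is left without a page parameter, matching the first example URL.
function buildPageUrls(searchUrl, pageCount) {
  const urls = [];
  for (let page = 1; page <= pageCount; page++) {
    const url = new URL(searchUrl);
    if (page > 1) url.searchParams.set('page', String(page)); // adds or replaces ?page=
    urls.push(url.toString());
  }
  return urls;
}

console.log(buildPageUrls('https://www.walmart.com/search?q=iphone+14+pro+max&typeahead=iphone+14+pro', 3));
```

Each URL can then be sent to the Crawling API one at a time, or with a small concurrency limit, and parsed with the same scraper.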

Q. Is there a risk of your web scraper encountering blocks while scraping Walmart?

Yes, there is a significant likelihood of your web scraper facing blocks, especially if you’re not using a vast pool of proxies. While it’s possible to construct your own proxy pool, this can be both time-consuming and costly. This is where Crawlbase comes in: it operates on a foundation of millions of proxies, enhanced with AI logic that mimics human behavior to avoid bot detection and CAPTCHAs. By utilizing Crawlbase, you can scrape web pages anonymously, greatly reducing IP blocks and proxy-related challenges while saving valuable time and resources.
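Even with a proxy service in front, an individual crawl can still fail now and then. A common safeguard is to retry with exponential backoff. The sketch below is a generic, hypothetical `withRetry` helper, not a Crawlbase feature, and its retry count and delays are arbitrary defaults.

```javascript
// Retries an async operation, waiting baseDelayMs * 2^attempt between attempts.
// Rethrows the last error once `retries` extra attempts have been used up.
async function withRetry(operation, { retries = 3, baseDelayMs = 1000 } = {}) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return await operation(attempt);
    } catch (error) {
      lastError = error;
      if (attempt === retries) break; // no more attempts left
      const delay = baseDelayMs * 2 ** attempt;
      await new Promise((resolve) => setTimeout(resolve, delay));
    }
  }
  throw lastError;
}

// Usage idea: const html = await withRetry(() => crawlOnePage(url), { retries: 2 });
```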