When selecting new software, the perspectives of previous users are invaluable. Online reviews offer a straightforward look at genuine user experiences. In this landscape, G2 has established itself as a reliable platform for trustworthy software feedback.

Many buyers consult G2 for insights, appreciating the authenticity and depth of its reviews. Given this trend, businesses are increasingly interested in tapping into the wealth of information these reviews contain.

This guide will lead you through the process of web-scraping G2 product reviews. We’ll cover topics from understanding the review structure to bypassing potential blocks and analyzing the data. Equip yourself with the skills to harness insights from G2 reviews effectively.

Table of Contents

I. Project Scope

II. Getting Started

III. Installing Required Packages

IV. Initializing Express & Crawlbase Crawling API

V. Crawling G2 Reviews Page with Crawlbase

VI. Parsing HTML with Cheerio

VII. Configuring Firebase Database

VIII. Storing Data in Firebase Realtime Database

IX. Conclusion

X. Frequently Asked Questions

I. Project Scope

Let’s explore the core of our project, which is centered on extracting G2 product reviews. To ensure a smooth process, we’ve combined a prime selection of tools: Crawlbase, JavaScript, Firebase, ExpressJS, and Cheerio. Each of these tools plays a unique role in this project.

1. The Crawlbase Advantage

Crawlbase’s Crawling API is our main tool when tackling challenges like blocks and CAPTCHAs. This API ensures we can gather the data we need without any interruptions. With Crawlbase, we’re all set to scrape effectively and efficiently.

2. Unleashing JavaScript’s Magic

JavaScript is the magic behind modern web development, and we’re putting it to work in our project. It’s our go-to for making things happen dynamically. From dealing with content to tweaking HTML elements, JavaScript’s flexibility gives us the power to craft a responsive and dynamic scraping solution.

3. The Firebase Connection

Our trusty sidekick in this adventure is Firebase. This powerful real-time database platform becomes our playground, where we securely store all those precious G2 product reviews. With Firebase, our data is neatly organized and ready for analysis whenever needed.

4. Building with Express

Think of Express.js as the strong foundation of our project. It’s like the scaffold that lets us handle incoming requests and serve up the responses. Setting up routes, managing our scraping tasks, and connecting to Firebase—all this and more is possible because of ExpressJS.

5. Cheerio: The HTML Magician

Cheerio is our secret to understanding HTML. With this parser, we can pick out the information we need from the raw HTML of G2 reviews.

II. Getting Started

Creating a Crawlbase Account

Visit the Crawlbase website and complete the sign-up process to create an account. Keep track of your account tokens, especially the Normal request (TCP) token, as we will use it to crawl the content of the G2 reviews page.

Note that G2 is a complex website, and Crawlbase implements a particular solution to bypass G2’s bot detection algorithm. Contact the support team to enable this tailored solution for your account.

Installing Node.js

Go to the official Node.js website and download the appropriate installer for your operating system (Windows, macOS, or Linux). Run the installer and follow the on-screen instructions.

Postman

Create a free Postman account. Postman is a popular API (Application Programming Interface) testing and development tool. Its user-friendly interface lets developers send HTTP requests to APIs, view the responses, and interact with different API endpoints.

Firebase Project

Set up a free account at Google Firebase. Keep this account handy, as we will use its real-time database later in the article.

III. Installing Required Packages

Now that you’ve laid down the initial groundwork, let’s equip your development environment with the necessary tools. We’ll install the required packages using npm (Node Package Manager).

Using npm, a command-line tool that accompanies Node.js, you can effortlessly download and manage external libraries and packages. These packages provide pre-built functionalities that simplify various tasks, including web scraping.

In this step, we’ll initiate a new Node.js project and install three vital packages: express, cheerio, and crawlbase. The express package lets you create a web server easily, cheerio handles HTML parsing, and the crawlbase library provides access to the Crawling API.

Open your terminal or command prompt, navigate to your project directory, and run the following commands:

    npm init --yes
    npm install express cheerio crawlbase

The first command initializes a new Node.js project with default settings, while the second command downloads and installs these packages and their dependencies, saving them in a folder called node_modules within your project directory.

These packages will serve as the building blocks of this project, enabling us to interact with web content, extract information, and store valuable insights.

IV. Initializing Express & Crawlbase Crawling API

Initialize your ExpressJS app and define the necessary routes. We’ll create a route to handle the scraping process.

    const express = require("express");

This code establishes an ExpressJS web server that facilitates web scraping via the crawlbase library. Upon receiving a GET request at the /scrape endpoint, the server extracts a URL from a query parameter. It then uses the CrawlingAPI class, authenticated with your user token (make sure to replace the USER_TOKEN value with your actual Normal request (TCP) token), to perform a GET request on the given URL.

Successful responses are formatted into JSON and sent back to the client. In case of errors during the API call, the server responds with a 500 status code and an error message. The server is configured to listen on a specified port or defaults to 3000, with a log message indicating its operational status.
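As a rough sketch of the route described above, the handler below receives the Crawling API client as a parameter, so it can be exercised without network access. The `url` query parameter name, the `makeScrapeHandler` helper, and the 400 response for a missing parameter are assumptions made for illustration, not the original listing.

```javascript
// Hypothetical helper: builds the /scrape handler around a Crawling API client.
// `api` is expected to expose get(url), returning a promise that resolves to
// an object with statusCode and body, as the crawlbase CrawlingAPI does.
function makeScrapeHandler(api) {
  return async function scrapeHandler(req, res) {
    const targetUrl = req.query.url; // assumed query parameter name
    if (!targetUrl) {
      // Assumption: reject requests that omit the target URL.
      return res.status(400).json({ error: 'Missing "url" query parameter' });
    }
    try {
      const response = await api.get(targetUrl);
      return res.json({ statusCode: response.statusCode, html: response.body });
    } catch (error) {
      // Any failure during the API call becomes a 500 with an error message.
      return res.status(500).json({ error: 'Failed to crawl the requested URL' });
    }
  };
}

// Wiring it up (requires `npm install express crawlbase`):
//   const express = require('express');
//   const { CrawlingAPI } = require('crawlbase');
//   const api = new CrawlingAPI({ token: 'USER_TOKEN' });
//   const app = express();
//   app.get('/scrape', makeScrapeHandler(api));
//   const PORT = process.env.PORT || 3000;
//   app.listen(PORT, () => console.log(`Server listening on port ${PORT}`));
```

Passing the client in, rather than creating it inside the handler, keeps the token handling in one place and makes the route easy to test with a stub.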

V. Crawling G2 Reviews Page with Crawlbase

In the process of extracting G2 product reviews, we will utilize the robust capabilities of Crawlbase. This step involves retrieving the HTML content of the G2 reviews page, which is a critical precursor to parsing and extracting valuable information.

By integrating Crawlbase, you gain a significant advantage in navigating potential challenges such as CAPTCHAs and IP blocks. Crawlbase’s Crawling API allows you to bypass these obstacles, ensuring an efficient and uninterrupted extraction process.

To verify that your /scrape route works, start the ExpressJS server by running node index.js or, if you’ve configured a start script in your package.json file, npm start.

The server will be active and running on port 3000 by default. You can access it through your browser by navigating to http://localhost:3000.

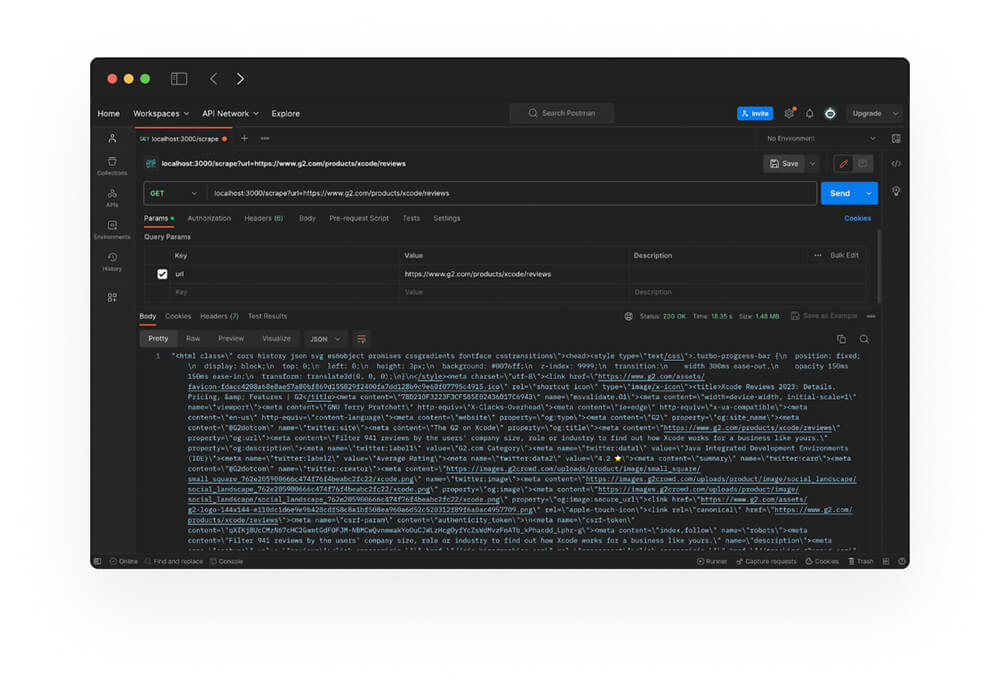

To test the /scrape route, open Postman and create a new GET request. Enter the URL http://localhost:3000/scrape and add the URL of the page you want to crawl as a query parameter. In this example, we will crawl and scrape Xcode reviews.

Send the request by clicking the Send button. Postman will capture the response, which will contain the HTML content of the G2 product reviews page.

By utilizing Postman to fetch the HTML content of our target URL, you can visually inspect the returned content. This validation step ensures that your integration with Crawlbase is successful, and you’re able to retrieve the essential web content.
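When the target URL travels inside a query parameter, it should be percent-encoded so that its own `?` and `&` characters are not mistaken for those of the request. If you prefer to build the request URL in code rather than in Postman, a minimal sketch could look like this; the `url` parameter name and the `buildScrapeRequestUrl` helper are assumptions for illustration.

```javascript
// Hypothetical helper: builds the request URL for the local /scrape route.
function buildScrapeRequestUrl(targetUrl, base = 'http://localhost:3000/scrape') {
  const requestUrl = new URL(base);
  // searchParams.set percent-encodes the value ('url' is an assumed name).
  requestUrl.searchParams.set('url', targetUrl);
  return requestUrl.toString();
}

console.log(buildScrapeRequestUrl('https://www.g2.com/products/xcode/reviews'));
// prints "http://localhost:3000/scrape?url=https%3A%2F%2Fwww.g2.com%2Fproducts%2Fxcode%2Freviews"
```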

VI. Parsing HTML with Cheerio

Once we obtain the HTML content, we will use Cheerio to parse it and extract the relevant information. This involves finding the right selectors, which are patterns used to target specific elements in the HTML structure. Here’s how you can obtain selectors:

1. Inspect the Web Page

Open the G2 product reviews page in your web browser. Right-click on the element you want to scrape and select Inspect or Inspect Element. This will open the browser’s developer tools.

2. Locate the Element

In the developer tools, you’ll see the HTML structure of the page. Navigate through the elements until you find the one containing the desired data. Hover over different HTML elements to highlight them on the page, helping you identify the correct element.

3. Identify Classes and IDs

Look for attributes like class and id that uniquely identify the element you want to scrape. You can turn these attributes into selectors and use them with Cheerio to get the desired value.

4. Use CSS Selectors

Once you’ve identified the classes or IDs, you can use them as CSS selectors in your Cheerio code. For example, if you want to scrape review titles, you might have a selector like .review-title to target elements with the review-title class.

5. Test Selectors with Cheerio

To ensure your selectors are accurate, open a new Node.js script and use Cheerio to load the HTML content and test your selectors. Use the $(selector) syntax to select elements and verify that you capture the correct data.

    function parsedDataFromHTML(html) {

This code defines a function named parsedDataFromHTML that takes HTML content as input and uses the Cheerio library to extract specific data from it. It initializes an object called productData with fields for product information and reviews.

The code employs Cheerio selectors to extract details such as product name, star rating, total reviews count, and individual review data. It iterates through review elements, extracting reviewer details, review text, star ratings, reviewer avatar, review links, profile titles, profile labels, and review dates.

All this data is organized into a structured object and returned. In case of any errors during the parsing process, the function returns an error message.
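To make the shape of such a parser concrete, here is a reduced sketch in the same spirit. It is not the parsedDataFromHTML function itself: the `parseReviews` name and every selector in it are placeholders rather than G2’s real markup, so replace them with the classes and IDs you find in the developer tools.

```javascript
// Sketch of a Cheerio-based parser.
// `$` is the function returned by cheerio.load(html).
// All selectors below are hypothetical placeholders, not G2's actual markup.
function parseReviews($) {
  const productData = {
    productName: $('.product-name').first().text().trim(),
    reviews: [],
  };
  // Visit each review container and collect its fields.
  $('.review').each((_, element) => {
    const review = $(element);
    productData.reviews.push({
      title: review.find('.review-title').text().trim(),
      text: review.find('.review-text').text().trim(),
      date: review.find('time').attr('datetime') || '',
    });
  });
  return productData;
}

// Usage (requires `npm install cheerio`):
//   const cheerio = require('cheerio');
//   const $ = cheerio.load(html);
//   console.log(parseReviews($));
```

If a selector matches nothing, Cheerio returns an empty selection, so the corresponding field comes back as an empty string instead of throwing. That makes a stale selector easy to spot in the output.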

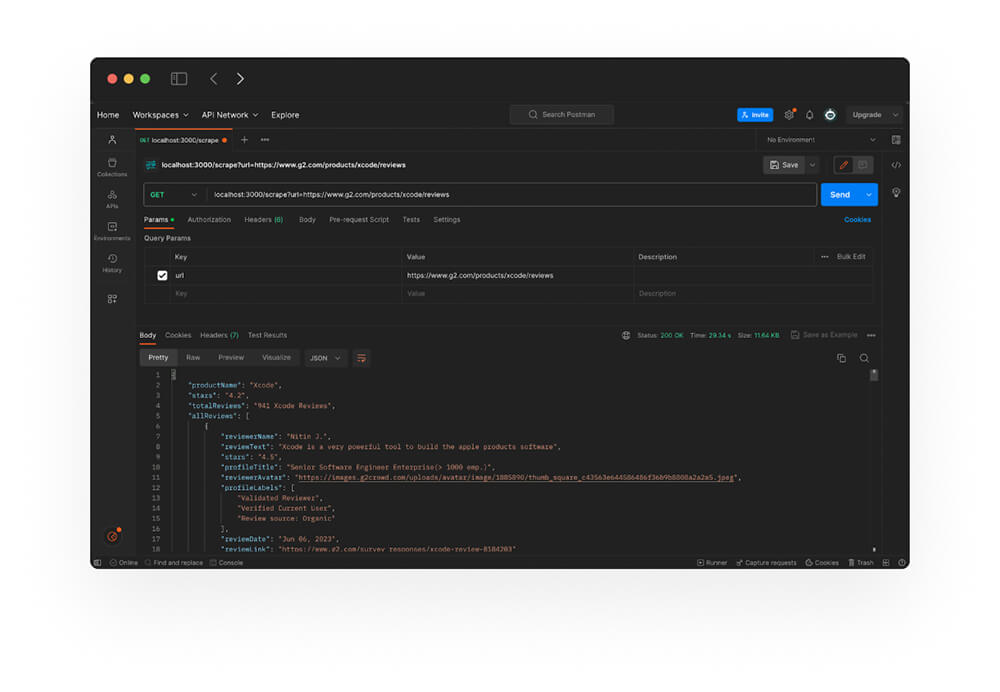

Note: To verify that parsing is successful, follow the steps below.

- Ensure that your Express server is running on port 3000. If it is not, run node index.js or npm start to start the server.

- Open Postman and create a new GET request. Enter http://localhost:3000/scrape as the request URL and add the URL query parameters.

- Send the request by clicking the Send button. Postman will capture the JSON content parsed from the G2 product reviews page.

VII. Configuring Firebase Database

At this point, we will be integrating Firebase into your web scraping project. You can follow the steps below.

1. Create a New Project

Log in to your Firebase console and create a new project by clicking the Add project button. Give your project a suitable name and select your preferred options.

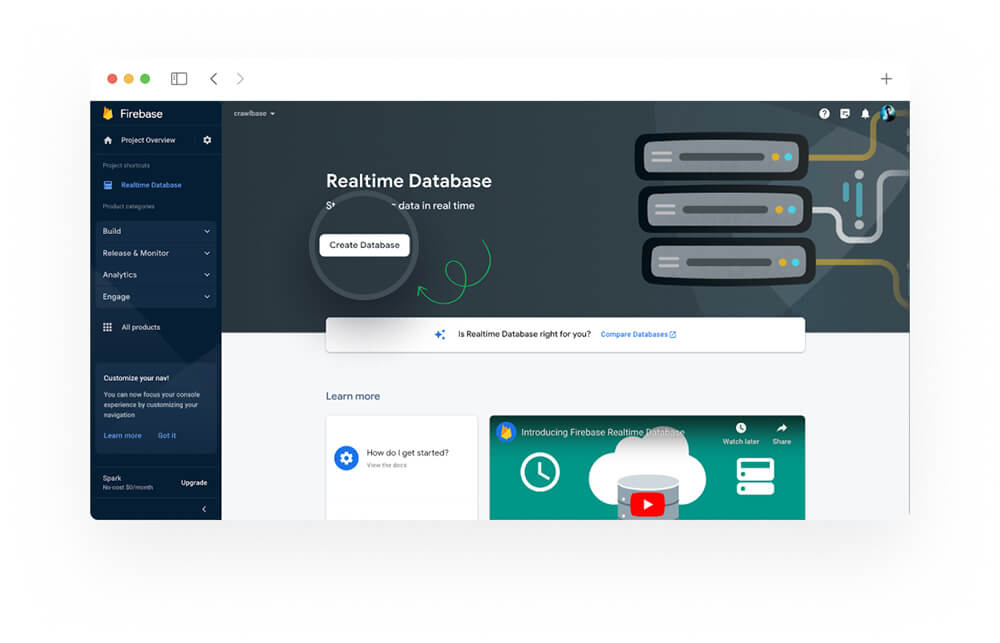

2. Create a Realtime Database

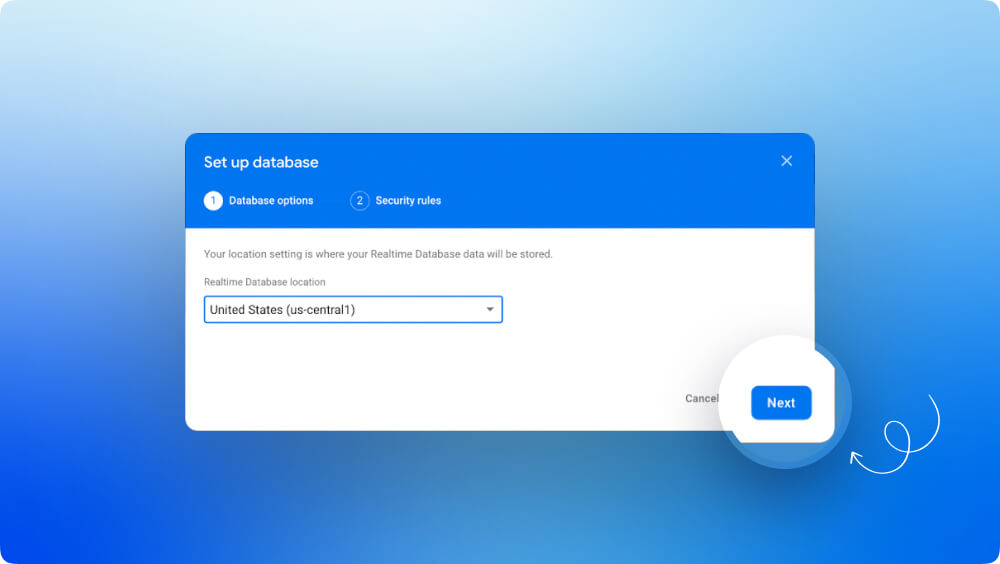

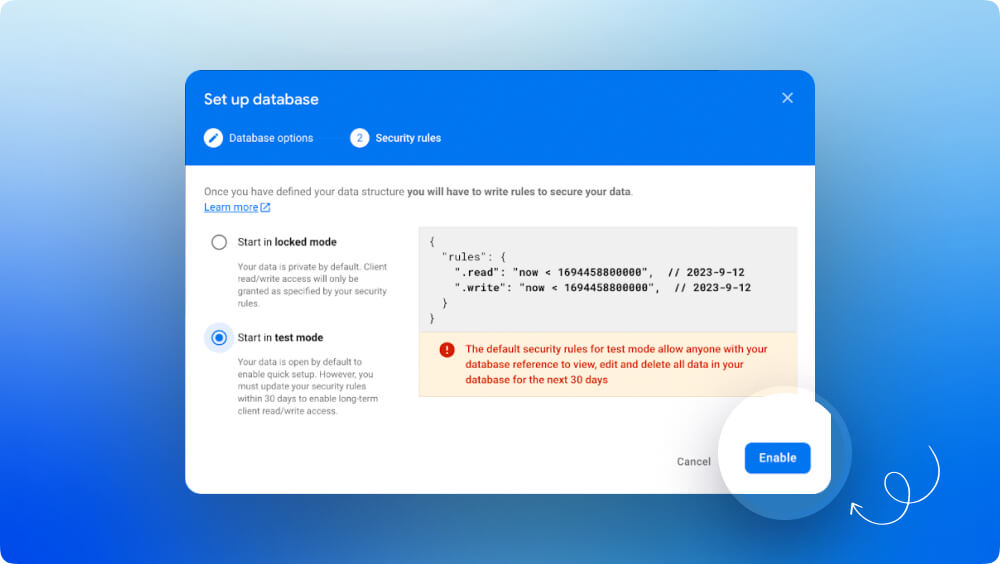

After successfully setting up the project, navigate to the left-hand menu of the Firebase console and choose Database. From there, click Create Database and select Start in test mode. Test mode creates a Realtime Database with permissive security rules, which makes it convenient for testing and development but unsuitable for production.

3. Review Rules and Security

While in test mode, be aware that your database is accessible with very few security restrictions. As you move to production, make sure to review and implement appropriate security rules to protect your data. Click Next and then Enable.

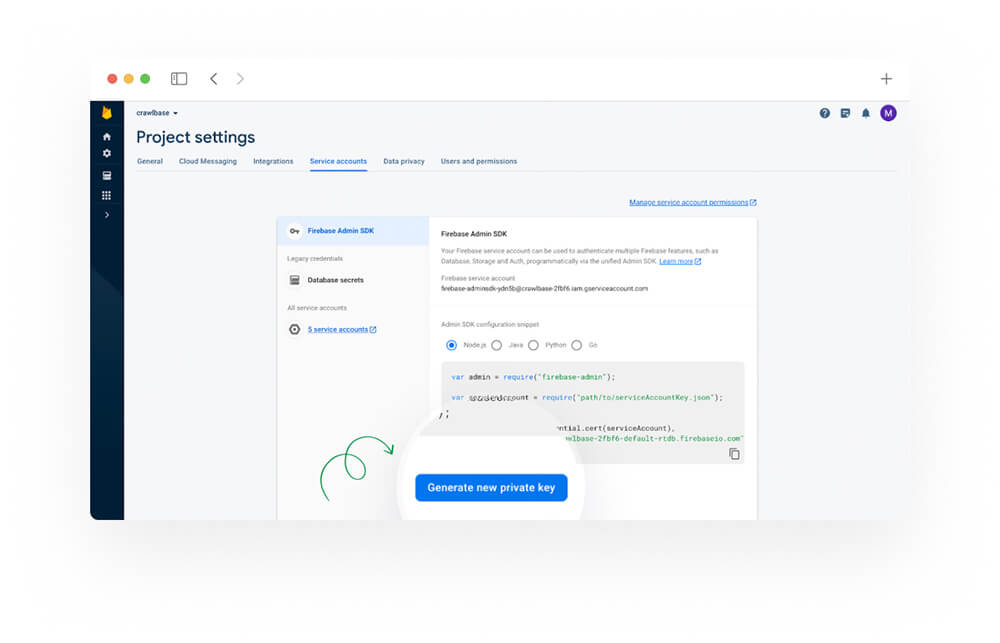

4. Generating a Private Key

Proceed to the settings of your Firebase project, then generate a fresh private key specifically for the Firebase Admin SDK. After generating the key, ensure that you save the resulting JSON file to the root directory of your project. This JSON file contains your service account key and is crucial for SDK functionality.

5. Integrating the Private Key

In your ExpressJS project, install the Firebase Admin SDK package and initialize it using your private key and database URL.

    npm i firebase-admin

    const admin = require('firebase-admin');

This code snippet first installs the firebase-admin package using npm. Then, it imports the package and initializes the SDK using a service account JSON file for authentication and the URL of your Firebase Realtime Database. This setup allows your Node.js app to interact with Firebase services using the Admin SDK’s functionalities.

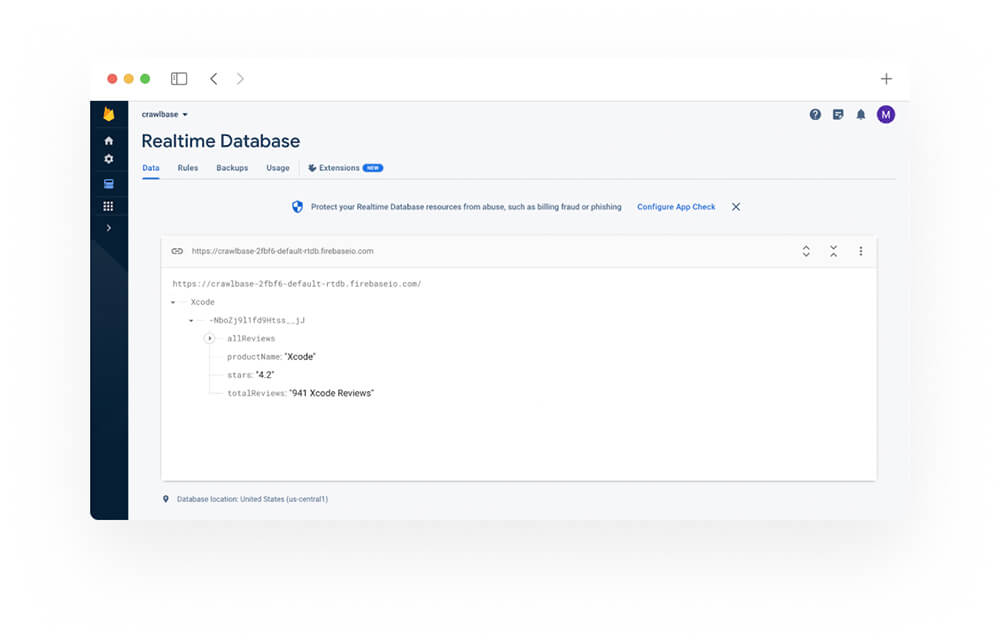

VIII. Storing Data in Firebase Realtime Database

Inside your /scrape route’s logic, after parsing the HTML with Cheerio, structure the extracted data in a format suitable for your needs. This could be an array of objects, where each object represents a review.

Choose a meaningful location in the database to store the reviews related to a specific product. You can use the product name as a key in the database to differentiate between multiple product reviews.

Implement a route, such as ~/productName/reviews, corresponding to the location in the database where you want to store the reviews. When a request is made to this route, push the structured data to the specified location in the Firebase Realtime Database.
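The storage step could be sketched as follows. Realtime Database keys may not contain ".", "#", "$", "[", "]" or "/", so the product name is cleaned before it is used in a path. The `toDatabaseKey` and `saveReviews` helpers are hypothetical names, and the sketch assumes the parsed object has productName and reviews fields.

```javascript
// Hypothetical helper: turns a product name into a safe Realtime Database key.
// Keys may not contain ".", "#", "$", "[", "]" or "/".
function toDatabaseKey(productName) {
  return productName.trim().replace(/[.#$\[\]\/]/g, '_');
}

// Hypothetical helper: writes the parsed reviews under <productKey>/reviews.
// `db` is the object returned by admin.database() from firebase-admin.
function saveReviews(db, productData) {
  const path = `${toDatabaseKey(productData.productName)}/reviews`;
  // set() replaces whatever is stored at the path and returns a promise.
  return db.ref(path).set(productData.reviews).then(() => path);
}

// Usage inside the /scrape route, after parsing:
//   const db = admin.database();
//   await saveReviews(db, productData);
```

This sketch uses set(), so scraping the same product twice overwrites the earlier data rather than duplicating it. If you would rather keep every run, push() appends the data under a newly generated key instead.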

Here is the complete code for crawling and scraping G2 product reviews and storing the scraped data into your database.

    const express = require('express');

In summary, this code creates a Node.js app with multiple functions. It begins by importing essential libraries, including express.js for web server creation, cheerio for HTML parsing, crawlbase for web crawling, and firebase-admin for interacting with Firebase services. The Firebase Admin SDK is initialized using a service account’s JSON file and the corresponding database URL. An instance of the CrawlingAPI class is instantiated with a user token to facilitate web crawling.

The code then creates an ExpressJS app and sets it to listen on a specific port, either a custom one or the default 3000. A function named parsedDataFromHTML is defined, which uses Cheerio selectors to parse HTML content and structure it into organized data.

A /scrape route is established within the ExpressJS app and configured to handle GET requests. Upon receiving a request, the handler crawls the provided URL using the CrawlingAPI. The response is parsed using the parsedDataFromHTML function, resulting in structured data. This parsed data is then stored in the Firebase Realtime Database through the Firebase Admin SDK, under a designated path. Once the data is stored, the server responds with JSON containing the parsed data.

We have now successfully established a seamless connection between the ExpressJS app and Firebase Realtime Database, enabling you to store the extracted G2 product reviews in a structured and organized manner. This ensures that those valuable insights are securely stored and easily accessible whenever needed.

IX. Conclusion

Here is a simple flowchart that summarizes our project scope:

In summary, this comprehensive guide has equipped you with the necessary techniques to extract G2 product reviews using JavaScript and Crawlbase proficiently. By sticking to the detailed steps, you’ve gained a profound understanding of navigating G2’s product interface, refining and structuring the retrieved data, and efficiently archiving it into a database for subsequent analysis.

The importance of web scraping in extracting actionable business insights is undeniable. It forms a solid foundation for informed decisions, product improvements, and tailored strategies. However, it’s vital to emphasize the need for ethical scraping practices – respecting website terms is essential.

As you continue your journey with Crawlbase, remember that web scraping is not just about technical skill but also about responsibility. Commit to continuous learning and use this wealth of data to identify new opportunities and drive forward-thinking innovation.

The world of web scraping is dynamic and expansive, offering a wealth of knowledge and insights that can significantly benefit your business. Embrace the challenges, make the most of the opportunities, and let your passion for discovery guide you in your future endeavors with Crawlbase.

X. Frequently Asked Questions

Q. How does Crawlbase help in crawling and scraping G2.com and other websites?

The Crawlbase API utilizes advanced algorithms and an extensive proxy network to navigate through blocks and CAPTCHAs while effectively masking your IP with each request. This strategic process guarantees anonymity and prevents the target websites from tracing your crawling activities.

In dealing with complex websites like G2.com, we have implemented a custom solution utilizing trained AI bots. This augmentation enhances our capacity to bypass proxy challenges and avoid interruptions due to blocks. By leveraging premium residential networks in the US, we effectively mimic authentic human browsing behavior. This approach ensures a seamless and uninterrupted crawling experience.

Q. Can I use any other database?

This blog showcased the utilization of the Firebase Realtime Database to store scraped data. However, your choices are not confined solely to this avenue. Depending on your project’s specific use cases or demands, you can delve into alternative database solutions such as MongoDB, PostgreSQL, MySQL, or even cloud-based platforms like Amazon DynamoDB.

Q. How can I handle pagination while scraping G2 reviews?

Handling pagination while scraping G2 reviews involves fetching data from multiple pages of the reviews section. Here’s a general approach to handling pagination in web scraping:

Identify Pagination Method: Examine the structure of the G2 reviews page to determine how pagination is implemented. In this case, G2’s implementation involves clicking the “Next” button at the bottom of the review page.

Adjust URLs or Parameters: If pagination involves changing URL parameters (like page numbers) in the URL, modify the URL accordingly to each page as shown in the example below.

page 1: https://www.g2.com/products/xcode/reviews

page 2: https://www.g2.com/products/xcode/reviews.html?page=2

page 3: https://www.g2.com/products/xcode/reviews.html?page=3

Loop Through Pages: Once you understand the pagination method, use a loop to iterate through pages. Send a request to each page to fetch its HTML content using your scraping library (e.g., Axios, Fetch). Then, parse the HTML to extract the desired information using Cheerio or any other HTML parsing library.
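The URL pattern above can be turned into a small loop. This is a sketch under assumptions: the number of pages is supplied by the caller, and `buildReviewPageUrls`, `scrapeAllPages`, `fetchPage`, and `parsePage` are hypothetical names standing in for your own crawling and parsing functions.

```javascript
// Builds review page URLs following the pattern shown above:
// page 1 has no query string, later pages use reviews.html?page=N.
function buildReviewPageUrls(productSlug, pageCount) {
  const base = `https://www.g2.com/products/${encodeURIComponent(productSlug)}/reviews`;
  const urls = [];
  for (let page = 1; page <= pageCount; page += 1) {
    urls.push(page === 1 ? base : `${base}.html?page=${page}`);
  }
  return urls;
}

// Hypothetical loop: fetchPage(url) resolves to HTML and parsePage(html)
// returns an array of reviews. Pages are requested one at a time.
async function scrapeAllPages(urls, fetchPage, parsePage) {
  const reviews = [];
  for (const url of urls) {
    const html = await fetchPage(url);
    reviews.push(...parsePage(html));
  }
  return reviews;
}

console.log(buildReviewPageUrls('xcode', 3));
```

Requesting the pages sequentially keeps the load on the target site low and makes it simple to stop early, for example when a page returns no reviews.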

Q. How can I ensure the legality and ethicality of web scraping?

Ensuring the legitimacy and ethical nature of web scraping involves respecting the rules and guidelines set by websites. Prior to initiating any scraping, take a moment to thoroughly review the website’s terms of service and check its robots.txt file. Furthermore, be mindful of the data you’re extracting, verifying that you possess the rightful authority to employ it for your intended objectives. This approach helps maintain a responsible and ethical scraping practice.