Web scraping and data extraction have revolutionized the way we gather information from the vast ocean of data available on the internet. Search engines like Google serve as treasure troves of knowledge, and being able to extract valuable URLs from their search results can be a game-changer for various purposes. Whether you’re a business owner conducting market research, a data enthusiast seeking information, or a professional in need of data for various applications, web scraping can provide you with the data you need.

In this blog, we’ll embark on a journey to explore the art of crawling Google search pages, scraping valuable information, and efficiently storing information in an SQLite database. Our tools for this endeavor will be Python and the Crawlbase Crawling API. Together, we’ll navigate through the intricate world of web scraping and data management, giving you the skills and knowledge you need to harness the power of Google’s search results. Let’s dive in and get started!

- Key Benefits of Web Scraping

- Why Scrape Google Search Pages?

- Introducing the Crawlbase Crawling API

- The Distinct Advantages of Crawlbase Crawling API

- Exploring the Crawlbase Python Library

- Configuring Your Development Environment

- Installing the Necessary Libraries

- Creating Your Crawlbase Account

- Deconstructing a Google Search Page

- Obtaining Your Crawlbase Token

- Setting Up Crawlbase Crawling API

- Selecting the Ideal Scraper

- Effortlessly Managing Pagination

- Saving Data to SQLite database

1. Unveiling the Power of Web Scraping

Web scraping is the automated extraction of data from websites. It’s like having a digital robot that can visit websites, collect information, and organize it for your use. Instead of manually copying and pasting information from web pages, web scraping tools use computer programs or scripts to navigate websites, extract specific data, and store it in a structured format for analysis or storage, automatically and at scale.

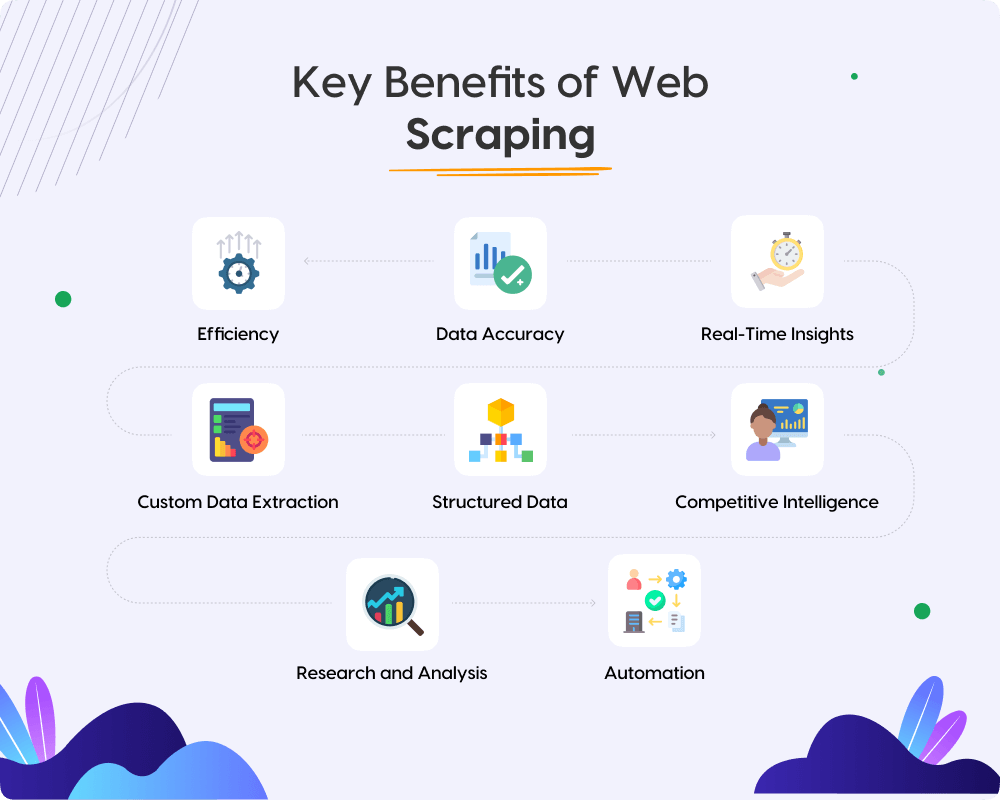

Key Benefits of Web Scraping:

- Efficiency: Web scraping automates data collection, saving you time and effort. It can process large volumes of data quickly and accurately.

- Data Accuracy: Scraping ensures that data is pulled directly from the source, reducing the risk of errors that can occur with manual data entry.

- Real-Time Insights: Web scraping allows you to monitor websites and gather up-to-the-minute information essential for tasks like tracking prices, stock availability, or news updates.

- Custom Data Extraction: You can tailor web scraping to collect specific data points you need, whether product prices, news headlines, or research data.

- Structured Data: Scraped data is organized in a structured format, making it easy to analyze, search, and use in databases or reports.

- Competitive Intelligence: Web scraping can help businesses monitor competitors, track market trends, and identify new opportunities.

- Research and Analysis: Researchers can use web scraping to collect academic or market research data, while analysts can gather insights for business decision-making.

- Automation: Web scraping can be automated to run on a schedule, ensuring that your data is always up-to-date.

2. Understanding the Significance of Google Search Page Scraping

As the most widely used search engine globally, Google plays a pivotal role in this landscape. Scraping Google search pages provides access to extensive data, offering numerous advantages across various domains. Before delving into the intricacies of scraping Google search pages, it’s essential to understand the benefits of web scraping and comprehend why this process holds such significance in web data extraction.

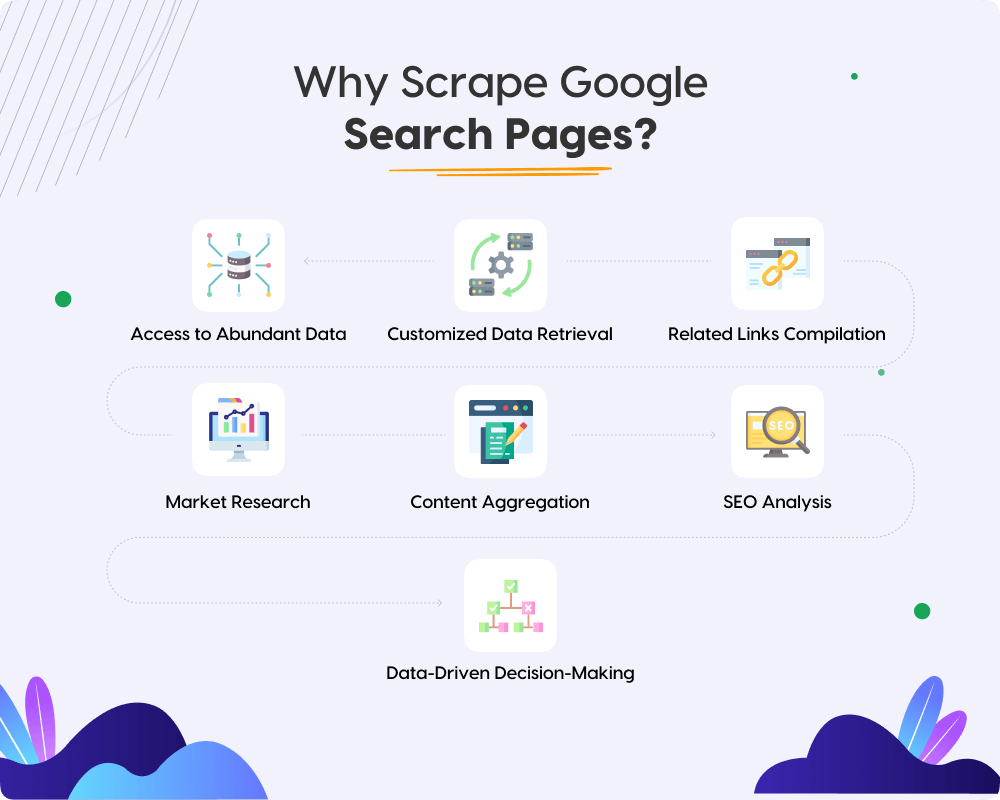

Why Scrape Google Search Pages?

Scraping Google search pages brings a multitude of advantages. It provides unparalleled access to a vast and diverse repository of data, capitalizing on Google’s position as the world’s leading search engine. This data spans a wide spectrum of domains, encompassing areas as diverse as business, academia, and research.

The true power of scraping lies in its ability to customize data retrieval. Google’s search results are meticulously tailored to your specific queries, ensuring relevance. By scraping these results, you gain the capability to harvest highly pertinent data that precisely aligns with your search terms, enabling precise information extraction. Google Search provides a list of associated websites when you search for a particular topic. Scraping these links empowers you to curate a comprehensive collection of resources meticulously attuned to your research or analytical needs.

Businesses can leverage Google search scraping for market research, extracting competitive insights from search results tied to their industry or products. Analyzing these results provides a deep understanding of market trends, consumer sentiment, and competitor activities. Content creators and bloggers can employ this technique to unearth relevant articles, blog posts, and news updates, serving as a solid foundation for crafting curated content. Digital marketers and SEO professionals significantly benefit from scraping search pages, as it unveils invaluable insights regarding keyword rankings, search trends, and competitor strategies.

Mastering the art of scraping Google search pages equips you with a potent tool for harnessing the internet’s wealth of information. In this blog, we will delve into the technical aspects of this process, using Python and the Crawlbase Crawling API as our tools. Let’s embark on this journey to discover the art and science of web scraping in the context of Google search pages.

3. Embarking on Your Web Scraping Journey with Crawlbase Crawling API

Welcome aboard as we set sail on your web scraping journey with the Crawlbase Crawling API. Whether you’re a novice in web scraping or a seasoned professional, this API is your trusty compass, guiding you through the complexities of data extraction from websites. This section will introduce you to this invaluable tool, highlighting its unique advantages and providing insights into the Crawlbase Python Library.

Introducing the Crawlbase Crawling API

The Crawlbase Crawling API stands at the forefront of web scraping, offering a robust and versatile platform for extracting data from websites. Its primary mission is to simplify the intricate process of web scraping by presenting a user-friendly interface coupled with formidable features. With Crawlbase as your co-pilot, you can automate data extraction from websites, even those as dynamic as Google’s search pages. This automation saves you invaluable time and effort that would otherwise be spent on manual data collection.

This API opens the gateway to Crawlbase’s comprehensive crawling infrastructure, accessible via a RESTful API. Essentially, you communicate with this API by specifying the URLs you want to scrape along with any query parameters the Crawling API supports. You receive the scraped data in a structured format, typically HTML or JSON. This seamless interaction allows you to focus on harnessing valuable data while Crawlbase handles the technical complexities of web scraping.
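To make the request side of this exchange concrete, here is a minimal sketch of how such a request URL could be assembled by hand. The endpoint https://api.crawlbase.com/ and the token and url parameter names are assumptions that do not come from this article, so confirm them in the Crawling API documentation before relying on them. The helper name build_api_request is hypothetical.

```python
from urllib.parse import urlencode

# Assumed endpoint; confirm it in the Crawling API documentation.
API_ENDPOINT = "https://api.crawlbase.com/"


def build_api_request(token, target_url, **params):
    """Return a request URL with the token, the target URL, and extra parameters URL-encoded."""
    query = {"token": token, "url": target_url}
    query.update(params)  # optional parameters, for example format="json"
    return f"{API_ENDPOINT}?{urlencode(query)}"


request_url = build_api_request(
    "YOUR_CRAWLBASE_TOKEN",
    "https://www.google.com/search?q=data+science",
    format="json",
)
print(request_url)
```

The target URL has to be encoded because it contains its own query string; urlencode takes care of that. In practice the Crawlbase Python library introduced below hides this step.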

The Distinct Advantages of Crawlbase Crawling API

Why have we chosen the Crawlbase Crawling API for our web scraping expedition amidst numerous available options? Let’s delve into the reasons behind this selection:

- Scalability: Crawlbase is engineered to handle web scraping at scale. Whether your project encompasses a few hundred pages or an extensive database of millions, Crawlbase adapts to your requirements, ensuring your scraping endeavors grow seamlessly.

- Reliability: Web scraping can be a challenging endeavor due to the ever-evolving nature of websites. Crawlbase mitigates this challenge with robust error handling and monitoring, reducing the likelihood of scraping jobs encountering unexpected failures.

- Proxy Management: In response to websites’ anti-scraping measures, such as IP blocking, Crawlbase provides efficient proxy management. This feature helps you evade IP bans and ensures reliable access to the data you seek.

- Convenience: With the Crawlbase API, you are relieved of the burden of creating and maintaining your own custom scraper or crawler. It operates as a cloud-based solution, handling the intricate technical aspects and allowing you to focus solely on extracting the data that matters.

- Real-time Data: The Crawlbase Crawling API guarantees access to the most current and up-to-date data through real-time crawling. This feature is pivotal for tasks requiring accurate analysis and decision-making.

- Cost-Effective: Building and maintaining an in-house web scraping solution can strain your budget. Conversely, the Crawlbase Crawling API offers a cost-effective solution, requiring payment based only on your specific requirements.

Exploring the Crawlbase Python Library

To unlock the full potential of the Crawlbase Crawling API, we turn to the Crawlbase Python library. This library acts as your toolkit for seamlessly integrating Crawlbase into Python projects, making it accessible to developers of all skill levels.

Here’s a glimpse of how it works:

- Initialization: Begin your journey by initializing the Crawling API class with your Crawlbase token.

    api = CrawlingAPI({ 'token': 'YOUR_CRAWLBASE_TOKEN' })

- Scraping URLs: Effortlessly scrape URLs using the get function, specifying the URL and any optional parameters.

    response = api.get('https://www.example.com')

- Customization: The Crawlbase Python library offers various options to tailor your scraping, providing further exploration possibilities detailed in the API documentation.
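Putting the steps above together, a minimal sketch might look like the following. It assumes that api.get returns a dictionary with status_code and body keys; treat that shape as an assumption and verify it against the library documentation. The names fetch_body and StubAPI are hypothetical, and the stub exists only so the sketch runs without a token or a network connection.

```python
def fetch_body(api, url, options=None):
    """Fetch url through the given API client and return the body as text, or None on failure."""
    response = api.get(url, options or {})
    if response.get("status_code") != 200:
        return None
    body = response.get("body", "")
    # The body may arrive as bytes; decode it so callers always receive str.
    if isinstance(body, bytes):
        return body.decode("utf-8", errors="replace")
    return body


class StubAPI:
    """Offline stand-in for CrawlingAPI, used only for this illustration."""

    def get(self, url, options=None):
        return {"status_code": 200, "body": b"<html>example</html>"}


html = fetch_body(StubAPI(), "https://www.example.com")
print(html)
```

With the real library you would pass the CrawlingAPI object created in the initialization step in place of StubAPI().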

With this knowledge, you’ll be acquainted with the Crawlbase Crawling API and equipped to wield it effectively. Together, we embark on a journey through Google’s expansive search results, unlocking the secrets of web data extraction. So, without further ado, let’s set sail and explore the wealth of information that Google has to offer!

4. Essential Requirements for a Successful Start

Before embarking on your web scraping voyage with the Crawlbase Crawling API, there are some essential preparations you need to make. This section will cover these prerequisites, ensuring you’re well-prepared for the journey ahead.

Configuring Your Development Environment

Configuring your development environment is the first step in your web scraping expedition. Here’s what you need to do:

- Python Installation: Ensure that Python is installed on your system. You can download the latest version of Python from the official website, and installation instructions are readily available.

- Code Editor: Choose a code editor or integrated development environment (IDE) for writing your Python scripts. Popular options include Visual Studio Code, PyCharm, Jupyter Notebook, or even a simple text editor like Sublime Text.

- Virtual Environment: It’s a good practice to create a virtual environment for your project. This isolates your project’s dependencies from the system’s Python installation, preventing conflicts. You can use Python’s built-in venv module or third-party tools like virtualenv.

Installing the Necessary Libraries

To interact with the Crawlbase Crawling API and perform web scraping tasks effectively, you’ll need to install some Python libraries. Here’s a list of the key libraries you’ll require:

- Crawlbase: A lightweight, dependency-free Python class that acts as a wrapper for the Crawlbase API. We can use it to send requests to the Crawling API and receive responses. You can install it using pip:

    pip install crawlbase

- SQLite: SQLite is a lightweight, server-less, and self-contained database engine that we’ll use to store the scraped data. Python comes with built-in support for SQLite, so there’s no need to install it separately.

Creating Your Crawlbase Account

Now, let’s get you set up with a Crawlbase account. Follow these steps:

- Visit the Crawlbase Website: Open your web browser and navigate to the Crawlbase website Signup page to begin the registration process.

- Provide Your Details: You’ll be asked to provide your email address and create a password for your Crawlbase account. Fill in the required information.

- Verification: After submitting your details, you may need to verify your email address. Check your inbox for a verification email from Crawlbase and follow the instructions provided.

- Login: Once your account is verified, return to the Crawlbase website and log in using your newly created credentials.

- Access Your API Token: You’ll need an API token to use the Crawlbase Crawling API. You can find your tokens in your Crawlbase account dashboard.

With your development environment configured, the necessary libraries installed, and your Crawlbase account created, you’re now equipped with the essentials to dive into the world of web scraping using the Crawlbase Crawling API. In the following sections, we’ll delve deeper into understanding Google’s search page structure and the intricacies of web scraping. So, let’s continue our journey!

5. Deciphering the Anatomy of Google Search Pages

To become proficient in scraping Google search pages, it’s essential to understand the underlying structure of these pages. Google employs a complex layout that combines various elements to deliver search results efficiently. In this section, we’ll break down the key components and help you identify the data gems within.

Deconstructing a Google Search Page

A typical Google search page comprises several distinct sections, each serving a specific purpose:

- Search Bar: The search bar, positioned at the top of the page, is where you enter your search query. Google then processes this query to display a set of relevant results.

- Search Tools: Located prominently above the search results, this section provides an array of filters and customization options to fine-tune your search experience. You have the flexibility to sort results by date, type, and additional criteria to suit your specific needs.

- Ads: Google often displays sponsored content at the top and bottom of the search results. These are paid advertisements that may or may not be directly related to your query.

- Locations: Google frequently provides a map related to the search query at the start of the search result page, along with the addresses and contact information of the most relevant locations.

- Search Results: The page’s core displays a list of web pages, articles, images, or other content relevant to your search. A title, snippet, and URL typically accompany each result.

- People Also Ask: Alongside the search results, Google often presents a “People Also Ask” section, which functions like an FAQ section. This includes questions most related to the search query.

- Related Searches: Google often presents a list of related search links based on your query. These links can lead to valuable resources that complement your data collection.

- Knowledge Graph: On the right side of the page, you might find a Knowledge Graph panel containing information about the topic you searched for. This panel often includes key facts, images, and related entities.

- Pagination: If multiple pages of search results exist, pagination links appear at the bottom, allowing you to navigate through the results.
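Of the components listed above, the individual search result is the one this tutorial stores later. As a rough sketch, one result could be modeled as a small data structure. The field names follow the title, URL, description, and position stored later in this post; the class name SearchResult and the sample values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SearchResult:
    """One organic result: the title, link, and snippet shown on the page."""
    title: str
    url: str
    description: str = ""
    position: Optional[int] = None

    @classmethod
    def from_dict(cls, raw):
        """Build a SearchResult from a plain dictionary, tolerating missing keys."""
        return cls(
            title=raw.get("title", ""),
            url=raw.get("url", ""),
            description=raw.get("description", ""),
            position=raw.get("position"),
        )


# Made-up sample values, for illustration only.
result = SearchResult.from_dict(
    {"title": "Example page", "url": "https://example.com", "position": 1}
)
print(result)
```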

In the upcoming sections, we’ll delve into the technical aspects of scraping Google search pages, including extracting important data effectively, handling pagination, and saving data into an SQLite database.

6. Mastering Google Search Page Scraping with the Crawling API

This section will delve into the mastery of Google Search page scraping using the Crawlbase Crawling API. We aim to harness this powerful tool’s full potential to extract information from Google’s search results effectively. We will cover the essential steps, from obtaining your Crawlbase token to seamlessly handling pagination. For example, we will gather crucial information about search results related to the search query “data science” on Google.

Getting the Correct Crawlbase Token

Before we embark on our Google Search page scraping journey, we need to secure access to the Crawlbase Crawling API by obtaining a suitable token. Crawlbase provides two types of tokens: the Normal Token (TCP) for static websites and the JavaScript Token (JS) for dynamic pages. For Google Search pages, the Normal Token is a good choice.

    from crawlbase import CrawlingAPI

You can get your Crawlbase token from your account dashboard after creating a Crawlbase account.
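Rather than pasting the token into every script, one option is to read it from an environment variable. The sketch below is one way to do that; the variable name CRAWLBASE_TOKEN and the helper load_token are assumptions made for illustration, not part of the Crawlbase library.

```python
import os


def load_token(env_var="CRAWLBASE_TOKEN", environ=None):
    """Return the token stored in env_var, or raise if it is missing or blank."""
    environ = os.environ if environ is None else environ
    token = environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"Set the {env_var} environment variable to your Crawlbase token")
    return token


# An explicit mapping is passed here so the sketch runs without touching the real environment.
token = load_token(environ={"CRAWLBASE_TOKEN": "YOUR_CRAWLBASE_TOKEN"})
print(token)
```

In a real script you would call load_token() with no arguments and pass the result to CrawlingAPI({'token': token}).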

Setting up Crawlbase Crawling API

With our token in hand, let’s proceed to configure the Crawlbase Crawling API for effective data extraction. Crawling API responses can be obtained in two formats: HTML or JSON. By default, the API returns responses in HTML format. However, we can specify the “format” parameter to receive responses in JSON.

HTML response:

    Headers:

JSON response:

    // pass query param "format=json" to receive response in JSON format

You can read more about the Crawling API response in the Crawlbase documentation. For this example, we will go with the JSON response. We’ll use the initialized API object to make requests, passing the URL you intend to scrape to the api.get(url, options={}) function.

    from crawlbase import CrawlingAPI

In the above code, we have initialized the API, defined the Google search URL, and set up the options for the Crawling API. We pass the “format” parameter with the value “json” to receive the response in JSON. The Crawling API provides many other important parameters, which are described in its documentation.

Upon successful execution of the code, you will get output like below.

    {
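The two inputs to the request described above, the search URL and the options dictionary, can be built with small helpers. This is only a sketch: build_search_url and build_options are hypothetical names, and the query string uses the same q parameter as the search URLs shown in this post.

```python
from urllib.parse import urlencode

GOOGLE_SEARCH = "https://www.google.com/search"


def build_search_url(query, start=None):
    """Return a Google search URL for query, optionally with a start offset."""
    params = {"q": query}
    if start is not None:
        params["start"] = start
    return f"{GOOGLE_SEARCH}?{urlencode(params)}"


def build_options(response_format="json"):
    """Return the options dictionary that asks the Crawling API for the given format."""
    return {"format": response_format}


url = build_search_url("data science")
options = build_options()
print(url)      # https://www.google.com/search?q=data+science
print(options)  # {'format': 'json'}
```

Both values can then be handed to api.get(url, options).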

Selecting the Ideal Scraper

The Crawling API provides multiple built-in scrapers for important websites, including Google. The available scrapers are listed in the Crawling API documentation. The “scraper” parameter is used to parse the retrieved data according to a specific scraper provided by the Crawlbase API. It’s optional; if not specified, you will receive the full HTML of the page for manual scraping. If you use this parameter, the response will be returned as JSON containing the information parsed according to the specified scraper.

Example:

    # Example using a specific scraper

One of the available scrapers is “google-serp”, designed for Google search result pages. It returns an object with details such as ads, the “People Also Ask” section, search results, related searches, and more. This includes all the information we want. The “google-serp” scraper is described in more detail in the Crawlbase documentation.

Let’s add this parameter to our example and see what we get in the response:

    from crawlbase import CrawlingAPI

Output:

    {

The above output shows that the “google-serp” scraper does its job efficiently. It scrapes all the important information, including 9 search results from the Google search page, and gives us a JSON object that we can easily use in our code as required.
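Once the JSON object arrives, the search results have to be pulled out of it. The sketch below shows one defensive way to do that. It assumes the scraper output sits under a top-level “body” key and contains the “searchResults” and “numberOfResults” fields mentioned in this post; the nesting is an assumption, so compare it with a real response first. The sample payload is made up for illustration.

```python
import json


def extract_search_results(raw_body):
    """Return (results, total) parsed from a google-serp style response body."""
    if isinstance(raw_body, bytes):
        raw_body = raw_body.decode("utf-8", errors="replace")
    payload = json.loads(raw_body)
    # Assumed layout: scraper output nested under "body"; fall back to the top level.
    data = payload.get("body", payload)
    if not isinstance(data, dict):
        return [], None
    return data.get("searchResults", []), data.get("numberOfResults")


# Made-up sample payload, not real scraper output.
sample = json.dumps({
    "body": {
        "numberOfResults": 2,
        "searchResults": [
            {"position": 1, "title": "Example A", "url": "https://example.com/a", "description": "First"},
            {"position": 2, "title": "Example B", "url": "https://example.com/b", "description": "Second"},
        ],
    }
}).encode("utf-8")

results, total = extract_search_results(sample)
for item in results:
    print(item["position"], item["title"], item["url"])
```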

Effortlessly Managing Pagination

When it comes to scraping Google search pages, mastering pagination is essential for gathering comprehensive data. The Crawlbase “google-serp” scraper provides valuable information in its JSON response: the total number of results, known as “numberOfResults.” This information serves as our guiding star for effective pagination handling.

Your scraper must deftly navigate through the various pages of results concealed within the pagination to capture all the search results. You’ll use the “start” query parameter to do this successfully, mirroring Google’s methodology. Google typically displays nine search results per page, creating a consistent gap of nine results between each page, as illustrated below:

- Page 1: https://www.google.com/search?q=data+science&start=1

- Page 2: https://www.google.com/search?q=data+science&start=10

- … And so on, until the final page.

To determine the next value of the “start” query parameter, take the position of the last “searchResults” object in the response, increment it, and add it to the previous start value. You’ll continue this process until you’ve reached your desired number of results or until you’ve harvested the maximum number of results available. This systematic approach ensures that valuable data is collected, enabling you to extract comprehensive insights from Google’s search pages.

Let’s update the example code to handle pagination and scrape all the search results:

    from crawlbase import CrawlingAPI

Example Output:

    Total Search Results: 47

As you can see above, we now have 47 search results, far more than we had previously. You can update the limit in the code (set to 50 for this example) and scrape any number of search results up to the number of available results.
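The pagination loop itself can be isolated from the API call, which makes it easy to test offline. The sketch below is a simplified variant, not the exact code of this post: it advances “start” by the number of results each page returned, which reproduces the start=1, start=10 sequence shown earlier when every page holds nine results. The names collect_results and fake_fetch are hypothetical, and fake_fetch stands in for a real request through the Crawling API.

```python
def collect_results(fetch_results, limit=50, total=None, first_start=1):
    """Call fetch_results(start) page by page until limit, total, or an empty page is reached."""
    collected = []
    start = first_start
    while len(collected) < limit:
        if total is not None and start > total:
            break
        page = fetch_results(start)
        if not page:
            break  # no more results available
        collected.extend(page)
        start += len(page)  # the next page begins right after the last result seen
    return collected[:limit]


def fake_fetch(start, page_size=9, available=30):
    """Offline stand-in for one API call: returns up to page_size fake results."""
    last = min(start + page_size - 1, available)
    return [{"position": p} for p in range(start, last + 1)]


results = collect_results(fake_fetch, limit=20)
print(len(results))
```

Passing the “numberOfResults” value as total stops the loop as soon as “start” moves past the last available result.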

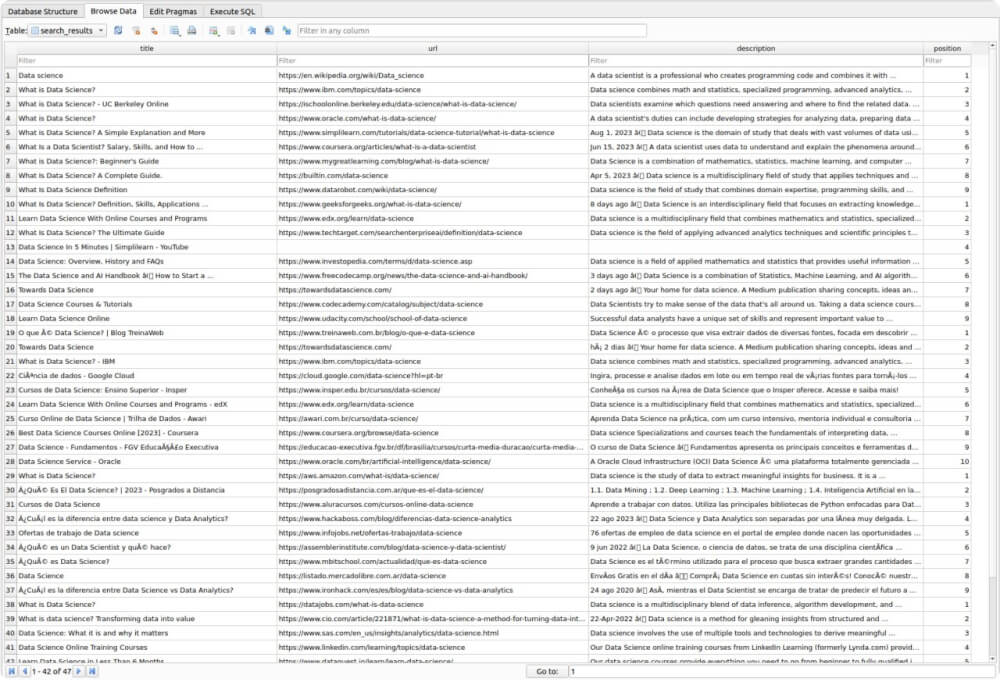

Saving Data to SQLite database

Once you’ve successfully scraped Google search results using the Crawlbase API, you might want to persist this data for further analysis or use it in your applications. One efficient way to store structured data like search results is by using an SQLite database, which is lightweight, self-contained, and easy to work with in Python.

Here’s how you can save the URL, title, description, and position of every search result object to an SQLite database:

    import sqlite3

In the above code, the scrape_google_search() function is the entry point. It initializes the Crawlbase API with an authentication token and specifies the Google search URL that will be scraped. It also sets up an empty list called search_results to collect the extracted search results.

The scrape_search_results(url) function takes a URL as input, sends a request to the Crawlbase API to fetch the Google search results page, and extracts relevant information from the response. It then appends this data to the search_results list.

Two other key functions, initialize_database() and insert_search_results(result_list), deal with managing a SQLite database. The initialize_database() function is responsible for creating or connecting to a database file named search_results.db and defining a table structure to store the search results. The insert_search_results(result_list) function inserts the scraped search results into this database table.

The script also handles pagination by continuously making requests for subsequent search result pages. The maximum number of search results is set to 50 for this example. The scraped data, including titles, URLs, descriptions, and positions, is then saved into the SQLite database, which we can use for further analysis.
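For readers who want to try the storage step on its own, here is a minimal sketch of the two database functions described above. It is not the exact code of this post: the table name search_results and the column layout are assumptions, and unlike the description above, insert_search_results takes the connection as an explicit argument so the sketch can run against an in-memory database. The sample rows are made up.

```python
import sqlite3


def initialize_database(db_path="search_results.db"):
    """Open (or create) the database and make sure the results table exists."""
    conn = sqlite3.connect(db_path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS search_results (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            title TEXT,
            url TEXT,
            description TEXT,
            position INTEGER
        )
        """
    )
    conn.commit()
    return conn


def insert_search_results(conn, result_list):
    """Insert each result dictionary as one row and return the number of rows written."""
    rows = [
        (r.get("title"), r.get("url"), r.get("description"), r.get("position"))
        for r in result_list
    ]
    with conn:  # commits on success, rolls back if an insert fails
        conn.executemany(
            "INSERT INTO search_results (title, url, description, position) VALUES (?, ?, ?, ?)",
            rows,
        )
    return len(rows)


# In-memory database and made-up rows, so nothing is written to disk.
conn = initialize_database(":memory:")
inserted = insert_search_results(conn, [
    {"title": "Example A", "url": "https://example.com/a", "description": "First", "position": 1},
    {"title": "Example B", "url": "https://example.com/b", "description": "Second", "position": 2},
])
stored = conn.execute("SELECT title, position FROM search_results ORDER BY position").fetchall()
print(inserted, stored)
conn.close()
```

The parameterized INSERT keeps scraped text from being interpreted as SQL, which matters because titles and descriptions come from arbitrary web pages.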

search_results database preview:

7. Conclusion

Web scraping is a transformative technology that empowers us to extract valuable insights from the vast ocean of information on the internet, with Google search pages being a prime data source. This blog has taken you on a comprehensive journey into the world of web scraping, employing Python and the Crawlbase Crawling API as our trusty companions.

We began by understanding the significance of web scraping, revealing its potential to streamline data collection, enhance efficiency, and inform data-driven decision-making across various domains. We then introduced the Crawlbase Crawling API, a robust and user-friendly tool tailored for web scraping, emphasizing its scalability, reliability, and real-time data access.

We covered essential prerequisites, including configuring your development environment, installing necessary libraries, and creating a Crawlbase account. We learned how to obtain the token, set up the API, select the ideal scraper, and efficiently manage pagination to scrape comprehensive search results.

Now that you know how to do web scraping, you can explore and gather information from Google search results. Whether you’re someone who loves working with data, a market researcher, or a business professional, web scraping is a useful skill. It can give you an advantage and help you gain deeper insights. So, as you start your web scraping journey, I hope you collect a lot of useful data and gain plenty of valuable insights.

8. Frequently Asked Questions

Q. What is the significance of web scraping Google search pages?

Web scraping Google search pages is significant because it provides access to a vast amount of data available on the internet. Google is a primary gateway to information, and scraping its search results allows for various applications, including market research, data analysis, competitor analysis, and content aggregation.

Q. What are the main advantages of using the “google-serp” Scraper?

The “google-serp” scraper is specifically designed for scraping Google search result pages. It provides a structured JSON response with essential information such as search results, ads, related searches, and more. This scraper is advantageous because it simplifies the data extraction process, making it easier to work with the data you collect. It also ensures you capture all relevant information from Google’s dynamic search pages.

Q. What are the key components of a Google search page, and why is understanding them important for web scraping?

A Google search page comprises several components: the search bar, search tools, ads, locations, search results, the “People Also Ask” section, related searches, knowledge graph, and pagination. Understanding these components is essential for web scraping as it helps you identify the data you need and navigate through dynamic content effectively.

Q. How can I handle pagination when web scraping Google search pages, and why is it necessary?

Handling pagination in web scraping Google search pages involves navigating through multiple result pages to collect comprehensive data. It’s necessary because Google displays search results across multiple pages, and you’ll want to scrape all relevant information. You can use the “start” query parameter and the total number of results to determine the correct URLs for each page and ensure complete data extraction.