Access to real-time data serves as the lifeblood of numerous businesses and researchers in the contemporary landscape. Whether you’re tracking market trends, monitoring competitor prices, or conducting academic research, obtaining data from e-commerce giants like Walmart can provide invaluable insights. Web scraping is the key to unlocking this treasure trove of information. Combining it with headless Firefox Selenium and a Crawlbase Smart Proxy opens doors to more efficient and effective data collection.

In this comprehensive guide, we will take you on a journey through the world of web scraping, focusing on the intricacies of scraping Walmart product pages using the Python programming language. We’ll equip you with the knowledge and tools needed to navigate the challenges posed by IP blocks, CAPTCHAs, and dynamic content. By the end of this guide, you’ll be able to harness the full potential of headless Firefox Selenium with a smart proxy to scrape Walmart’s extensive product listings.

Whether you’re a seasoned data scientist, a business analyst, or just someone eager to explore the world of web scraping, this guide is your road map to success. So, fasten your seat belt as we embark on a data-driven adventure that will empower you to extract, analyze, and utilize data from one of the world’s largest online retailers.

Table of Contents

- What is Selenium?

- What is a smart proxy?

- Why use headless Firefox Selenium with a smart proxy to scrape Walmart product pages?

- Can bypass IP blocks and CAPTCHAs

- Can scrape more pages without getting banned

- Can get more accurate and consistent results

- Can run faster and use fewer resources

- Install Firefox, Python, and download Firefox Geckodriver

- Install Selenium and Random User Agent libraries

- Get a smart proxy from a provider like Crawlbase

- Configure Selenium Firefox Driver to use the smart proxy

- Start Firefox in headless mode and see the IP

- Understanding Walmart Product Page Structure

- Scraping the Important Data from Walmart Page HTML

1. Introduction

In the fast-paced world of web scraping and data extraction, the combination of Selenium, headless Firefox, and smart proxies has become a formidable trio. This introduction sets the stage for our exploration by defining these key components and explaining why using them together is so effective for scraping Walmart product pages.

What is Selenium?

Selenium is a powerful automation tool widely used in web development and testing. It allows us to control web browsers programmatically, mimicking human interaction with web elements. Selenium essentially empowers us to navigate websites, interact with forms, and extract data seamlessly.

What is a Smart Proxy?

A smart proxy is a dynamic solution that intermediates between your web scraping application and the target website. Unlike static proxies, smart proxies possess the intelligence to rotate and manage IP addresses effectively. They play a pivotal role in overcoming hurdles like IP bans and CAPTCHAs, making them indispensable for large-scale web scraping operations.

Why use headless Firefox Selenium with a smart proxy to scrape Walmart product pages?

One of the world’s largest retailers, Walmart boasts an extensive online presence with a treasure trove of product information. However, scraping such a vast e-commerce platform comes with its challenges, including anti-scraping measures. Walmart employs measures such as IP blocking and CAPTCHAs to deter web scrapers. We leverage headless Firefox Selenium and a smart proxy to overcome these hurdles and extract data efficiently.

In the next section, we’ll delve into the benefits of this setup, highlighting how it enhances the web scraping process.

2. Benefits of using headless Firefox Selenium with a smart proxy

Now that we understand the basics, it’s time to delve into the advantages of employing headless Firefox Selenium in tandem with a smart proxy for scraping Walmart product pages. This potent combination offers a range of benefits, making it a preferred choice for web scraping enthusiasts and professionals alike.

Can bypass IP blocks and CAPTCHAs

Like many other websites, Walmart employs security measures such as IP blocking and CAPTCHAs to deter automated scraping. When paired with a smart proxy, headless Firefox Selenium can get past many of these obstacles. The smart proxy rotates IP addresses, making it harder for websites to identify and block scraping activity. This helps keep data collection uninterrupted, even from IP-restricted sources.

Can scrape more pages without getting banned

Traditional scraping methods often lead to IP bans due to the high volume of requests generated in a short span. Combining headless Firefox Selenium’s browser-like behavior with a smart proxy’s IP rotation lets you scrape a larger number of pages without triggering bans. This scalability is invaluable when dealing with extensive product catalogs on Walmart or similar platforms.

Can get more accurate and consistent results

Accuracy is paramount when scraping e-commerce data. Headless Firefox Selenium renders web pages, including content loaded by JavaScript, just as a regular browser does for a human user, so the data retrieved matches what visitors actually see. The smart proxy supports this by keeping requests from being blocked partway through, reducing the chances of receiving skewed or incomplete information.

Can run faster and use fewer resources

Efficiency matters, especially in large-scale scraping operations. Running Firefox in headless mode, without drawing a visible window, consumes fewer system resources than running it with a full GUI. This results in faster scraping, reduced server costs, and a more agile data extraction process. Combined with a smart proxy’s intelligent IP management, this keeps the scraping operation fast and resource-efficient.

In the subsequent sections, we will guide you through the setup of headless Firefox Selenium with a smart proxy, followed by a practical demonstration of scraping Walmart product pages. These benefits will become even more apparent as we dive deeper into the world of web scraping.

3. How to Set Up Headless Firefox Selenium with a Smart Proxy

Now that we’ve explored the advantages, let’s dive into the practical steps to set up headless Firefox Selenium with a smart proxy for scraping Walmart product pages. This process involves several key components, and we’ll guide you through each one.

Install Firefox, Python and Download Firefox Geckodriver

To set up headless Firefox Selenium with a smart proxy, you need to ensure that you have the necessary software and drivers installed on your system. Here’s a detailed guide on how to do that:

Install Mozilla Firefox:

Mozilla Firefox is the web browser that Selenium will use for web automation. You can download it from the official Firefox website. Ensure you download the latest stable version compatible with your operating system.

Install Python:

Python is the programming language we’ll use to write the Selenium scripts. Some operating systems, particularly Linux distributions, come with Python pre-installed, but you should confirm it is available before continuing.

To check if Python is already installed, open your terminal or command prompt and type the following (on some systems the command is python3 instead):

```bash
python --version
```

If Python is not installed, you can download it from the official Python website. Download the latest stable version for your operating system.

Download Firefox Geckodriver:

Geckodriver is a crucial component for Selenium to interact with Firefox. It acts as a bridge between Selenium and the Firefox browser. To download Geckodriver, follow these steps:

- Visit the Geckodriver releases page on GitHub.

- Scroll down to the section labeled “Assets.”

- Under the assets, you’ll find the latest version of Geckodriver for your operating system (e.g., geckodriver-vX.Y.Z-win64.zip for Windows or geckodriver-vX.Y.Z-linux64.tar.gz for Linux). Download the appropriate version.

- Once downloaded, extract the contents of the ZIP or TAR.GZ file to a directory on your computer. Note down the path to this directory, as you’ll need it in your Python script.

Install Selenium and Random User Agent Libraries

Selenium is a powerful tool for automating web interactions, and it’s the core of our web scraping setup. Install Selenium using Python’s package manager, pip, with the following command:

```bash
pip install selenium
```

Additionally, we’ll use a library called Random User Agent to generate random user-agent strings for our browser. Install it using pip as well:

```bash
pip install random-user-agent
```

Get a Smart Proxy from Crawlbase

Crawlbase offers a range of web scraping solutions, including smart proxies compatible with Selenium. Open your web browser and navigate to the Crawlbase website.

If you’re a new user, you’ll need to create an account on Crawlbase. Click the “Sign Up” or “Register” button and provide the required information. Once you’re logged in, you can find your Smart Proxy URL in your account. Your Crawlbase Smart Proxy URL will look like this:

```
http://YourAccessToken@smartproxy.crawlbase.com:8012
```

Crawlbase offers various proxy plans based on your web scraping needs. These plans may vary in terms of the number of proxies available, their locations, and other features. For a good start, Crawlbase provides a free trial with limited features for one month. Review the available plans, and read the Crawlbase Smart Proxy documentation to select the one that best suits your requirements.

Configure Selenium Firefox Driver to use the smart proxy

Now, let’s configure Selenium to use the smart proxy. To use a Crawlbase smart proxy with the Selenium Firefox driver, create a Python script (any file name works) and add the following code to it, step by step:

Step 1: Import Necessary Libraries

```python
import os
```

Explanation:

Here, we import the required Python libraries and modules. These include Selenium for web automation, random_user_agent for generating random user agents, and others for configuring the Firefox browser.

Step 2: Generate a Random User Agent

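```python
user_agent_rotator = UserAgent()  # optional filter arguments omitted; library defaults apply
user_agent = user_agent_rotator.get_random_user_agent()
```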

Explanation:

Here, we create a random user agent for the Firefox browser. User agents help mimic different web browsers and platforms, making your scraping activities appear more like regular user behavior.
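If you want to see what user-agent rotation amounts to without the random-user-agent dependency, the sketch below picks one string at random from a small, hand-maintained pool using only the standard library. This is a swapped-in technique, not how random-user-agent builds its strings; pick_user_agent and EXAMPLE_USER_AGENTS are hypothetical names, and the pool entries are illustrative values you would need to keep current yourself.

```python
import random
from typing import Optional, Sequence

# Illustrative Firefox user-agent strings (example values, not taken from the original
# script); refresh them periodically so they match browser versions still in circulation.
EXAMPLE_USER_AGENTS = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:115.0) Gecko/20100101 Firefox/115.0",
    "Mozilla/5.0 (X11; Linux x86_64; rv:115.0) Gecko/20100101 Firefox/115.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:115.0) Gecko/20100101 Firefox/115.0",
)


def pick_user_agent(pool: Sequence[str] = EXAMPLE_USER_AGENTS,
                    rng: Optional[random.Random] = None) -> str:
    """Return one user-agent string chosen uniformly at random from pool."""
    if not pool:
        raise ValueError("user-agent pool is empty")
    if rng is None:
        rng = random.Random()  # fresh, OS-seeded generator
    return rng.choice(pool)
```

Passing a seeded random.Random makes the choice reproducible in tests, while the default gives a different user agent per call.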

Step 3: Configure Firefox Options

```python
firefox_options = Options()
```

Explanation:

In this part, we set various options for the Firefox browser. For example, we make it run in headless mode (without a visible GUI), set window size, disable GPU usage, and apply the random user agent generated earlier.
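The options in Step 3 can be sketched as a small function that works on any object exposing add_argument and set_preference, which Selenium’s Firefox Options class does; accepting that interface lets the logic be checked without launching a browser. configure_headless_firefox is a hypothetical name, and the 1920×1080 default is an assumption. The --width/--height switches and the general.useragent.override preference are Firefox settings commonly used for this purpose. The original also disables GPU usage; since we don’t know which flag it used, and --disable-gpu is a Chromium switch rather than a documented Firefox one, this sketch leaves GPU settings out.

```python
from typing import Any, Protocol


class FirefoxOptionsLike(Protocol):
    """The two methods of Selenium's Firefox Options class that this sketch uses."""

    def add_argument(self, argument: str) -> None: ...

    def set_preference(self, name: str, value: Any) -> None: ...


def configure_headless_firefox(options: FirefoxOptionsLike, user_agent: str,
                               width: int = 1920, height: int = 1080) -> FirefoxOptionsLike:
    """Apply headless mode, a window size, and a user-agent override to options."""
    options.add_argument("-headless")           # no visible browser window
    options.add_argument(f"--width={width}")    # window width in pixels
    options.add_argument(f"--height={height}")  # window height in pixels
    # Replace the User-Agent header Firefox sends with the rotated value.
    options.set_preference("general.useragent.override", user_agent)
    return options
```

With Selenium installed, you would call configure_headless_firefox(Options(), user_agent), where Options comes from selenium.webdriver.firefox.options.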

Step 4: Define Your Smart Proxy URL

```python
proxy_host = "http://YourAccessToken@smartproxy.crawlbase.com"
proxy_port = 8012
```

Explanation:

In this section, replace YourAccessToken with the token you obtained from Crawlbase. The proxy_host and proxy_port values will be used to route your web requests through the smart proxy.
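Firefox’s manual proxy preferences take the host and the port as separate values, so instead of typing them in by hand you could derive them from the single proxy URL Crawlbase gives you. The sketch below does that with urllib.parse from the standard library. parse_proxy_url and ProxySettings are hypothetical helper names, not part of any Crawlbase library, and how the token is ultimately presented to the proxy depends on your setup, so check the Crawlbase Smart Proxy documentation for that part.

```python
from typing import NamedTuple
from urllib.parse import urlsplit


class ProxySettings(NamedTuple):
    token: str  # the part before "@" (your access token)
    host: str   # proxy hostname
    port: int   # proxy port


def parse_proxy_url(proxy_url: str) -> ProxySettings:
    """Split a proxy URL of the form http://TOKEN@HOST:PORT into its parts."""
    parts = urlsplit(proxy_url)
    if not (parts.username and parts.hostname and parts.port):
        raise ValueError(f"expected http://TOKEN@HOST:PORT, got {proxy_url!r}")
    return ProxySettings(token=parts.username, host=parts.hostname, port=parts.port)
```

For example, parse_proxy_url("http://YourAccessToken@proxy.example.com:8012") returns a ProxySettings whose host is "proxy.example.com" and whose port is the integer 8012.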

Step 5: Set Up the Smart Proxy for Firefox

```python
firefox_options.set_preference("network.proxy.type", 1)
```

Explanation:

This section configures Firefox to use the proxy server. The first line sets network.proxy.type to 1, which means “manual proxy configuration.” The next eight lines set the proxy server host and port for HTTP, HTTPS (SSL), FTP, and SOCKS connections, and the last line disables the cache for HTTP connections.
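The preferences described in Step 5 can be collected into a dictionary and applied in one loop, which makes it easy to see exactly what is being set. This is a sketch: manual_proxy_preferences and apply_preferences are hypothetical names, and the cache preference name network.http.use-cache is an assumption about what the original’s last line used. Note that FTP support was removed from Firefox in version 90, so the FTP entries are likely ignored by current releases.

```python
from typing import Any, Dict


def manual_proxy_preferences(host: str, port: int) -> Dict[str, Any]:
    """Build the Firefox preferences for a manual proxy, mirroring Step 5."""
    prefs: Dict[str, Any] = {"network.proxy.type": 1}  # 1 = manual proxy configuration
    # Host and port for each protocol Step 5 covers (8 preferences in total).
    for scheme in ("http", "ssl", "ftp", "socks"):
        prefs[f"network.proxy.{scheme}"] = host
        prefs[f"network.proxy.{scheme}_port"] = port
    # Disable the HTTP cache (preference name assumed; verify in about:config).
    prefs["network.http.use-cache"] = False
    return prefs


def apply_preferences(options: Any, prefs: Dict[str, Any]) -> None:
    """Call options.set_preference(name, value) for every entry in prefs."""
    for name, value in prefs.items():
        options.set_preference(name, value)
```

In the script, apply_preferences(firefox_options, manual_proxy_preferences(proxy_host, proxy_port)) would replace the individual set_preference calls.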

Step 6: Specify the Firefox Geckodriver Path

```python
fireFoxDriverPath = os.path.join(os.getcwd(), 'Drivers', 'geckodriver')
```

Explanation:

This line specifies the path to the Firefox Geckodriver executable. Make sure to provide the correct path to the Geckodriver file on your system.
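Because Step 6 hard-codes Drivers/geckodriver relative to the working directory, a quick check that the file is actually there can save a confusing error when the driver starts. find_geckodriver below is a hypothetical helper: it looks in the Drivers folder first (adding .exe on Windows, an assumption about how the file is named there) and then searches the system PATH with shutil.which. Recent Selenium 4 releases also include Selenium Manager, which can locate or download a driver when no path is given.

```python
import os
import shutil
import sys
from typing import Optional


def find_geckodriver(drivers_dir: Optional[str] = None) -> Optional[str]:
    """Return a path to geckodriver, or None if it cannot be found.

    Checks drivers_dir (default: ./Drivers, the layout used in Step 6) first,
    then falls back to the directories on the system PATH.
    """
    name = "geckodriver.exe" if sys.platform.startswith("win") else "geckodriver"
    if drivers_dir is None:
        drivers_dir = os.path.join(os.getcwd(), "Drivers")
    candidate = os.path.join(drivers_dir, name)
    if os.path.isfile(candidate):
        return candidate
    # shutil.which only returns files that are executable.
    return shutil.which(name)
```

If it returns None, download Geckodriver as described earlier or fix the path before creating the driver.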

Step 7: Create a Firefox Driver with Configured Options

```python
firefox_service = Service(fireFoxDriverPath)
driver = webdriver.Firefox(service=firefox_service, options=firefox_options)
```

Explanation:

Here, we wrap the Geckodriver path in a Selenium Service object and pass it, together with the Firefox options configured above, to webdriver.Firefox. The resulting driver runs headless Firefox and sends its traffic through the smart proxy.
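The driver created in Step 7 keeps its browser session open until driver.quit() is called, which is easy to forget when a script raises an exception halfway through. A small context manager built on contextlib makes that cleanup automatic. managed_driver is a hypothetical helper name; make_driver is any zero-argument callable that returns a Selenium driver.

```python
from contextlib import contextmanager
from typing import Any, Callable, Iterator


@contextmanager
def managed_driver(make_driver: Callable[[], Any]) -> Iterator[Any]:
    """Yield a driver from make_driver() and always call driver.quit() afterwards."""
    driver = make_driver()
    try:
        yield driver
    finally:
        # Runs on normal exit and on exceptions: ends the WebDriver session
        # and closes the headless browser.
        driver.quit()

# Example usage with the objects from Step 7:
# with managed_driver(lambda: webdriver.Firefox(service=firefox_service,
#                                               options=firefox_options)) as driver:
#     driver.get("http://httpbin.org/ip")
```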

Start Firefox in Headless Mode and Verify the IP

To check if the proxy is functioning correctly and your traffic is being routed through it, you can use a simple example. We’ll load http://httpbin.org/ip, which echoes back the IP address the request came from, and display the IP returned in the HTML body. If the proxy works, this is the proxy’s IP rather than your own. Add the following code at the end of the script you created in the previous step.

```python
# Access the http://httpbin.org/ip URL to see if the IP has changed
driver.get("http://httpbin.org/ip")
```

In this code, we use Selenium’s driver.get() method to navigate to the http://httpbin.org/ip URL, where we intend to gather information about the IP address.

Within a try block, we use Selenium’s WebDriverWait together with EC.presence_of_element_located to wait until an HTML element with the tag name “body” is present. To avoid waiting too long, we pass WebDriverWait a maximum waiting time in seconds, stored in time_to_wait. This step makes sure the page body exists before we try to read from it.
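WebDriverWait boils down to polling: call a condition repeatedly until it returns something truthy or the time limit passes. The standard-library sketch below mirrors that behavior so the timing logic is visible; it is an explanatory stand-in, not Selenium’s implementation, and wait_until is a hypothetical name. It polls every 0.5 seconds by default, matching WebDriverWait’s default poll frequency, and it treats any exception from the condition as “not ready yet”, which is broader than WebDriverWait’s default of ignoring only NoSuchElementException.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], Optional[T]], timeout: float,
               poll_interval: float = 0.5) -> T:
    """Call condition() until it returns a truthy value or timeout seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            result = condition()
            if result:
                return result
        except Exception:
            pass  # e.g. element not present yet; try again after a pause
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)
```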

Once the body element is present, we read the page’s HTML source through the driver.page_source property. We then locate the “body” tag and extract its text content, which contains the IP address.
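The body text returned by httpbin.org/ip is a small JSON document with an “origin” field. The sketch below pulls the IP out of that text using only the standard library. extract_origin_ip is a hypothetical helper, and the regular-expression fallback is an assumption for the case where the body text is not plain JSON (for example, if a JSON viewer wrapped it).

```python
import ipaddress
import json
import re
from typing import Optional

_IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def extract_origin_ip(body_text: str) -> Optional[str]:
    """Return the IP address reported by httpbin.org/ip, or None if none is found."""
    try:
        origin = json.loads(body_text).get("origin")
    except (ValueError, AttributeError):  # not JSON, or JSON that is not an object
        origin = None
    if isinstance(origin, str) and origin:
        # origin can list several comma-separated addresses; keep the first.
        return origin.split(",")[0].strip()
    # Fallback: first string that looks like, and parses as, an IPv4 address.
    for candidate in _IPV4.findall(body_text):
        try:
            ipaddress.IPv4Address(candidate)  # rejects values such as 999.1.1.1
            return candidate
        except ValueError:
            continue
    return None
```

Pass it the text content of the body element that the script reads after the wait.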

Sample Output:

```
{
```

By following these steps, you can start Firefox in headless mode, access a URL to check the IP, and verify that your requests are indeed being routed through the smart proxy.

4. Practical Example of Scraping Walmart Product Page

This section will delve into a practical example of using headless Firefox Selenium with a smart proxy to scrape valuable data from a Walmart product page. We’ll provide you with code and introduce a valuable function to streamline the scraping process.

Understanding Walmart Product Page Structure

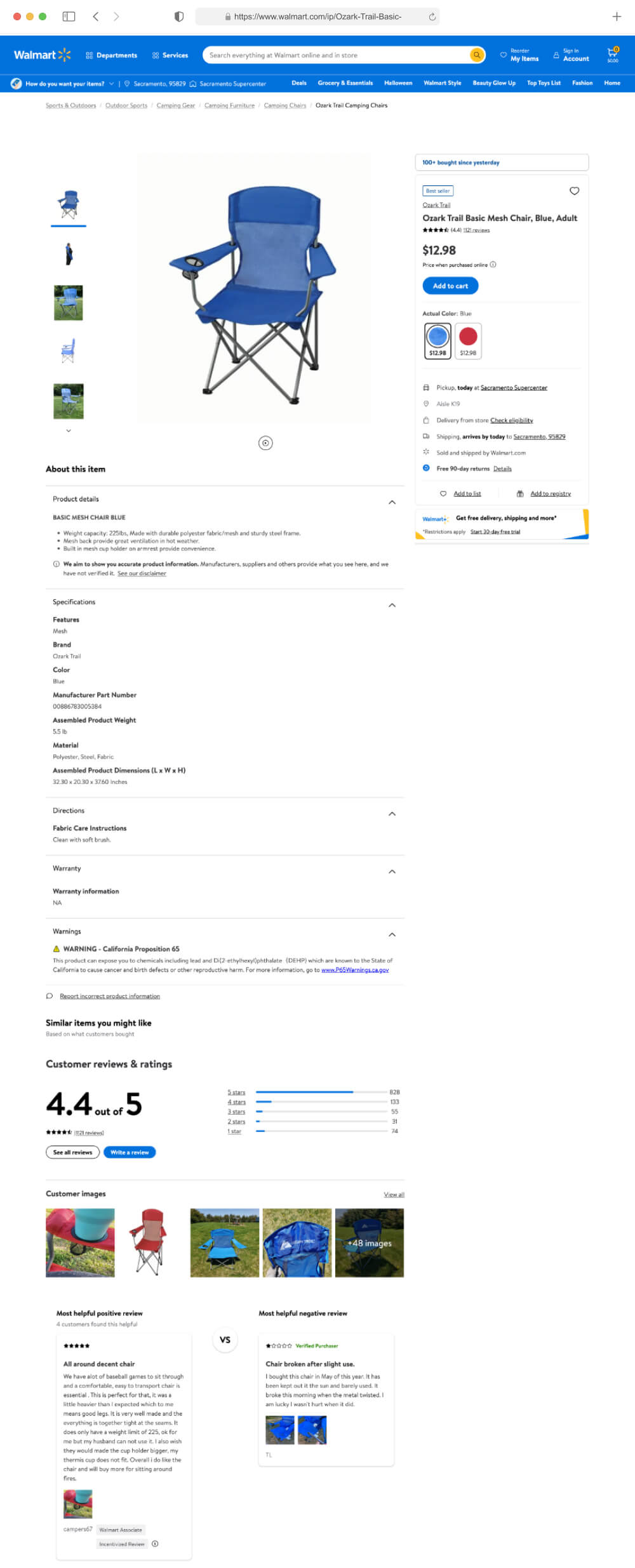

To successfully scrape data from a Walmart product page, it’s crucial to comprehend the underlying structure of the page’s HTML. Walmart’s product pages are well-organized and contain valuable information such as the product name, price, description, reviews, and more.

To scrape the important information from a Walmart product page, you can inspect the page’s HTML and find a unique selector for each element that contains the desired information. Let’s break down the essential elements and how to identify them for scraping:

- Product Title: The product title, which is often the most prominent element on the page, is typically located within an h1 HTML element with the id main-title. We can use the XPath expression '//h1[@id="main-title"]' to precisely locate this title.

- Product Price: The product price is another critical piece of information. It can usually be found within a span HTML element whose data-testid attribute has the value price-wrap. Inside this element, the price sits in a span whose itemprop attribute has the value price. To locate it, we use the XPath expression '//span[@data-testid="price-wrap"]/span[@itemprop="price"]'.

- Product Description: While the product description is not covered in this example, it can be located in various ways depending on its placement in the HTML structure. You can inspect the page’s source code to identify the appropriate HTML element and XPath for scraping the description.

- Customer Reviews: Similarly, customer reviews can be located using XPath expressions that target the HTML elements containing review data, typically found under a section titled “Customer Reviews.”
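Before pointing Selenium at a live page, you can sanity-check the two XPath expressions against a small, hand-written snippet. The sketch below uses xml.etree.ElementTree, which supports only a subset of XPath and only parses well-formed markup, so it is a quick offline check rather than a replacement for Selenium. find_text is a hypothetical helper, and any snippet you test it with should be understood as mimicking only the structure described above, not real Walmart HTML.

```python
import xml.etree.ElementTree as ET
from typing import Optional

TITLE_XPATH = '//h1[@id="main-title"]'
PRICE_XPATH = '//span[@data-testid="price-wrap"]/span[@itemprop="price"]'


def find_text(markup: str, xpath: str) -> Optional[str]:
    """Evaluate an absolute //... XPath against well-formed markup with ElementTree."""
    root = ET.fromstring(markup)
    # ElementTree paths are relative to an element, so '//' becomes './/'.
    element = root.find("." + xpath)
    if element is None:
        return None
    # Join all text inside the element, including nested tags.
    return "".join(element.itertext()).strip()
```

For example, find_text('<div><h1 id="main-title">Example Product</h1></div>', TITLE_XPATH) returns "Example Product".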

Note: The HTML elements referenced above were current when writing this blog. Please be aware that these elements may undergo changes or updates in the future.

Understanding these key elements and their respective XPath expressions is fundamental to web scraping. By analyzing the HTML structure of Walmart product pages, you’ll be well-equipped to extract specific data points for your scraping needs.

In the following sections, we’ll demonstrate how to use Selenium and Python to scrape the product name and price from a Walmart product page while considering the page’s structure and element locations.

Scraping the Important Data from Walmart Page HTML

Let’s jump into a code example that scrapes the product name and price from a Walmart product page using Python and the Selenium WebDriver library. The script’s central feature is the scrape_walmart_product_page function, which encapsulates the logic for this web scraping task.

```python
import os
```

The script begins by defining a function named scrape_walmart_product_page. This function takes a URL as input and employs Selenium to interact with the web page. It waits for specific elements, such as the product title and price, to appear before extracting and displaying them. Waiting first avoids reading the page before those elements have been rendered, which makes the data retrieval more reliable.

The script incorporates essential configurations to enhance its versatility. It generates random user agents, emulating various web browsers and operating systems. This user-agent rotation helps mask the scraping activity, reducing the risk of detection by the target website. Furthermore, the script adjusts Firefox settings: it runs the browser headless (without a visible interface), sets the window dimensions, and disables GPU acceleration to improve performance. It also shows how to route traffic through a proxy server, which is valuable whenever you need IP rotation or anonymity.

To bolster the script’s robustness, it includes a built-in retry mechanism. This mechanism gracefully handles timeouts or exceptions by allowing users to specify the maximum number of retry attempts and the duration of pauses between retries.
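The wait-and-retry behavior described above can be sketched as follows. This is not the original implementation: unlike the original function, which takes only a URL, this version receives the driver as a parameter, and it polls find_element instead of using WebDriverWait so that it runs without Selenium installed. The parameter names max_retries, retry_delay, and timeout are assumptions. Selenium’s By.XPATH constant is the string "xpath", so a real WebDriver can be passed in directly.

```python
import time
from typing import Any, Dict, Optional

TITLE_XPATH = '//h1[@id="main-title"]'
PRICE_XPATH = '//span[@data-testid="price-wrap"]/span[@itemprop="price"]'


def scrape_walmart_product_page(driver: Any, url: str, max_retries: int = 3,
                                retry_delay: float = 5.0,
                                timeout: float = 10.0) -> Optional[Dict[str, str]]:
    """Load url and return {"title": ..., "price": ...}, retrying on failure.

    driver must behave like a Selenium WebDriver: get(url), and
    find_element("xpath", expr) returning an element with a .text attribute.
    Returns None if every attempt fails.
    """
    for attempt in range(1, max_retries + 1):
        try:
            driver.get(url)
            deadline = time.monotonic() + timeout
            # Poll until both elements can be read or the timeout expires.
            while True:
                try:
                    title = driver.find_element("xpath", TITLE_XPATH).text
                    price = driver.find_element("xpath", PRICE_XPATH).text
                    return {"title": title.strip(), "price": price.strip()}
                except Exception:
                    if time.monotonic() >= deadline:
                        raise  # give up on this attempt
                    time.sleep(0.5)
        except Exception as exc:
            print(f"Attempt {attempt}/{max_retries} failed: {exc!r}")
            if attempt < max_retries:
                time.sleep(retry_delay)  # pause before retrying
    return None
```

With a real driver, you would call scrape_walmart_product_page(driver, product_url) and print the returned title and price.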

In the main execution block, the script initializes crucial components such as user agents, Firefox options, and proxy settings. It also specifies the URL of the Walmart product page to be scraped. The scrape_walmart_product_page function is then invoked with the chosen URL, initiating the scraping process.

Output:

```
Product Title: Ozark Trail Basic Mesh Chair, Blue, Adult
```

By studying and adapting this example, you’ll gain practical insight into web scraping techniques while keeping your scraping activity discreet. The script’s output, which includes the product title and price, confirms that it ran successfully and shows how useful it is for extracting data from e-commerce websites like Walmart.

5. Conclusion

In the contemporary landscape, real-time data serves as the lifeblood of numerous businesses and researchers. Whether it’s tracking market trends, monitoring competitor prices, or conducting academic research, the ability to access data from e-commerce giants like Walmart can provide invaluable insights. Web scraping is the linchpin that unlocks this treasure trove of information, and when combined with headless Firefox Selenium and a Crawlbase Smart Proxy, it becomes a potent tool for efficient and effective data collection.

This comprehensive guide has taken you on a journey into the realm of web scraping, with a specific focus on the intricacies of scraping Walmart product pages using Python and powerful automation tools. It has equipped you with the knowledge and tools needed to tackle the challenges posed by IP blocks, CAPTCHAs, and dynamic content. You’re now ready to leverage the full potential of headless Firefox Selenium with a smart proxy to scrape Walmart’s vast product listings.

Whether you’re a seasoned data scientist, a business analyst, or an enthusiast eager to explore the world of web scraping, this guide has provided you with a roadmap to success. As you embark on your data-driven journey, you’ll gain practical experience in extracting, analyzing, and harnessing data from one of the world’s largest online retailers.

Web scraping with headless Firefox Selenium and a smart proxy offers a powerful means to access and utilize the wealth of data available on the web. Remember to use this newfound knowledge responsibly, respecting website policies and legal considerations, as you leverage web scraping to drive insights and innovations in your respective fields.

6. Frequently Asked Questions

Q. What is the benefit of using headless Firefox Selenium with a smart proxy for web scraping?

Using headless Firefox Selenium with a smart proxy offers several benefits for web scraping, including the ability to bypass IP blocks and CAPTCHAs, scrape more pages without getting banned, obtain more accurate and consistent results, and run scraping operations faster and with fewer resources. This combination enhances the efficiency and effectiveness of data collection, making it a preferred choice for web scraping professionals.

Q. How can I obtain a smart proxy for web scraping, and what is its role in the process?

You can obtain a smart proxy from a provider like Crawlbase. These smart proxies act as intermediaries between your web scraping application and the target website, effectively managing and rotating IP addresses to bypass IP bans and CAPTCHAs. They play a crucial role in maintaining uninterrupted data collection and ensuring the anonymity of your scraping activities.

Q. What are some key elements to consider when scraping data from Walmart product pages?

When scraping data from Walmart product pages, it’s essential to understand the page’s HTML structure, identify unique selectors for elements containing the desired information (e.g., product title and price), and use tools like Selenium and XPath expressions to locate and extract data. Additionally, consider that the HTML structure may change over time, so periodic adjustments to your scraping code may be necessary.