In this blog, we will show how to use the Crawlbase Smart Proxy to extract the ASIN of a selected Amazon product. We will also show how to pass Crawlbase Crawling API parameters to the Smart Proxy, which gives you more control over how data is crawled. By the end, we will have structured JSON for the Amazon product page that is easy to consume. Finally, we will answer a few questions we are frequently asked about web scraping Amazon and its product pages, also known as ASIN pages.

Step-by-Step: Extracting Amazon ASIN with Crawlbase Smart Proxy

Step 1: Start by creating a free Crawlbase account to access your Smart Proxy token.

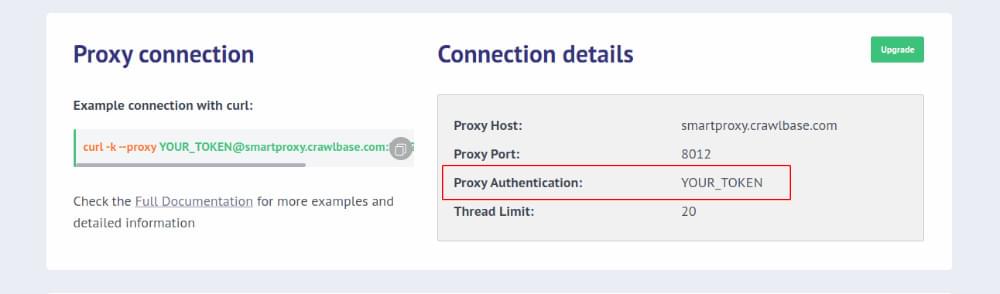

Step 2: Navigate to the Crawlbase Smart Proxy Dashboard to retrieve your free access token found under the ‘Connection details’ section.

Step 3: Select the Amazon product you wish to crawl. For this example, let’s crawl the Amazon listing for the OtterBox iPhone 14 Pro Max (ONLY) Commuter Series Case. Its URL is:

https://www.amazon.com/OtterBox-COMMUTER-iPhone-Pro-ONLY/dp/B0B7CH8DMR/
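The ASIN is also visible in the product URL itself: it is the 10-character segment after /dp/ (here, B0B7CH8DMR). A minimal sketch that pulls it out with a regular expression, assuming the common /dp/ and /gp/product/ URL shapes; asin_from_url is a hypothetical helper, and other Amazon URL formats may need extra patterns.

```python
import re
from typing import Optional

# Matches the 10-character ASIN that follows /dp/ or /gp/product/ in a product URL.
# Assumption: the URLs you feed in use one of these two path shapes.
ASIN_IN_URL = re.compile(r"/(?:dp|gp/product)/([A-Z0-9]{10})(?:[/?]|$)")


def asin_from_url(url: str) -> Optional[str]:
    """Return the ASIN embedded in an Amazon product URL, or None if absent."""
    match = ASIN_IN_URL.search(url)
    return match.group(1) if match else None


if __name__ == "__main__":
    url = "https://www.amazon.com/OtterBox-COMMUTER-iPhone-Pro-ONLY/dp/B0B7CH8DMR/"
    print(asin_from_url(url))  # B0B7CH8DMR
```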

Step 4: To send a request to the Smart Proxy, copy the following line and paste it into your terminal:

```shell
curl -x "http://USER_TOKEN@smartproxy.crawlbase.com:8012" -k "https://www.amazon.com/OtterBox-COMMUTER-iPhone-Pro-ONLY/dp/B0B7CH8DMR/"
```

This curl command can also be found in the Crawlbase Smart Proxy Documentation. Remember to replace “USER_TOKEN” with your access token and insert the URL of the product you wish to crawl.

As you can see, the curl command uses two options. The first, -x (equivalent to --proxy), passes the proxy host:port together with the proxy authentication. The Crawlbase Smart Proxy does not require a password for authentication: proxy usernames are unique and secure, so the username, your USER_TOKEN, is enough on its own. If your web scraping application nevertheless requires a password, use any string you prefer, such as your company name or simply “Crawlbase”.

The second option is the -k flag (short for --insecure). It tells curl to allow connections to SSL/TLS-protected (HTTPS) sites without verifying the authenticity of the certificate presented by the server. The Smart Proxy requires this option because it needs to handle the request itself, forwarding it to the Crawling API, which bypasses CAPTCHAs and blocks before requesting the original website. You must always use the -k or --insecure flag when sending requests to the Smart Proxy.
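For readers moving on to Python, the two curl options map roughly onto the requests library as follows: -x becomes a proxies mapping that carries the token as the proxy username, and -k becomes verify=False. A minimal sketch; the smartproxy.crawlbase.com:8012 host and port are assumed from Crawlbase's Smart Proxy documentation and should be checked against your dashboard, smart_proxy_kwargs is a hypothetical helper, and the optional password reflects the note above that any string may be used.

```python
from typing import Optional
from urllib.parse import quote

# Assumption: Smart Proxy host and HTTP port as listed in Crawlbase's docs; confirm in your dashboard.
SMART_PROXY_HOST = "smartproxy.crawlbase.com:8012"


def smart_proxy_kwargs(token: str, password: Optional[str] = None) -> dict:
    """Build requests keyword arguments equivalent to `curl -x ... -k`."""
    # The token is the proxy username; a password is optional and may be any string.
    credentials = quote(token, safe="")
    if password is not None:
        credentials += ":" + quote(password, safe="")
    proxy_url = f"http://{credentials}@{SMART_PROXY_HOST}"
    return {
        # -x / --proxy: route both HTTP and HTTPS targets through the Smart Proxy
        "proxies": {"http": proxy_url, "https": proxy_url},
        # -k / --insecure: skip TLS certificate verification
        "verify": False,
    }


if __name__ == "__main__":
    print(smart_proxy_kwargs("USER_TOKEN"))
```

With requests installed, the result can be unpacked straight into a call such as requests.get(url, **smart_proxy_kwargs("USER_TOKEN")).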

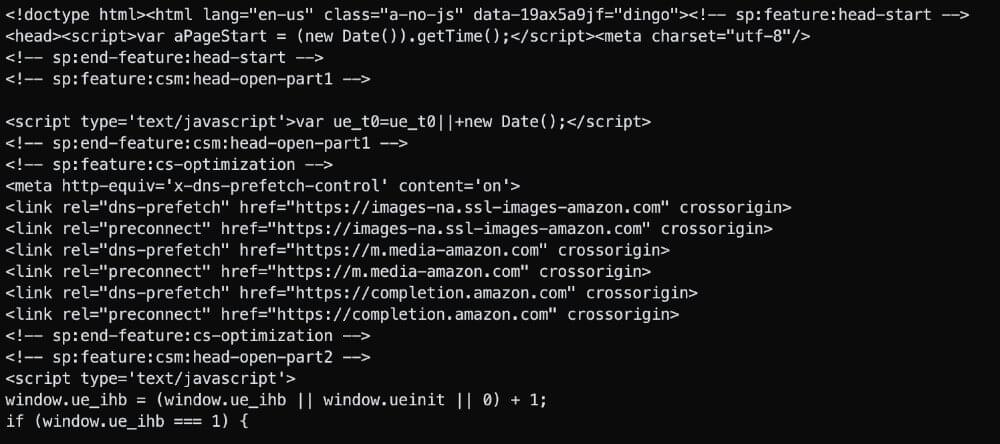

Step 5: If everything is set up correctly, you should receive an HTML response similar to the one shown in this screenshot.

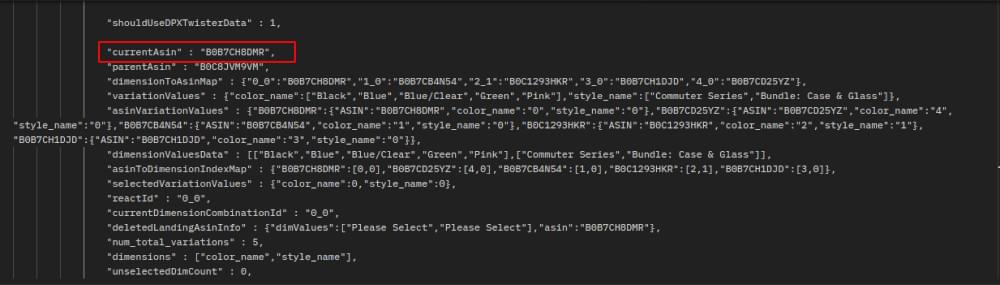

In the example above, we crawled the target Amazon page, and we can see that the ASIN we were looking for appears in the HTML as the value of currentAsin.
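Rather than searching the HTML by eye, the value can be pulled out programmatically. A minimal sketch, assuming the page source embeds the ASIN as a key/value pair of the form "currentAsin" : "B0B7CH8DMR"; that format is an assumption about Amazon's markup, which changes over time, and extract_current_asin is a hypothetical helper.

```python
import re
from typing import Optional

# Assumption: the page source contains a pair such as "currentAsin" : "B0B7CH8DMR".
# The pattern tolerates optional quotes around the key and whitespace around the colon.
CURRENT_ASIN = re.compile(r"""["']?currentAsin["']?\s*:\s*["']([A-Z0-9]{10})["']""")


def extract_current_asin(html: str) -> Optional[str]:
    """Return the first currentAsin value found in the HTML, or None."""
    match = CURRENT_ASIN.search(html)
    return match.group(1) if match else None


if __name__ == "__main__":
    sample = '<script>var data = {"currentAsin" : "B0B7CH8DMR"};</script>'
    print(extract_current_asin(sample))  # B0B7CH8DMR
```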

Extracting Amazon ASIN using Python and Smart Proxy

In the previous section, we used curl to make a basic request that returned the product page, from which we extracted the ASIN. For more advanced usage, we will now use Python to automate these requests and parse the response.

For the Python code, we will use only the requests library. Create a file named smartproxy_amazon_scraper.py:

```python
import requests
```
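A minimal sketch of smartproxy_amazon_scraper.py, assuming the smartproxy.crawlbase.com:8012 endpoint from Crawlbase's Smart Proxy documentation and a USER_TOKEN placeholder that you replace with your own token. It fetches the product page with verify=False (the counterpart of curl's -k) and prints the currentAsin value; the regular expression is an assumption about Amazon's markup, not a stable interface.

```python
import re
from typing import Optional

USER_TOKEN = "USER_TOKEN"  # replace with the token from your Smart Proxy dashboard
# Assumption: Smart Proxy host/port as listed in Crawlbase's docs.
PROXY_URL = f"http://{USER_TOKEN}@smartproxy.crawlbase.com:8012"
TARGET_URL = "https://www.amazon.com/OtterBox-COMMUTER-iPhone-Pro-ONLY/dp/B0B7CH8DMR/"

# Assumption: the ASIN appears in the page source as "currentAsin" : "<10 characters>".
CURRENT_ASIN = re.compile(r'"currentAsin"\s*:\s*"([A-Z0-9]{10})"')


def fetch_via_smart_proxy(url: str) -> str:
    """Fetch a page through the Smart Proxy and return its HTML."""
    # Imported here so the parsing helper below works without requests installed.
    import requests  # pip install requests
    import urllib3

    # verify=False mirrors curl's -k; silence the warning urllib3 emits for it.
    urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)
    proxies = {"http": PROXY_URL, "https": PROXY_URL}
    response = requests.get(url, proxies=proxies, verify=False, timeout=60)
    response.raise_for_status()
    return response.text


def extract_current_asin(html: str) -> Optional[str]:
    """Return the currentAsin value from the page source, or None."""
    match = CURRENT_ASIN.search(html)
    return match.group(1) if match else None


if __name__ == "__main__":
    if USER_TOKEN == "USER_TOKEN":
        print("Replace USER_TOKEN with your Smart Proxy token first.")
    else:
        print(extract_current_asin(fetch_via_smart_proxy(TARGET_URL)))
```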

Then you can simply run the above script in your terminal with python smartproxy_amazon_scraper.py.

If the request succeeds, the page’s HTML is printed in your terminal. You can parse this response into structured data and store it in a database for easy retrieval and analysis.
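As one way to structure and store the result, the sketch below pulls the ASIN and product title out of the HTML and writes them to a SQLite table using only the standard library. The productTitle element id and the currentAsin pattern are assumptions about Amazon's current markup, and the products table and helper names are hypothetical.

```python
import html as html_lib
import re
import sqlite3

# Assumptions about Amazon's markup; adjust if the page structure changes.
ASIN_RE = re.compile(r'"currentAsin"\s*:\s*"([A-Z0-9]{10})"')
TITLE_RE = re.compile(r'<span[^>]*id="productTitle"[^>]*>(.*?)</span>', re.DOTALL)


def parse_product(page: str) -> dict:
    """Extract a small structured record from a product page's HTML."""
    asin = ASIN_RE.search(page)
    title = TITLE_RE.search(page)
    return {
        "asin": asin.group(1) if asin else None,
        # Decode entities such as &amp; and collapse runs of whitespace.
        "title": " ".join(html_lib.unescape(title.group(1)).split()) if title else None,
    }


def save_product(conn: sqlite3.Connection, record: dict) -> None:
    """Insert or update a product row keyed by ASIN (hypothetical schema)."""
    if record["asin"] is None:
        raise ValueError("no ASIN found; refusing to store the record")
    conn.execute("CREATE TABLE IF NOT EXISTS products (asin TEXT PRIMARY KEY, title TEXT)")
    conn.execute("INSERT OR REPLACE INTO products (asin, title) VALUES (:asin, :title)", record)
    conn.commit()


if __name__ == "__main__":
    page = '<span id="productTitle"> OtterBox Commuter Case </span> {"currentAsin" : "B0B7CH8DMR"}'
    conn = sqlite3.connect(":memory:")
    save_product(conn, parse_product(page))
    print(conn.execute("SELECT asin, title FROM products").fetchall())
```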

Customizing Requests with Crawling API Parameters

Let’s dive deeper and customize Smart Proxy requests with Crawlbase’s Crawling API parameters. You can pass these parameters to the Smart Proxy in a request header of the form CrawlbaseAPI-Parameters: ... For example:
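When several parameters are needed at once, they can be combined into a single header value. A minimal sketch, assuming the header value uses query-string syntax with parameters joined by & (check the Smart Proxy documentation for the exact format); crawlbase_headers is a hypothetical helper name.

```python
from urllib.parse import urlencode


def crawlbase_headers(**params: str) -> dict:
    """Build the CrawlbaseAPI-Parameters header from keyword arguments.

    Assumption: multiple Crawling API parameters are joined query-string style (a=1&b=2).
    """
    return {"CrawlbaseAPI-Parameters": urlencode(params)}


if __name__ == "__main__":
    print(crawlbase_headers(autoparse="true", country="GB"))
    # {'CrawlbaseAPI-Parameters': 'autoparse=true&country=GB'}
```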

Example #1:

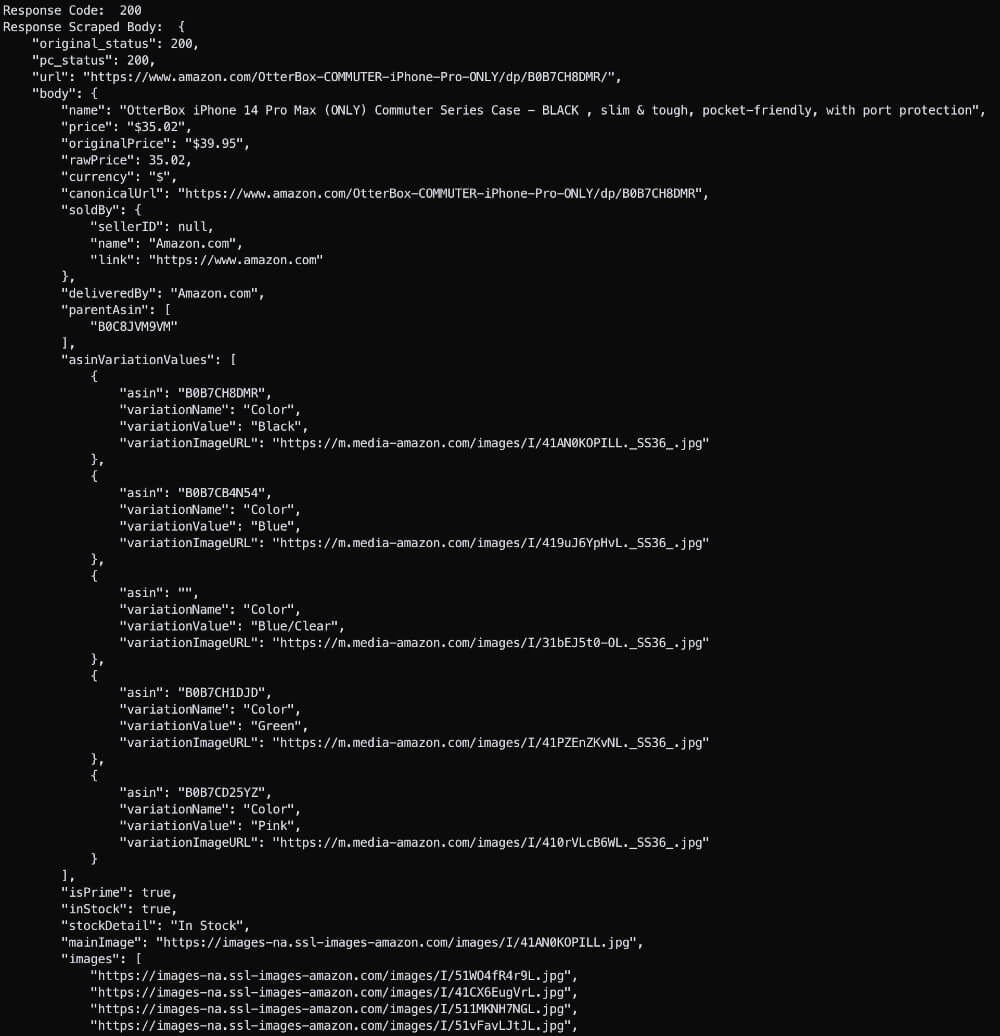

In this Python script, we set the CrawlbaseAPI-Parameters header to autoparse=true, which instructs the Smart Proxy to parse the page automatically and return a JSON response. You can then use this structured data as your project requires.

```python
# pip install requests
```
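A minimal sketch of such a script, assuming the smartproxy.crawlbase.com:8012 endpoint from Crawlbase's Smart Proxy documentation and a USER_TOKEN placeholder. It sends autoparse=true in the CrawlbaseAPI-Parameters header and pretty-prints the JSON body; the keys inside that JSON depend on Crawlbase's parser and are not assumed here, and the helper names are hypothetical.

```python
import json

USER_TOKEN = "USER_TOKEN"  # replace with your Smart Proxy token
PROXY_URL = f"http://{USER_TOKEN}@smartproxy.crawlbase.com:8012"  # assumed endpoint
TARGET_URL = "https://www.amazon.com/OtterBox-COMMUTER-iPhone-Pro-ONLY/dp/B0B7CH8DMR/"
HEADERS = {"CrawlbaseAPI-Parameters": "autoparse=true"}


def fetch_autoparsed(url: str) -> str:
    """Request a page with autoparse=true and return the raw response body."""
    import requests  # pip install requests
    import urllib3

    urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)
    proxies = {"http": PROXY_URL, "https": PROXY_URL}
    response = requests.get(url, headers=HEADERS, proxies=proxies, verify=False, timeout=60)
    response.raise_for_status()
    return response.text


def pretty_json(body: str) -> str:
    """Parse a JSON body and re-serialize it with indentation for reading."""
    return json.dumps(json.loads(body), indent=2, ensure_ascii=False)


if __name__ == "__main__":
    if USER_TOKEN == "USER_TOKEN":
        print("Replace USER_TOKEN with your Smart Proxy token first.")
    else:
        print(pretty_json(fetch_autoparsed(TARGET_URL)))
```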

After running the script in your terminal, you’ll get the response in JSON format, and the data is now much more structured.

Example #2:

To geolocate your requests to a particular country, include the “country=” parameter with a two-letter country code, such as “country=US”. The example below uses “country=GB” (United Kingdom):

```python
# pip install requests
```
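A minimal sketch of a geolocated request, assuming the smartproxy.crawlbase.com:8012 endpoint from Crawlbase's Smart Proxy documentation and a USER_TOKEN placeholder. It uses country=GB, matching the United Kingdom result discussed below, and checks that the code is two ASCII letters before sending it; that check covers only the format, not whether a given country is supported. The helper names are hypothetical.

```python
import re

USER_TOKEN = "USER_TOKEN"  # replace with your Smart Proxy token
PROXY_URL = f"http://{USER_TOKEN}@smartproxy.crawlbase.com:8012"  # assumed endpoint
TARGET_URL = "https://www.amazon.com/OtterBox-COMMUTER-iPhone-Pro-ONLY/dp/B0B7CH8DMR/"


def country_header(code: str) -> dict:
    """Return the CrawlbaseAPI-Parameters header for a two-letter country code."""
    code = code.strip().upper()
    if not re.fullmatch(r"[A-Z]{2}", code):
        raise ValueError(f"expected a two-letter country code, got {code!r}")
    return {"CrawlbaseAPI-Parameters": f"country={code}"}


def fetch_from_country(url: str, code: str) -> str:
    """Fetch a page through the Smart Proxy, geolocated to the given country."""
    import requests  # pip install requests
    import urllib3

    urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)
    proxies = {"http": PROXY_URL, "https": PROXY_URL}
    response = requests.get(
        url, headers=country_header(code), proxies=proxies, verify=False, timeout=60
    )
    response.raise_for_status()
    return response.text


if __name__ == "__main__":
    if USER_TOKEN == "USER_TOKEN":
        print("Replace USER_TOKEN with your Smart Proxy token first.")
    else:
        print(fetch_from_country(TARGET_URL, "GB")[:500])  # first 500 characters of the HTML
```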

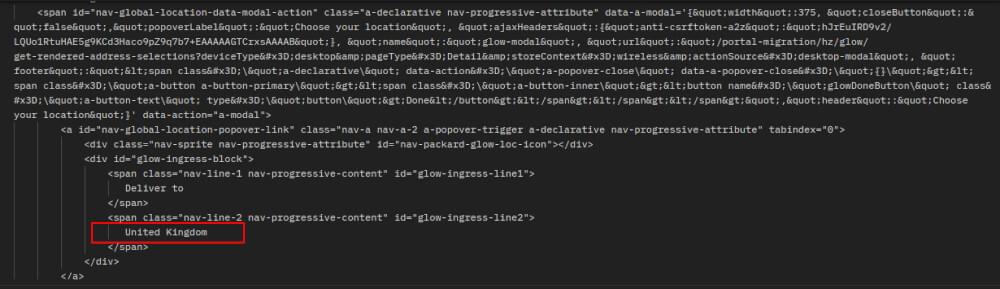

After running the script in your terminal, you’ll get the response in HTML, as shown below:

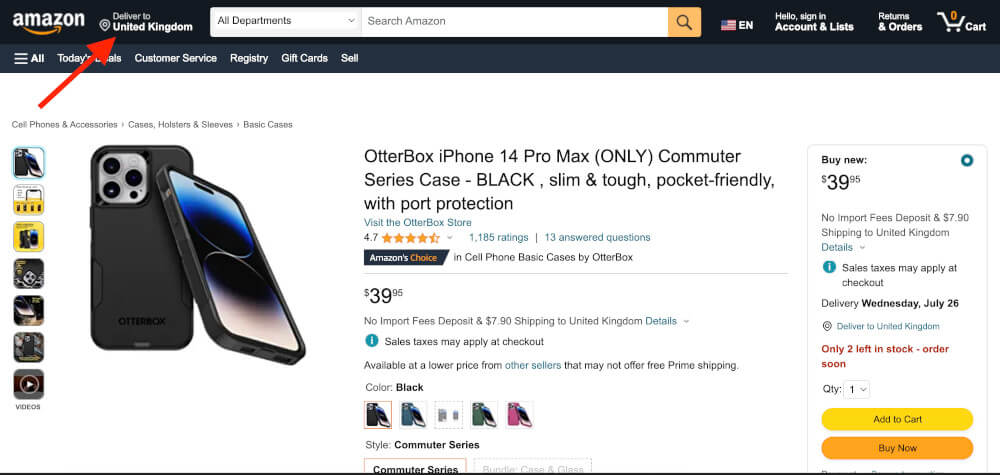

You can save the output HTML as smartproxy_amazon_scraper.html on your local machine. When you open the file in a browser, you will notice that the page says United Kingdom under “Deliver to”, which means your request to Amazon was routed from GB, as instructed in the code above.
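Saving and checking the page can also be scripted. A minimal sketch, assuming the HTML string is the body returned by a geolocated request; searching for the literal text "United Kingdom" is a rough heuristic rather than a parse of Amazon's delivery widget, and save_html and mentions_location are hypothetical helpers.

```python
from pathlib import Path


def save_html(html: str, path: str = "smartproxy_amazon_scraper.html") -> Path:
    """Write the response body to disk so it can be opened in a browser."""
    target = Path(path)
    target.write_text(html, encoding="utf-8")
    return target


def mentions_location(html: str, location: str = "United Kingdom") -> bool:
    """Rough, case-insensitive check for the expected delivery location."""
    return location.lower() in html.lower()


if __name__ == "__main__":
    # Stand-in for a real response body.
    page = "<html><span>Deliver to</span><span>United Kingdom</span></html>"
    saved = save_html(page)
    print(saved.resolve(), mentions_location(saved.read_text(encoding="utf-8")))
```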

In the two examples above, we showed how to crawl a web page with Crawlbase Smart Proxy and how to use the capabilities of the Crawlbase Crawling API through the CrawlbaseAPI-Parameters header. Specifically, we introduced the autoparse=true parameter, which returns structured output for easier data processing, and the country=GB parameter (or any valid two-letter country code), which enables targeted geolocation.

Crawlbase Smart Proxy Makes Redirects Easy!

Ordinary proxies don’t handle URL redirects, but Crawlbase Smart Proxy does; that’s part of why we call it Smart Proxy. It uses Crawling API features to handle redirects by intercepting incoming requests, evaluating redirect rules set by users, and returning the appropriate HTTP status codes to clients, routing them from the source URL to the target URL according to the redirect type (e.g., 301 or 302).
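For reference, the redirect types mentioned here follow standard HTTP semantics (RFC 9110): 301 and 308 are permanent redirects, while 302, 303 and 307 are temporary. A small sketch that classifies a status code; redirect_kind and redirect_chain are hypothetical helpers, and redirect_chain uses plain requests without the Smart Proxy, so it shows what an ordinary client sees when it follows redirects itself.

```python
def redirect_kind(status: int) -> str:
    """Classify an HTTP status code as a permanent or temporary redirect."""
    if status in (301, 308):
        return "permanent"
    if status in (302, 303, 307):
        return "temporary"
    return "not a redirect"


def redirect_chain(url: str) -> list:
    """Follow redirects with plain requests and list (status, url) for each hop."""
    import requests  # pip install requests

    response = requests.get(url, allow_redirects=True, timeout=30)
    hops = [(r.status_code, r.url) for r in response.history]
    hops.append((response.status_code, response.url))
    return hops


if __name__ == "__main__":
    for code in (301, 302, 200):
        print(code, redirect_kind(code))
```

Calling redirect_chain("https://amazon.com/") would list each hop with its status code, though without a proxy Amazon may block the request or answer differently.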

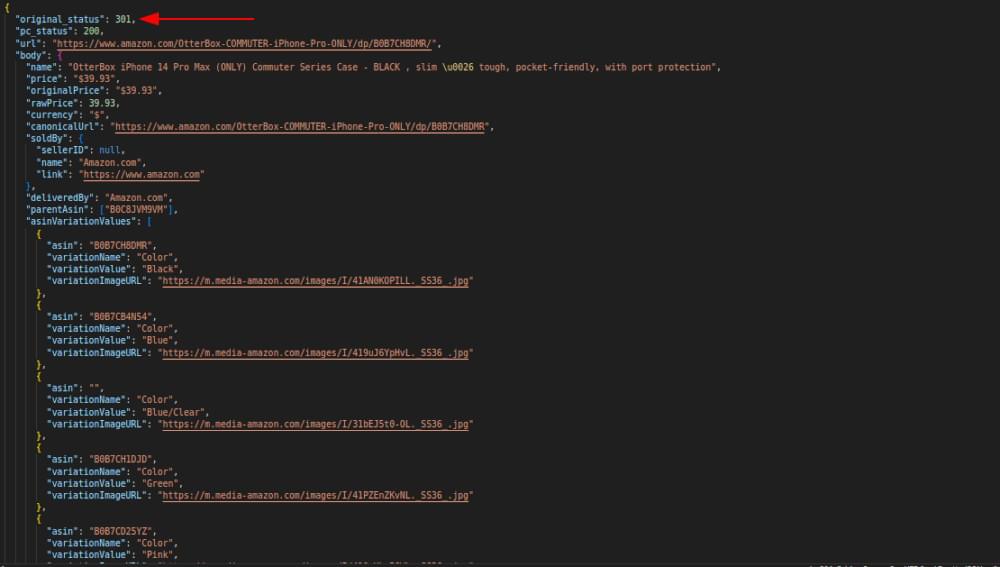

Let’s demonstrate one redirect scenario by targeting the same URL as before, but with the “www” prefix removed. The modified URL triggers a redirect, which shows how Crawlbase Smart Proxy handles this type of redirection. Without the “www” prefix, the URL looks like this:

https://amazon.com/OtterBox-COMMUTER-iPhone-Pro-ONLY/dp/B0B7CH8DMR/

We will continue using the Python code from earlier; the request for this redirect example follows the same structure as before:

```python
# pip install requests
```
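A minimal sketch of the redirect request, assuming the smartproxy.crawlbase.com:8012 endpoint from Crawlbase's Smart Proxy documentation and a USER_TOKEN placeholder. To obtain the JSON response described below, it assumes the Crawling API's format=json parameter is passed through the CrawlbaseAPI-Parameters header; the original_status, pc_status and url field names are assumed from the Crawling API's JSON response format, and the helper names are hypothetical.

```python
import json

USER_TOKEN = "USER_TOKEN"  # replace with your Smart Proxy token
PROXY_URL = f"http://{USER_TOKEN}@smartproxy.crawlbase.com:8012"  # assumed endpoint
# Same product URL as before, but without the "www" prefix.
TARGET_URL = "https://amazon.com/OtterBox-COMMUTER-iPhone-Pro-ONLY/dp/B0B7CH8DMR/"
# Assumption: format=json requests the Crawling API's JSON envelope.
HEADERS = {"CrawlbaseAPI-Parameters": "format=json"}


def fetch_json_envelope(url: str) -> str:
    """Request a page through the Smart Proxy and return the raw JSON body."""
    import requests  # pip install requests
    import urllib3

    urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)
    proxies = {"http": PROXY_URL, "https": PROXY_URL}
    response = requests.get(url, headers=HEADERS, proxies=proxies, verify=False, timeout=60)
    # Return the body as-is so the status fields can be inspected.
    return response.text


def status_summary(body: str) -> dict:
    """Extract the status fields from the JSON envelope (values may be strings or ints)."""
    data = json.loads(body)
    return {
        "original_status": int(data["original_status"]),
        "pc_status": int(data["pc_status"]) if "pc_status" in data else None,
        "url": data.get("url"),
    }


if __name__ == "__main__":
    if USER_TOKEN == "USER_TOKEN":
        print("Replace USER_TOKEN with your Smart Proxy token first.")
    else:
        print(status_summary(fetch_json_envelope(TARGET_URL)))
```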

After running the script in your terminal, you will receive the response in JSON format. In it, the “original_status” field has the value “301”, the HTTP status code for a permanent redirect (Moved Permanently).

Summary

Extracting Amazon ASINs at scale allows developers to quickly pull important product information. This data is crucial for market research, pricing, and competitor comparison. By using web scraping tools, users can automate the collection of ASINs from large product lists, saving a lot of time and effort.

To summarize, Crawlbase Smart Proxy offers custom geolocation, unlimited bandwidth, AI-driven crawling, rotating IP addresses, and a high success rate. Its features, including a large proxy pool, anonymous crawling, and real-time monitoring, make it an essential tool for developers who collect web data. Sign up now and get 5,000 free requests with Crawlbase Smart Proxy!

Frequently Asked Questions

Q: What is an Amazon ASIN?

A: An Amazon ASIN (Amazon Standard Identification Number) is a unique 10-character alphanumeric code assigned to products sold on Amazon’s marketplace. It serves as a product identifier and is used to differentiate items in Amazon’s vast catalog. Most ASINs for non-book products begin with “B0”; for books, the ASIN is usually the book’s 10-digit ISBN.
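A quick format check based on that description: ten uppercase letters or digits, with the B0 prefix typical of non-book items and the ISBN-10 shape (nine digits followed by a digit or X) for books. This only checks the shape of the string and cannot confirm that Amazon actually lists the product; classify_asin is a hypothetical helper.

```python
import re
from typing import Optional

ASIN_SHAPE = re.compile(r"[A-Z0-9]{10}")
ISBN10_SHAPE = re.compile(r"\d{9}[\dX]")


def classify_asin(value: str) -> Optional[str]:
    """Describe the shape of a candidate ASIN, or return None if it cannot be one."""
    if not ASIN_SHAPE.fullmatch(value):
        return None
    if ISBN10_SHAPE.fullmatch(value):
        return "isbn-10"  # books usually use their ISBN-10 as the ASIN
    if value.startswith("B0"):
        return "b0-asin"  # typical prefix for non-book products
    return "other"


if __name__ == "__main__":
    for candidate in ["B0B7CH8DMR", "0306406152", "b0b7ch8dmr", "B0B7CH8"]:
        print(candidate, classify_asin(candidate))
```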

Q: Is it legal to scrape Amazon?

A: Scraping publicly accessible Amazon data is generally considered legal, although the rules vary by jurisdiction and by how the data is used. It’s crucial to avoid scraping data that requires login credentials and to ensure that collected datasets do not contain any sensitive or copyrighted content.

Q: What is SKU?

A: SKU (Stock Keeping Unit) is a unique code assigned by sellers or retailers to track and manage their inventory. Unlike ASIN, SKU is not specific to Amazon’s platform and can be used across multiple sales channels.

Q: Why is it important to scrape ASINs for products listed on Amazon?

- Scraping ASINs for products listed on Amazon is important because ASINs act as unique identifiers for each item in Amazon’s vast marketplace.

- By retrieving ASINs through web scraping, developers can gather essential product details, pricing, availability, and customer reviews, empowering them to build custom applications, analyze trends, and compare products across categories.

- Scraping ASINs enables developers to integrate Amazon’s product data into their own applications and websites seamlessly.

- By tracking ASINs and monitoring their performance over time, businesses and developers can optimize marketing strategies, manage inventory, and stay competitive in the e-commerce landscape.
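The last point can be illustrated with a small, hypothetical price tracker keyed by ASIN: it stores dated price observations in cents (to avoid floating-point rounding) and reports the change between the earliest and latest one. The class name and sample prices are illustrative only; real observations would come from periodic scrapes.

```python
from collections import defaultdict
from datetime import date
from typing import Optional


class PriceTracker:
    """Hypothetical helper: dated price observations per ASIN, in cents."""

    def __init__(self) -> None:
        # asin -> list of (date, price_cents), kept in date order
        self._history: dict = defaultdict(list)

    def record(self, asin: str, day: date, price_cents: int) -> None:
        self._history[asin].append((day, price_cents))
        self._history[asin].sort()

    def change(self, asin: str) -> Optional[int]:
        """Latest price minus earliest price, or None with fewer than two observations."""
        points = self._history.get(asin, [])
        if len(points) < 2:
            return None
        return points[-1][1] - points[0][1]


if __name__ == "__main__":
    tracker = PriceTracker()
    # Illustrative prices only, not real observations.
    tracker.record("B0B7CH8DMR", date(2024, 1, 1), 5499)
    tracker.record("B0B7CH8DMR", date(2024, 2, 1), 4999)
    print(tracker.change("B0B7CH8DMR"))  # -500
```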

Q: What are the key features of Crawlbase Smart Proxy?

A: A key feature of the Smart Proxy is its rotating IP addresses, which maintain anonymity during crawling; the rotating pool includes 140 million residential and data center proxies. The Smart Proxy also helps bypass CAPTCHA challenges, with a 99% success rate for your crawling and scraping, and offers custom geolocation for region-specific data access.