In today’s rapidly evolving digital landscape, web data extraction and analysis have become more crucial than ever for businesses seeking a competitive edge. Among the many web scraping solutions available, Crawlbase offers a range of products for crawling websites and scraping data. One of its flagship offerings is the “Crawler”, a tool that lets you push URLs to it asynchronously through the Crawling API. The Crawler processes these requests and pushes the crawled page data back to your server’s webhook as soon as each page is ready. This workflow eliminates the need for continuous polling and gives you access to freshly extracted data as it arrives, which makes scraping large volumes of data more efficient.

In this blog post, we will explore the key features of the Crawlbase Crawler, specifically its asynchronous capabilities and its webhook integration, both of which matter when retrieving large volumes of data.

Creating the Crawlbase Crawler

To use the Crawler, you must first create it from your Crawlbase account dashboard. Depending on your needs, you can create one of two types of Crawler: TCP or JavaScript (JS). Use the TCP Crawler to crawl static pages. Use the JS Crawler when the content you need is generated via JavaScript, either because it’s a JavaScript-built page (React, Angular, etc.) or because the content is dynamically generated in the browser. Let’s create a TCP Crawler for this example.

1. Creating a Webhook

While creating a Crawler, you need to give the URL of your webhook. A webhook lets you receive scraped website data in real time: the extracted HTML content of each URL is delivered directly to your server endpoint, where it can feed straight into your data processing pipeline. This immediate delivery keeps you up to date with dynamic web content, which makes webhooks an essential part of the Crawlbase Crawler. A webhook for the Crawlbase Crawler should:

- Be publicly reachable from Crawlbase servers

- Be ready to receive POST calls

- Respond within 200ms with a status code 200, 201, or 204 without content
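As a framework-free illustration of these three requirements, here is a minimal sketch that uses only Python’s standard library. The handler class, the `store_delivery` helper, the port, and the output file name are all assumptions made for this example and are not part of Crawlbase; the Django receiver built in the steps that follow serves the same purpose.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from pathlib import Path

OUTPUT_FILE = Path("crawled_pages.txt")  # hypothetical file name


def store_delivery(body: bytes, output_file: Path = OUTPUT_FILE) -> int:
    """Append one delivery to a file and return the status code to send."""
    with output_file.open("ab") as fh:
        fh.write(body + b"\n")
    return 204  # success without content, one of the accepted codes


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read exactly as many bytes as the sender announced.
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        # Keep the work here small so the reply goes out quickly.
        status = store_delivery(body)
        self.send_response(status)
        self.end_headers()


def make_server(port: int = 8000) -> HTTPServer:
    """Bind a server to a local port; call serve_forever() to start it."""
    return HTTPServer(("127.0.0.1", port), WebhookHandler)
```

Calling `make_server().serve_forever()` starts listening on local port 8000. Anything slower than appending to a file is better handed to a background job so that the reply stays within the 200ms budget.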

Let’s create a simple webhook receiver with the Python Django framework. Make sure you have Python and Django installed, then follow these steps:

Step 1: Create a new Django project and app using the following commands:

# Command to create the Project:

Step 2: In the webhook_app directory, create a views.py file and define a view to receive the webhook data:

# webhook_app/views.py

Step 3: Configure URL routing. In the webhook_project directory, edit the urls.py file to add a URL pattern for the webhook receiver:

# webhook_project/urls.py

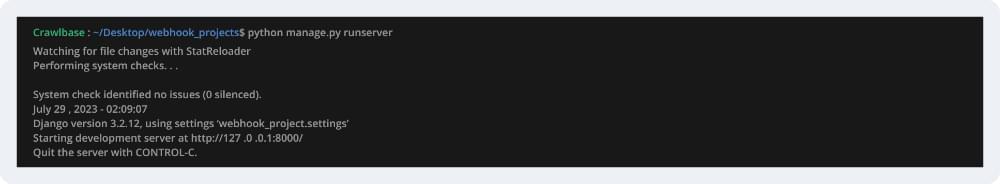

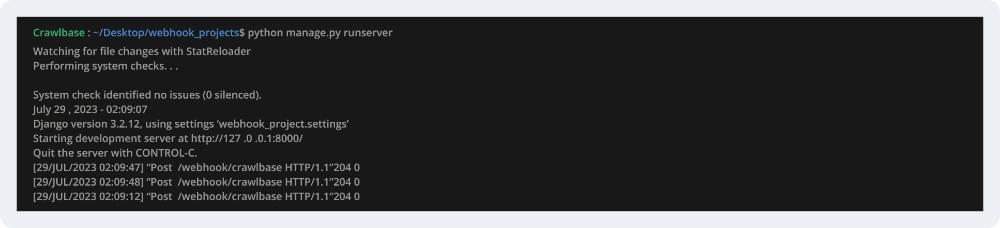

Step 4: Run the Django development server. Start the Django development server to test the webhook receiver:

# Command to start server

The app will start running on localhost, port 8000.

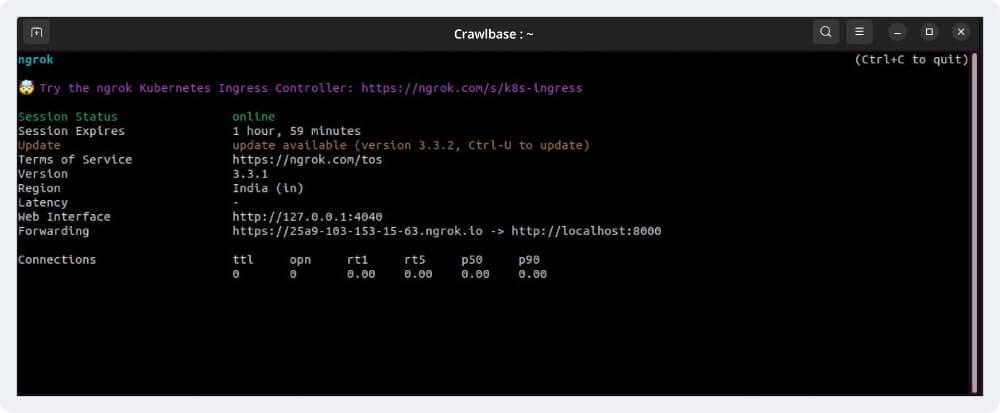

After creating the webhook, the next step is to make it publicly available on the internet. For this, we can use ngrok. Since our webhook is running on localhost port 8000, we need to point ngrok at port 8000.

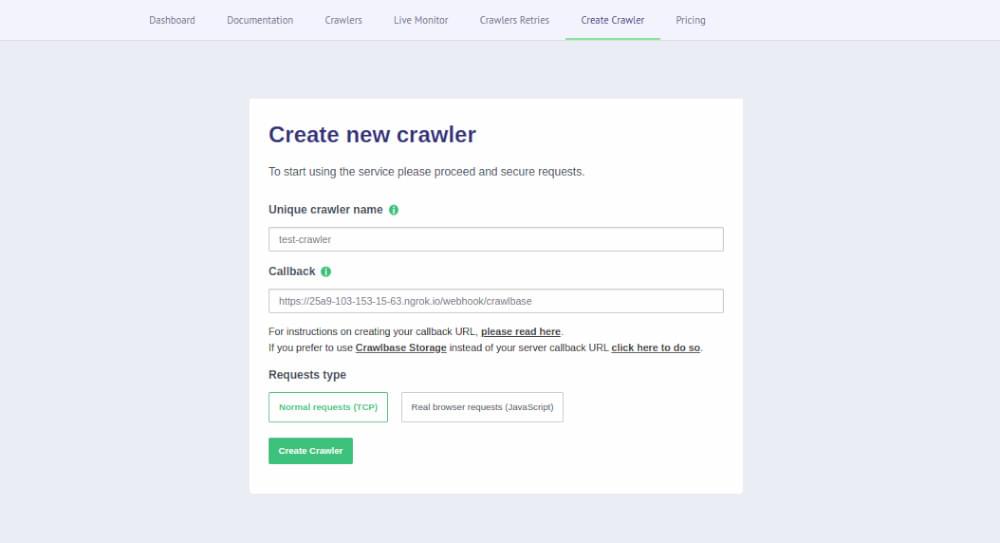

2. Creating a new Crawler from the Crawlbase dashboard

After running ngrok on port 8000, we can see that ngrok provides a public forwarding URL that we can use to create the Crawler. With the free version of ngrok, this link will auto-expire after 2 hours. Now, let’s create a Crawler from the dashboard.

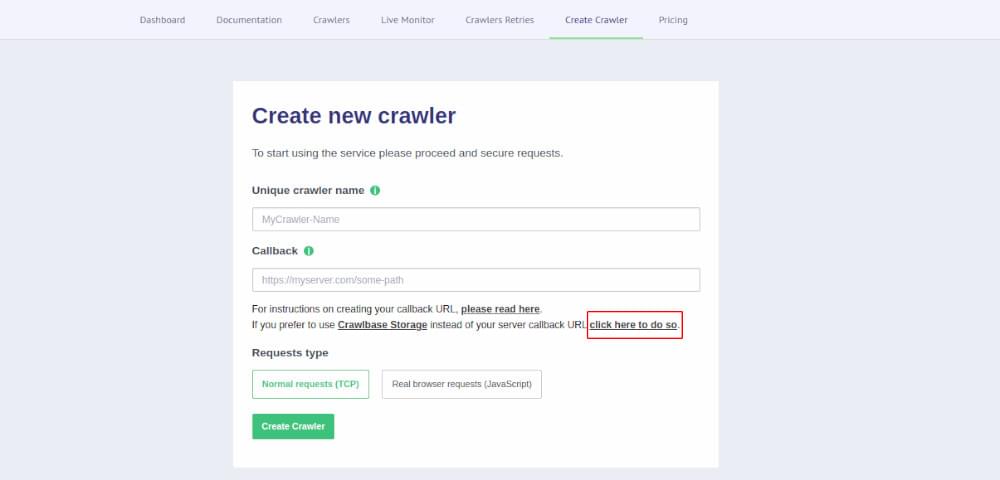

If you would rather not create your own webhook and still want the data your Crawler generates stored securely, Crawlbase offers a solution through its Cloud Storage API. By configuring your Crawler to use the Storage webhook endpoint, you can store the crawled data with added privacy and control. To do so, select the Crawlbase Storage option while creating the Crawler from the dashboard.

You can read more in the Crawlbase Storage API documentation.

Pushing URLs to the Crawler

We have now created a Crawler with the name “test-crawler”. The next step is to push the URLs we want crawled to the Crawler. To push URLs, you must use the Crawling API with two additional parameters: “callback=true” and “crawler=YourCrawlerName”. By default, you can push up to 30 URLs per second to the Crawler. This limit can be changed by contacting customer support. The example below is written in Python and uses the crawlbase library provided by Crawlbase to push URLs to the Crawler.

# To install the crawlbase library

Output:

b'{"rid":"d756c32b0999b1c0507e364f"}'

After running the above code, the Crawling API will push all the URLs to the Crawler queue. Notice that the output contains only the rid (Request ID) and no crawl result. The Crawler returns the rid immediately so that we can track the request and keep pushing URLs without waiting for the crawl itself to finish. This is the asynchronous capability the Crawler provides. We don’t have to worry about the crawl result, as it will be pushed automatically, once ready, to the webhook we provided while creating the Crawler. The Crawler offers several other APIs, which are described in the Crawlbase Crawler documentation.
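The push-and-track flow described above can also be sketched without the crawlbase library, using only the standard library. The base URL `https://api.crawlbase.com/`, the `token` parameter name, and both helper names are assumptions for illustration; `callback=true`, `crawler=YourCrawlerName`, and the `rid` field come from the text above. The sketch only builds the request URL and parses a reply; it does not send anything.

```python
import json
from urllib.parse import urlencode

API_ENDPOINT = "https://api.crawlbase.com/"  # assumed Crawling API base URL


def build_push_url(token: str, crawler_name: str, page_url: str) -> str:
    """Return a Crawling API URL that queues page_url on the named Crawler."""
    params = {
        "token": token,           # assumed name of the auth parameter
        "callback": "true",       # ask for asynchronous delivery
        "crawler": crawler_name,  # the Crawler created in the dashboard
        "url": page_url,          # percent-encoded by urlencode below
    }
    return API_ENDPOINT + "?" + urlencode(params)


def extract_rid(response_body: bytes) -> str:
    """Pull the request ID out of a push response such as b'{"rid":"..."}'."""
    return json.loads(response_body)["rid"]
```

Storing each returned rid next to the URL that produced it is one simple way to keep track of what has been queued.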

Note: The combined total for all Crawler waiting queues is capped at 1M pages. If any of the queues or all queues combined exceed 1M pages, your Crawler push will temporarily pause, and we will notify you via email. Crawler push will automatically resume once pages in the waiting queue(s) are below 1M pages.
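To stay within the default push rate mentioned earlier (30 URLs per second), pushes can be sent in paced batches. The following is a minimal sketch: `push` stands for whatever function actually submits a URL (a hypothetical callable that you supply), and the batch-and-sleep strategy is just one simple choice, not something Crawlbase prescribes.

```python
import time
from typing import Callable, Iterable, Iterator, List


def batches(urls: Iterable[str], size: int = 30) -> Iterator[List[str]]:
    """Yield consecutive groups of at most `size` URLs."""
    batch: List[str] = []
    for url in urls:
        batch.append(url)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def push_paced(
    urls: Iterable[str],
    push: Callable[[str], object],
    per_second: int = 30,
    sleep: Callable[[float], object] = time.sleep,
) -> int:
    """Call push(url) for every URL, starting a new batch at most once per second."""
    pushed = 0
    for group in batches(urls, per_second):
        started = time.monotonic()
        for url in group:
            push(url)
            pushed += 1
        # Wait out the remainder of the one-second window, if any is left.
        remaining = 1.0 - (time.monotonic() - started)
        if remaining > 0:
            sleep(remaining)
    return pushed
```

The `sleep` parameter exists only so the pacing can be replaced in tests; in normal use the default `time.sleep` is enough.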

Receiving data from the Crawler

After you push the URLs, the Crawler will crawl the page associated with each URL and push a response to the webhook, with the crawled HTML as the body.

Headers:

The default format of the response is HTML. If you want to receive the response in JSON format, pass the query parameter “format=json” to the Crawling API when pushing URLs to the Crawler. The JSON response will look like this:

Headers:
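Handling both delivery formats on the receiving side might look like the sketch below. It rests on assumptions that should be checked against the Crawlbase Crawler documentation: that the body may arrive gzip-compressed (signalled by a `Content-Encoding: gzip` header), and that a “format=json” delivery is a JSON object. The function name `decode_delivery` is hypothetical.

```python
import gzip
import json
from typing import Mapping


def decode_delivery(headers: Mapping[str, str], raw_body: bytes, as_json: bool = False):
    """Return the page HTML as str, or the parsed JSON object when as_json is True."""
    # Header names are case-insensitive, so normalise them before the lookup.
    lowered = {name.lower(): value for name, value in headers.items()}

    # Assumption: compressed deliveries are flagged with Content-Encoding: gzip.
    if lowered.get("content-encoding", "").lower() == "gzip":
        raw_body = gzip.decompress(raw_body)

    text = raw_body.decode("utf-8", errors="replace")
    return json.loads(text) if as_json else text
```

Keeping the decoding in one small function means the webhook view itself only has to read the request, call the function, and return a status code.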

In our example, we have received 3 requests from the Crawler since we only pushed 3 URLs to it.

Because our webhook is coded to save each request body into a .txt file, all of the HTML content can be found in that file.

Once you get the HTML at your webhook, you can scrape anything from it, depending on your needs. You can also change the webhook URL of your Crawler at any time from your Crawlbase dashboard. Whenever the Crawler delivers a response to the webhook and your server fails to return a successful response, the Crawler will retry crawling the page and then retry the delivery. Those retries are considered successful requests, so they are charged. If your webhook goes down, the Crawlbase Monitoring Bot will detect it and pause the Crawler; the Crawler will resume automatically when the webhook comes back up. You can contact the Crawlbase technical support team to change these settings as needed. For a more comprehensive understanding, refer to the Crawlbase Crawler documentation.

Enhanced Callback Functionality with Custom Headers

In addition to the standard callback mechanism, Crawlbase provides an optional feature that allows you to receive custom headers through the “callback_headers” parameter. This enhancement empowers you to pass additional data for identification purposes, facilitating a more personalized and efficient integration with your systems.

Custom Header Format:

The format for custom headers is as follows:

HEADER-NAME:VALUE|HEADER-NAME2:VALUE2|and-so-on

The value must be URL-encoded (percent-encoded) so that characters such as “:” and “|” are transferred and interpreted correctly.

Usage Example

For these header and value pairs: { "id": 123, "type": "etc" }

&callback_headers=id%3A123%7Ctype%3Aetc
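The encoding step can be done with the standard library. This sketch joins the pairs in the `HEADER-NAME:VALUE|HEADER-NAME2:VALUE2` format and percent-encodes the result; the function name is an assumption for illustration.

```python
from urllib.parse import quote


def encode_callback_headers(pairs: dict) -> str:
    """Join header pairs as NAME:VALUE|NAME2:VALUE2 and percent-encode them."""
    joined = "|".join(f"{name}:{value}" for name, value in pairs.items())
    # safe="" makes sure ":" and "|" are encoded as %3A and %7C.
    return quote(joined, safe="")


print("&callback_headers=" + encode_callback_headers({"id": 123, "type": "etc"}))
# prints: &callback_headers=id%3A123%7Ctype%3Aetc
```

If the whole query string is built with a helper that already percent-encodes values (such as `urllib.parse.urlencode`), pass it the unencoded `id:123|type:etc` string instead, so the value is not encoded twice.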

Receiving Custom Headers

The Crawler will send all the custom headers in the header section of the response, so you can access them along with your crawled data.

Headers:
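On the receiving side, the custom values can be read like any other request header. A minimal sketch, assuming the headers are available as a name-to-value mapping (as most web frameworks provide) and that they arrive under the names you sent; `pick_custom_headers` is a hypothetical helper.

```python
from typing import Dict, Iterable, Mapping


def pick_custom_headers(headers: Mapping[str, str], names: Iterable[str]) -> Dict[str, str]:
    """Return the requested headers, matching names case-insensitively."""
    lowered = {name.lower(): value for name, value in headers.items()}
    return {name: lowered[name.lower()] for name in names if name.lower() in lowered}
```

For the example above, `pick_custom_headers(request_headers, ["id", "type"])` would return the two values sent through “callback_headers”, and names that were not delivered are simply left out of the result.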

With this feature, you have greater flexibility and control over the information you receive through callbacks. By using custom headers, you can tailor the callback data to your specific requirements, which makes it easier to integrate the Crawler with your own systems.

Conclusion

Crawlbase Crawler offers its users a comprehensive and powerful solution for web crawling and scraping tasks. Capitalizing on its advanced features allows you to seamlessly gather extensive volumes of data, obtain real-time updates, and precisely manage the data extraction process from dynamic websites. As a tool of choice for businesses seeking actionable insights, Crawlbase Crawler is a key enabler of data-led decision-making, giving companies a competitive edge in the modern business landscape.

However, as we harness this powerful tool, it’s important to remember that with great power comes great responsibility. Compliance with website terms of service, adherence to ethical scraping norms, and responsible use of Crawlbase Crawler are paramount to preserving a healthy web ecosystem. Let’s navigate the web responsibly.

Frequently Asked Questions

Q: What are the benefits of using the Crawler vs not using it?

- Efficiency: The Crawler’s asynchronous capabilities allow for faster data extraction from websites, saving valuable time and resources.

- Ease of Use: With its user-friendly design, the Crawler simplifies the process of pushing URLs and receiving crawled data through webhooks.

- Scalability: The Crawler can efficiently handle large volumes of data, making it ideal for scraping extensive websites and dealing with substantial datasets.

- Real-time Updates: By setting the scroll time variable, you can control when the Crawler sends back the scraped website, providing real-time access to the most recent data.

- Data-Driven Decision Making: The Crawler empowers users with valuable insights from web data, aiding in data-driven decision-making and competitive advantage.

Q: Why should I use the Crawler?

- Efficient Data Extraction: The Crawler’s asynchronous nature enables faster and more efficient data extraction from websites, saving time and resources.

- Real-time Data: With the ability to set the scroll time variable, you can receive fresh website data in real-time, ensuring you have the most up-to-date information.

- Easy Integration: The Crawler’s webhook integration simplifies data retrieval, making it seamless to receive crawled pages directly to your server.

- Scalability: The Crawler can handle large-scale data scraping, allowing you to process vast amounts of information without compromising performance.

- Data-Driven Insights: By leveraging the Crawler, you gain access to valuable insights from web data, empowering data-driven decision-making and enhancing your competitive edge.

- Streamlined Workflow: The Crawler’s features eliminate the need for continuous polling, streamlining your data acquisition process and improving overall efficiency.

- User-Friendly Interface: The Crawler’s user-friendly design makes it accessible to developers, CEOs, and technical personnel, ensuring smooth adoption and usage.

- Customizable and Flexible: You can tailor the Crawler to suit your specific requirements, allowing for a highly flexible and customizable web scraping solution.

Overall, using the Crawler simplifies web data extraction, improves data accessibility, and equips you with valuable insights to drive informed decision-making for your business.

Q: Do I need to use Python to use the Crawler?

No, you do not need to use Python to use the Crawlbase Crawler. Crawlbase provides libraries for several programming languages, so you can interact with the Crawler in the language you prefer, whether that is Python, JavaScript, Java, Ruby, or another language. Crawlbase also offers APIs that give access to the Crawler’s capabilities without any specific library, which makes it accessible to developers with different language preferences and technical backgrounds. This flexibility means you can integrate the Crawler into your projects and workflows using the language that best suits your needs.