Web Scraping

Web scraping, or web data extraction, is data scraping used to extract information from websites. Web scraping software may access the World Wide Web directly using the Hypertext Transfer Protocol or through a web browser. While web scraping can be done manually by a user, the term usually refers to automated processes carried out by a bot or web crawler. It is a form of copying in which specific data is gathered and copied from the web, typically into a local database or spreadsheet, for later review or analysis.

Web crawling is the first part of web scraping: fetching pages for later processing. Once a page has been fetched, extraction can take place. The content of the page may be parsed, searched, or reformatted, and its data copied into a spreadsheet or loaded into a database. Web scrapers typically take something out of a page in order to use it for another purpose elsewhere.
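The fetch, extract, and store stages described above can be sketched in a few lines of Python using only the standard library. This is a minimal illustration, not a production scraper: the helper names (`fetch`, `extract_links`, `to_csv`) and the sample HTML are assumptions made for this example, and a hard-coded page stands in for a real download so the sketch runs offline.

```python
import csv
import io
from html.parser import HTMLParser
from urllib.request import urlopen


def fetch(url: str) -> str:
    """Fetch step: download a page and return its HTML as text."""
    with urlopen(url, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class LinkExtractor(HTMLParser):
    """Extraction step: collect the href of every <a> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def extract_links(html: str) -> list[str]:
    parser = LinkExtractor()
    parser.feed(html)
    return parser.links


def to_csv(rows: list[str]) -> str:
    """Storage step: serialize the extracted values as one-column CSV."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["link"])
    for row in rows:
        writer.writerow([row])
    return buffer.getvalue()


# A hard-coded page stands in for fetch("https://example.com") so the
# sketch runs without network access.
SAMPLE_HTML = '<p><a href="/a">A</a> and <a href="/b">B</a></p>'
links = extract_links(SAMPLE_HTML)
csv_text = to_csv(links)
print(csv_text)
```

To scrape a live page, replace `SAMPLE_HTML` with the result of `fetch(url)` for a URL you are permitted to scrape.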

Web scraping is used in a variety of digital businesses that depend on data extraction. Legitimate use cases include:

- Search engine bots crawling a site, analyzing its content, and then ranking it.

- Price comparison sites deploying bots to automatically fetch prices and product descriptions from affiliated seller websites.

- Market research companies using scrapers to pull data from forums and social media.

Web scraping is also used for illegitimate purposes, including price undercutting and the theft of copyrighted content. An online business targeted by a scraper can suffer severe financial losses, particularly if it relies heavily on competitive pricing models or deals in content distribution.

Web Scraping 101: Basic Web Scraping

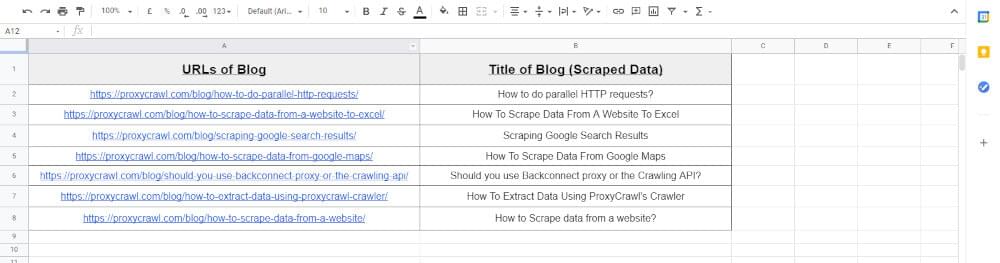

The basics of web scraping for beginners, using Google Sheets, are described below as a step-by-step process.

Step 01:

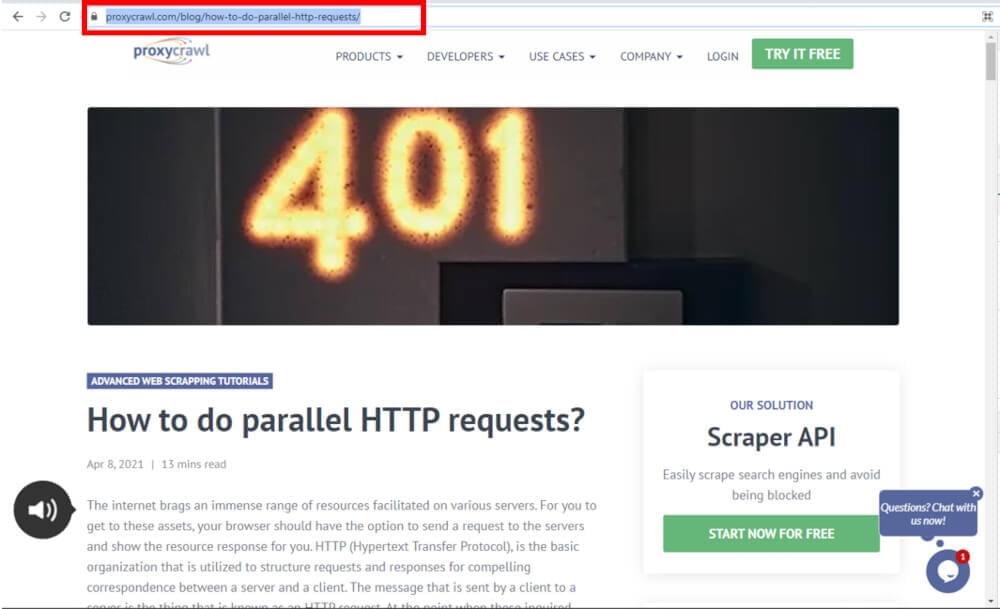

Decide what you want to scrape from the webpage. Here, we intend to scrape blog titles into a Google spreadsheet. The first step is to go to the webpage and copy its URL.

Step 02:

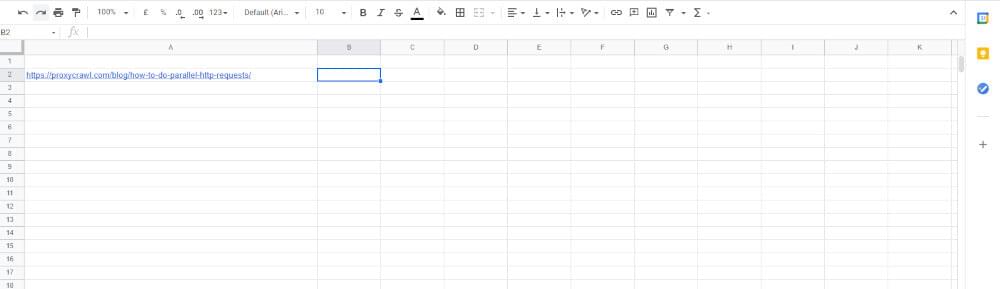

After copying the URL of the webpage whose data you want to scrape, paste it into a cell of the Google spreadsheet. To fetch data from any webpage, you need the URL of that page.

Step 03:

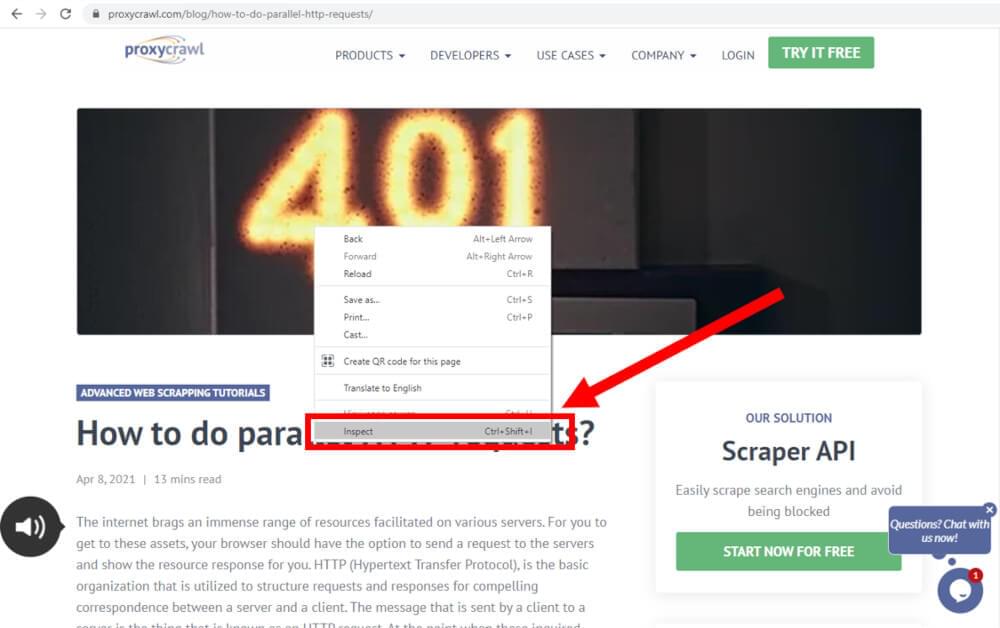

In the next step, go back to the webpage whose URL you copied. Move your cursor to the data element you want to scrape (in this case, the blog title) and right-click it. A context menu opens, as shown in the screenshot. From this menu, select "Inspect". You can also press "Ctrl + Shift + C". Both methods produce the same result.

Step 04:

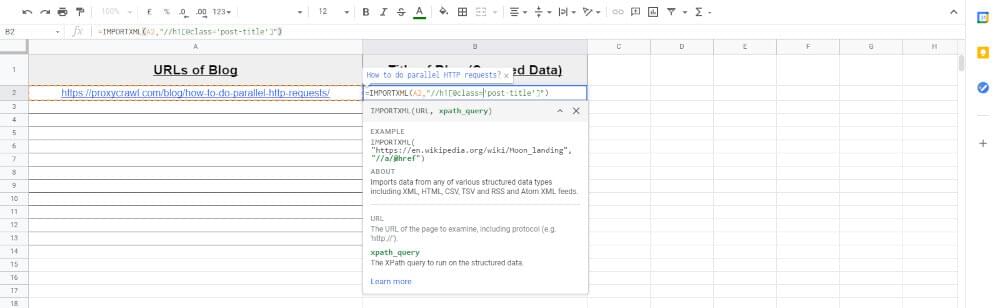

A side panel opens that contains the developer tools. In the "Elements" tab, find the section of code that highlights your required data element, in the right-side portion of the screen as shown in the screenshot.

Now look for the class name and the HTML element that contain the highlighted data we want to scrape, i.e., the title of the blog. In our case, the required information is as follows:

- Class name = "post-title"

- HTML element = "h1"

Note both values.

Step 05:

Now, return to the Google spreadsheet you created. In the cell where you want the scraped data (the blog title) to appear, enter the formula below.

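- Formula: =IMPORTXML(A2, "//h1[@class='post-title']")

- Where A2 = address of the cell that contains the URL

Type the quotation marks as straight quotes; curly quotes pasted from a web page cause the formula to fail.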

After entering the formula in the cell, press "Enter". The data element you wanted to scrape is then fetched and loaded into the spreadsheet cell where you inserted the formula, as shown in the screenshot below.
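For readers who want to see what the XPath expression in the formula selects, here is a rough Python equivalent using only the standard library. It is a sketch under stated assumptions, not how Google Sheets implements IMPORTXML: the `TitleExtractor` class and the sample HTML are made up for illustration, and only the `h1` tag and `post-title` class come from the steps above.

```python
from html.parser import HTMLParser


class TitleExtractor(HTMLParser):
    """Collect the text of every <h1 class="post-title"> element."""

    def __init__(self, tag: str = "h1", css_class: str = "post-title") -> None:
        super().__init__()
        self.tag = tag
        self.css_class = css_class
        self.titles: list[str] = []
        self._inside = False
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        # Mirrors the XPath predicate [@class='post-title']: the class
        # attribute must equal the target value exactly.
        if tag == self.tag and dict(attrs).get("class") == self.css_class:
            self._inside = True
            self._buffer = []

    def handle_data(self, data):
        if self._inside:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if self._inside and tag == self.tag:
            self.titles.append("".join(self._buffer).strip())
            self._inside = False


# Hypothetical page content used only for this example.
SAMPLE_HTML = (
    "<html><body>"
    '<h1 class="post-title"> Web Scraping 101 </h1>'
    '<h1 class="other">Skip me</h1>'
    "</body></html>"
)

extractor = TitleExtractor()
extractor.feed(SAMPLE_HTML)
titles = extractor.titles
print(titles)
```

As with the XPath predicate, an element whose class attribute holds several classes (for example `class="post-title big"`) would not match this exact comparison.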


Step 06:

In the same way, you can extract the required data from any webpage. Just paste the URLs into the respective cells of the spreadsheet. Then, as the final step, drag the fill handle of the cell in which you inserted the formula down across the rows that hold the other URLs. The relative reference A2 adjusts for each row, so every row scrapes its own URL. You can scrape as much data as you want this way.

Web Scraping Basics Using the Crawlbase API:

The Crawlbase API lets developers scrape any site using real web browsers. This means that even if a page is built entirely with JavaScript, Crawlbase can crawl it and return the HTML needed to scrape it. The API handles proxy management, avoids CAPTCHAs and blocks, and manages automated browsers.
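As a rough sketch of what a call to such an API might look like, the snippet below builds a request URL in Python. The endpoint `https://api.crawlbase.com/` and the `token` and `url` query parameters are assumptions made for this example, as is the helper name `build_request_url`; check the Crawlbase documentation for the actual interface before relying on them.

```python
from urllib.parse import urlencode

# Assumed endpoint; verify against the Crawlbase documentation.
CRAWLBASE_ENDPOINT = "https://api.crawlbase.com/"


def build_request_url(token: str, target_url: str) -> str:
    """Build a GET URL that asks the API to fetch target_url.

    The parameter names "token" and "url" are assumptions. urlencode
    percent-encodes the target so its own query string survives intact.
    """
    query = urlencode({"token": token, "url": target_url})
    return f"{CRAWLBASE_ENDPOINT}?{query}"


# "YOUR_TOKEN" is a placeholder, not a working credential.
request_url = build_request_url("YOUR_TOKEN", "https://example.com/page?id=1")
print(request_url)
```

The resulting URL could then be passed to an HTTP client such as `urllib.request.urlopen` to retrieve the page HTML.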

What is Google:

Google follows three fundamental steps to generate results from web pages:

- Crawling

- Indexing

- Serving (and Ranking)

1. Crawling:

The first step is finding out what pages exist on the web. There is no central registry of all web pages, so Google must continually look for new pages and add them to its list of known pages. Some pages are known because Google has already visited them. Other pages are discovered when Google follows a link from a known page to a new page. Still others are discovered when a site owner submits a list of pages (a sitemap) for Google to crawl. If you're using a managed web host, such as Wix or Blogger, it may tell Google to crawl any updated or new pages that you make.

Once Google discovers a page URL, it visits, or crawls, the page to find out what's on it. Google renders the page and analyzes both the text and non-text content, as well as the overall visual layout, to decide where it should appear in search results. The better Google can understand your site, the better it can match it to people who are searching for your content.
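The discovery process described above (start from known pages, follow links, and accept pages submitted through a sitemap) can be illustrated with a breadth-first crawl over a toy link graph. This is a simplified sketch, not Google's crawler: the `LINKS` graph, the page paths, and the `crawl` function are all hypothetical.

```python
from collections import deque

# Hypothetical link graph standing in for the web: page -> pages it links to.
LINKS = {
    "/home": ["/about", "/blog"],
    "/about": ["/home"],
    "/blog": ["/blog/post-1", "/blog/post-2"],
    "/blog/post-1": ["/blog"],
    "/blog/post-2": [],
    "/orphan": [],  # never linked; only discoverable through a sitemap
}


def crawl(seeds, links):
    """Visit pages breadth-first, starting from the already-known seeds."""
    known = set(seeds)
    frontier = deque(seeds)
    visited_order = []
    while frontier:
        page = frontier.popleft()
        visited_order.append(page)
        for target in links.get(page, []):
            if target not in known:  # a newly discovered page
                known.add(target)
                frontier.append(target)
    return visited_order


# Following links alone never reaches the unlinked page.
from_links = crawl(["/home"], LINKS)

# Submitting it as an extra seed (as a sitemap would) makes it discoverable.
with_sitemap = crawl(["/home", "/orphan"], LINKS)

print(from_links)
print(with_sitemap)
```

The `known` set plays the role of the list of known pages: it prevents a page from being queued twice even when several pages link to it.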

2. Indexing:

After a page is discovered, Google tries to understand what the page is about. This process is called indexing. Google analyzes the content of the page, catalogs the images and video files embedded in it, and otherwise tries to understand the page. This information is stored in the Google index.
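A common textbook structure for this kind of lookup is an inverted index, which maps each word to the pages that contain it. The sketch below is a toy illustration of that idea and makes no claim about how Google's index is actually built; the `PAGES` data and the function names are hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical crawled pages: URL -> page text.
PAGES = {
    "/paris": "Bike repair shops in Paris",
    "/hk": "Bike rental and repair in Hong Kong",
    "/news": "Paris weather news",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Map every word to the set of URLs whose text contains it."""
    index: dict[str, set[str]] = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


index = build_index(PAGES)
print(sorted(index["repair"]))
```

Looking up a word is then a single dictionary access rather than a scan of every page.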

3. Serving (and Ranking):

When a user types a query, Google tries to find the most relevant answer from its index based on many factors. It tries to determine the highest-quality answers and factors in other considerations that give the best user experience and most appropriate answer, such as the user's location, language, and device (desktop or phone). For example, searching for "bike repair shops" would show different results to a user in Paris than to a user in Hong Kong. Google doesn't accept payment to rank pages higher; ranking is done automatically.
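To make the idea of combining relevance with user context concrete, here is a deliberately simple scoring sketch. The scoring rule (count of matching query terms plus a small bonus when the page's region matches the user's) is an assumption invented for this example and is not Google's ranking algorithm; the pages and names are hypothetical too.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Page:
    url: str
    text: str
    region: str


# Hypothetical indexed pages.
PAGES = [
    Page("/paris-bikes", "bike repair shops open today", "Paris"),
    Page("/hk-bikes", "bike repair shops open today", "Hong Kong"),
    Page("/paris-cafes", "coffee shops and bakeries", "Paris"),
]


def score(page: Page, query: str, user_region: str) -> float:
    """Count matching query terms; add a bonus if the region matches."""
    words = set(page.text.lower().split())
    matches = sum(1 for term in query.lower().split() if term in words)
    if matches == 0:
        return 0.0  # irrelevant pages get no location bonus
    region_bonus = 0.5 if page.region == user_region else 0.0
    return matches + region_bonus


def search(query: str, user_region: str) -> list[str]:
    """Return URLs of matching pages, best score first."""
    scored = [(score(page, query, user_region), page.url) for page in PAGES]
    relevant = [item for item in scored if item[0] > 0]
    ranked = sorted(relevant, key=lambda item: -item[0])
    return [url for _, url in ranked]


paris_results = search("bike repair shops", "Paris")
hk_results = search("bike repair shops", "Hong Kong")
print(paris_results)
print(hk_results)
```

The same query returns the same pages in a different order depending on the user's region, which mirrors the Paris and Hong Kong example above.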

Google Web Scraping – Crawlbase API:

Web scraping through APIs is an effective way to gather data from websites and applications for later use in data analytics.

The application programming interfaces (APIs) for Google SERPs and images are powered by Crawlbase's artificial intelligence framework, which is designed to handle the load from a client's application and reduces project costs, since you will not have to purchase proxies again and again. Output is delivered in JSON format, backed by proxies, and accessed through the API.

The Crawlbase Google Scraper was not originally built as a regular web scraper but as a scraping API; you can use it to extract structured data from Google search engine result pages. The data you can scrape includes keyword-related data such as "People also ask" entries, related searches, ads, and more. This means that the Crawlbase Google Scraper is aimed not at non-coders but at coders who want to avoid dealing with proxies, CAPTCHAs, and blocks. It is easy to use and effective.

What is Yahoo:

Yahoo! has been a web staple since the mid-'90s. It may not be as popular as other web giants these days, but its user base is still substantial. Yahoo also provides quality content that can't be ignored, such as news, shopping, finance, and sports, and its search engine is still a valuable option for people or organizations who need to gather a wide range of data for lead generation, advertising, or SEO.

Crawlbase lets you crawl and scrape as much content as you need from Yahoo pages without limitations. You simply make a basic API call and the A.I. takes care of the rest.

Yahoo Web Scraping – Crawlbase API:

The Crawlbase API is optimized for scraping data from web pages. Extract any data you need from Yahoo with the help of its crawling and scraping tools. It is highly scalable, offers unlimited bandwidth through worldwide proxies, and provides easy-to-use, beginner-friendly APIs built for maximum efficiency. You can also scrape Yahoo News quickly using this API: make a simple GET request to the API and get immediate access to the full HTML source code of Yahoo News, so you can scrape the data you need for your business. Extract data for your projects without worrying about setting up proxies or infrastructure; the API handles that for you. You can get the full HTML code and scrape any content that you need. For large projects, you can use the Crawler with asynchronous callbacks to save on cost, retries, and bandwidth.

What is Bing:

Microsoft Bing (formerly known simply as Bing) is a web search engine owned and operated by Microsoft. The service has its origins in Microsoft's previous search engines: MSN Search, Windows Live Search, and later Live Search. Bing provides a variety of search services, including web, video, image, and map search products. It is developed using ASP.NET.

Bing Web Scraping – Crawlbase API:

If you have ever needed to scrape Bing search results, you know how hard it is: Bing will block your requests, and you have to keep changing your setup and infrastructure to keep getting the data without hitting Bing CAPTCHAs and blocks. With Crawlbase's Crawling API, this problem goes away, and you can focus on what matters: building and improving your service and your business to bring in new customers. It scrapes Bing search results securely and at scale, and the API for Bing is easy to use with instant validation. You can also start crawling Bing SERP pages for ads right away.

Conclusion:

Web data scraping extracts data from web pages so that it can be used for data analytics in e-commerce, business and trade, and study and research.

Google, Yahoo, and Bing are all eager to capture a larger market share, which keeps them constantly making advancements and improvements to their search engines so that they stay ahead of their competitors. However, Google is the most efficient and well-structured search engine, having aced practically all of the previously mentioned criteria.

You can scrape data with the basic technique in Google Sheets using the IMPORTXML function. You can also scrape data using APIs provided by different tools, such as the Crawlbase APIs discussed above. These APIs provide prebuilt functions that support web data scraping with customization and flexibility. The scraped data can then be put to many uses.