Among the many search engines available today, Yandex is a prominent player, particularly in Russia and neighboring countries. Just as Google dominates many parts of the world, Yandex holds a significant market share in Russia, with estimates suggesting it captures over 50% of the country’s search engine market. Beyond search, Yandex’s ecosystem spans more than 20 products and services, including maps, mail, and cloud storage. Yandex processes billions of search queries every month, which makes its search results a valuable data source for businesses, researchers, and data enthusiasts.

However, manually accessing and analyzing this wealth of data can take time and effort. This is where web scraping Yandex comes into play. By utilizing Python and the Crawlbase Crawling API, we can automate the process of gathering and scraping Yandex search results, providing valuable insights and data points that can drive decision-making and research.

In this guide, we’ll focus specifically on how to scrape Yandex search results. Whether you want to understand Yandex’s structure, set up your environment to scrape Yandex efficiently, or store the scraped data for future analysis, this guide covers it.

Table Of Contents

- Benefits of Yandex Search Results

- Practical Uses of Yandex Data

- Layout and Structure of Yandex Search Pages

- Key Data Points to Extract

- Installing Python and required libraries

- Choosing the Right Development IDE

- Crawlbase Registration and API Token

- Crafting the URL for Targeted Scraping

- Making HTTP Requests using the Crawling API

- Inspecting HTML to Get CSS Selectors

- Extracting Search Result Details

- Handling Pagination

- Storing Scraped Data in CSV File

- Storing Scraped Data in SQLite Database

Why Scrape Yandex Search Results?

You get many results when you type something into Yandex and hit search. Have you ever wondered what more you could do with these results? That’s where scraping comes in. Let’s dive into why scraping Yandex can be a game-changer.

Benefits of Yandex Search Results

- Spotting Trends: Yandex gives us a window into what’s buzzing online. We can determine what topics or products are becoming popular by studying search patterns. For example, if many people search for “winter jackets” in October, it hints that winter shopping trends are starting early.

- Knowing Your Competition: If you have a business or a website, you’d want to know how you stack up against others. By scraping Yandex, you can see which websites appear often in searches related to your field. This gives insights into what others are doing right and where you might need to catch up.

- Content Creation: Are you a blogger, vlogger, or writer? Knowing what people are looking for on Yandex can guide your content creation. If “easy cookie recipes” are trending, maybe it’s time to share your favorite cookie recipe or make a video about it.

- Boosting Your Own Website: Every website wants to appear on the first page of Yandex. Website owners can tweak their content by understanding search patterns and popular keywords. This way, they have a better chance of appearing when someone searches for related topics.

Practical Uses of Yandex Data

- Comparing Prices: Many people check prices on different websites before buying something. You can gather this price data and make informed decisions by scraping Yandex.

- Research and Learning: For students, teachers, or anyone curious, Yandex search data can be a goldmine. You can learn about the interests, concerns, and questions of people in different regions.

- News and Reporting: Journalists and news outlets can use Yandex data to understand what news topics are gaining traction. This helps them prioritize stories and deliver content that resonates with readers.

To summarize, Yandex search results are more than just a list. They offer valuable insights into what people think, search, and want online. By scraping and analyzing this data, we can make smarter decisions, create better content, and stay ahead in the digital game.

Understanding Yandex’s Structure

When you visit Yandex and type in a search, the page you see isn’t random. It’s designed in a specific way. Let’s briefly examine how Yandex’s search page is put together and the essential things we can pick out from it.

Layout and Structure of Yandex Search Pages

Imagine you’re looking at a newspaper. There are headlines at the top, main stories in the middle, and some ads or side stories on the sides. Yandex’s search page is a bit like that.

- Search Bar: This is where you type what you’re looking for.

- Search Results: After typing, you get a list of websites related to your search. These are the main stories, like the main news articles in a newspaper.

- Side Information: Sometimes, there are extra bits on the side. These could be ads, related searches, or quick answers to common questions.

- Footer: At the bottom, there might be links to other Yandex services or more information about privacy and terms.

Key Data Points to Extract

Now that we know how Yandex’s page looks, what information can we take from it?

- Search Results: This is the main thing we want. It’s a list of websites related to our search. If we’re scraping, we’d focus on getting these website links.

- Title of Websites: Next to each link is a title. This title gives a quick idea of what the website is about.

- Website Description: Under the title, there’s usually a small description or snippet from the website. This can tell us more about the website’s content without clicking on it.

- Ads: Sometimes, the first few results might be ads. These are websites that paid Yandex to show up at the top. Knowing which results are ads and which are organic (not paid for) is good.

- Related Searches: At the bottom of the page, other search suggestions might be related to what you typed. These can give ideas for more searches or related topics.

Understanding Yandex’s structure helps us know where to look and what to focus on when scraping. Knowing the layout and key data points allows us to gather the information we need more efficiently.

Setting up Your Environment

Before scraping Yandex search results, we need to get our setup ready: install the required tools and libraries, pick an IDE, and obtain the API credentials.

Installing Python and Required Libraries

The first step in setting up your environment is to ensure you have Python installed on your system. If you still need to install Python, download it from the official website at python.org.

Once you have Python installed, the next step is to make sure you have the required libraries for this project. In our case, we’ll need three main libraries:

- Crawlbase Python Library: This library will be used to make HTTP requests to the Yandex search page using the Crawlbase Crawling API. To install it, you can use pip with the following command:

pip install crawlbase

- Beautiful Soup 4: Beautiful Soup is a Python library that makes it easy to scrape and parse HTML content from web pages. It’s a critical tool for extracting data from the web. You can install it using pip:

pip install beautifulsoup4

- Pandas: Pandas is a powerful data manipulation and analysis library in Python. We’ll use it to store and manage the scraped data. Install pandas with pip:

pip install pandas

Choosing the Right Development IDE

An Integrated Development Environment (IDE) provides a coding environment with features like code highlighting, auto-completion, and debugging tools. While you can write Python code in a simple text editor, an IDE can significantly improve your development experience.

Here are a few popular Python IDEs to consider:

- PyCharm: PyCharm is a robust IDE with a free Community Edition. It offers features like code analysis, a visual debugger, and support for web development.

- Visual Studio Code (VS Code): VS Code is a free, open-source code editor developed by Microsoft. Its vast extension library makes it versatile for various programming tasks, including web scraping.

- Jupyter Notebook: Jupyter Notebook is excellent for interactive coding and data exploration. It’s commonly used in data science projects.

- Spyder: Spyder is an IDE designed for scientific and data-related tasks. It provides features like a variable explorer and an interactive console.

Crawlbase Registration and API Token

To use the Crawlbase Crawling API for making HTTP requests to Yandex, you need a Crawlbase account. Follow these steps to set one up:

- Visit the Crawlbase Website: Open your web browser and navigate to the Crawlbase website Signup page to begin the registration process.

- Provide Your Details: You’ll be asked to provide your email address and create a password for your Crawlbase account. Fill in the required information.

- Verification: After submitting your details, you may need to verify your email address. Check your inbox for a verification email from Crawlbase and follow the instructions provided.

- Login: Once your account is verified, return to the Crawlbase website and log in using your newly created credentials.

- Access Your API Token: You’ll need an API token to use the Crawlbase Crawling API. You can find your tokens in your Crawlbase account after logging in.

Note: Crawlbase offers two types of tokens: a Normal Token for static websites and a second token for dynamic or JavaScript-driven websites. Since we’re scraping Yandex, we’ll opt for the Normal Token. Crawlbase offers an initial allowance of 1,000 free requests for the Crawling API, which is enough to follow along with this project.

With Python and the required libraries installed, the IDE of your choice set up, and your Crawlbase token in hand, you’re well-prepared to start scraping Yandex search results.

Fetching and Parsing Search Results

Scraping Yandex search results involves several steps, from crafting the right URL to parsing the returned HTML and handling pagination. This section walks you through each step, giving you a clear roadmap to fetch and parse Yandex search results successfully.

Crafting the URL for Targeted Scraping

Yandex, like many search engines, provides a straightforward method to structure URLs for specific search queries. By understanding this structure, you can tailor your scraping process to fetch exactly what you need.

- Basic Structure: A typical Yandex search URL starts with the main domain followed by the search parameters. For instance:

# Replace your_search_query_here with the desired search term.

- Advanced Parameters: Yandex offers various parameters that allow for more refined searches. Some common parameters include:

- &lr=: The “lr” parameter takes a numeric Yandex region ID (not a language code) and restricts results to that region.

- &p=: For pagination, allowing you to navigate through different result pages.

- Encoding: Ensure that the search query is properly encoded. This is crucial, especially if your search terms contain special characters or spaces. You can use Python libraries like urllib.parse to handle this encoding seamlessly.

By mastering the art of crafting URLs for targeted scraping on Yandex, you empower yourself to extract precise and relevant data, ensuring that your scraping endeavors yield valuable insights.
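As a rough illustration of the points above, here is a minimal sketch of building an encoded search URL. It assumes the search endpoint is https://yandex.com/search/ and that the query goes in a "text" parameter; confirm both against a search you run in your own browser. The helper name build_search_url is made up for this example.

```python
from urllib.parse import urlencode

# Assumed base endpoint for Yandex web search; verify it in your browser first.
YANDEX_SEARCH_URL = "https://yandex.com/search/"


def build_search_url(query, page=None, lr=None):
    """Return a Yandex search URL with a properly encoded query string."""
    params = {"text": query}  # "text" is assumed to carry the search term
    if lr is not None:
        params["lr"] = lr  # passed through unchanged
    if page is not None:
        params["p"] = page  # pagination parameter
    # urlencode percent-encodes special characters and turns spaces into "+"
    return f"{YANDEX_SEARCH_URL}?{urlencode(params)}"


print(build_search_url("Winter Jackets"))
print(build_search_url("jackets & coats", page=1))
```

Building the query string with urlencode rather than string concatenation keeps characters such as "&" or spaces inside the search term from breaking the URL.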

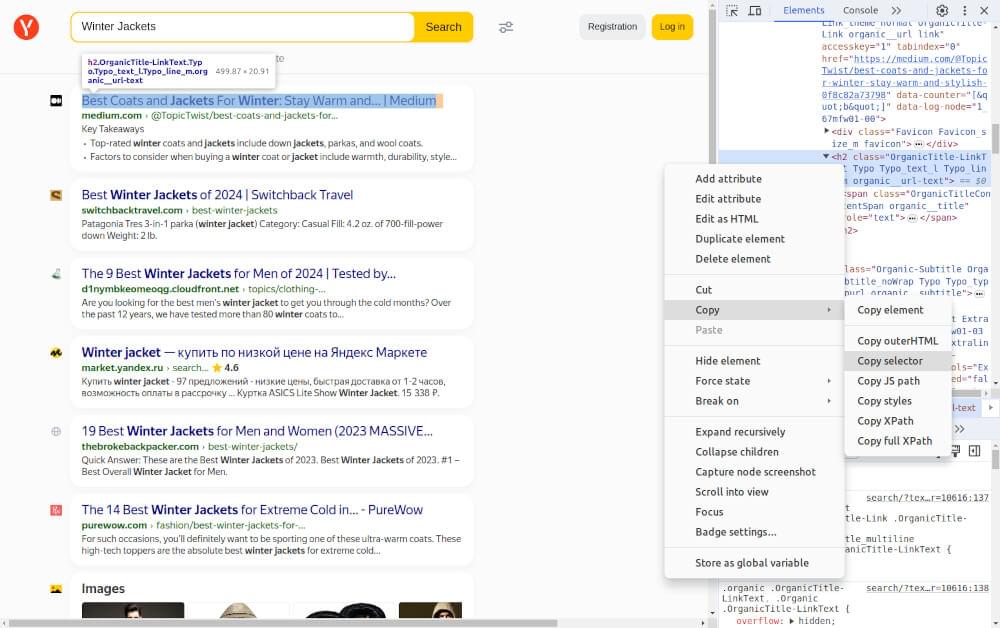

Making HTTP Requests using the Crawling API

Once we have our URL, the next step is to fetch the HTML content of the search results page. Platforms like Yandex monitor frequent requests from the same IP, potentially leading to restrictions or bans. This is where the Crawlbase Crawling API shines, offering a solution with its IP rotation mechanism.

Let’s use “Winter Jackets” as our target search query. Below is a code snippet illustrating how to leverage the Crawling API:

from crawlbase import CrawlingAPI
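The original listing is not fully reproduced here, so the following is only a minimal sketch of such a request, not the exact script. It assumes the crawlbase library's CrawlingAPI object exposes get(url) and returns a dictionary with "status_code" and "body" keys, that the search URL follows the https://yandex.com/search/?text=... pattern, and that the token is read from a hypothetical CRAWLBASE_TOKEN environment variable.

```python
import os


def fetch_page_html(api, url):
    """Fetch a page through the Crawling API and return its HTML, or None."""
    response = api.get(url)
    if response["status_code"] == 200:
        body = response["body"]
        # The body may arrive as bytes; decode those as UTF-8
        return body.decode("utf-8") if isinstance(body, bytes) else body
    print(f"Request failed with status code {response['status_code']}")
    return None


def main(token):
    # Imported here so fetch_page_html can be exercised without the library
    from crawlbase import CrawlingAPI

    api = CrawlingAPI({"token": token})
    # Assumed URL pattern for a "Winter Jackets" search
    html = fetch_page_html(api, "https://yandex.com/search/?text=Winter+Jackets")
    if html:
        print(html)


if __name__ == "__main__":
    # CRAWLBASE_TOKEN is a hypothetical variable name chosen for this sketch
    token = os.environ.get("CRAWLBASE_TOKEN")
    if token:
        main(token)
    else:
        print("Set CRAWLBASE_TOKEN to run this example against the live API.")
```

Passing the API object into fetch_page_html keeps the function easy to test with a stand-in object, and reading the token from the environment keeps it out of source control.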

Executing the Script

After ensuring your environment is set up and the necessary dependencies are installed, running the script becomes a breeze:

- Save the script with a .py extension, e.g., yandex_scraper.py.

- Launch your terminal or command prompt.

- Navigate to the directory containing the script.

- Execute the script using:

python yandex_scraper.py

Running this script sends the “Winter Jackets” search to Yandex through the Crawling API and prints the returned HTML content in your terminal.

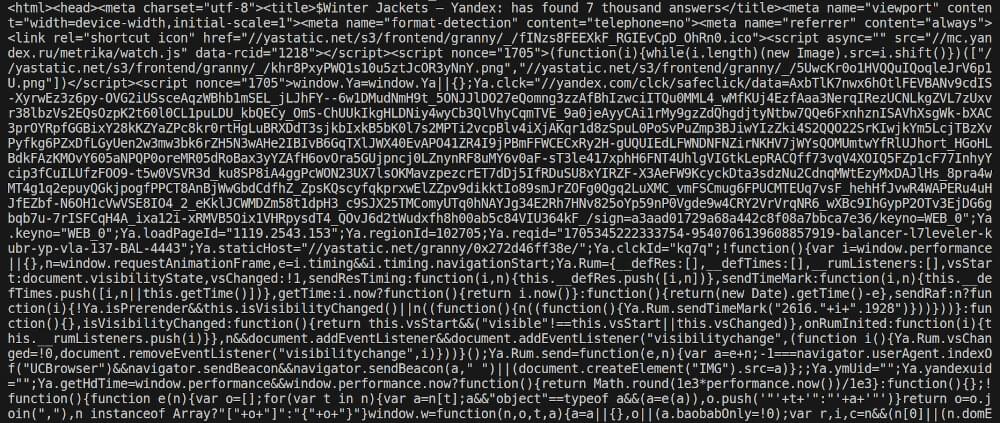

Inspecting HTML to Get CSS Selectors

With the HTML content obtained from the search results page, the next step is to analyze its structure and pinpoint where the search result data lives. This is where the browser’s developer tools come in. Here is how you can inspect the HTML structure and find the CSS selectors you need:

- Open the Web Page: Navigate to the Yandex search URL you intend to scrape and open it in your web browser.

- Right-Click and Inspect: Right-click an element you wish to extract and select “Inspect” or “Inspect Element” from the context menu. This opens the browser’s developer tools.

- Locate the HTML Source: The developer tools show the HTML source of the page. Hover your cursor over elements in the HTML panel, and the corresponding portions of the page are highlighted.

- Identify CSS Selectors: Right-click the element within the developer tools and choose “Copy” > “Copy selector.” This copies the element’s CSS selector to your clipboard, ready to use in your scraper.

Once you have these selectors, you can proceed to structure your Yandex scraper to extract the required information effectively.

Extracting Search Result Details

Python provides handy tools to navigate and understand web content, with BeautifulSoup being a standout choice.

Previously, we identified CSS selectors, which act like markers that direct our program to the exact data we need on a webpage. For instance, we might want the title, URL, and description of each search result. The position of a result is not available as text on the page, but we can record it from the order in which results appear. Here’s how we can update our previous script and extract these details using BeautifulSoup:

from crawlbase import CrawlingAPI

The fetch_page_html function sends an HTTP GET request to Yandex’s search results page using the CrawlingAPI library and a specified URL. If the response status code is 200, indicating success, it decodes the UTF-8 response body and returns the HTML content; otherwise, it prints an error message and returns None.

Meanwhile, the scrape_yandex_search function utilizes BeautifulSoup to parse the HTML content of the Yandex search results page. The function iterates through the search results, structures the extracted information, and appends it to the search_results list. Finally, the function returns the compiled list of search results.

The main function is like a control center, starting the process of getting and organizing Yandex search results for a particular search query. It then shows the gathered results in an easy-to-read JSON-style format.
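To make the parsing step concrete, here is a hedged sketch of a scrape_yandex_search function. The four selectors are hypothetical placeholders, since Yandex changes its markup frequently; replace them with the ones you copied from the developer tools. The sample_html snippet is invented purely so the example runs without a network request.

```python
import json

from bs4 import BeautifulSoup

# Hypothetical selectors: swap in the ones copied from your browser.
RESULT_SELECTOR = "li.serp-item"
TITLE_SELECTOR = "h2"
LINK_SELECTOR = "a[href]"
DESCRIPTION_SELECTOR = "div.organic__content-wrapper"


def scrape_yandex_search(html_content):
    """Parse a results page into a list of dictionaries, one per result."""
    soup = BeautifulSoup(html_content, "html.parser")
    search_results = []
    for result in soup.select(RESULT_SELECTOR):
        title = result.select_one(TITLE_SELECTOR)
        link = result.select_one(LINK_SELECTOR)
        description = result.select_one(DESCRIPTION_SELECTOR)
        if title is None or link is None:
            continue  # skip blocks that are not regular results
        search_results.append({
            # Position is derived from the order of the kept results
            "position": len(search_results) + 1,
            "title": title.get_text(strip=True),
            "url": link["href"],
            "description": description.get_text(strip=True) if description else None,
        })
    return search_results


# Invented markup that mimics the assumed structure
sample_html = """
<ul>
  <li class="serp-item">
    <h2>Winter Jackets Shop</h2>
    <a href="https://example.com/jackets">link</a>
    <div class="organic__content-wrapper">Warm jackets for cold days.</div>
  </li>
  <li class="serp-item"><span>widget without a title</span></li>
</ul>
"""

print(json.dumps(scrape_yandex_search(sample_html), indent=2))
```

Checking each element for None before reading it keeps the loop from crashing when a result block lacks a title, link, or description.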

Handling Pagination

Navigating through multiple pages is a common challenge when scraping Yandex search results. Understanding the structure of the HTML elements indicating page navigation is crucial. Typically, you’ll need to dynamically craft URLs for each page, adjusting parameters like page numbers accordingly. Implementing a systematic iteration through pages in your script ensures comprehensive data extraction. To optimize efficiency and prevent overloading Yandex’s servers, consider introducing delays between requests, adhering to responsible web scraping practices. Here’s how we can update our previous script to handle pagination:

from crawlbase import CrawlingAPI

The script iterates through multiple pages using a while loop, fetching the HTML content for each page. To respect the website’s server, a 2-second delay is introduced between requests. Search results are then extracted and aggregated in the all_search_results list. This systematic approach ensures the script navigates through various pages, retrieves the HTML content, and accumulates search results, effectively handling pagination during the scraping process.
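The loop described above might look roughly like the sketch below. It is an illustration under assumptions: the "p" parameter is assumed to be zero-based, the URL pattern is assumed as before, and the function name scrape_all_pages and its max_pages and delay_seconds parameters are made up for this example. The fetching and parsing functions are passed in as arguments so the loop can be tried without network access.

```python
import time
from urllib.parse import urlencode


def scrape_all_pages(query, fetch_html, parse_results, max_pages=3, delay_seconds=2):
    """Collect results from up to max_pages result pages."""
    all_search_results = []
    page_number = 0  # assumption: Yandex numbers pages from 0
    while page_number < max_pages:
        url = "https://yandex.com/search/?" + urlencode({"text": query, "p": page_number})
        html = fetch_html(url)
        if html is None:
            break  # stop on a failed request
        page_results = parse_results(html)
        if not page_results:
            break  # an empty page suggests there is nothing left to fetch
        all_search_results.extend(page_results)
        page_number += 1
        if page_number < max_pages:
            time.sleep(delay_seconds)  # pause between requests
    return all_search_results
```

With the functions named in this guide, a call could look like scrape_all_pages("Winter Jackets", lambda url: fetch_page_html(api, url), scrape_yandex_search), where api is your CrawlingAPI instance.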

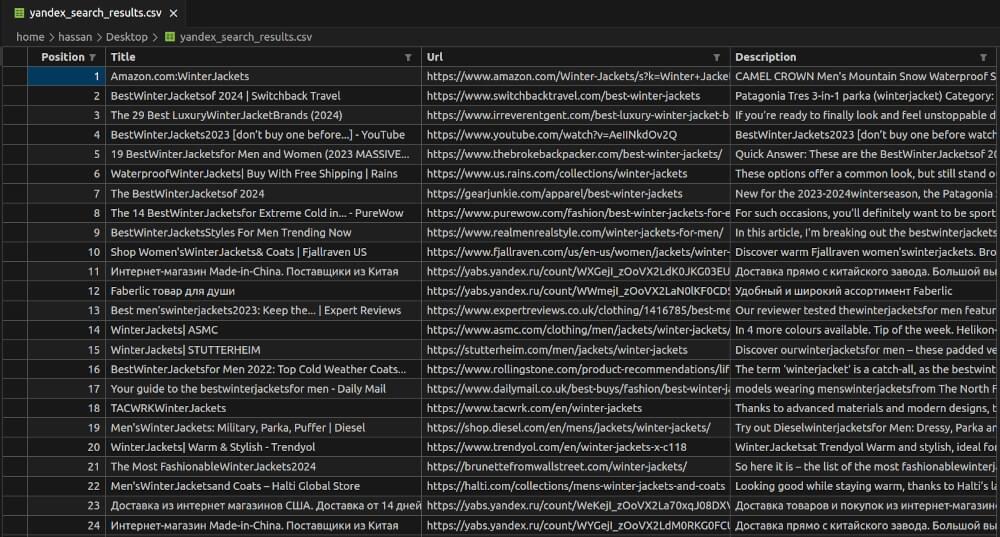

Storing the Scraped Data

After successfully scraping data from Yandex’s search results, the next crucial step is storing this valuable information for future analysis and reference. In this section, we will explore two common methods for data storage: saving scraped data in a CSV file and storing it in an SQLite database. These methods allow you to organize and manage your scraped data efficiently.

Storing Scraped Data in CSV File

CSV is a widely used format for storing tabular data. It’s a simple and human-readable way to store structured data, making it an excellent choice for saving your scraped Yandex search results data.

We’ll extend our previous web scraping script to include a step for saving the scraped data into a CSV file using the popular Python library, pandas. Here’s an updated version of the script:

from crawlbase import CrawlingAPI

In this updated script, we’ve introduced pandas, a powerful data manipulation and analysis library. After scraping and accumulating the search results in the all_search_results list, we create a pandas DataFrame from this data. Then, we use the to_csv method to save the DataFrame to a CSV file named “yandex_search_results.csv” in the current directory. Setting index=False ensures that we don’t save the DataFrame’s index as a separate column in the CSV file.
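The storage step on its own is short. The sketch below assumes all_search_results is a list of dictionaries with keys such as position, title, url, and description; the helper name save_results_to_csv is hypothetical.

```python
import pandas as pd


def save_results_to_csv(all_search_results, path="yandex_search_results.csv"):
    """Write the scraped results to a CSV file and return the DataFrame."""
    df = pd.DataFrame(all_search_results)
    # index=False leaves the DataFrame's row index out of the file
    df.to_csv(path, index=False, encoding="utf-8")
    return df
```

Returning the DataFrame lets you inspect or filter the results in the same session without reading the file back.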

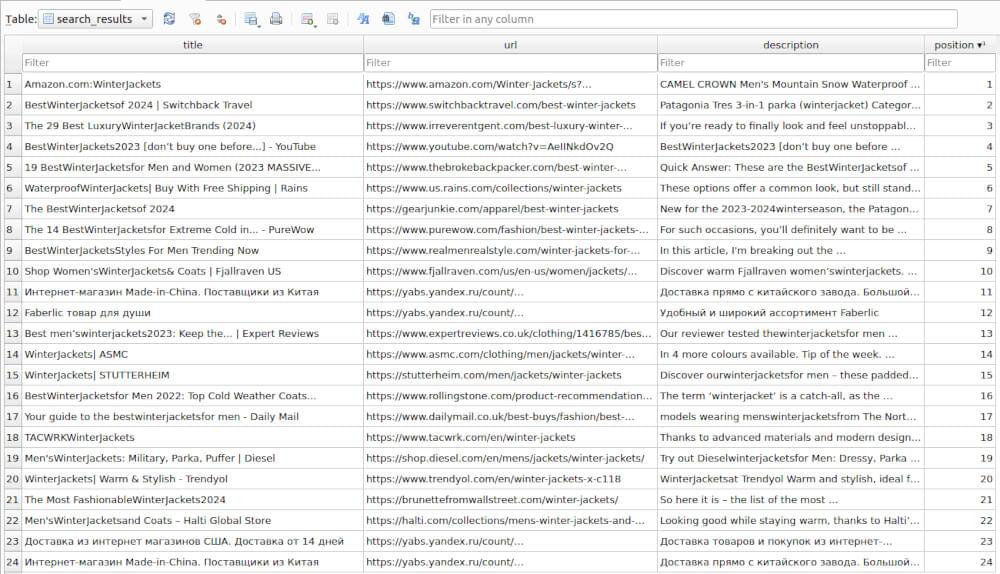

yandex_search_results.csv File Snapshot:

Storing Scraped Data in SQLite Database

If you prefer a more structured and query-friendly approach to data storage, SQLite is a lightweight, serverless database engine that can be a great choice. You can create a database table to store your scraped data, allowing for efficient data retrieval and manipulation. Here’s how you can modify the script to store data in an SQLite database:

from crawlbase import CrawlingAPI

Two functions, initialize_database() and insert_search_results(result_list), manage the SQLite database. The initialize_database() function creates or connects to a database file named search_results.db and defines a table structure to store the search results. The insert_search_results(result_list) function inserts the scraped search results into this table, which is named search_results.
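A minimal sketch of these two functions, using Python’s built-in sqlite3 module, could look as follows. The column layout (position, title, url, description) is an assumption based on the fields discussed earlier, and the optional db_path argument is added here only to make the functions easier to try against a scratch file.

```python
import sqlite3

DB_PATH = "search_results.db"


def initialize_database(db_path=DB_PATH):
    """Create the search_results table if it does not exist yet."""
    conn = sqlite3.connect(db_path)
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                """
                CREATE TABLE IF NOT EXISTS search_results (
                    id INTEGER PRIMARY KEY AUTOINCREMENT,
                    position INTEGER,
                    title TEXT,
                    url TEXT,
                    description TEXT
                )
                """
            )
    finally:
        conn.close()


def insert_search_results(result_list, db_path=DB_PATH):
    """Insert a list of result dictionaries into the search_results table."""
    rows = [
        (r.get("position"), r.get("title"), r.get("url"), r.get("description"))
        for r in result_list
    ]
    conn = sqlite3.connect(db_path)
    try:
        with conn:
            # Parameter placeholders avoid SQL injection from scraped text
            conn.executemany(
                "INSERT INTO search_results (position, title, url, description) "
                "VALUES (?, ?, ?, ?)",
                rows,
            )
    finally:
        conn.close()
```

Once the data is stored, a query such as SELECT title, url FROM search_results ORDER BY position retrieves the results in ranked order.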

search_results Table Snapshot:

Final Words

This guide has provided the necessary insights to scrape Yandex search results utilizing Python and the Crawlbase Crawling API. As you continue your web scraping journey, remember the versatility of these skills extends beyond Yandex. Explore our additional guides for platforms like Google and Bing, broadening your search engine scraping expertise.

Here are some other web scraping Python guides you might want to look at:

📜 How to scrape Images from DeviantArt

We understand that web scraping can present challenges, and it’s important that you feel supported. Therefore, if you require further guidance or encounter any obstacles, please do not hesitate to reach out. Our dedicated team is committed to assisting you throughout your web scraping endeavors.

Frequently Asked Questions

Q. What is Yandex?

Yandex is a leading search engine, often called the “Google of Russia.” It’s not just a search engine; it’s a technology company that provides various digital services, including search, maps, email, and cloud storage. Originating in Russia, Yandex has expanded its services to neighboring countries and has become a significant player in the tech industry.

Q. Why would someone want to scrape Yandex search results?

There can be several reasons someone might consider scraping Yandex search results. Researchers might want to analyze search patterns, businesses might want to gather market insights, and developers might want to integrate search results into their applications. By scraping search results, one can understand user behavior, track trends, or create tools that rely on real-time search data.

Q. Is it legal to scrape Yandex?

The legality of web scraping depends on various factors, including the website’s terms of service. Yandex, like many other search engines, has guidelines and terms of service in place. It’s crucial to review and understand these terms before scraping. Always ensure that the scraping activity respects Yandex’s robots.txt file, doesn’t overload their servers, and doesn’t violate any copyrights or privacy laws. If in doubt, seeking legal counsel or using alternative methods to obtain the required data is advisable.

Q. How can I prevent my IP from getting blocked while scraping?

Getting your IP blocked is a common challenge when scraping websites. Tools like the Crawlbase Crawling API help mitigate this risk. The API offers IP rotation, automatically switching between multiple IP addresses, so that only a few requests come from any single IP in a short period. This reduces the chances of triggering security measures like IP bans. Additionally, it’s essential to add delays between requests, set an appropriate User-Agent header, and respect any rate-limiting rules the website sets to maintain a smooth scraping process.