Target, a retail juggernaut, boasts a robust online platform attracting millions of visitors. With a user-friendly interface and an extensive product catalog, Target’s website has become a treasure trove for data enthusiasts. The site features diverse categories, from electronics and apparel to home goods, making it a prime target for those seeking comprehensive market insights.

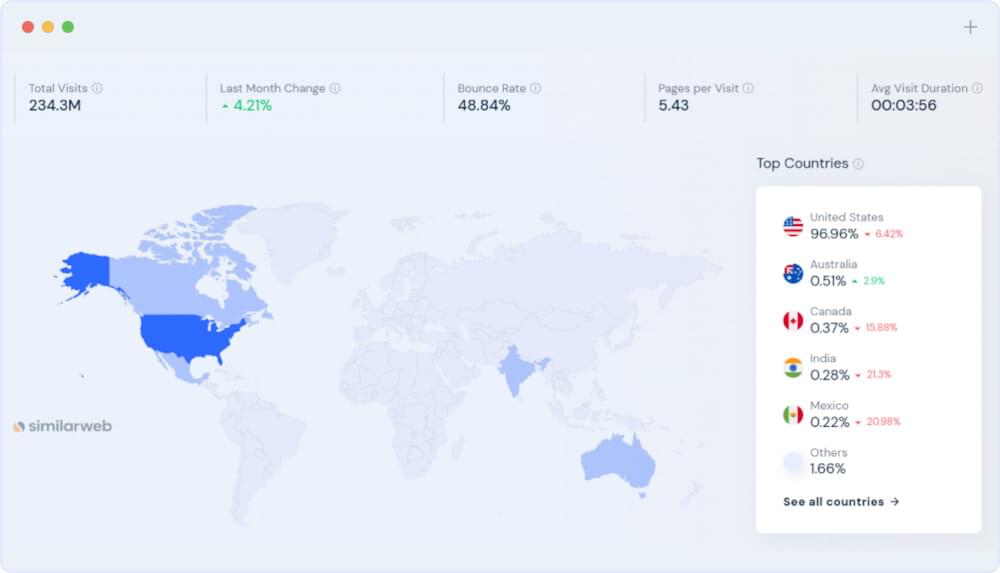

With millions of product pages, customer reviews, and dynamic pricing information, Target’s website offers vast potential for data extraction. As of December 2023, statistics underscore the significance of Target’s online presence: a staggering 234.3 million people from around the globe visited the site, predominantly from the United States.

Whether tracking product trends, monitoring competitor prices, or analyzing customer sentiments through reviews, the data hidden within Target’s digital shelves holds immense value for businesses and researchers alike.

Why delve into Target scraping? The answer lies in the wealth of opportunities it unlocks. By harnessing the power of a Target scraper, one can gain a competitive edge and stay ahead in an ever-evolving market. Join us on this journey as we navigate the nuances of web scraping with Python. We start with a hands-on do-it-yourself (DIY) approach and then explore the efficiency of the Crawlbase Crawling API. Let’s uncover the secrets buried in Target’s digital aisles and equip ourselves with the tools to scrape Target data effectively.

Table of Contents

- Structure of Target Product Listing Pages

- Key Data Points to Extract

- Installing Python and required libraries

- Choosing a Development IDE

- Using the requests library

- Inspect the Target Website for CSS selectors

- Utilizing BeautifulSoup for HTML parsing

- Issues related to reliability and scalability

- Maintenance challenges over time

- How it simplifies the web scraping process

- Benefits of using a dedicated API for web scraping

- Crawlbase Registration and API Token

- Accessing Crawling API With Crawlbase Library

- Extracting Target product data effortlessly

- Showcase of improved efficiency and reliability

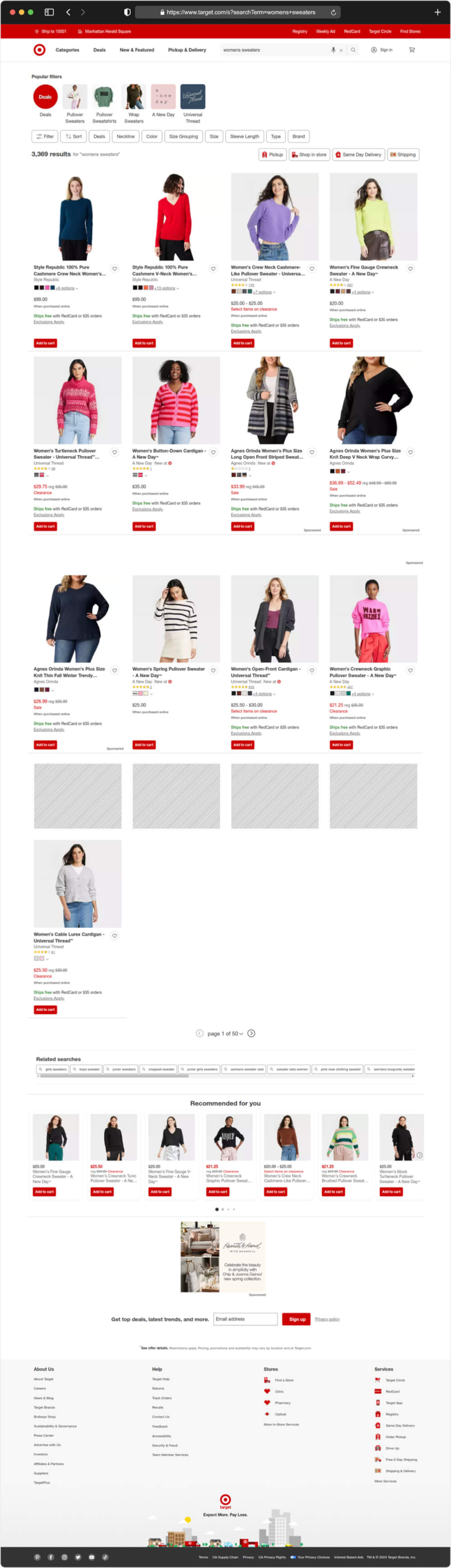

Understanding Target’s SERP Structure

When scraping Target’s website, it’s essential to understand the structure of its Search Engine Results Page (SERP). Here’s a breakdown of the components on these pages and the crucial data points we aim to extract:

Structure of Target Product Listing Pages

Envision the Target website as a well-organized catalog. Just as a newspaper has headlines, main stories, and side sections, Target’s product listing pages follow a structured format.

- Product Showcase: This is akin to the main stories in a newspaper featuring the products that match your search criteria.

- Search Bar: Similar to the headline space in a newspaper, the search bar is where you input what you’re looking for.

- Additional Information: Sometimes, you’ll find extra bits on the side – promotional content, related products, or quick details about selected items.

- Footer: At the bottom, you may encounter links to other sections of the Target website or find more information about policies and terms.

Understanding this layout equips our Target scraper to navigate the virtual aisles efficiently.

Key Data Points to Extract

Now, armed with an understanding of Target’s SERP structure, let’s pinpoint the essential data points for extraction:

- Product Listings: The primary focus of our Target scraping mission is obtaining a list of products relevant to the search.

- Product Names: Just as a newspaper’s headlines provide a quick idea of the main stories, the product names serve as the titles of each listed item.

- Product Descriptions: Beneath each product name, you’ll typically find a brief description or snippet offering insights into the product’s features without clicking on it.

- Promotional Content: Occasionally, the initial results may include promotional content. Recognizing these as promotional and differentiating them from organic listings is crucial.

- Related Products: Towards the bottom, there may be suggestions for related products, providing additional ideas for further searches or related topics.

Understanding Target’s SERP structure guides our scraping efforts, allowing us to gather pertinent information from Target’s digital shelves efficiently.

Setting up Your Environment

Embarking on our journey to scrape Target data requires a well-prepared environment. Let’s start by ensuring you have the essential tools at your disposal.

Installing Python and Required Libraries

Begin by installing Python, the versatile programming language that will serve as our foundation for web scraping. Visit the official Python website (python.org) and download the latest version for your operating system. On Windows, check the “Add Python to PATH” box during installation so that the `python` and `pip` commands work from any terminal.

Now, let’s equip ourselves with the key libraries for our scraping adventure:

- Requests Library: An indispensable tool for making HTTP requests in Python. Install it by opening your terminal or command prompt and entering the following command:

```bash
pip install requests
```

- BeautifulSoup Library: This library, coupled with its parser options, empowers us to navigate and parse HTML, extracting the desired information. Install it with:

```bash
pip install beautifulsoup4
```

- Crawlbase Library: To leverage the Crawlbase Crawling API seamlessly, install the Crawlbase Python library:

```bash
pip install crawlbase
```

Your Python environment is now armed with the necessary tools to initiate our Target scraping endeavor.
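A quick sanity check can confirm that all three libraries are importable before you write any scraping code. The sketch below uses only the standard library's `importlib.util.find_spec`. The mapping reflects the fact that the `beautifulsoup4` package is imported as `bs4`. The helper name `missing_packages` is our own.

```python
import importlib.util

# Import name -> pip package name (beautifulsoup4 is imported as "bs4").
REQUIRED = {"requests": "requests", "bs4": "beautifulsoup4", "crawlbase": "crawlbase"}


def missing_packages(required=REQUIRED):
    """Return the pip package names whose modules cannot be found."""
    return [pkg for module, pkg in required.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages, install with: pip install " + " ".join(missing))
    else:
        print("All scraping dependencies are installed.")
```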

Choosing a Development IDE

Selecting a comfortable Integrated Development Environment (IDE) enhances your coding experience. Popular choices include:

- Visual Studio Code (VSCode): A lightweight, feature-rich code editor. Install it from VSCode’s official website.

- PyCharm: A powerful Python IDE with advanced features. Download the free community edition from the JetBrains website.

- Google Colab: A cloud-based notebook platform that lets you write and execute Python code collaboratively in your browser.

With Python, Requests, and BeautifulSoup installed and your chosen IDE ready, you’re well-prepared to build your Target scraper. Let’s dive into the DIY approach using these tools.

DIY Approach with Python

Now that our environment is set up, let’s roll up our sleeves and delve into the do-it-yourself approach of scraping Target data using Python, Requests, and BeautifulSoup. Follow these steps to navigate the intricacies of the Target website and extract the desired information.

Using the Requests Library

The Requests library will be our gateway to the web, allowing us to retrieve the HTML content of the Target webpage. In our example, let’s focus on scraping data related to “women’s sweaters” from the Target website. Employ the following code snippet to make a request to the Target website:

```python
import requests
```
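The following is a minimal sketch of such a request. It assumes that Target's search page lives at `https://www.target.com/s` and takes the query in a `searchTerm` parameter. The browser-like `User-Agent` string and the helper names `build_search_url` and `fetch_search_html` are illustrative choices, not part of any official interface.

```python
from urllib.parse import urlencode

import requests

# Assumption: Target's search URL takes the query in a "searchTerm" parameter.
SEARCH_BASE = "https://www.target.com/s"


def build_search_url(query):
    """Build a Target search URL; urlencode escapes spaces and apostrophes."""
    return f"{SEARCH_BASE}?{urlencode({'searchTerm': query})}"


def fetch_search_html(query, timeout=30):
    """Download the raw HTML of a Target search results page."""
    headers = {
        # Illustrative browser-like User-Agent; some sites reject the default one.
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    }
    response = requests.get(build_search_url(query), headers=headers, timeout=timeout)
    response.raise_for_status()  # fail loudly on 4xx/5xx instead of parsing an error page
    return response.text
```

For example, `print(fetch_search_html("women's sweaters"))` prints the page's HTML to the terminal.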

Open your preferred text editor or IDE, copy the provided code, and save it in a Python file. For example, name it target_scraper.py.

Run the Script:

Open your terminal or command prompt and navigate to the directory where you saved target_scraper.py. Execute the script using the following command:

```bash
python target_scraper.py
```

As you hit Enter, your script will come to life, sending a request to the Target website, retrieving the HTML content and displaying it on your terminal.

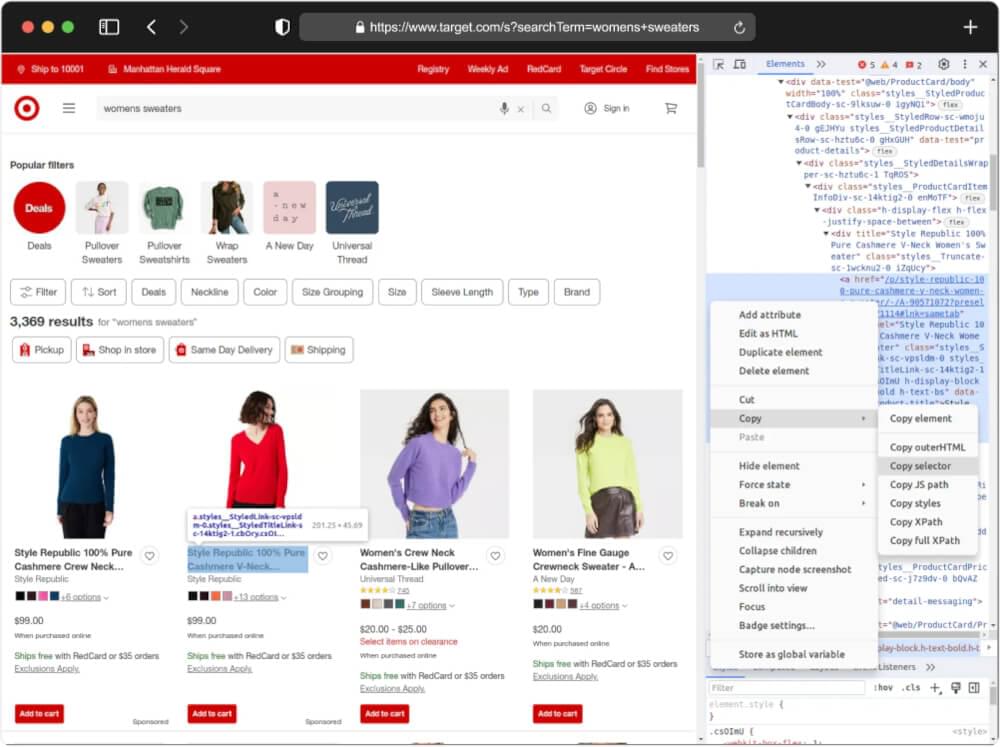

Inspect the Target Website for CSS Selectors

- Access Developer Tools: Right-click on the webpage in the browser and select ‘Inspect’ (or ‘Inspect Element’). This opens the Developer Tools, allowing you to explore the HTML structure.

- Navigate Through HTML: Within the Developer Tools, navigate through the HTML elements to identify the specific data you wish to scrape. Look for unique identifiers, classes, or tags associated with the target information.

- Identify CSS Selectors: Note down the CSS selectors corresponding to the elements of interest. These selectors will serve as pointers for your Python script to locate and extract the desired data.

Utilizing BeautifulSoup for HTML Parsing

With the HTML content in hand and the CSS selectors identified, let’s use BeautifulSoup to parse and navigate the document. In this example, we extract the product title, rating, review count, price, and product page URL for every product listed on the specified Target search page. The retrieved data is then structured so it can be stored for further analysis or processing. Let’s extend our previous script to scrape this information from the HTML.

```python
import requests
```
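The parsing step can be sketched as a function that turns HTML into a list of product dictionaries. The CSS selectors below are hypothetical placeholders, not Target's real class names. Replace them with the selectors you noted in Developer Tools. The function uses BeautifulSoup's `select`/`select_one` and returns an empty list when no product cards match.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

# Hypothetical selectors: placeholders, not Target's actual markup.
SELECTORS = {
    "card": "div.product-card",
    "title": "a.product-title",
    "price": "span.product-price",
    "rating": "span.product-rating",
    "review_count": "span.review-count",
}
BASE_URL = "https://www.target.com"


def text_or_none(node):
    """Stripped text of a tag, or None if the selector matched nothing."""
    return node.get_text(strip=True) if node else None


def parse_products(html):
    """Return one dict per product card found in the HTML."""
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for card in soup.select(SELECTORS["card"]):
        title = card.select_one(SELECTORS["title"])
        href = title.get("href") if title else None
        products.append({
            "title": text_or_none(title),
            "price": text_or_none(card.select_one(SELECTORS["price"])),
            "rating": text_or_none(card.select_one(SELECTORS["rating"])),
            "review_count": text_or_none(card.select_one(SELECTORS["review_count"])),
            # Links may be relative, so resolve them against the site root.
            "url": urljoin(BASE_URL, href) if href else None,
        })
    return products
```

Pass it the HTML downloaded in the previous step. If the cards are not present in that HTML, the result is simply `[]`.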

Running the script prints an empty list:

```
[]
```

Why? Target uses JavaScript to generate its search results dynamically in the browser. The HTML returned to a plain HTTP request does not yet contain the rendered product listings, so the CSS selectors find nothing to extract.

This DIY approach lays the groundwork for scraping Target data using Python. However, it comes with its limitations, including potential challenges in handling dynamic content, reliability, and scalability.

Drawbacks of the DIY Approach

The DIY approach using Python, Requests, and BeautifulSoup provides a straightforward entry into web scraping, but it has inherent drawbacks worth understanding. Building a Target scraper this way surfaces two prominent challenges:

Issues Related to Reliability and Scalability

- Dynamic Content Handling: DIY scraping may falter when dealing with websites that heavily rely on dynamic content loaded through JavaScript. As a result, the extracted data might not fully represent the real-time information available on the Target website.

- Rate Limiting and IP Blocking: Web servers often implement rate limiting or IP blocking mechanisms to prevent abuse. DIY scripts may inadvertently trigger these mechanisms, leading to temporary or permanent restrictions on access, hindering the reliability and scalability of your scraping operation.
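A DIY script can soften, though not eliminate, rate limiting by backing off when the server signals overload. The following is a minimal sketch of exponential backoff with full jitter around `requests`. It treats HTTP 429 and common 5xx codes as retryable, and it honors a numeric `Retry-After` header when one is sent. The status set, the delays and the helper names are assumptions to tune, and no retry policy prevents an IP from eventually being blocked.

```python
import random
import time

import requests

# Status codes that usually mean "slow down" or a transient server problem.
RETRYABLE_STATUSES = {429, 500, 502, 503, 504}


def backoff_delays(retries, base=1.0, cap=60.0):
    """Full-jitter backoff: the n-th delay is random in [0, min(cap, base * 2**n)]."""
    return [random.uniform(0, min(cap, base * 2 ** n)) for n in range(retries)]


def get_with_backoff(url, retries=4, session=None, **kwargs):
    """GET a URL, retrying retryable statuses with growing pauses; returns the last response."""
    session = session or requests.Session()
    kwargs.setdefault("timeout", 30)
    # One delay per retry, plus a final None meaning "no more retries".
    for delay in backoff_delays(retries) + [None]:
        response = session.get(url, **kwargs)
        if response.status_code not in RETRYABLE_STATUSES or delay is None:
            return response
        # Prefer the server's own Retry-After hint when it is given in seconds.
        retry_after = response.headers.get("Retry-After", "")
        time.sleep(int(retry_after) if retry_after.isdigit() else delay)
```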

Maintenance Challenges Over Time

- HTML Structure Changes: Websites frequently undergo updates and redesigns, altering the HTML structure. Any modifications to the Target site’s structure can disrupt your DIY scraper, necessitating regular adjustments to maintain functionality.

- CSS Selector Changes: If Target changes the CSS selectors associated with the data you’re scraping, your script may fail to locate the intended information. Regular monitoring and adaptation become essential to counteract these changes.
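One way to catch selector drift early is a small health check that counts how many elements each selector matches on a freshly fetched page. The following is a minimal sketch using BeautifulSoup. The selector names and the idea of running it on a schedule are illustrative. A selector with zero matches points either to a redesign or to content that is rendered by JavaScript.

```python
from bs4 import BeautifulSoup


def selector_report(html, selectors):
    """Count matches for each named CSS selector in the given HTML."""
    soup = BeautifulSoup(html, "html.parser")
    return {name: len(soup.select(css)) for name, css in selectors.items()}


def broken_selectors(html, selectors):
    """Names of selectors that match nothing: a sign of a redesign or JS-rendered content."""
    return [name for name, count in selector_report(html, selectors).items() if count == 0]
```

Running `broken_selectors` periodically and alerting when it returns a non-empty list turns silent breakage into a visible failure.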

Understanding these drawbacks emphasizes the need for a more robust and sustainable solution.

Crawlbase Crawling API: Overcoming DIY Limitations

In our pursuit of efficiently scraping Target product data, the Crawlbase Crawling API offers a dedicated approach that overcomes the limitations of the DIY method. Let’s explore how this API simplifies web scraping and the benefits of using a specialized tool for this task.

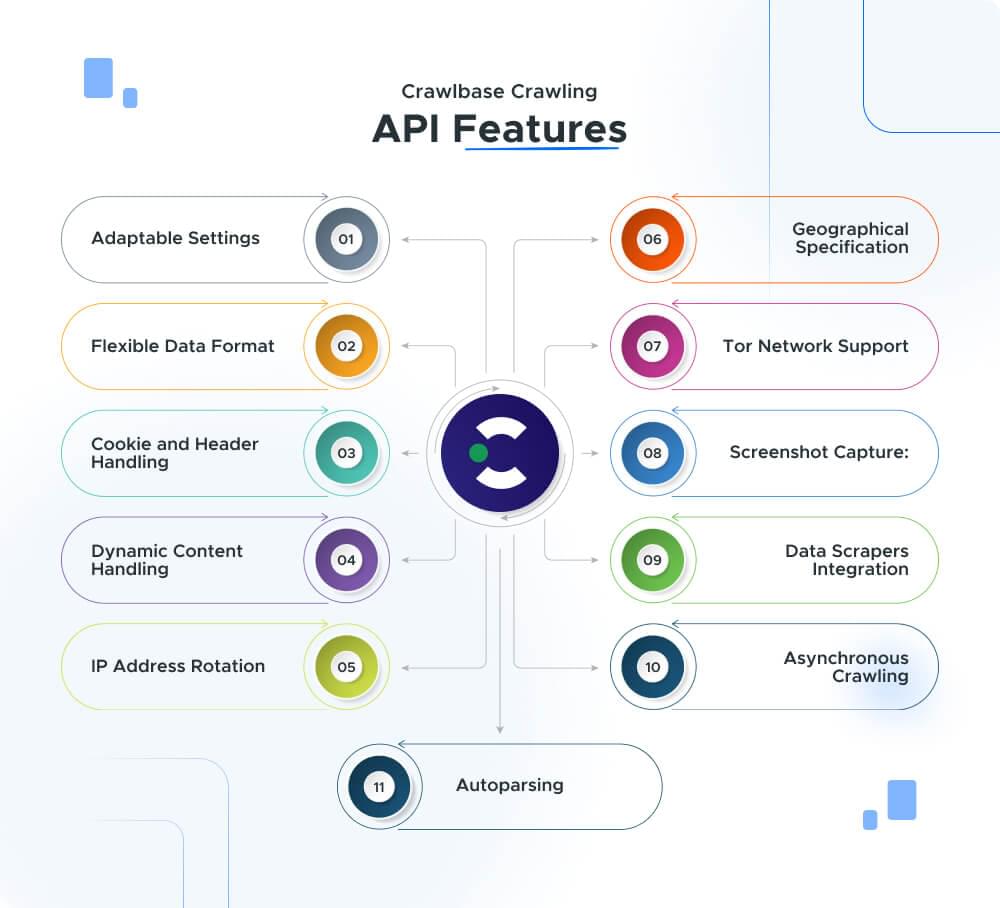

How it simplifies the web scraping process

The Crawlbase Crawling API simplifies web scraping for developers with its user-friendly and efficient design, and its parameters address the most common scraping problems. Here’s a concise overview of its key features:

- Adaptable Settings: Customize API requests with settings like “format,” “user_agent,” and “page_wait” to tailor the scraping process to specific requirements.

- Flexible Data Format: Choose between JSON and HTML response formats, aligning the API with diverse developer needs and simplifying data extraction.

- Cookie and Header Handling: Access crucial information like cookies and headers from the target website using “get_cookies” and “get_headers,” which are essential for authentication or tracking tasks.

- Dynamic Content Handling: Excel at crawling pages with dynamic content, including JavaScript elements, using parameters like “page_wait” and “ajax_wait.”

- IP Address Rotation: Enhance anonymity by switching IP addresses, minimizing the risk of being blocked by websites, and ensuring successful web crawling.

- Geographical Specification: Utilize the “country” parameter to specify geographical locations, which is invaluable for extracting region-specific data.

- Tor Network Support: Enable the “tor_network” parameter for crawling onion websites over the Tor network, securely enhancing privacy and access to dark web content.

- Screenshot Capture: Capture visual context with the screenshot feature, providing an additional layer of comprehension to the collected data.

- Data Scrapers Integration: Seamlessly use pre-defined data scrapers to simplify extracting specific information from web pages, reducing the complexity of custom scraping logic.

- Asynchronous Crawling: Support for asynchronous crawling with the “async” parameter, providing developers with a request identifier (RID) for easy retrieval of crawled data from cloud storage.

- Autoparsing: Reduce post-processing workload by utilizing the autoparse parameter, which provides parsed information in JSON format, enhancing the efficiency of data extraction and interpretation.
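Settings like these are sent as query-string parameters on each request. The following is a minimal sketch of composing such a request URL with the standard library. It assumes the endpoint `https://api.crawlbase.com/` with `token` and `url` parameters, so verify this against the current Crawlbase documentation. The parameter names passed in the example come from the list above. The function name `build_api_url` is hypothetical.

```python
from urllib.parse import urlencode

# Assumption: Crawling API endpoint; confirm it in the Crawlbase docs.
API_ENDPOINT = "https://api.crawlbase.com/"


def build_api_url(token, target_url, **params):
    """Compose a Crawling API request URL; urlencode escapes the nested target URL."""
    query = {"token": token, "url": target_url}
    query.update(params)  # e.g. page_wait, ajax_wait, country, format
    return API_ENDPOINT + "?" + urlencode(query)
```

For example, `build_api_url("YOUR_JS_TOKEN", "https://www.target.com/s?searchTerm=sweaters", ajax_wait="true", country="US")` yields a URL that any HTTP client can call. Note that the target URL must be percent-encoded, which `urlencode` handles.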

Benefits of Using a Dedicated API for Web Scraping

The Crawlbase Crawling API brings forth a multitude of benefits, making it a preferred choice for developers engaged in web scraping tasks:

- Reliability: The API is designed to handle diverse scraping scenarios, ensuring reliability even when faced with dynamic or complex web pages.

- Scalability: Crawlbase’s infrastructure allows for efficient scaling, accommodating larger scraping projects and ensuring consistent performance.

- Customization: Developers can tailor their scraping parameters, adapting the API to the unique requirements of their target websites.

- Efficiency: The API’s optimization for speed and performance translates to quicker data extraction, enabling faster insights and decision-making.

- Comprehensive Support: Crawlbase provides extensive documentation and support, assisting developers in navigating the API’s features and resolving any challenges encountered.

As we transition from the DIY approach, the implementation of Crawlbase Crawling API promises to simplify the web scraping process and unlock a spectrum of advantages that elevate the efficiency and effectiveness of Target scraping endeavors. In the next section, we’ll guide you through the practical steps of using Crawlbase Crawling API to extract Target product data effortlessly.

Target Scraper With Crawlbase Crawling API

Now that we’ve explored the capabilities of the Crawlbase Crawling API, let’s guide you through the practical steps of building a Target Scraper using this powerful tool.

Crawlbase Registration and API Token

Fetching Target data using Crawlbase Crawling API begins with creating an account on the Crawlbase platform. Let’s guide you through the account setup process for Crawlbase:

- Navigate to Crawlbase: Open your web browser and head to the Crawlbase website’s Signup page to kickstart your registration journey.

- Provide Your Credentials: Enter your email address and craft a password for your Crawlbase account. Ensure you fill in the necessary details accurately.

- Verification Process: A verification email may land in your inbox after submitting your details. Look out for it and complete the verification steps outlined in the email.

- Log In: Once your account is verified, return to the Crawlbase website and log in using the credentials you just created.

- Secure Your API Token: Accessing the Crawlbase Crawling API requires an API token, and you can find yours in your account documentation.

Quick Note: Crawlbase provides two types of tokens: one for static websites and another for dynamic, JavaScript-driven websites. Because Target renders its search results with JavaScript, we will use the JS token. Bonus: Crawlbase offers an initial allowance of 1,000 free requests for the Crawling API, making it an ideal choice for our web scraping expedition.
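Rather than pasting the token into your script, you can read it from an environment variable. This keeps it out of shared code and version control. The following is a minimal sketch. The variable name `CRAWLBASE_JS_TOKEN` and the helper `load_js_token` are hypothetical choices, not names Crawlbase requires.

```python
import os

# Hypothetical variable name; keeping the token out of source code avoids
# leaking it when the script is shared or committed to version control.
TOKEN_ENV_VAR = "CRAWLBASE_JS_TOKEN"


def load_js_token(env_var=TOKEN_ENV_VAR):
    """Read the Crawlbase JavaScript token from the environment."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"Set {env_var} to your Crawlbase JavaScript token.")
    return token
```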

Accessing Crawling API With Crawlbase Library

Utilize the Crawlbase library in Python to seamlessly interact with the Crawling API. The provided code snippet demonstrates how to initialize and utilize the Crawling API through the Crawlbase Python library.

```python
from crawlbase import CrawlingAPI
```
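The following is a minimal sketch of initializing the client and fetching one page. It follows the usage shown in the Crawlbase Python library's README: a `CrawlingAPI({'token': ...})` constructor and a `get(url, options)` call that returns a dict with `status_code` and `body`. Treat those details as something to confirm against the current library docs. The helper names `create_client` and `fetch_body` are our own.

```python
def create_client(token):
    """Create a Crawling API client (requires `pip install crawlbase`)."""
    # Imported lazily so this module loads even where crawlbase is not installed;
    # fetch_body only needs an object with the same get(url, options) interface.
    from crawlbase import CrawlingAPI
    return CrawlingAPI({"token": token})


def fetch_body(api, url, options=None):
    """Fetch a page through the Crawling API and return its body as text."""
    response = api.get(url, options or {})
    if response["status_code"] != 200:
        raise RuntimeError(f"Crawling API request failed with status {response['status_code']}")
    body = response["body"]
    # The body may arrive as bytes; decode it so it can be parsed.
    return body.decode("utf-8", errors="replace") if isinstance(body, bytes) else body
```

A typical call is `html = fetch_body(create_client("YOUR_JS_TOKEN"), "https://www.target.com/s?searchTerm=women%27s+sweaters")`.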

Extracting Target Product Data Effortlessly

Using the Crawlbase Crawling API, we can easily gather Target product information. By utilizing a JS token and adjusting API parameters like ajax_wait and page_wait, we can manage JavaScript rendering. Let’s improve our DIY script by incorporating the Crawling API.

```python
from crawlbase import CrawlingAPI
```
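The core change from the DIY script is the fetching step. The page is requested through a JS-token client with `ajax_wait` and `page_wait` set, and the rendered HTML is then parsed with BeautifulSoup as before. The following is a minimal sketch. The option values (`"true"`, `5000` milliseconds) are assumptions to tune. The title selector is a hypothetical placeholder. The client is passed in as `api`, which can be any object with the `get(url, options)` interface of the library's `CrawlingAPI`.

```python
from bs4 import BeautifulSoup

# Assumed values: ajax_wait waits for AJAX requests to finish, and page_wait
# waits this many milliseconds before the rendered HTML is captured.
JS_OPTIONS = {"ajax_wait": "true", "page_wait": 5000}

# Hypothetical selector; substitute the one you identified in Developer Tools.
TITLE_SELECTOR = "a.product-title"


def scrape_titles(api, url, options=JS_OPTIONS):
    """Fetch a JavaScript-rendered page through a JS-token client and return product titles."""
    response = api.get(url, dict(options))  # pass a copy so the defaults stay untouched
    if response["status_code"] != 200:
        raise RuntimeError(f"Request failed with status {response['status_code']}")
    body = response["body"]
    html = body.decode("utf-8", errors="replace") if isinstance(body, bytes) else body
    soup = BeautifulSoup(html, "html.parser")
    return [a.get_text(strip=True) for a in soup.select(TITLE_SELECTOR)]
```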


Handling Pagination

Collecting information from Target’s search results involves navigating through multiple pages, each displaying a set of product listings. To ensure a thorough dataset, we must manage pagination. This means moving through the result pages and requesting more data when necessary.

Target’s website uses the &Nao parameter in the URL to handle pagination. It specifies the offset of the first result displayed on each page. For example, &Nao=0 (or omitting the parameter) returns the first set of 24 results, and &Nao=24 points to the next set. This parameter enables us to systematically gather data across different pages and build a comprehensive dataset for analysis.
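Generating the page URLs is then simple arithmetic on the offset. The following is a minimal sketch. It assumes that `Nao` is a zero-based result offset and that there are 24 results per page, so page *n* starts at (n − 1) × 24. It also assumes the `searchTerm` query parameter for the search itself. The helper name `page_urls` is hypothetical.

```python
from urllib.parse import urlencode

SEARCH_URL = "https://www.target.com/s"
PAGE_SIZE = 24  # results per page, as described above


def page_urls(query, pages, page_size=PAGE_SIZE):
    """Return search URLs for the first `pages` result pages.

    Assumes Nao is a zero-based offset: page n (1-based) starts at (n - 1) * page_size.
    """
    return [
        f"{SEARCH_URL}?{urlencode({'searchTerm': query, 'Nao': (page - 1) * page_size})}"
        for page in range(1, pages + 1)
    ]
```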

Let’s enhance our existing script to seamlessly handle pagination.

```python
from crawlbase import CrawlingAPI
```
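The pagination loop itself can be sketched independently of how pages are fetched. The function below walks the page URLs in order and stops at the first page that yields no products. It also de-duplicates products that reappear across pages. The fetcher and parser are passed in, so either a plain `requests` fetcher or a Crawlbase-backed one can be used. The function name, the one-second default pause, and the choice of URL (falling back to title) as the de-duplication key are assumptions.

```python
import time


def crawl_pages(urls, fetch_html, parse_products, delay=1.0):
    """Fetch and parse result pages in order; stop at the first page with no products.

    fetch_html(url) -> str and parse_products(html) -> list[dict] are supplied
    by the caller, so any fetcher (requests, Crawlbase) and parser can be plugged in.
    """
    collected, seen = [], set()
    for index, url in enumerate(urls):
        if index and delay:
            time.sleep(delay)  # pause between pages to keep the request rate modest
        products = parse_products(fetch_html(url))
        if not products:
            break  # an empty page usually means we ran past the last result
        for product in products:
            # Pages can overlap, so de-duplicate on the product URL (title as fallback).
            key = product.get("url") or product.get("title")
            if key not in seen:
                seen.add(key)
                collected.append(product)
    return collected
```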

Note: Crawlbase has many built-in scrapers you can use with our Crawling API; learn more about them in our documentation. We also build custom solutions tailored to your needs, so you don’t have to keep monitoring website changes and CSS selectors yourself. Crawlbase handles that for you, so you can focus on your goals. Contact us through the Crawlbase website to get started.

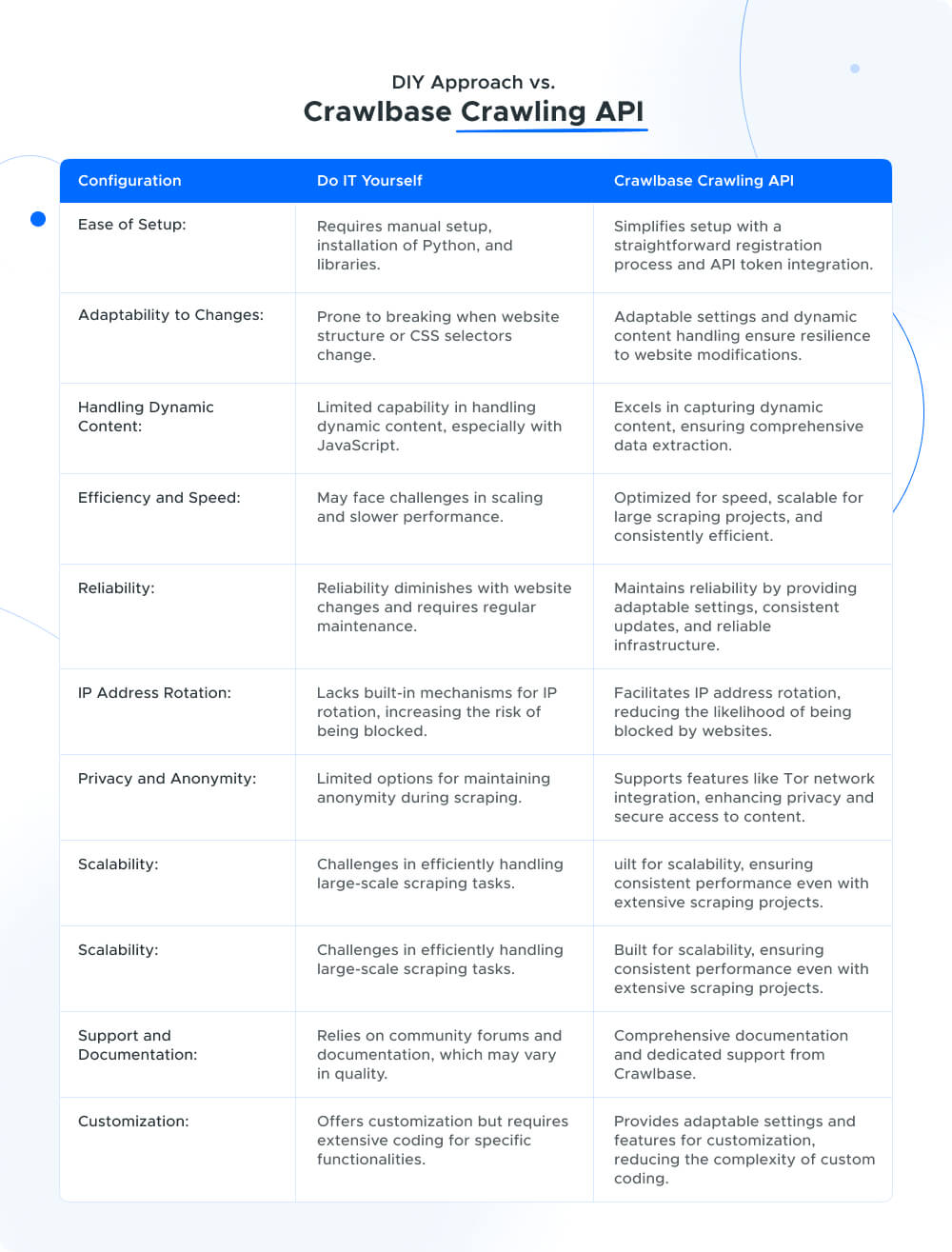

Comparison: DIY vs. Crawlbase Crawling API

When it comes to scraping Target product data, choosing the right method can significantly impact the efficiency and success of your web scraping endeavors. Let’s compare the traditional Do-It-Yourself (DIY) approach with Python, Requests, and BeautifulSoup against the streamlined Crawlbase Crawling API.

Final Thoughts

When scraping Target product data, simplicity and effectiveness are key. While the DIY approach is a valuable learning exercise, the Crawlbase Crawling API stands out as the savvy choice. Say goodbye to reliability concerns and scalability hurdles: opt for the Crawlbase Crawling API for a straightforward, reliable, and scalable way to scrape Target.


Web scraping can pose challenges, and your success matters. If you need additional guidance or encounter hurdles, reach out without hesitation. Our dedicated team is here to support you on your journey through the world of web scraping. Happy scraping!

Frequently Asked Questions

Q1: Is it legal to scrape Target using web scraping tools?

Web scraping practices may be subject to legal considerations, and it is essential to review Target’s terms of service and robots.txt file to ensure compliance with their policies. Always prioritize ethical and responsible scraping practices, respecting the website’s terms and conditions. Additionally, staying informed about relevant laws and regulations pertaining to web scraping in the specific jurisdiction is crucial for a lawful and respectful approach.

Q2: What are the common challenges faced in web scraping?

Regardless of the chosen approach, web scraping often encounters challenges that include dynamic content, adaptability to website changes, and the importance of maintaining ethical and legal compliance. Handling dynamic content, such as JavaScript-generated elements, requires sophisticated techniques for comprehensive data extraction. Additionally, websites may undergo structural changes over time, necessitating regular updates to scraping scripts. Adhering to ethical and legal standards is crucial to ensure responsible and respectful web scraping practices.

Q3: Why choose the Crawlbase Crawling API for scraping Target over the DIY method?

The Crawlbase Crawling API is the preferred choice for scraping Target due to its streamlined process, adaptability, efficiency, and reliability. It excels in handling dynamic content, supporting IP rotation to maintain anonymity, and ensuring consistent performance even in large-scale scraping projects. The API’s user-friendly design and comprehensive features make it a superior solution to the DIY method.

Q4: Is the Crawlbase Crawling API suitable for large-scale scraping projects?

Absolutely. The Crawlbase Crawling API is specifically designed for scalability, making it well-suited for large-scale scraping projects. Its efficient architecture ensures optimal performance, allowing developers to handle extensive data extraction tasks effortlessly. The adaptability and reliability of the API make it a robust choice for projects of varying scales.