Product Hunt, established in 2013, has grown into a dynamic platform that showcases new products and startups across many sectors, backed by a large community of creators and enthusiasts. Over the years it has accumulated a vast collection of user profiles and product listings, which makes it a valuable source of information. By scraping Product Hunt, you can collect detailed product descriptions and reviews along with insights into user engagement.

In this blog post, we will scrape information from Product Hunt profiles and products using the Crawlbase Crawling API and JavaScript. With these tools, we can collect data such as product names, descriptions, maker details, upvote counts, release dates, and user comments. Let’s take a closer look at how the process works and what we can learn from Product Hunt’s data.

Table of Contents:

- Product Hunt Data to Scrape
  - Product Data
  - User Data
  - Engagement Metrics
  - Trending and Historical Data
- Featured Products and Profiles
  - Curated Selection
  - Increased Visibility
- Scraping Product Hunt Data
  - Learn JavaScript Basics
  - Get Crawlbase API Token
  - Setting Up the Environment
- Fetching Product Hunt Products Data HTML
- Scrape Product Hunt Products Meaningful Data
- Scrape Product Hunt Profile Data

Product Hunt Data to Scrape

Product Hunt provides a rich dataset that encompasses a variety of information, offering a comprehensive view of the products and the community. Here’s a breakdown of the key types of data available:

- Product Data:
  - Name and Description: Each product listed on Product Hunt comes with a name and a detailed description, outlining its features and purpose.
  - Category: Products are categorized into different sections, ranging from software and mobile apps to hardware and books.
  - Launch Date: The date when a product was officially launched is recorded, providing insights into the timeline of innovation.

- User Data:
  - Profiles: Users have profiles containing information about themselves, their submitted products, and their interactions within the community.
  - Products Submitted: A record of the products a user has submitted, reflecting their contributions to the platform.
  - Engagement Metrics: Information on how users engage with products, including upvotes, comments, and followers.

- Engagement Metrics:
  - Upvotes: The number of upvotes a product receives indicates its popularity and acceptance within the community.
  - Comments: User comments provide qualitative insights, feedback, and discussions around a particular product.
  - Popularity: Metrics that quantify a product’s overall popularity, which can be a combination of upvotes, comments, and other engagement factors.

- Trending and Historical Data:
  - Trending Products: Identification of products currently gaining momentum and popularity.
  - Historical Trends: Analysis of how a product’s popularity has changed over time, helping identify patterns and factors influencing success.
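When the same products are scraped repeatedly, one simple way to spot trending products is to compare snapshots over time. The sketch below ranks products by upvotes gained per hour between two snapshots. The snapshot shape `{ name, upvotes, scrapedAt }` is a hypothetical format for your own stored results, and upvotes per hour is just one basic momentum measure, not Product Hunt’s own ranking method.

```javascript
// Ranks products by upvotes gained per hour between two scraped snapshots.
// Each snapshot is an array of { name, upvotes, scrapedAt } objects, where
// scrapedAt is an ISO 8601 date string (a hypothetical storage format).
const MS_PER_HOUR = 60 * 60 * 1000;

function rankByMomentum(earlier, later) {
  const before = new Map(earlier.map((p) => [p.name, p]));
  const results = [];
  for (const current of later) {
    const previous = before.get(current.name);
    if (!previous) continue; // skip products absent from the earlier snapshot
    const hours = (Date.parse(current.scrapedAt) - Date.parse(previous.scrapedAt)) / MS_PER_HOUR;
    if (!(hours > 0)) continue; // ignore out-of-order or unparseable timestamps
    results.push({
      name: current.name,
      upvotesPerHour: (current.upvotes - previous.upvotes) / hours,
    });
  }
  // Highest momentum first.
  return results.sort((a, b) => b.upvotesPerHour - a.upvotesPerHour);
}

module.exports = { rankByMomentum };
```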

Featured Products and Profiles

Product Hunt prominently features a curated selection of products and profiles on its homepage. Understanding the criteria for featuring provides valuable insights into the dynamics of the platform:

Curated Selection:

- Product Hunt Team Selection: The Product Hunt team curates and features products they find particularly innovative, interesting, or relevant.

- Community Engagement: Products that receive significant user engagement, such as upvotes and comments, are more likely to be featured.

Increased Visibility:

- Homepage Exposure: Featured products enjoy prime placement on the Product Hunt homepage, increasing their visibility to a broader audience.

- Enhanced Recognition: Being featured lends credibility and recognition to a product, potentially attracting more attention from users, investors, and the media.

For anyone using Product Hunt, understanding how these types of data relate to one another, and what influences which products get featured, makes it easier to navigate the platform and get the most out of it.

Scraping Product Hunt Data

Learn JavaScript Basics:

Before scraping data from Product Hunt, it helps to understand some basics of JavaScript, the programming language we’ll be using. Familiarize yourself with DOM manipulation, which lets you select and read different parts of a webpage; making HTTP requests to retrieve data; and handling asynchronous operations with Promises and async/await. Knowing these basics will make the rest of this project much easier to follow.
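As a small illustration of async/await, the sketch below fetches a list of pages one at a time and pauses after each request. `fetchPage` is a hypothetical stand-in that returns fake HTML, so the example runs without network access or an API token.

```javascript
// Returns a Promise that resolves after `ms` milliseconds.
function delay(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Hypothetical stand-in for a real HTTP request: resolves with fake HTML.
async function fetchPage(url) {
  await delay(10);
  return `<html><title>${url}</title></html>`;
}

// Awaits each fetch in turn, so only one request is in flight at a time,
// and pauses for `pauseMs` after each one.
async function fetchSequentially(urls, pauseMs = 100) {
  const pages = [];
  for (const url of urls) {
    pages.push(await fetchPage(url));
    await delay(pauseMs);
  }
  return pages;
}

fetchSequentially(['page-1', 'page-2'], 50).then((pages) => {
  console.log(`Fetched ${pages.length} pages`); // prints "Fetched 2 pages"
});
```

Awaiting inside the loop keeps requests sequential; `Promise.all` would run them in parallel, which is faster but more likely to trigger a site’s rate limits.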

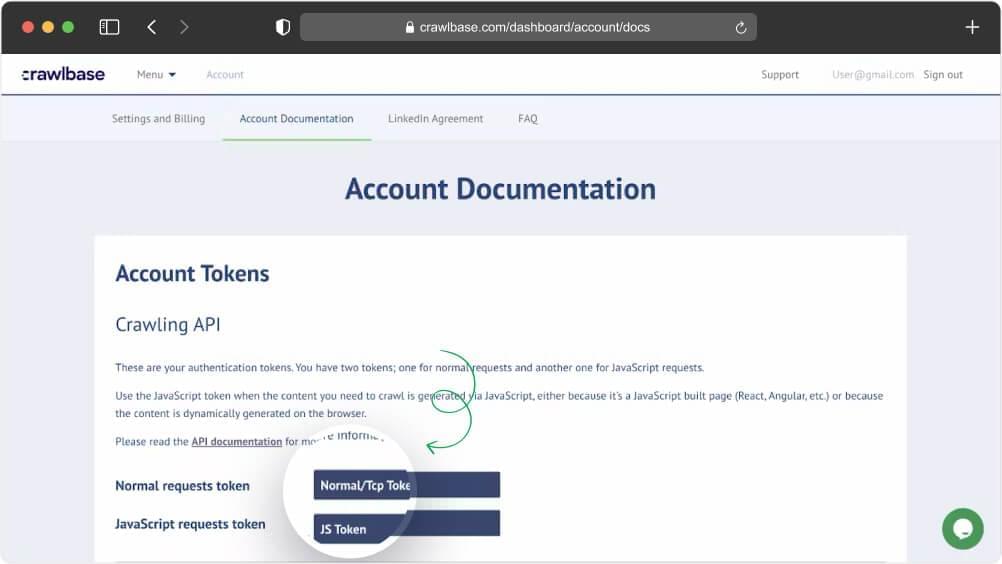

Get Crawlbase API Token:

Let’s talk about getting the token we need from Crawlbase to make our Product Hunt scraping work.

- Log in to your Crawlbase account on their website.

- Once logged in, find the “Account Documentation” page in your Crawlbase dashboard.

- Look for the “JavaScript token” on that page and copy it. This token works like a secret key: it authenticates your requests to the Crawlbase Crawling API, which then fetches Product Hunt pages on your behalf. The JavaScript token is the one to use for pages that rely on JavaScript to render their content.

Now that you have this token, you can finish setting up the Product Hunt scraping project.
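Rather than pasting the token directly into source code, a common approach is to read it from an environment variable. The sketch below assumes a variable named `CRAWLBASE_JS_TOKEN`; that name is our own choice for this guide, not something Crawlbase requires.

```javascript
// Reads the Crawlbase JavaScript token from the environment instead of hard-coding it.
// CRAWLBASE_JS_TOKEN is a hypothetical variable name chosen for this guide.
function getCrawlbaseToken(env = process.env) {
  const token = (env.CRAWLBASE_JS_TOKEN || '').trim();
  if (!token) {
    throw new Error('Set CRAWLBASE_JS_TOKEN before running the scraper.');
  }
  return token;
}

module.exports = { getCrawlbaseToken };
```

On macOS or Linux you could then start a script with `CRAWLBASE_JS_TOKEN=your_token node scraper.js`, which keeps the token out of files you might commit or share.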

Setting Up the Environment

Now that we have everything ready, let’s set up the tools we need for our JavaScript code. Follow these steps in order:

- Create Project Folder:

Open your terminal and type mkdir producthunt_scraper to create a new folder for your project. You can name this folder whatever you want.

```bash
mkdir producthunt_scraper
```

- Navigate to Project Folder:

Type cd producthunt_scraper to go into the new folder. This helps you manage your project files better.

```bash
cd producthunt_scraper
```

- Create JavaScript File:

Type touch scraper.js to create a new file called scraper.js. You can name this file differently if you prefer.

```bash
touch scraper.js
```

- Install the Crawlbase and Cheerio Packages:

Type npm install crawlbase cheerio to install two packages. Crawlbase is crucial for our project as it helps us interact with the Crawlbase Crawling API, making it easier to get information from websites. Cheerio parses the downloaded HTML so we can extract data from it in the later steps.

```bash
npm install crawlbase cheerio
```

By following these steps, you’re setting up the basic structure for your Product Hunt scraping project. You’ll have a dedicated folder, a JavaScript file to write your code, and the necessary Crawlbase tool to make the scraping process smooth and organized.
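To confirm the installation worked, a small script can ask Node’s module resolver whether each package is available from the project folder. This is a convenience sketch; `missingPackages` is a hypothetical helper name.

```javascript
// Reports which packages Node cannot resolve from the current project folder.
function missingPackages(names) {
  return names.filter((name) => {
    try {
      require.resolve(name); // throws if the package cannot be found
      return false;
    } catch {
      return true;
    }
  });
}

const missing = missingPackages(['crawlbase', 'cheerio']);
if (missing.length > 0) {
  console.log(`Missing packages: ${missing.join(', ')}. Run: npm install ${missing.join(' ')}`);
} else {
  console.log('All scraper dependencies are installed.');
}

module.exports = { missingPackages };
```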

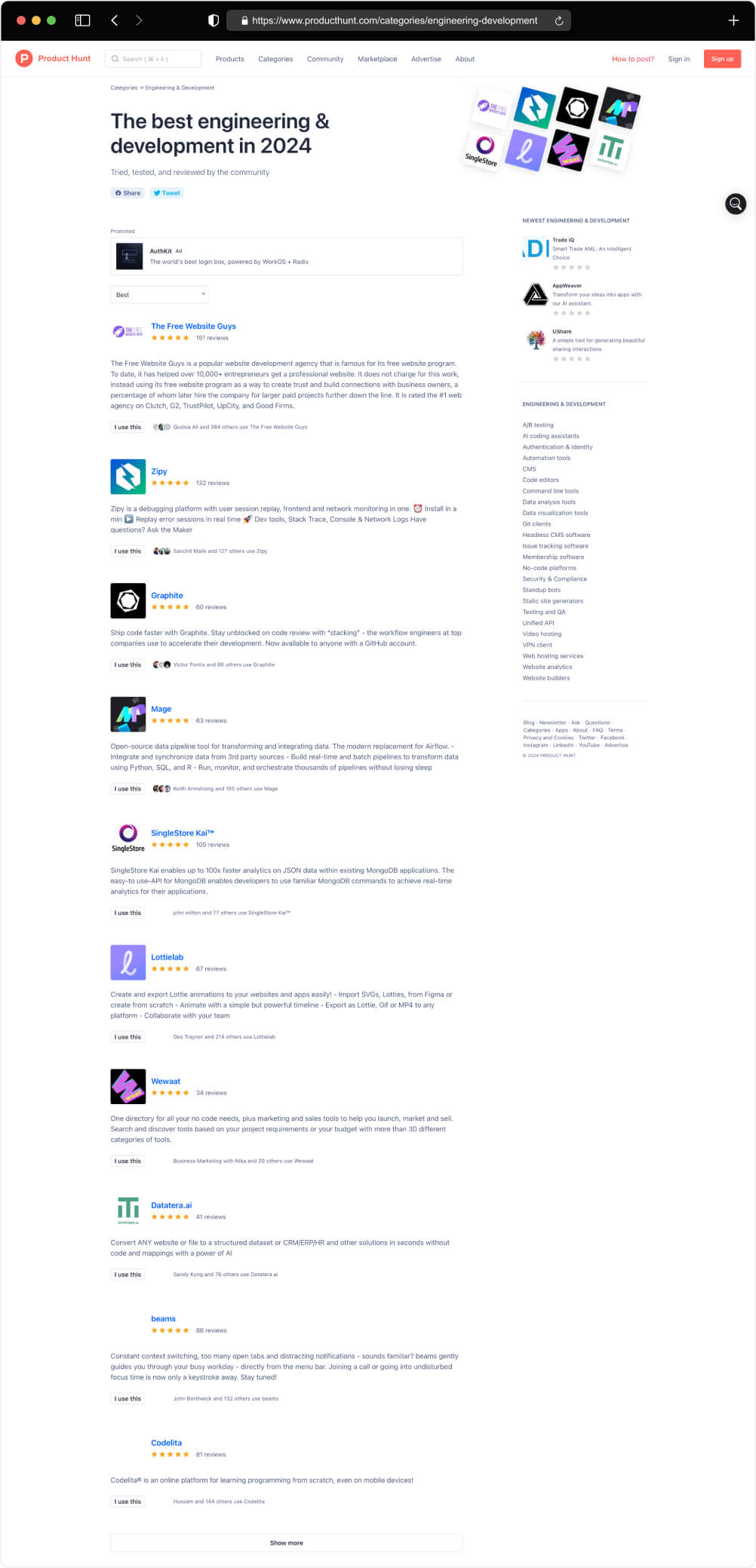

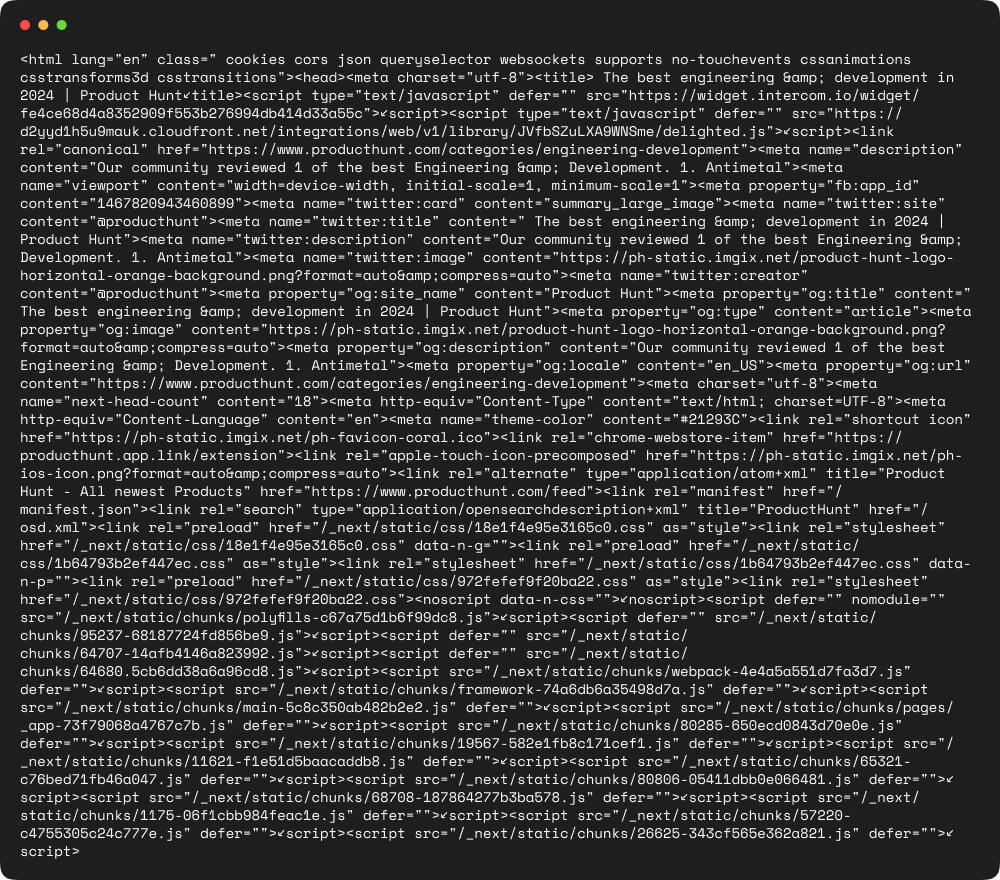

Fetching Product Hunt Products Data HTML

After getting your API token and installing the Crawlbase Node.js library, it’s time to work on the “scraper.js” file. First, choose the Product Hunt category page you want to scrape. For this example, we’ll use the category page for “Best Engineering & Development Products of 2024” to collect product data. In “scraper.js”, you’ll use the Crawlbase library to fetch the page’s HTML; Cheerio comes in at the next step to extract information from it. Make sure to replace the placeholder URL in the code with the actual URL of the page.

To make the Crawlbase Crawling API work, follow these steps:

- Make sure you have the “scraper.js” file created, as explained before.

- Copy and paste the script provided into that file.

- Run the script in your terminal by typing “node scraper.js” and pressing Enter.

```javascript
const { CrawlingAPI } = require('crawlbase');
```

HTML Response:

Scrape Product Hunt Products Meaningful Data

This example shows how to scrape product data from a Product Hunt category page, including each product’s name, description, stars, and reviews. We’ll use Cheerio, a library commonly used to parse HTML for web scraping, and fs, Node’s built-in module for working with files.

The script loads the HTML you obtained from “scraper.js” in the previous step into Cheerio, iterates over each product listing, collects its name, description, stars, and reviews, and saves the results as a JSON array.

```javascript
const fs = require('fs');
```
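Cheerio hands back strings (for example from `.text()`), so values such as star ratings and review counts usually need converting to numbers before they go into the JSON array. The sketch below assumes the raw strings look like `4.9` for stars and `1.2K reviews` or `3,405` for review counts; those formats, and the helper names `parseCount`, `parseRating`, and `toProductRecord`, are assumptions for illustration rather than details taken from the live page.

```javascript
// Converts counts such as "1.2K reviews", "3,405", or "12 reviews" into integers.
// Returns null when no number can be found.
function parseCount(text) {
  const match = String(text).replace(/,/g, '').match(/(\d+(?:\.\d+)?)([KM])?\b/i);
  if (!match) return null;
  const multiplier = { K: 1e3, M: 1e6 }[(match[2] || '').toUpperCase()] || 1;
  return Math.round(parseFloat(match[1]) * multiplier);
}

// Parses a star rating like "4.9" or "4.9/5"; returns null if it is absent.
function parseRating(text) {
  const value = parseFloat(String(text));
  return Number.isFinite(value) ? value : null;
}

// Builds one clean record from the raw strings extracted for a single listing.
function toProductRecord(raw) {
  return {
    name: (raw.name || '').trim(),
    description: (raw.description || '').trim(),
    stars: parseRating(raw.stars),
    reviews: parseCount(raw.reviews),
  };
}

module.exports = { parseCount, parseRating, toProductRecord };
```

Returning `null` instead of `0` for missing values keeps "no reviews shown" distinguishable from "zero reviews" in the saved JSON.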

JSON Response:

```
[
```

Scrape Product Hunt Profile Data

In this example, we’ll extract information from a Product Hunt user profile, specifically the Saas Warrior profile. The data we want includes the user ID, name, about section, followers, following, points, interests, badges, and more. To do this, we’ll first fetch the HTML of the profile page and then write a custom JavaScript scraper that extracts the desired fields from it.

Along with the Crawlbase library, we’ll use Cheerio to parse the HTML and fs, Node’s built-in file module, to save the output. The script reads through the profile page’s HTML, extracts the relevant data, and saves it as a JSON object.

```javascript
const { CrawlingAPI } = require('crawlbase');
```
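Product Hunt profile pages use URLs of the form `https://www.producthunt.com/@username`, so the same fetch approach used for the category page can be pointed at a profile by building that URL from a handle. The sketch below also writes the extracted profile object to disk with `fs`. The helper names `profileUrl` and `saveJson` are hypothetical, and the handle in the tests is a placeholder.

```javascript
const fs = require('fs');
const path = require('path');

// Builds a profile URL from a handle, accepting either "name" or "@name".
function profileUrl(handle) {
  const clean = String(handle).trim().replace(/^@/, '');
  if (!clean) throw new Error('A Product Hunt username is required.');
  return `https://www.producthunt.com/@${encodeURIComponent(clean)}`;
}

// Writes data as pretty-printed JSON and returns the absolute path written.
function saveJson(filePath, data) {
  const absolute = path.resolve(filePath);
  fs.writeFileSync(absolute, JSON.stringify(data, null, 2) + '\n', 'utf8');
  return absolute;
}

module.exports = { profileUrl, saveJson };
```

Passing `2` as the third argument to `JSON.stringify` indents the output, which makes the saved profile easier to inspect by hand.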

JSON Response:

```
{
```

Conclusion

This guide offers information and tools to help you scrape data from Product Hunt using JavaScript and the Crawlbase Crawling API. You can gather various data sets, such as user profile details (user ID, name, followers, following, points, social links, interests, badges) and information about different products (product name, image, description, rating, reviews). Whether you’re new to web scraping or have some experience, these tips will get you started. If you’re interested in scraping other websites like Etsy, Walmart, or Glassdoor, we have more guides for you to explore.


Frequently Asked Questions

Are there any rate limits or IP blocking measures for scraping data from Product Hunt?

Product Hunt may enforce rate limits and IP blocking to prevent abuse and ensure fair use of the platform. Excessive or aggressive scraping can trigger these mechanisms and result in temporary or permanent blocks. To reduce this risk, pace your requests and consider a solution like the Crawlbase Crawling API, which routes requests through a pool of rotating IP addresses so that no single IP is as likely to be rate-limited or blocked. Integrating Crawlbase into your development process helps keep scraping smooth and free of disruptions.
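Whichever tool fetches the pages, retrying failed requests with growing delays, rather than immediately, reduces the chance of tripping rate limits. The sketch below wraps any async operation in exponential backoff. `withBackoff` is a hypothetical helper, not part of the Crawlbase library, and the default retry count and base delay are arbitrary example values.

```javascript
// Returns a Promise that resolves after `ms` milliseconds.
function sleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Retries `operation` up to `retries` extra times, waiting baseMs, 2*baseMs,
// 4*baseMs, ... between attempts. Rethrows the last error if every attempt fails.
async function withBackoff(operation, { retries = 3, baseMs = 1000 } = {}) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return await operation(attempt);
    } catch (err) {
      lastError = err;
      if (attempt < retries) {
        await sleep(baseMs * 2 ** attempt);
      }
    }
  }
  throw lastError;
}

module.exports = { withBackoff };
```

Pass in a function that throws whenever a request should be retried, for example when a response’s status code is not 200.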

What information can be extracted from Product Hunt profiles?

You can extract a range of useful information from Product Hunt. Product pages include the product’s name, description, maker details, upvote count, release date, and user comments. The description covers the product’s features, the maker information shows who created it, upvote counts reflect how much the community likes it, release dates provide a timeline, and user comments offer feedback and discussion that reveal user experiences. User profiles add details such as the user ID, name, about section, followers, following, points, interests, and badges.

Can I use the scraped data for commercial purposes?

If you want to use data scraped from Product Hunt for commercial purposes, you must follow Product Hunt’s rules, which define what you can and can’t do with their data. Using the data commercially without permission might break those rules and lead to legal problems. Before doing so, ask Product Hunt for permission or check whether they offer an official channel, such as their API, that permits business use.

What are the limitations of Product Hunt API?

The Product Hunt API has several limitations. By default it is restricted from commercial use, and users must contact Product Hunt for approval to use it for business purposes. It also relies on OAuth2 token authentication and may apply rate limits to prevent misuse. As an alternative, the Crawlbase Crawling API offers a robust option: it routes requests through a pool of rotating IP addresses, which helps avoid rate limits and IP blocks and keeps data retrieval uninterrupted. This makes Crawlbase a useful tool for developers seeking a reliable and efficient web scraping solution, particularly when rate limits are a concern.