DeviantArt stands out as the biggest social platform for digital artists and art fans. With over 60 million members sharing tens of thousands of artworks daily, it’s a top spot for exploring and downloading diverse creations, from digital paintings to wallpapers, pixel art, anime, and film snapshots.

However, collecting thousands of data points from a website by hand is very time-consuming. Instead of copying information manually, we can automate the process with a programming language like Python.

Scraping DeviantArt gives us a chance to look at different art styles, see what’s trending, and build our collection of favorite pictures. It’s not just about enjoying art; we can also learn more about it.

In this guide, we'll use Python, a beginner-friendly programming language, together with the Crawlbase Crawling API, a handy tool that makes getting data from the web much simpler. Together, Python and the Crawlbase API make exploring and collecting digital art a breeze.

Table Of Contents

- DeviantArt Search Page Structure

- Why Scrape Images from DeviantArt?

- Installing Python and Libraries

- Obtaining Crawlbase API Key

- Choosing the Development IDE

- Technical Benefits of Crawlbase Crawling API

- Sending Request With Crawling API

- API Response Time and Format

- Crawling API Parameters

- Free Trial, Charging Strategy, and Rate Limit

- Crawlbase Python library

- Importing Necessary Libraries

- Constructing the URL for DeviantArt Search

- Making API Requests with Crawlbase Crawling API to Retrieve HTML

- Running Your Script

- Understanding Pagination in DeviantArt

- Modifying API Requests for Multiple Pages

- Ensuring Efficient Pagination Handling

- Inspecting DeviantArt Search Page for CSS Selectors

- Utilizing CSS Selectors for Extracting Image URLs

- Storing Extracted Data in CSV and SQLite Database

- Using Python to Download Images

- Organizing Downloaded Images

Understanding DeviantArt Website

DeviantArt stands as a vibrant and expansive online community that serves as a haven for artists, both seasoned and emerging. Launched in 2000, DeviantArt has grown into one of the largest online art communities, boasting millions of users and an extensive collection of diverse artworks.

At its core, DeviantArt is a digital gallery where artists can exhibit a wide range of creations, including digital paintings, illustrations, photography, literature, and more. The platform encourages interaction through comments, critiques, and the creation of collaborative projects, fostering a dynamic and supportive environment for creative minds.

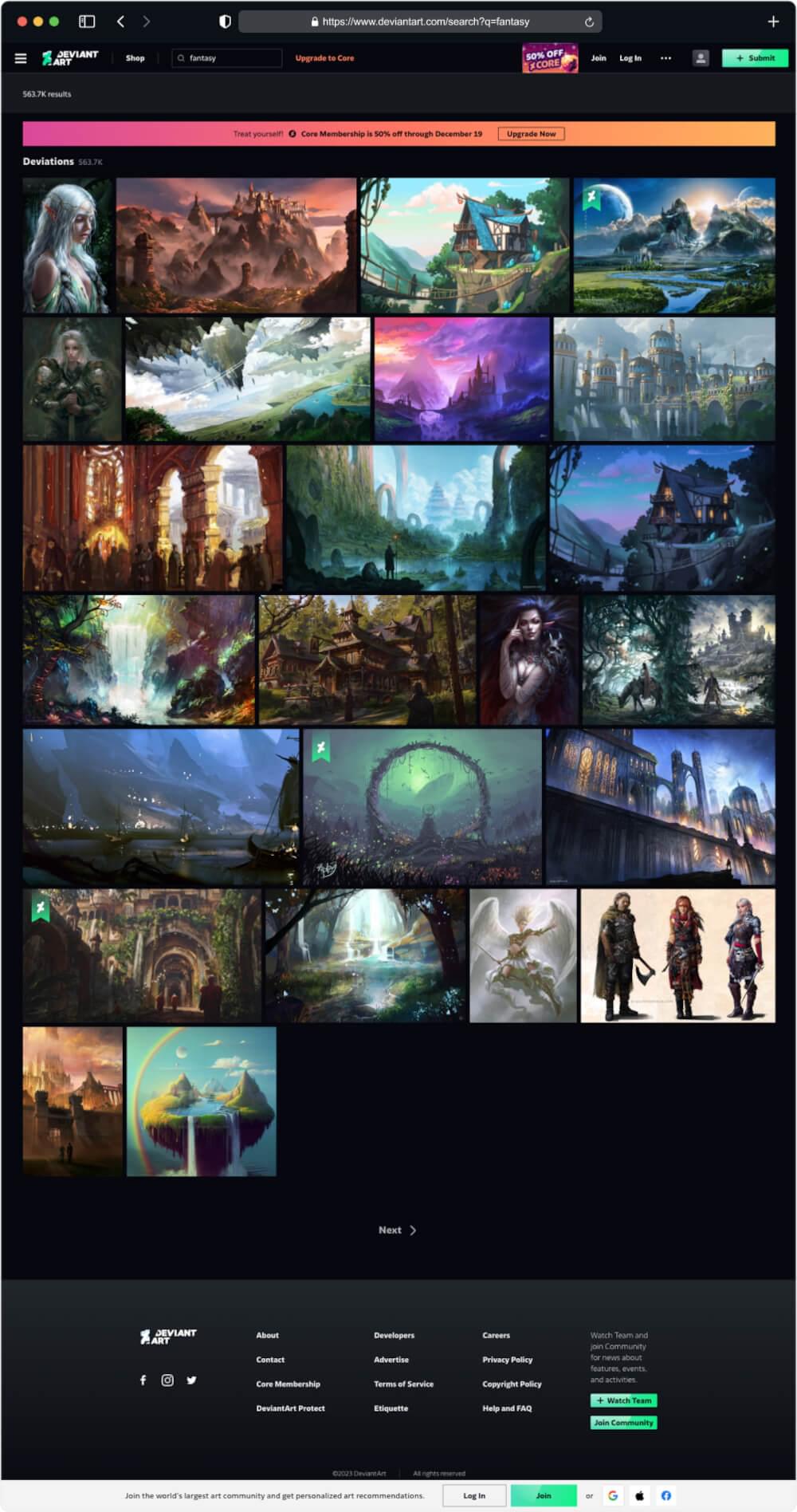

DeviantArt Search Page Structure

The search page is a gateway to a multitude of artworks, providing filters and parameters to refine the search for specific themes, styles, or artists.

Key components of the DeviantArt Search Page structure include:

- Search Bar: The entry point for users to input keywords, tags, or artists’ names.

- Filters: Options to narrow down searches based on categories, types, and popularity.

- Results Grid: Displaying a grid of thumbnail images representing artworks matching the search criteria.

- Pagination: Navigation to move through multiple pages of search results.

Why Scrape Images from DeviantArt?

People, from hobbyists to researchers, scrape images from DeviantArt for various reasons. First, it lets enthusiasts discover diverse artistic styles and talents, turning it into a journey of artistic exploration. For researchers and analysts, scraped data makes it possible to study trends and the evolution of digital art over time. Artists and art enthusiasts also use scraped images as a source of inspiration and to build curated collections that showcase the creativity of the DeviantArt community. Scraping can also help you understand the dynamics of the community, including popular themes, collaboration trends, and the impact of different art styles. In short, scraping DeviantArt is a way to appreciate, learn from, and contribute to the rich tapestry of artistic expression on the platform.

Setting Up Your Environment

Before scraping images from DeviantArt, let's make sure your environment is ready. This section walks you through installing Python and setting up the necessary libraries: Crawlbase, BeautifulSoup, Pandas, and Requests.

Installing Python and Libraries

Python Installation:

Begin by installing Python, the programming language that will drive our scraping adventure. Visit the official Python website and download the latest version suitable for your operating system. Follow the installation instructions to set up Python on your machine.

Create a Virtual Environment:

To maintain a clean and organized development environment, consider creating a virtual environment for your project. Use the following commands in your terminal:

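```bash
# Create a virtual environment
python -m venv deviantart_env

# Activate it on macOS/Linux
source deviantart_env/bin/activate

# Activate it on Windows
deviantart_env\Scripts\activate
```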

Library Installation:

Once Python is installed, open your terminal or command prompt and install the required libraries using the following commands:

```bash
pip install crawlbase
pip install beautifulsoup4
pip install pandas
pip install requests
```

Crawlbase: The crawlbase library is a Python wrapper for the Crawlbase API, which will enable us to make web requests efficiently.

Beautiful Soup: Beautiful Soup is a library for parsing HTML and XML documents. It’s especially useful for extracting data from web pages.

Pandas: Pandas is a powerful data manipulation library that will help you organize and analyze the scraped data efficiently.

Requests: The requests library is a Python module for effortlessly sending HTTP requests and managing responses. It simplifies common HTTP operations, making it a widely used tool for web-related tasks like web scraping and API interactions.

Obtaining Crawlbase API Key

Sign Up for Crawlbase:

Navigate to the Crawlbase website and sign up for an account if you haven’t already. Once registered, log in to your account.

Retrieve Your API Key:

After logging in, go to the account documentation page on Crawlbase and locate your API key (token), which you need to interact with the Crawlbase Crawling API. Keep this key secure, as it is your gateway to the web data you want.

Choosing the Development IDE

An Integrated Development Environment (IDE) is a dedicated workspace for writing code. It highlights syntax, suggests completions as you type, and provides tools for finding and fixing errors. Although you can write Python code in a basic text editor, an IDE makes development noticeably easier.

Here are a few popular Python IDEs to consider:

PyCharm: PyCharm is a robust IDE with a free Community Edition. It offers features like code analysis, a visual debugger, and support for web development.

Visual Studio Code (VS Code): VS Code is a free, open-source code editor developed by Microsoft. Its vast extension library makes it versatile for various programming tasks, including web scraping.

Jupyter Notebook: Jupyter Notebook is excellent for interactive coding and data exploration. It’s commonly used in data science projects.

Spyder: Spyder is an IDE designed for scientific and data-related tasks. It provides features like a variable explorer and an interactive console.

With these steps, your environment is now equipped with the necessary tools for our DeviantArt scraping endeavor. In the upcoming sections, we’ll leverage these tools to craft our DeviantArt Scraper and unravel the world of digital artistry.

Exploring Crawlbase Crawling API

Before using web scraping on DeviantArt, it's important to understand the Crawlbase Crawling API. This part breaks down the technical details of Crawlbase's API so that you can use it smoothly in your Python DeviantArt scraping project.

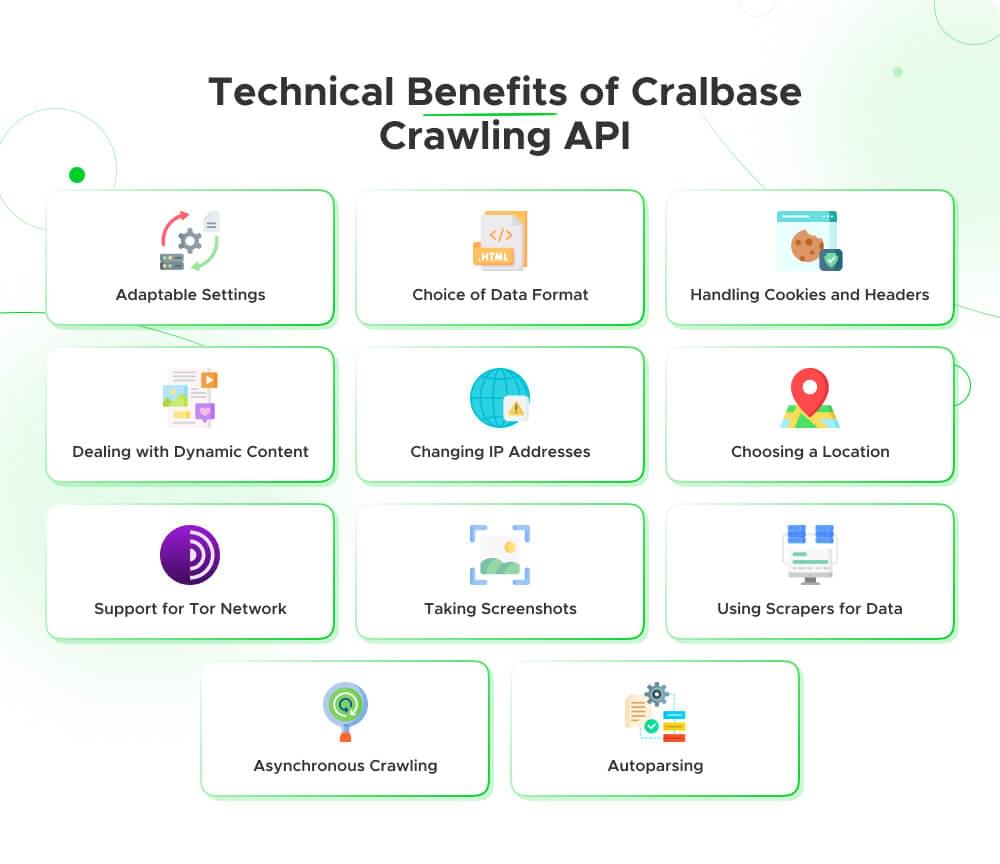

Technical Benefits of Crawlbase Crawling API

The Crawlbase Crawling API offers several important advantages, helping developers collect web data and manage different parts of the crawling process easily. Here are some notable benefits:

- Adaptable Settings: Crawlbase Crawling API gives a lot of settings, letting developers fine-tune their API requests. This includes parameters like “format”, “user_agent”, “page_wait”, and more, allowing customization based on specific needs.

- Choice of Data Format: Developers can pick between JSON and HTML response formats based on what they prefer and what suits their data processing needs. This flexibility makes data extraction and handling easier.

- Handling Cookies and Headers: By using parameters like “get_cookies” and “get_headers”, developers can retrieve important information such as cookies and headers from the original website, which is crucial for certain web scraping tasks.

- Dealing with Dynamic Content: This API handles dynamic content well, which is useful for crawling pages that rely on JavaScript. Parameters like “page_wait” and “ajax_wait” help ensure the API captures all the content, even if it takes time to load.

- Changing IP Addresses: This API lets you switch IP addresses, keeping you anonymous and reducing the chance of being blocked by websites. This makes web crawling more successful.

- Choosing a Location: Developers can specify a country for requests using the “country” parameter, which is handy when you need data from specific places.

- Support for Tor Network: Enabling the “tor_network” parameter allows crawling onion websites over the Tor network, which adds privacy and gives access to content on the dark web.

- Taking Screenshots: With the Screenshots API or the “screenshot” parameter, you can capture screenshots of web pages, giving visual context to the data collected.

- Using Scrapers for Data: The “scraper” parameter lets you use predefined data scrapers, making it easier to get specific information from web pages without much hassle.

- Asynchronous Crawling: When you need to crawl asynchronously, the API supports the “async” parameter. Developers receive a request identifier (RID) that they can use to retrieve the crawled data from cloud storage.

- Autoparsing: The “autoparse” parameter simplifies data extraction by returning parsed information in JSON format, reducing the post-processing needed after getting the HTML content.

In summary, Crawlbase’s Crawling API is a strong tool for web scraping and data extraction. It offers a variety of settings and features to fit different needs, making web crawling efficient and effective, whether you’re dealing with dynamic content, managing cookies and headers, changing IP addresses, or getting specific data.

Sending Request With Crawling API

Crawlbase’s Crawling API is designed for simplicity and ease of integration into your web scraping projects. All API URLs begin with the base part: https://api.crawlbase.com. Making your first API call is as straightforward as executing a command in your terminal:

```bash
curl 'https://api.crawlbase.com/?token=YOUR_CRAWLBASE_TOKEN&url=https%3A%2F%2Fgithub.com%2Fcrawlbase%3Ftab%3Drepositories'
```

Here, you'll notice the token parameter, which serves as your authentication key for accessing Crawlbase's web scraping capabilities. Crawlbase offers two token types: a normal (TCP) token and a JavaScript (JS) token. Choose the normal token for websites that don't change much, such as static websites. If the site only works in a browser with JavaScript enabled, or if the content you need is rendered on the client side by JavaScript, use the JavaScript token. For DeviantArt, the normal token is a good choice.
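The same call can be made from Python with only the standard library. The sketch below is an illustration, not part of Crawlbase's library: the helper names build_crawling_api_url and fetch_html are hypothetical. It URL-encodes the target page the way the curl example does and uses the 90-second timeout Crawlbase recommends for calls.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

API_BASE = "https://api.crawlbase.com/"


def build_crawling_api_url(token, target_url, **params):
    """Build a Crawling API request URL; extra keyword args become query parameters."""
    query = {"token": token, "url": target_url}
    query.update(params)
    # urlencode percent-encodes the target URL (":" -> %3A, "/" -> %2F, "?" -> %3F, "=" -> %3D)
    return API_BASE + "?" + urlencode(query)


def fetch_html(token, target_url, timeout=90, **params):
    """Fetch a page through the Crawling API and return the response body as text."""
    # urlopen raises urllib.error.HTTPError for non-2xx responses
    with urlopen(build_crawling_api_url(token, target_url, **params), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

For example, fetch_html("YOUR_CRAWLBASE_TOKEN", "https://www.deviantart.com/search?q=fantasy") returns the page HTML, and extra parameters such as format="json" are passed through unchanged as query parameters. The script in this guide uses the crawlbase library instead, which handles this encoding for you.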

API Response Time and Format

When engaging with the Crawlbase Crawling API, it’s vital to grasp the dynamics of response times and how to interpret success or failure. Let’s take a closer look at these components:

Response Timings: The API typically responds within 4 to 10 seconds. To accommodate occasional delays, set the timeout for your calls to at least 90 seconds, so that your application can absorb fluctuations in response time without failing.

Response Formats: Crawlbase can return either HTML or JSON, depending on your preferences and parsing needs. Set the “format” query parameter to “html” or “json” to choose the format.

If you choose the HTML response format (the default), the API returns the HTML content of the web page as the response body, and the response parameters are included in the response headers for easy access. Here's an illustrative response example:

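```
Headers:
  url: https://github.com/crawlbase?tab=repositories
  original_status: 200
  pc_status: 200

Body:
  HTML of the page
```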

If you opt for the JSON response format, you’ll receive a structured JSON object that can be easily parsed in your application. This object contains all the information you need, including response parameters. Here’s an example response:

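```json
{
  "original_status": "200",
  "pc_status": 200,
  "url": "https://github.com/crawlbase?tab=repositories",
  "body": "HTML of the page"
}
```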

Response Headers: Both HTML and JSON responses include essential headers that provide valuable information about the request and its outcome:

- url: The original URL that was sent in the request, or the URL of any redirects that Crawlbase followed.

- original_status: The status response received by Crawlbase when crawling the URL sent in the request. It can be any valid HTTP status code.

- pc_status: The Crawlbase (pc) status code, which can be any status code and is the code that ends up being valid. For instance, if a website returns an original_status of 200 with a CAPTCHA challenge, the pc_status may be 503.

- body: Available in JSON format; contains the content of the web page that Crawlbase found as a result of proxy crawling the URL sent in the request.

These response parameters empower you to assess the outcome of your requests and determine whether your web scraping operation was successful.
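To act on these parameters, you can check both status codes before using the body. A minimal sketch, assuming a JSON-format response shaped like the example above; crawl_succeeded is a hypothetical helper, and treating only a 200/200 pair as success is a deliberately strict assumption, not Crawlbase's own billing rule.

```python
import json


def crawl_succeeded(response_json):
    """Return True only when both the target site and Crawlbase report HTTP 200."""
    data = json.loads(response_json) if isinstance(response_json, str) else response_json
    # original_status may arrive as a string ("200") and pc_status as a number, so normalize both
    try:
        original = int(data.get("original_status", 0))
        pc = int(data.get("pc_status", 0))
    except (TypeError, ValueError):
        return False
    return original == 200 and pc == 200
```

A CAPTCHA page that the site served with a 200 but Crawlbase flagged with a 503 is rejected by this check, which matches the pc_status example above.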

Crawling API Parameters

Crawlbase offers a comprehensive set of parameters that allow developers to customize their web crawling requests. These parameters enable fine-tuning of the crawling process to meet specific requirements. For instance, you can specify response formats like JSON or HTML using the “format” parameter or control page waiting times with “page_wait” when working with JavaScript-generated content.

Additionally, you can extract cookies and headers, set custom user agents, capture screenshots, and even choose geolocation preferences using parameters such as “get_cookies,” “user_agent,” “screenshot,” and “country.” These options provide flexibility and control over the web crawling process. For example, to retrieve cookies set by the original website, you can simply include get_cookies=true query param in your API request, and Crawlbase will return the cookies in the response headers.

You can read more about these parameters in the Crawlbase Crawling API documentation.

Free Trial, Charging Strategy, and Rate Limit

Crawlbase offers a free trial covering the first 1,000 requests, so you can explore its capabilities before committing. Plan your test requests carefully to get the most value out of the trial.

Crawlbase uses a “pay-as-you-go” model and charges only for successful requests, which keeps your web scraping costs down. Whether a request counts as successful is determined by the original_status and pc_status values in the response parameters.

The API is rate-limited to a maximum of 20 requests per second, per token. If you need a higher rate limit, contact Crawlbase support to discuss your specific requirements.
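If you send requests in a loop or from several threads, a small throttle keeps you under the 20-requests-per-second limit. This is a sketch of a simple interval-based limiter; the RateLimiter class is hypothetical and not part of the crawlbase library. It spaces call start times evenly rather than allowing bursts.

```python
import threading
import time


class RateLimiter:
    """Space out calls so that at most `rate` calls start per second."""

    def __init__(self, rate=20):
        self.min_interval = 1.0 / rate
        self._next_allowed = 0.0
        self._lock = threading.Lock()

    def wait(self):
        # Reserve the next free time slot under the lock, then sleep outside it
        with self._lock:
            now = time.monotonic()
            slot = max(now, self._next_allowed)
            self._next_allowed = slot + self.min_interval
        delay = slot - now
        if delay > 0:
            time.sleep(delay)
```

Call limiter.wait() immediately before each crawlbase_api.get(...). Even spacing is simpler than a token bucket, at the cost of never using short bursts below the limit.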

Crawlbase Python library

The Crawlbase Python library offers a simple way to interact with the Crawlbase Crawling API. You can use this lightweight and dependency-free Python class as a wrapper for the Crawlbase API. To begin, initialize the Crawling API class with your Crawlbase token. Then, you can make GET requests by providing the URL you want to scrape and any desired options, such as custom user agents or response formats. For example, you can scrape a web page and access its content like this:

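```python
from crawlbase import CrawlingAPI

# Initialize the Crawling API class with your Crawlbase token
api = CrawlingAPI({'token': 'YOUR_CRAWLBASE_TOKEN'})

# Make a GET request; options such as 'user_agent' or 'format' can be passed as a second argument
response = api.get('https://www.deviantart.com')

if response['status_code'] == 200:
    # The body is returned as bytes, so decode it before printing
    print(response['body'].decode('utf-8'))
```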

This library simplifies the process of fetching web data and is particularly useful for scenarios where dynamic content, IP rotation, and other advanced features of the Crawlbase API are required.

Crawling DeviantArt Search Page

Now that we’re equipped with an understanding of DeviantArt and a configured environment, let’s dive into the exciting process of crawling the DeviantArt Search Page. This section will walk you through importing the necessary libraries, constructing the URL for the search, and making API requests using the Crawlbase Crawling API to retrieve HTML content.

Importing Necessary Libraries

Open your favorite Python editor or create a new Python script file. To initiate our crawling adventure, we need to equip ourselves with the right tools. Import the required libraries into your Python script:

```python
from crawlbase import CrawlingAPI
```

Here, we bring in the CrawlingAPI class from Crawlbase, ensuring we have the capabilities to interact with the Crawling API.

Constructing the URL for DeviantArt Search

Now, let’s construct the URL for our DeviantArt search. Suppose we want to explore digital art with the keyword “fantasy.” The URL construction might look like this:

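```python
# Replace 'YOUR_CRAWLBASE_TOKEN' with your actual Crawlbase API token
api_token = 'YOUR_CRAWLBASE_TOKEN'
crawlbase_api = CrawlingAPI({'token': api_token})

# Constructing the search URL for the keyword "fantasy"
base_url = "https://www.deviantart.com"
keyword = "fantasy"
search_url = f"{base_url}/search?q={keyword}"
```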
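Interpolating the keyword directly works for a single word like “fantasy”, but a keyword with spaces or symbols needs URL encoding. A sketch using urllib.parse.urlencode; build_search_url is a hypothetical helper, and its optional page argument follows the &page= convention this guide uses for pagination.

```python
from urllib.parse import urlencode


def build_search_url(base_url, keyword, page_number=None):
    """Build a DeviantArt search URL, percent-encoding the keyword."""
    params = {"q": keyword}
    if page_number is not None:
        params["page"] = page_number
    # urlencode turns spaces into "+" and escapes characters such as "&" or "#"
    return f"{base_url}/search?{urlencode(params)}"
```

For example, build_search_url("https://www.deviantart.com", "dark fantasy", 2) yields https://www.deviantart.com/search?q=dark+fantasy&page=2.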

Making API Requests with Crawlbase Crawling API to Retrieve HTML

With our URL ready, let’s harness the power of the Crawlbase Crawling API to retrieve the HTML content of the DeviantArt Search Page:

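```python
# Making the API request
response = crawlbase_api.get(search_url)

# Checking if the request was successful
if response['status_code'] == 200:
    # Extracting the HTML content (the body is returned as bytes)
    html_content = response['body'].decode('utf-8')
    print(html_content)
else:
    print(f"Failed to retrieve the page. Status code: {response['status_code']}")
```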

In this snippet, we’ve used the get method of the CrawlingAPI class to make a request to the constructed search URL. The response is then checked for success, and if successful, the HTML content is extracted for further exploration.
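A single request can fail transiently, for example with a 5xx status, and the check above gives up after one attempt. A sketch of retrying with exponential backoff, assuming the dictionary returned by the crawlbase library's get method (with a status_code key); get_with_retries and its defaults are hypothetical choices, not Crawlbase recommendations.

```python
import time


def get_with_retries(api, url, max_attempts=3, backoff_seconds=2.0):
    """Call api.get(url) until it returns status_code 200 or the attempts run out."""
    for attempt in range(1, max_attempts + 1):
        response = api.get(url)
        if response.get("status_code") == 200:
            return response
        if attempt < max_attempts:
            # Wait longer after each failure: backoff, 2x backoff, 4x backoff, ...
            time.sleep(backoff_seconds * 2 ** (attempt - 1))
    return None  # every attempt failed
```

With this helper, response = get_with_retries(crawlbase_api, search_url) returns None after repeated failures, so check `if response:` before decoding the body.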

Running Your Script

Now that your script is ready, save it with a .py extension, for example, deviantart_scraper.py. Open your terminal or command prompt, navigate to the script’s directory, and run:

```bash
python deviantart_scraper.py
```

Replace deviantart_scraper.py with the actual name of your script. Press Enter, and your script will execute, initiating the process of crawling the DeviantArt Search Page.

Example Output:

With these steps, we’ve initiated the crawling process of the DeviantArt Search Page. In the upcoming sections, we’ll delve deeper into parsing and extracting image URLs, bringing us closer to the completion of our DeviantArt Scraper.

Handling Pagination

Navigating through multiple pages is a common challenge when scraping websites with extensive content, and DeviantArt is no exception. In this section, we’ll delve into the intricacies of handling pagination, ensuring our DeviantArt scraper efficiently captures a broad range of search results.

Understanding Pagination in DeviantArt

DeviantArt structures search results across multiple pages to manage and present content systematically. Each page typically contains a subset of results, and users progress through these pages to explore additional content. Understanding this pagination system is essential for our scraper to collect a comprehensive dataset.

Modifying API Requests for Multiple Pages

To adapt our scraper for pagination, we’ll need to modify our API requests dynamically as we move through different pages. Consider the following example:

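```python
# Assuming 'page_number' is the variable representing the page number
page_number = 2
search_url = f"{base_url}/search?q={keyword}&page={page_number}"

# Making the API request for that page
response = crawlbase_api.get(search_url)
```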

In this snippet, we’ve appended &page={page_number} to the search URL to specify the desired page. As our scraper progresses through pages, we can update the page_number variable accordingly.

Ensuring Efficient Pagination Handling

Efficiency is paramount when dealing with pagination, to avoid unnecessary strain on resources. A loop that systematically iterates through the pages keeps this manageable. Let's update the script from the previous section to incorporate pagination:

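```python
from crawlbase import CrawlingAPI

def scrape_page(api, base_url, keyword, page_number):
    # Constructing the URL for the current page
    url = f"{base_url}/search?q={keyword}&page={page_number}"

    # Making the API request
    response = api.get(url)

    # Checking if the request was successful
    if response['status_code'] != 200:
        print(f"Failed to fetch page {page_number}. Status code: {response['status_code']}")
        return []

    # Extracting the HTML content; the parsing logic is added in the next section
    html_content = response['body'].decode('utf-8')

    # Placeholder for the extracted data
    parsed_data = []
    return parsed_data

def main():
    # Replace 'YOUR_CRAWLBASE_TOKEN' with your actual Crawlbase API token
    crawlbase_api = CrawlingAPI({'token': 'YOUR_CRAWLBASE_TOKEN'})

    base_url = "https://www.deviantart.com"
    keyword = "fantasy"
    total_pages = 2

    # Iterating through the pages and collecting the extracted data
    all_data = []
    for page_number in range(1, total_pages + 1):
        all_data.extend(scrape_page(crawlbase_api, base_url, keyword, page_number))

    print(all_data)

if __name__ == "__main__":
    main()
```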

The scrape_page function encapsulates the logic for constructing the URL, making an API request, and handling the HTML content extraction. It checks the response status code and, if successful (status code 200), processes the HTML content for data extraction. The main function initializes the Crawlbase API, sets the base URL, keyword, and total number of pages to scrape. It then iterates through the specified number of pages, calling the scrape_page function for each page. The extracted data, represented here as a placeholder list, is printed for demonstration purposes.
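Two refinements help when looping over pages: stop as soon as a page comes back empty, and skip image URLs already collected from an earlier page. A sketch, assuming each page yields a list of dictionaries with an "image_url" key (a hypothetical key name; use whatever your scrape_page returns); collect_pages is a hypothetical helper.

```python
def collect_pages(scrape, total_pages):
    """Call scrape(page_number) for pages 1..total_pages, stopping at the first empty page."""
    seen = set()
    results = []
    for page_number in range(1, total_pages + 1):
        page_data = scrape(page_number)
        if not page_data:
            # An empty page usually means we ran past the last page of results
            break
        for item in page_data:
            url = item.get("image_url")
            # Keep only items that have a URL we have not stored yet
            if url and url not in seen:
                seen.add(url)
                results.append(item)
    return results
```

Usage: all_data = collect_pages(lambda n: scrape_page(crawlbase_api, base_url, keyword, n), total_pages). If your scrape_page returns an empty list (or None) on a failed request, the loop also stops there, which you may or may not want.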

In the next sections, we will delve into the detailed process of parsing HTML content to extract image URLs and implementing mechanisms to download these images systematically.

Parsing and Extracting Image URLs

Now that we’ve successfully navigated through multiple pages, it’s time to focus on parsing and extracting valuable information from the HTML content. In this section, we’ll explore how to inspect the DeviantArt Search Page for CSS selectors, utilize these selectors for image extraction, clean the extracted URLs, and finally, store the data in both CSV and SQLite formats.

Inspecting DeviantArt Search Page for CSS Selectors

Before we can extract image URLs, we need to identify the HTML elements that contain the relevant information. Right-click on the web page, select “Inspect” (or “Inspect Element”), and navigate through the HTML structure to find the elements containing the image URLs.

For example, DeviantArt places its image URLs in HTML tags like the following:

```html
<a data-hook="deviation_link" href="...">
  <img property="contentUrl" src="...">
</a>
```

In this case, the CSS selector for the image URL could be a[data-hook="deviation_link"] img[property="contentUrl"].
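To check the selector logic offline, without fetching a page or installing BeautifulSoup, here is a standard-library sketch that mimics this one selector with html.parser. It is a swapped-in technique for testing only, not what the scraper uses, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class DeviationImageParser(HTMLParser):
    """Collect src of <img property="contentUrl"> nested inside <a data-hook="deviation_link">."""

    def __init__(self):
        super().__init__()
        self._anchor_stack = []  # one bool per open <a>: True if it is a deviation link
        self.image_urls = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "a":
            self._anchor_stack.append(attributes.get("data-hook") == "deviation_link")
        elif tag == "img" and any(self._anchor_stack):
            # Descendant match: the <img> may sit several levels below the <a>
            if attributes.get("property") == "contentUrl" and attributes.get("src"):
                self.image_urls.append(attributes["src"])

    def handle_endtag(self, tag):
        if tag == "a" and self._anchor_stack:
            self._anchor_stack.pop()


def extract_image_urls(html):
    parser = DeviationImageParser()
    parser.feed(html)
    parser.close()
    return parser.image_urls
```

Paste a fragment copied from the browser's Inspect panel into extract_image_urls to confirm the selector matches what you expect before running the full scraper.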

Utilizing CSS Selectors for Extracting Image URLs

Let’s integrate the parsing logic into our existing script. By using the BeautifulSoup library, we can parse the HTML content and extract image URLs based on the identified CSS selectors. Update the scrape_page function to include the parsing logic using CSS selectors.

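```python
from crawlbase import CrawlingAPI
from bs4 import BeautifulSoup
import json

def scrape_page(api, base_url, keyword, page_number):
    # Constructing the URL for the current page
    url = f"{base_url}/search?q={keyword}&page={page_number}"

    # Making the API request
    response = api.get(url)

    # Checking if the request was successful
    if response['status_code'] != 200:
        print(f"Failed to fetch page {page_number}. Status code: {response['status_code']}")
        return []

    # Parsing the HTML content
    html_content = response['body'].decode('utf-8')
    soup = BeautifulSoup(html_content, 'html.parser')

    # Extracting image URLs with the CSS selector identified above
    parsed_data = []
    for img in soup.select('a[data-hook="deviation_link"] img[property="contentUrl"]'):
        image_url = img.get('src')
        if image_url:
            parsed_data.append({'image_url': image_url})

    return parsed_data

def main():
    # Replace 'YOUR_CRAWLBASE_TOKEN' with your actual Crawlbase API token
    crawlbase_api = CrawlingAPI({'token': 'YOUR_CRAWLBASE_TOKEN'})

    base_url = "https://www.deviantart.com"
    keyword = "fantasy"
    total_pages = 2

    all_data = []
    for page_number in range(1, total_pages + 1):
        all_data.extend(scrape_page(crawlbase_api, base_url, keyword, page_number))

    # Printing the extracted data as formatted JSON
    print(json.dumps(all_data, indent=2))

if __name__ == "__main__":
    main()
```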

- scrape_page(api, base_url, keyword, page_number): This function takes the Crawlbase API instance api, the base URL base_url, a search keyword keyword, and the page number page_number. It constructs the URL for the current page, makes a request to the Crawlbase API to retrieve the HTML content, and then extracts image URLs from the HTML using BeautifulSoup. The CSS selector used for image URLs is a[data-hook="deviation_link"] img[property="contentUrl"]. The extracted image URLs are stored in a list of dictionaries, parsed_data.

- main(): This function is the main entry point of the script. It initializes the Crawlbase API with the provided token, sets the base URL to “https://www.deviantart.com”, specifies the search keyword as “fantasy”, and defines the total number of pages to scrape (in this case, 2). It iterates through the pages, calling the scrape_page function for each page and appending the extracted data to the all_data list. Finally, it prints the extracted data as formatted JSON using json.dumps.

Example Output:

```json
[
  {
    "image_url": "https://..."
  },
  ...
]
```

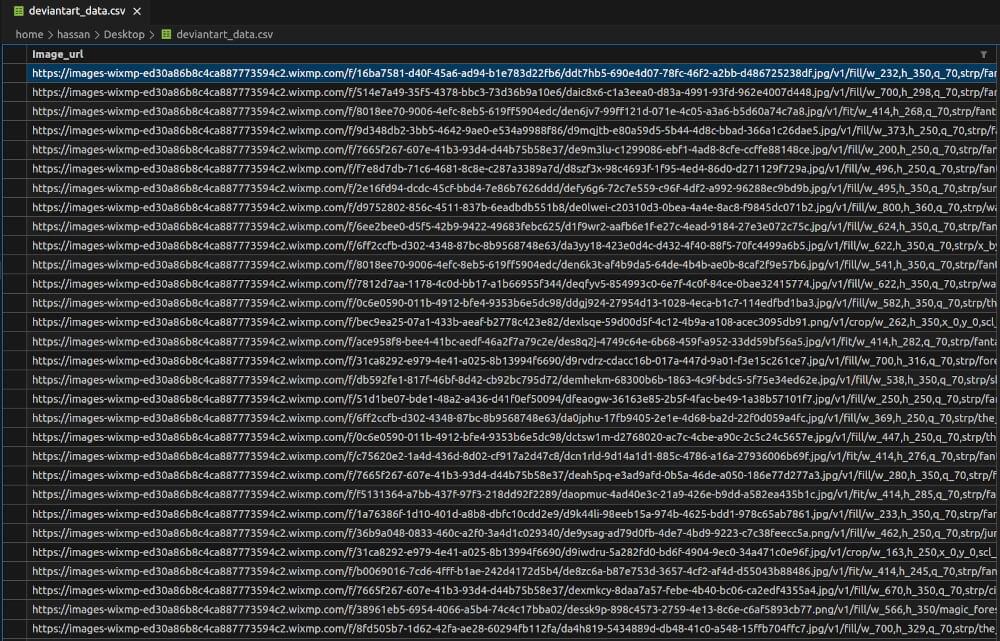
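Extracted src values can include blanks, placeholders, or duplicates, so it is worth cleaning them before storage. A minimal sketch; clean_image_urls is a hypothetical helper that strips whitespace, keeps only absolute http(s) URLs (dropping, for example, relative paths or data: placeholders), and removes duplicates while preserving order. It deliberately leaves query strings alone, since an image host may need them to serve the file.

```python
from urllib.parse import urlsplit


def clean_image_urls(urls):
    """Strip whitespace, keep only absolute http(s) URLs, and drop duplicates in order."""
    cleaned = []
    seen = set()
    for url in urls:
        if not url:
            continue  # skip None and empty strings
        url = url.strip()
        if urlsplit(url).scheme not in ("http", "https"):
            continue  # relative paths, data: URIs, etc.
        if url not in seen:
            seen.add(url)
            cleaned.append(url)
    return cleaned
```

Assuming the image_url key used above, you could call clean_image_urls(item['image_url'] for item in all_data) before writing to CSV or SQLite.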

Storing Extracted Data in CSV and SQLite Database

Now, let’s update the main function to handle the extracted data and store it in both CSV and SQLite formats.

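```python
import sqlite3
import pandas as pd
from bs4 import BeautifulSoup
from crawlbase import CrawlingAPI

def scrape_page(api, base_url, keyword, page_number):
    # Same as in the previous section: fetch one search page and extract image URLs
    url = f"{base_url}/search?q={keyword}&page={page_number}"
    response = api.get(url)
    if response['status_code'] != 200:
        print(f"Failed to fetch page {page_number}. Status code: {response['status_code']}")
        return []
    soup = BeautifulSoup(response['body'].decode('utf-8'), 'html.parser')
    return [
        {'image_url': img['src']}
        for img in soup.select('a[data-hook="deviation_link"] img[property="contentUrl"]')
        if img.get('src')
    ]

def initialize_database(database_path='deviantart_data.db'):
    # Create the deviantart_data table (ID and image URL columns) if it does not exist yet
    conn = sqlite3.connect(database_path)
    conn.execute('''
        CREATE TABLE IF NOT EXISTS deviantart_data (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            image_url TEXT
        )
    ''')
    conn.commit()
    conn.close()

def insert_data_into_database(data, database_path='deviantart_data.db'):
    # Insert one row per extracted image URL
    conn = sqlite3.connect(database_path)
    conn.executemany(
        'INSERT INTO deviantart_data (image_url) VALUES (?)',
        [(item['image_url'],) for item in data],
    )
    conn.commit()
    conn.close()

def main():
    # Replace 'YOUR_CRAWLBASE_TOKEN' with your actual Crawlbase API token
    crawlbase_api = CrawlingAPI({'token': 'YOUR_CRAWLBASE_TOKEN'})
    base_url = "https://www.deviantart.com"
    keyword = "fantasy"
    total_pages = 2

    all_data = []
    for page_number in range(1, total_pages + 1):
        all_data.extend(scrape_page(crawlbase_api, base_url, keyword, page_number))

    # Storing the data in a CSV file
    df = pd.DataFrame(all_data)
    df.to_csv('deviantart_data.csv', index=False)

    # Storing the data in an SQLite database
    initialize_database()
    insert_data_into_database(all_data)

if __name__ == "__main__":
    main()
```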

- For CSV storage, the script uses the Pandas library to create a DataFrame df from the extracted data and then writes the DataFrame to the CSV file deviantart_data.csv using the to_csv method.

- For SQLite database storage, the script initializes the database using the initialize_database function and inserts the extracted data into the deviantart_data table using the insert_data_into_database function. The database file deviantart_data.db is created and updated with each run of the script, and it includes the ID and image URL columns for each record.
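Because the database is updated on every run, scraping the same pages twice stores the same URLs twice. One way to avoid that, sketched here as an assumption rather than the script's current behavior, is a UNIQUE constraint combined with INSERT OR IGNORE. save_unique_urls is hypothetical. Note that CREATE TABLE IF NOT EXISTS will not add the constraint to a table that already exists, so apply this to a fresh database file or table.

```python
import sqlite3


def save_unique_urls(conn, image_urls):
    """Insert URLs into deviantart_data, skipping ones already stored; return rows added."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS deviantart_data ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "image_url TEXT UNIQUE)"
    )
    before = conn.total_changes
    # Rows that would violate the UNIQUE constraint are silently skipped
    conn.executemany(
        "INSERT OR IGNORE INTO deviantart_data (image_url) VALUES (?)",
        [(url,) for url in image_urls],
    )
    conn.commit()
    return conn.total_changes - before
```

For example, conn = sqlite3.connect("deviantart_data.db") followed by save_unique_urls(conn, [item["image_url"] for item in all_data]) reports how many new URLs each run actually added.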

deviantart_data.csv preview:

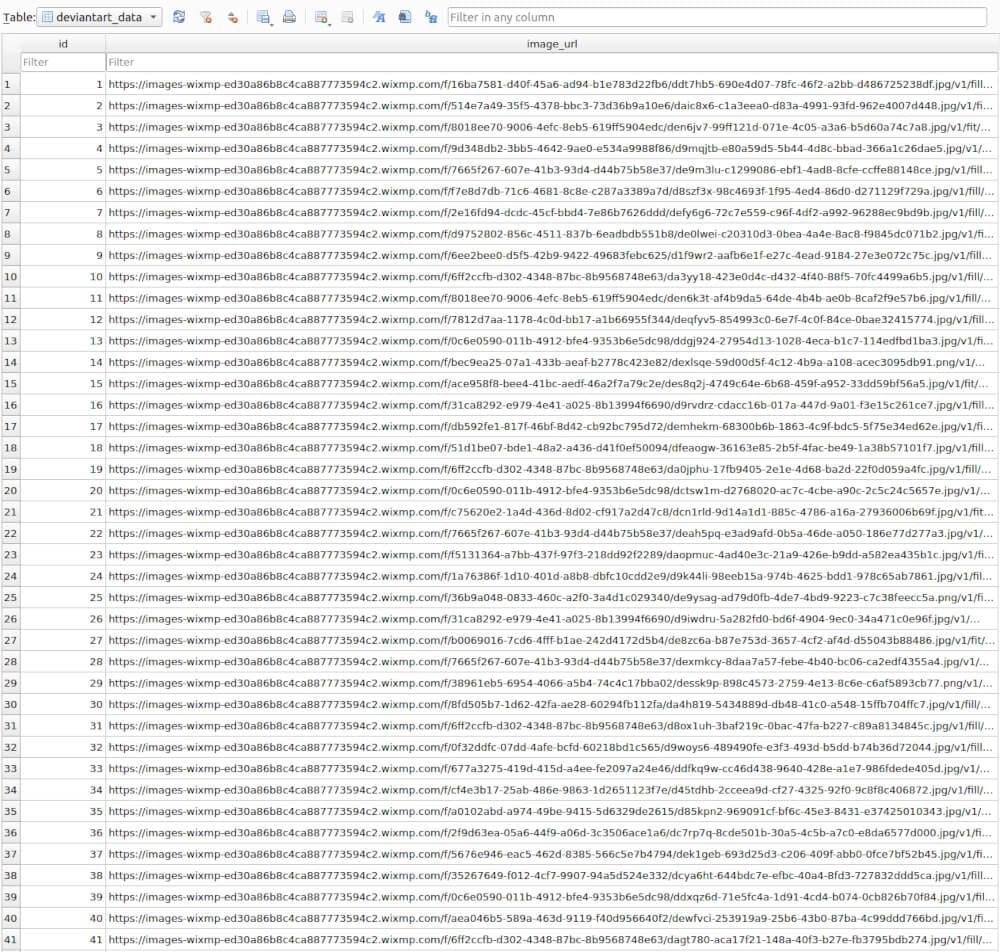

deviantart_data.db preview:

Downloading Images from Scraped Image URLs

This section will guide you through the process of utilizing Python to download images from URLs scraped from DeviantArt, handling potential download errors, and organizing the downloaded images efficiently.

Using Python to Download Images

Python offers a variety of libraries for handling HTTP requests and downloading files. One common and user-friendly choice is the requests library. Below is a basic example of how you can use it to download an image:

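```python
import os
import requests

def download_image(image_url, download_path):
    try:
        # Stream the response so large images are not held in memory all at once
        response = requests.get(image_url, stream=True, timeout=30)
        response.raise_for_status()

        # Make sure the target folder exists before writing
        os.makedirs(os.path.dirname(download_path) or '.', exist_ok=True)
        with open(download_path, 'wb') as file:
            for chunk in response.iter_content(chunk_size=8192):
                file.write(chunk)
        print(f"Image downloaded successfully: {download_path}")
    except requests.exceptions.RequestException as e:
        # Network errors, timeouts, and non-2xx responses end up here
        print(f"Error downloading image from {image_url}: {e}")

# Example usage (use an image URL scraped in the previous section)
# download_image(image_url, 'downloaded_images/fantasy/image_1.jpg')
```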

This function, download_image, takes an image URL and a local path where the image should be saved. It then uses the requests library to download the image.

Organizing Downloaded Images

Organizing downloaded images into a structured directory can greatly simplify further processing. Consider creating a folder structure based on categories, keywords, or any other relevant criteria. Here’s a simple example of how you might organize downloaded images:

```
downloaded_images/
├── fantasy/
│   ├── image_1.jpg
│   └── image_2.jpg
└── another_keyword/
    └── image_1.jpg
```

This organization can be achieved by adjusting the download_path in the download_image function based on the category or any relevant information associated with each image.
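The folder-per-keyword layout can be produced by computing the download path before calling download_image. A sketch; build_download_path is hypothetical. It uses the keyword as the folder name (only spaces are replaced; other characters are not sanitized) and prefixes the file name taken from the URL path with an index, so that two URLs ending in the same file name do not overwrite each other.

```python
import os
from urllib.parse import urlsplit


def build_download_path(root_dir, keyword, image_url, index):
    """Return root_dir/<keyword>/<index>_<filename>, creating the keyword folder if needed."""
    folder = os.path.join(root_dir, keyword.replace(" ", "_"))
    os.makedirs(folder, exist_ok=True)
    # Use the last segment of the URL path (query string dropped) as the file name
    filename = os.path.basename(urlsplit(image_url).path) or "image.jpg"
    return os.path.join(folder, f"{index}_{filename}")
```

Usage: for i, item in enumerate(all_data, start=1), call download_image(item['image_url'], build_download_path('downloaded_images', keyword, item['image_url'], i)).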

With these steps, you’ll be equipped to not only download images from DeviantArt but also handle errors effectively and organize the downloaded images for easy access and further analysis.

Final Words

I hope you can now scrape and download images from DeviantArt using Python and the Crawlbase Crawling API. By working through the DeviantArt Search Pages with Python, you've also learned how to extract image links and organize them effectively.

Whether you’re making a collection of digital art or trying to understand what’s on DeviantArt, it’s important to scrape the web responsibly. Always follow the rules of the platform and be ethical.

Now that you have these useful skills, you can start scraping the web on your own. If you run into any problems, you can ask the Crawlbase support team for help.

Frequently Asked Questions

Q. Is web scraping on DeviantArt legal?

While web scraping itself is generally legal, it's essential to stay within the boundaries set by DeviantArt's terms of service. Use the scraper built in this guide in line with ethical scraping practices, and always review and comply with DeviantArt's guidelines to ensure responsible and lawful use.

Q. How can I handle pagination when scraping DeviantArt?

Managing pagination in DeviantArt involves constructing URLs for various pages in the search results. The guide illustrates how to adjust API requests for multiple pages, enabling a smooth traversal through the DeviantArt Search Pages. This ensures comprehensive data retrieval for a thorough exploration.

Q. Can I customize the data I scrape from DeviantArt?

Absolutely. The guide provides insights into inspecting the HTML structure of DeviantArt Search Pages and leveraging CSS selectors. This customization empowers you to tailor your data extraction, allowing you to focus on specific information like image URLs. Adapt the scraping logic to suit your individual needs and preferences.

Q. What are the benefits of storing data in both CSV and SQLite formats?

Storing data in CSV and SQLite formats offers a versatile approach. CSV facilitates easy data sharing and analysis, making it accessible for diverse applications. On the other hand, SQLite provides a lightweight database solution, ensuring efficient data retrieval and management within your Python projects. This dual-format approach caters to different use cases and preferences.