Glassdoor is a top job and recruiting platform focused on workplace transparency. It provides tools for job seekers to make informed career decisions. The platform stands out by collecting insights directly from employees, offering authentic information about companies.

Glassdoor features millions of job listings and a growing database of company reviews, covering CEO ratings, salaries, interviews, benefits, and more. Because so many people use it for job searches and company research, extracting its data can be useful for a wide range of purposes.

In this blog, we will explore how to scrape data from Glassdoor using JavaScript, Cheerio, and Crawlbase, so the results can support research, analysis, and decision-making in job seeking and recruitment. Let’s begin.

Table of Contents

I. Why Scrape Glassdoor?

II. Understanding Glassdoor Data Structure

III. Prerequisites

IV. Setting Up the Glassdoor Scraper Project

V. Fetching HTML using the Crawling API

VI. Scraping Job Details from the HTML response

VII. Saving the scraped data to a JSON file

VIII. Conclusion

IX. Frequently Asked Questions (FAQ)

I. Why Scrape Glassdoor?

Web scraping Glassdoor data is a strategic move for individuals and organizations aiming to gain insight into the job market and the corporate landscape. At the time of writing, Glassdoor still attracted an average of over 40 million visitors per month despite a 12% drop in traffic compared to the previous month, and it remained among the top 5 sites in its peer group. Those numbers show its continued relevance in the online job and recruiting landscape.

The diverse range of data available on Glassdoor provides comprehensive information about companies, job opportunities, compensation trends, and industry dynamics.

Here’s why scraping Glassdoor is valuable:

- Comprehensive Company Information:
  - Obtain details about companies, including size, location, industry, and revenue.
  - Gain a holistic view of the corporate landscape for business decisions, market analysis, and competitor research.

- Expansive Job Listings:
  - Access a vast repository of job listings across various industries and sectors.
  - Analyze job market trends, identify emerging roles, and tailor job searches based on specific criteria.

- Salary and Compensation Insights:
  - Gain valuable data on salary averages for different job titles.
  - Explore details about benefits and perks offered by companies, aiding in compensation benchmarking and negotiation.

- Company Reviews and Ratings:
  - Tap into employee reviews and ratings to understand company culture, leadership, and overall employee satisfaction.
  - Make informed decisions about potential employers or assess the reputational impact of a company.

- Interview Preparation:
  - Access information about common interview questions and tips shared by Glassdoor users.
  - Enhance interview preparedness by understanding the experiences of individuals who have gone through the interview process.

- Industry Trends and Insights:
  - Leverage Glassdoor’s extensive database to uncover insights into industry trends.
  - Identify shifts in job demand, predict future growth areas, and stay informed about changes within specific industries.

II. Understanding Glassdoor Data Structure

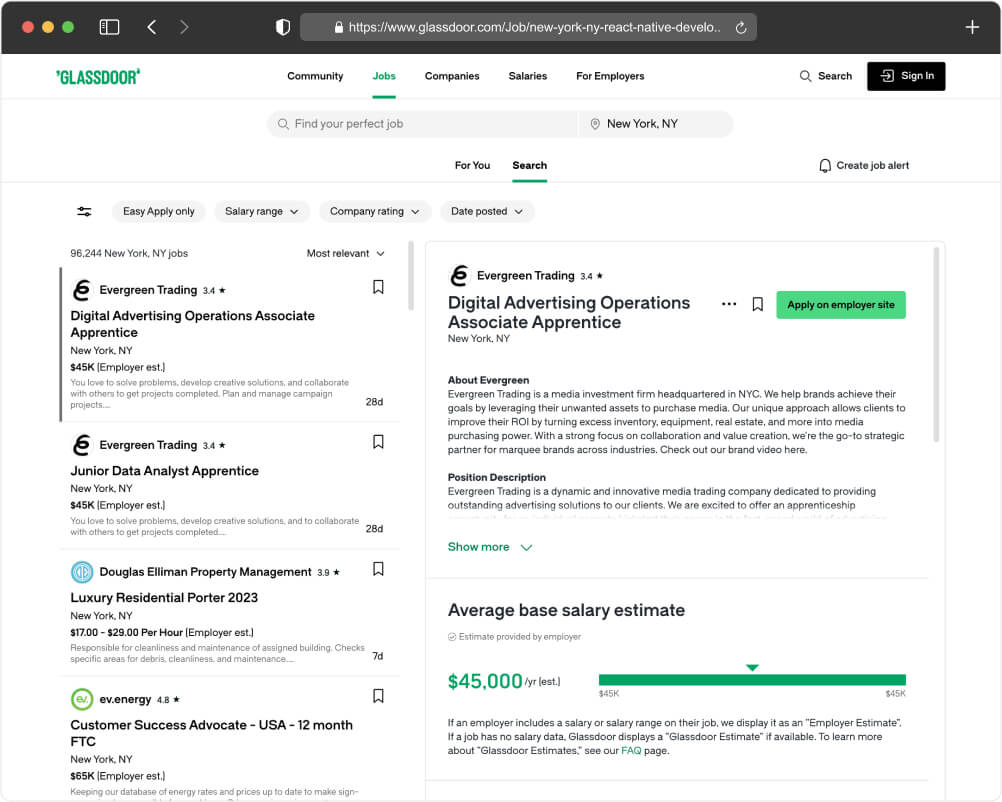

Before diving into the code for our Glassdoor scraper, it’s important to understand where the valuable data resides within the HTML page. The image above illustrates the key elements we can extract information from, including Company Name, Reviews, Location, Salary, and Post Date.

Key Data Points:

- Company Name:
  - Located prominently, the company name is crucial for identifying the hiring entity.

- Reviews:
  - The reviews section provides insights into the employee experiences and overall satisfaction with the company.

- Location:
  - Clearly indicated, the location specifies where the job opportunity is based.

- Salary:
  - The salary information, when available, provides an estimate of the compensation range for the position.

- Post Date:
  - Indicates how long ago the job listing was posted, offering a sense of urgency or relevance.

By targeting these elements in our scraping code, we can extract detailed information about each job listing, allowing users to make informed decisions in their job search or analysis of the job market.
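Before writing any scraping code, it can help to fix the shape of one extracted listing. The sketch below is a minimal, hypothetical record for the data points above; the field names and the `createJobListing` helper are our own illustrative choices, not a schema defined by Glassdoor or Crawlbase. We also assume the Reviews data point is captured as the company’s numeric star rating. The helper only tidies values that have already been pulled out of the page.

```javascript
// Hypothetical record shape for one Glassdoor job listing.
// Field names are illustrative assumptions, not a Glassdoor schema.
function createJobListing({ company, rating, location, salary, postDate }) {
  // Collapse runs of whitespace that often surround text pulled from HTML.
  // Non-string input (e.g. a missing field) becomes null.
  const clean = (value) =>
    typeof value === 'string' ? value.replace(/\s+/g, ' ').trim() : null;

  // Assumption: the "Reviews" data point is stored as a star rating such as "4.2".
  const parsedRating = Number.parseFloat(rating);

  return {
    company: clean(company),
    // Keep the rating numeric when it parses, otherwise record it as missing.
    rating: Number.isFinite(parsedRating) ? parsedRating : null,
    location: clean(location),
    // Salary is not shown on every listing, so an empty value becomes null.
    salary: clean(salary) || null,
    // Kept as the raw relative text (for example "3d") shown on the listing.
    postDate: clean(postDate),
  };
}
```

Storing a missing salary as `null` rather than an empty string makes listings without salary data easy to filter out later.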

Understanding the structure of Glassdoor’s HTML page is what lets us pinpoint the HTML tags and classes that hold the data we want. In the next sections, we will translate this understanding into code that extracts these details from Glassdoor’s job listings.

III. Prerequisites

Now that we have identified the data we are targeting and its location on the Glassdoor website, let’s take a moment to briefly go over the prerequisites for our Glassdoor scraper project.

Basic Knowledge of JavaScript and Node.js:

- Ensure you have a foundational understanding of JavaScript, the programming language that will drive our web scraping efforts. Additionally, have Node.js installed on your machine to execute JavaScript code outside of a web browser.

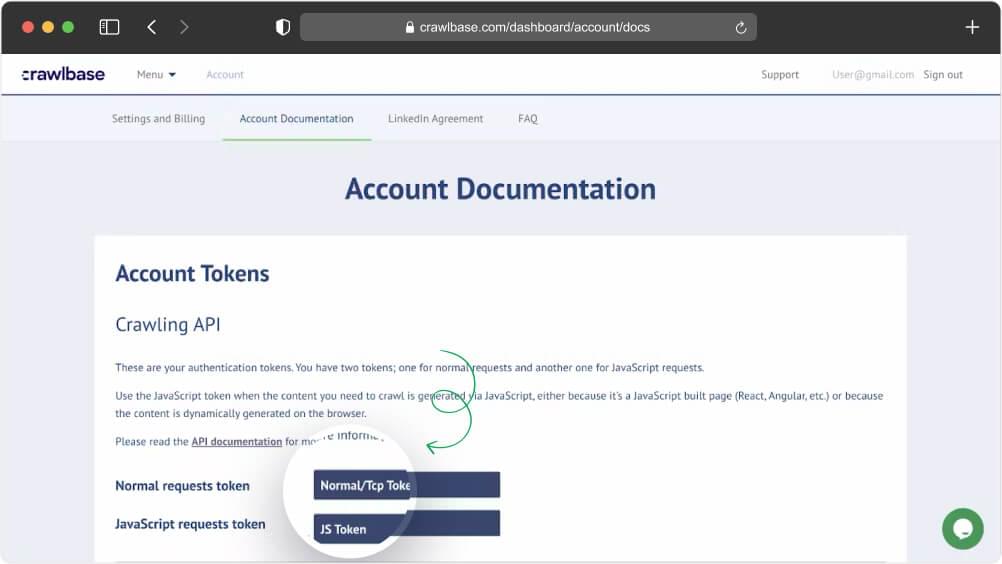

Active Crawlbase API Account with API Credentials:

- Sign up for a Crawlbase account and get your API credentials from the account documentation page. For this project, we will use the JavaScript token for the Crawlbase Crawling API, which loads the page in a browser so that content rendered by JavaScript is included in the HTML we receive. This lets us crawl the full content of the Glassdoor page and extract the data we need.

Node.js Installed:

- Confirm that Node.js is installed on your development machine. You can download and install the latest version of Node.js from the official website: Node.js Downloads.

Familiarity with Express.js for Creating an Endpoint:

- Express.js, a popular Node.js framework, will be utilized to create an endpoint for handling web scraping requests. Familiarize yourself with Express.js to seamlessly integrate the scraping functionality into your web application.
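With the JavaScript token from your Crawlbase account in hand, one common way to keep it out of your source code is to read it from an environment variable. The sketch below assumes a variable named `CRAWLBASE_JS_TOKEN`; that name and the `getCrawlbaseToken` helper are hypothetical, so use whatever fits your setup.

```javascript
// Read the Crawlbase JavaScript token from an environment variable instead of
// hard-coding it in scraper.js. CRAWLBASE_JS_TOKEN is a name chosen for this
// sketch; Crawlbase does not require any particular variable name.
function getCrawlbaseToken(env = process.env) {
  const token = env.CRAWLBASE_JS_TOKEN;

  // Fail early with a clear message rather than sending a request with no token.
  if (!token || !token.trim()) {
    throw new Error(
      'Missing CRAWLBASE_JS_TOKEN. Copy your JavaScript token from your Crawlbase account and set it before running scraper.js.'
    );
  }
  return token.trim();
}
```

On macOS or Linux you could then start the scraper with `CRAWLBASE_JS_TOKEN=your_token node scraper.js`.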

IV. Setting Up the Glassdoor Scraper Project

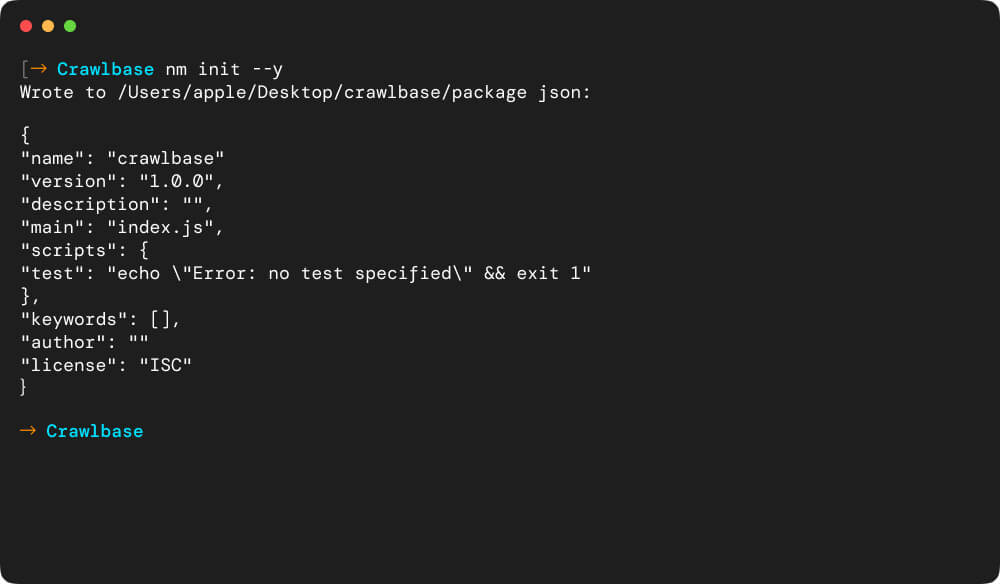

Let’s kick off our Glassdoor scraper project by initializing a new npm project and executing a series of commands to get everything up and running:

Create a New Directory:

```bash
mkdir glassdoor-scraper
cd glassdoor-scraper
```

These commands create an empty directory named “glassdoor-scraper” and move into it, so the following commands run inside the project folder.

Initialize Node.js Project:

```bash
npm init -y
```

This command swiftly initiates a new Node.js project, bypassing the interactive setup by accepting default values.

Create Scraper.js File:

```bash
touch scraper.js
```

The touch command generates an empty file named “scraper.js” in the current directory.

Install Essential Dependencies:

In your Node.js project, install the necessary dependencies to set up the web scraping environment. These include:

- Cheerio: A powerful library for HTML parsing, enabling data extraction from web pages.

- Express (Optional): If you plan to create an endpoint for receiving scraped data, you can use the Express.js framework to set up your server.

- Crawlbase Node Library (Optional): This package facilitates interaction with the Crawlbase Crawling API, efficiently fetching HTML content from websites.

Execute the following command to install these dependencies:

```bash
npm i express crawlbase cheerio
```

With these steps, you’ve initiated your Glassdoor scraper project, created the necessary files, and installed essential dependencies for effective web scraping. Next, we’ll delve into writing the actual scraper code.

V. Fetching HTML using the Crawling API

Now that we have our API credentials and the essential dependencies installed, it’s time to put the Crawlbase Crawling API to work. It lets us send HTTP requests, capture the raw HTML of Glassdoor’s job listing page, and hand that HTML to the data extraction step.
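Under the hood, a Crawling API call is an ordinary HTTPS GET request. The sketch below skips the crawlbase package and builds that request directly, assuming the API’s base URL `https://api.crawlbase.com/` with `token` and `url` query parameters as described in the Crawling API documentation; verify these against the current docs. The helper names `buildCrawlingApiUrl` and `fetchHtml` are hypothetical, and the global `fetch` requires Node.js 18 or later.

```javascript
// Minimal sketch: call the Crawlbase Crawling API over plain HTTPS instead of
// through the crawlbase npm package. Assumes the base URL below and the
// `token` and `url` query parameters from the Crawling API documentation.
const CRAWLING_API_BASE = 'https://api.crawlbase.com/';

function buildCrawlingApiUrl(token, targetUrl) {
  const requestUrl = new URL(CRAWLING_API_BASE);
  // searchParams percent-encodes the target URL, so its own query string
  // is not confused with the API's parameters.
  requestUrl.searchParams.set('token', token);
  requestUrl.searchParams.set('url', targetUrl);
  return requestUrl.toString();
}

// Fetch the raw HTML of a page through the Crawling API.
// Requires Node.js 18+ for the global fetch function.
async function fetchHtml(token, targetUrl) {
  const response = await fetch(buildCrawlingApiUrl(token, targetUrl));
  if (!response.ok) {
    throw new Error(`Crawling API request failed with HTTP ${response.status}`);
  }
  return response.text();
}
```

The crawlbase package remains the simpler route for this tutorial; the sketch only shows what the underlying request looks like.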

Copy the complete code below and save it in your scraper.js file:

```javascript
const express = require('express');
```

What’s Happening:

- Express Setup: We’ve configured an Express app to handle requests.

- Crawling API Instance: The Crawlbase API is initialized with your JavaScript token, which gives the scraper access to its crawling capabilities.

- Scraping Endpoint: A `/scrape` endpoint is defined to receive requests. When a URL is provided, it triggers the Crawlbase API to fetch the HTML.

- Raw HTML Logging: For now, the fetched HTML is simply logged; it becomes the input for the data extraction step that follows.

Let’s execute the above code by running the command:

```bash
node scraper.js
```

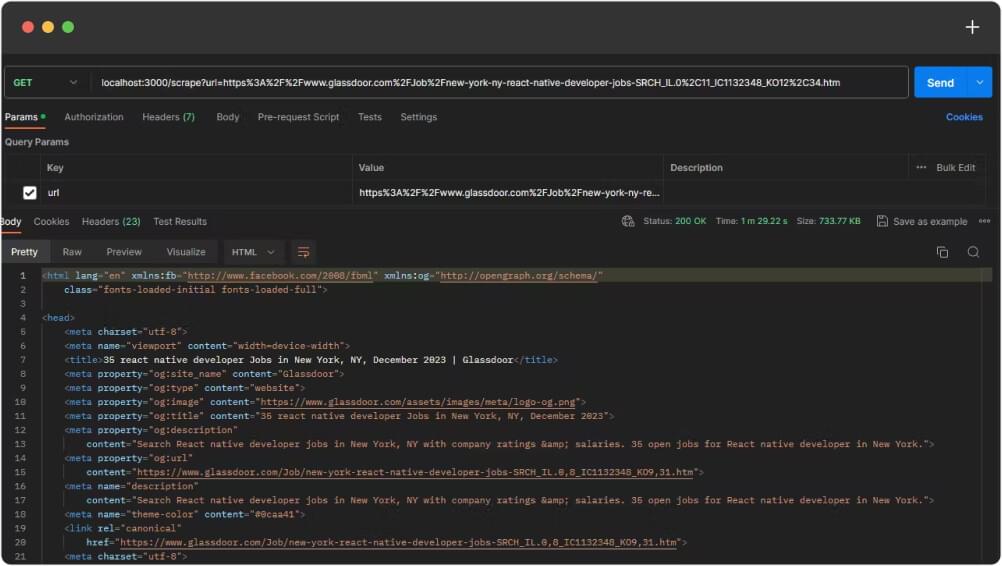

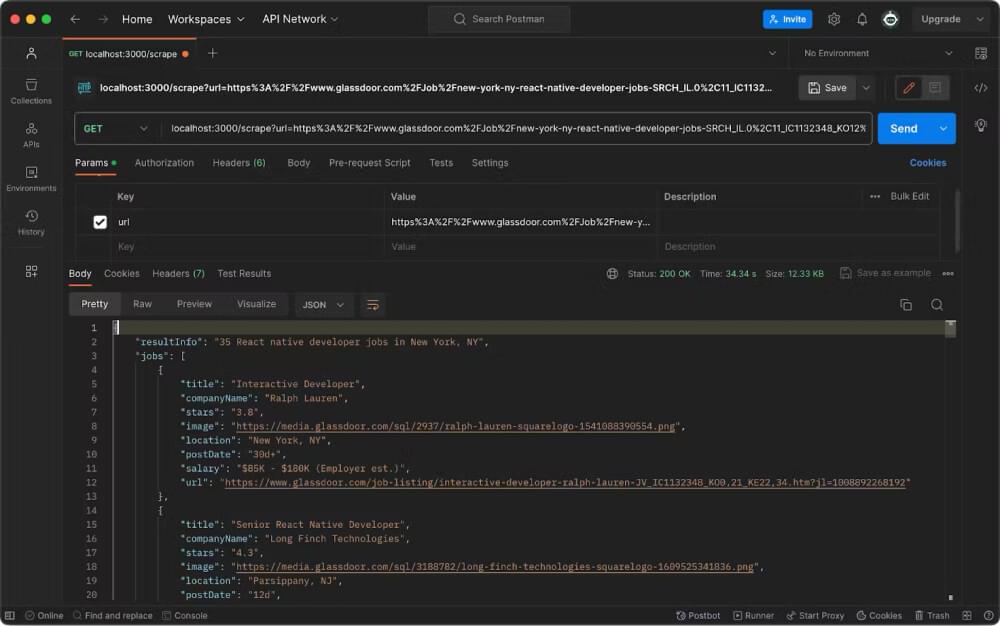

The code above starts a local server and sets up the /scrape route. At this point, we can use Postman to send a GET request that crawls the complete HTML of the target URL.

Here are the steps:

1. Download and install Postman.

2. Launch the Postman application.

3. Create a new request by clicking the “New” button and choosing a request name (e.g., “Crawlbase API”).

4. Select the HTTP method for the request. In this case, choose GET.

5. Enter the local server scrape route: localhost:3000/scrape

6. Under Query Params, add the URL key with your target URL as the value.

7. Encode your target URL by highlighting it, clicking the meatballs menu, and selecting Encode URI.

8. Click the “Send” button to execute the request.

9. After sending the request, you’ll see the response below the request details, including the status code, headers, and response body.

10. Examine the response body to make sure it contains the expected data. You can switch between views such as “Pretty,” “Raw,” and “Preview” to inspect it more easily.
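If you want to script the request instead of clicking through Postman, the sketch below builds the same URL. It assumes the server listens on port 3000, as in the steps above, and that the target URL is passed in a query parameter named `url`; adjust both to match your scraper.js. `buildScrapeRequestUrl` and `requestScrape` are hypothetical helper names.

```javascript
// Build the same request Postman sends: GET /scrape on the local server with
// the target URL passed as an encoded query parameter. The parameter name
// (url) is an assumption and must match what scraper.js reads.
function buildScrapeRequestUrl(targetUrl, { host = 'localhost', port = 3000 } = {}) {
  // encodeURIComponent plays the role of the manual encoding step in Postman:
  // characters such as ?, & and = in the target URL are percent-encoded.
  return `http://${host}:${port}/scrape?url=${encodeURIComponent(targetUrl)}`;
}

// Optional: send the request from Node.js (18+) instead of Postman.
// The scraper from the previous step must already be running.
async function requestScrape(targetUrl) {
  const response = await fetch(buildScrapeRequestUrl(targetUrl));
  console.log('Status:', response.status);
  return response.text();
}
```

Without the encoding, the `?` and `&` inside a Glassdoor URL would be read as parameters of the local `/scrape` route rather than as part of the target URL.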

VI. Scraping Job Details from the HTML response

After fetching the HTML content with the Crawlbase API, we can turn to extracting the key job details from this raw data: job titles, salaries, company names, locations, and post dates. The code below walks through this process, using Cheerio to navigate the HTML structure and pinpoint the information we need.

Copy the complete code below and save it in your scraper.js file:

```javascript
// Import required modules
```

Highlights of the Code:

- Module Imports: Essential modules, including Express, Cheerio, and the Crawlbase API, are imported to kickstart the process.

- Crawlbase API Initialization: The Crawlbase API is initialized with your token, paving the way for seamless interactions.

- Express App Setup: The Express app is set up to handle incoming requests on the designated endpoint.

- Data Parsing with Cheerio: The `parseDataFromHTML` function uses Cheerio to navigate the HTML structure and extract the essential job details.

- Scraping Endpoint Execution: When a request is made to the `/scrape` endpoint, the Crawlbase API fetches the HTML, and the parsed data is sent as a JSON response.

- Server Activation: The Express server is started, ready to transform HTML into meaningful job details.
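The flow described in these highlights can be separated from Express so it is easier to test. The sketch below is a framework-agnostic version under our own assumptions: the query parameter is named `url`, and the fetching and parsing steps are passed in as functions (`fetchHtml` and `handleScrape` are hypothetical names; `parseDataFromHTML` is the article’s parser). It is not the article’s exact code.

```javascript
// Framework-agnostic sketch of the /scrape flow: validate the query, fetch the
// HTML, parse it, and return a status plus a JSON-ready body. fetchHtml and
// parseJobs are injected so the flow can be exercised without network access.
async function handleScrape(query, { fetchHtml, parseJobs }) {
  const targetUrl = query && query.url;
  if (!targetUrl) {
    return { status: 400, body: { error: 'Missing required "url" query parameter' } };
  }

  try {
    const html = await fetchHtml(targetUrl);
    const jobs = parseJobs(html);
    return { status: 200, body: jobs };
  } catch (error) {
    // Report any failure while fetching or parsing as a 500 with its message.
    return { status: 500, body: { error: error.message } };
  }
}

// Wiring it into Express would look roughly like this (not executed here):
// app.get('/scrape', async (req, res) => {
//   const result = await handleScrape(req.query, { fetchHtml, parseJobs: parseDataFromHTML });
//   res.status(result.status).json(result.body);
// });
```

Keeping the flow in a plain function means you can check the error paths (missing URL, failed request) without starting a server.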

Use Postman once again to get the JSON response:

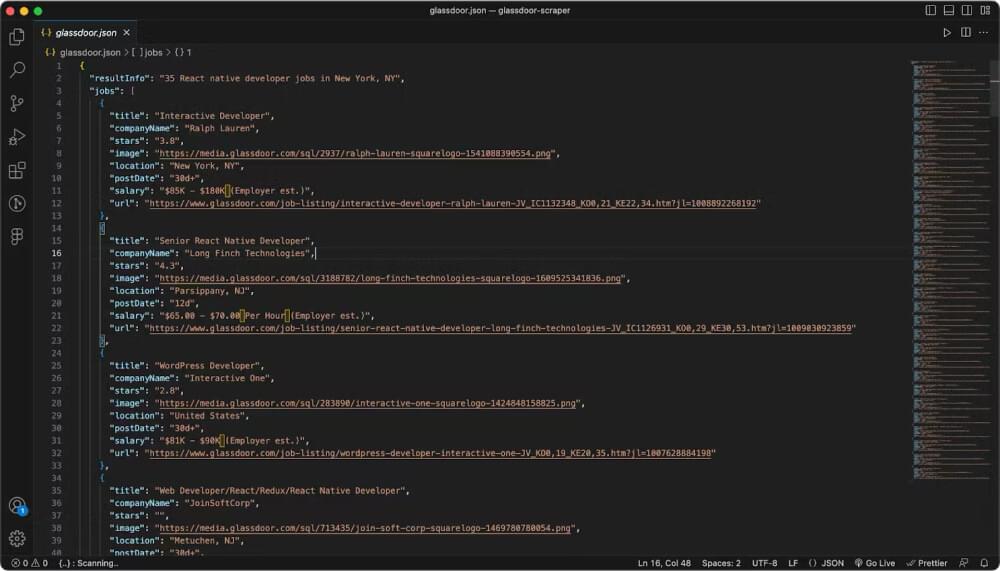

VII. Saving the scraped data to a JSON file

While the primary goal of web scraping is to extract valuable data, it’s equally important to know how to preserve these insights for future reference or analysis. In this optional step, we’ll explore how to save the scraped job details to a JSON file. Keep in mind that this is just one approach, and users have the flexibility to save the data in various formats, such as CSV, databases, or any other preferred method.

To save the data in a JSON file, we will use the fs module, which is a built-in module in Node.js:

```javascript
// Import fs module
```
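As a minimal sketch of this step, the function below uses `fs.writeFileSync` and `JSON.stringify` to write an array of job objects to disk. The `saveJobsToJson` name and the default file name `glassdoor-jobs.json` are hypothetical choices, not taken from the original code.

```javascript
const fs = require('fs');
const path = require('path');

// Write scraped job details to a pretty-printed JSON file and return the
// absolute path of the file that was written.
// The default file name (glassdoor-jobs.json) is an illustrative choice.
function saveJobsToJson(jobs, filePath = 'glassdoor-jobs.json') {
  // Two-space indentation keeps the file readable when opened by hand.
  const json = JSON.stringify(jobs, null, 2);
  fs.writeFileSync(filePath, json, 'utf8');
  return path.resolve(filePath);
}
```

Inside the `/scrape` handler you would call it with the parsed jobs right before sending the JSON response. On a busy server, the non-blocking `fs.promises.writeFile` is a better fit, since `writeFileSync` blocks the event loop while it writes.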

Here’s the complete code you can copy and save to your scraper.js file:

```javascript
// Import required modules
```

Sending the request from Postman now not only returns the Glassdoor data but also saves it as a JSON file on your machine.
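Since CSV was mentioned as an alternative format, here is a small sketch of converting the same job objects to CSV text without extra dependencies. It assumes every job object has the same flat set of keys; `jobsToCsv` is a hypothetical helper, and its output can be written with `fs.writeFileSync` just like the JSON file.

```javascript
// Convert an array of flat job objects into CSV text, as an alternative to JSON.
// Column names come from the first object's keys; a uniform shape is assumed.
function jobsToCsv(jobs) {
  if (jobs.length === 0) return '';

  const columns = Object.keys(jobs[0]);
  // Quote every field and double embedded quotes (RFC 4180-style quoting), so
  // commas in values such as "Austin, TX" do not split a column.
  // null and undefined become empty fields.
  const escape = (value) => `"${String(value ?? '').replace(/"/g, '""')}"`;

  const header = columns.map(escape).join(',');
  const rows = jobs.map((job) => columns.map((col) => escape(job[col])).join(','));
  return [header, ...rows].join('\n');
}
```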

VIII. Conclusion

This web scraping project succeeded thanks to the robust Crawling API provided by Crawlbase. By integrating this powerful tool, we’ve unlocked the potential to effortlessly retrieve and analyze valuable job data from Glassdoor.

As you explore the code, treat it as a starting point rather than a finished tool. Tailor it to your project’s requirements: refine it, explore additional features, or integrate it into your existing projects.

Whether you’re a seasoned developer or just starting out, there is plenty of room for improvement and customization, and working through those changes is a good way to keep learning.

If you are interested in similar projects, check out more of our blogs below:

Web Scrape Expedia Using JavaScript

Web Scrape Booking Using JavaScript

How to Build a YouTube Channel Scraper in JS

If you encounter any challenges or have questions while working with Crawlbase or implementing this code, don’t hesitate to reach out to the Crawlbase support team. Happy coding!

IX. Frequently Asked Questions (FAQ)

1. Can I build a Glassdoor web scraper using a different programming language?

Certainly! While this guide focuses on JavaScript with Cheerio, you can implement a Glassdoor web scraper using various programming languages. Adapt the code and techniques based on your language of choice and the tools available.

2. Is Crawlbase compatible with other languages?

Yes! Crawlbase offers libraries and SDKs for multiple programming languages, making integration seamless. Explore the Crawlbase libraries for easier integration with your preferred language: Crawlbase Libraries & SDK.

3. Will I get blocked while crawling Glassdoor?

Crawlbase is designed to minimize that risk. The platform uses AI-based systems and rotating proxies to help your web scraper avoid blocks and CAPTCHAs, so you can focus on extracting data rather than handling interruptions.

4. What are the limitations of the Glassdoor API?

The Glassdoor API, while providing valuable insights into company data, has limitations such as restricted public access and specific endpoint requirements. Users must adhere to a prescribed attribution and be mindful of version dependencies. The format support is limited to JSON, with future plans for XML.

In contrast, Crawlbase’s Crawling API offers more freedom in data extraction, letting users define the data they want to scrape without endpoint restrictions. It is format-agnostic and has no attribution requirements, which adds flexibility, and integration is often more straightforward. Crawlbase also handles cross-domain scraping without relying on JSONP callbacks. Ultimately, the choice between Glassdoor’s API and a crawling API depends on your project requirements, with a crawling API offering greater customization and ease of integration.