Are you interested in unlocking the hidden insights within Amazon’s vast product database? If so, you’ve come to the right place. In this step-by-step Amazon data scraping guide, we will walk you through the process of scraping Amazon product data and harnessing its power for business growth. We’ll cover everything from understanding the importance of product data to handling CAPTCHAs and anti-scraping measures. So grab your tools and get ready to dive into the world of Amazon data scraping!

We use the Crawlbase Crawling API with JavaScript (Node.js) to scrape Amazon product data efficiently. JavaScript handles the requests and the processing of the results, while the API deals with Amazon’s anti-scraping mechanisms, which keeps data collection smooth. The end result will be a wealth of Amazon product data, neatly organized in both HTML and JSON formats.

Table of Contents

- Exploring the Anatomy of an Amazon Product Page

- Scrape Amazon Product Data with Crawlbase Crawling API

- Scrape Key Amazon Product Data Content with Crawlbase Scrapers

- Scrape Amazon Product Reviews with Crawlbase’s Integrated Scraper

- Overcoming Amazon Product Data Scraping Challenges with Crawlbase Crawling API

- Applications of Scraped Amazon Product Data

- Conclusion

- Frequently Asked Questions

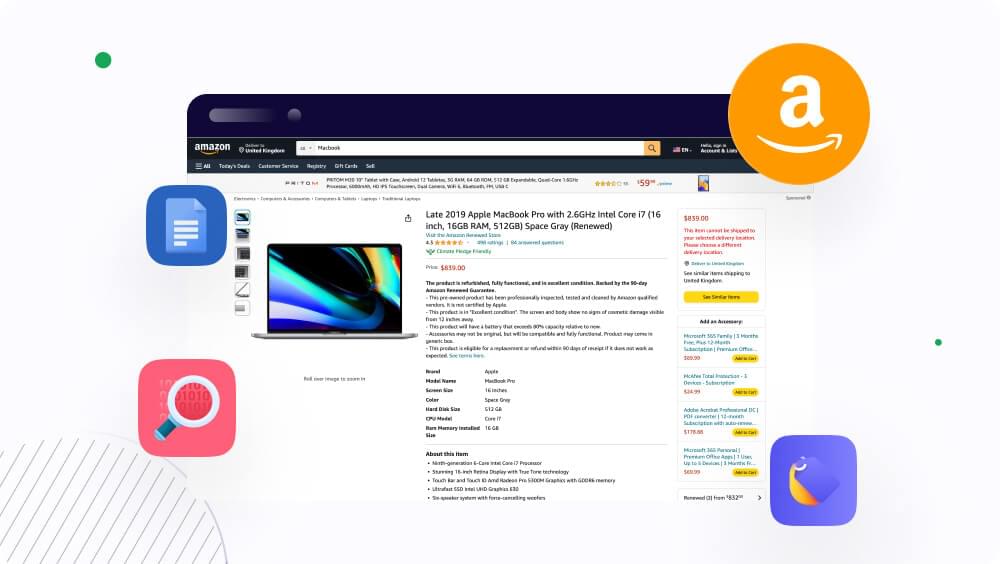

Exploring the Anatomy of an Amazon Product Page

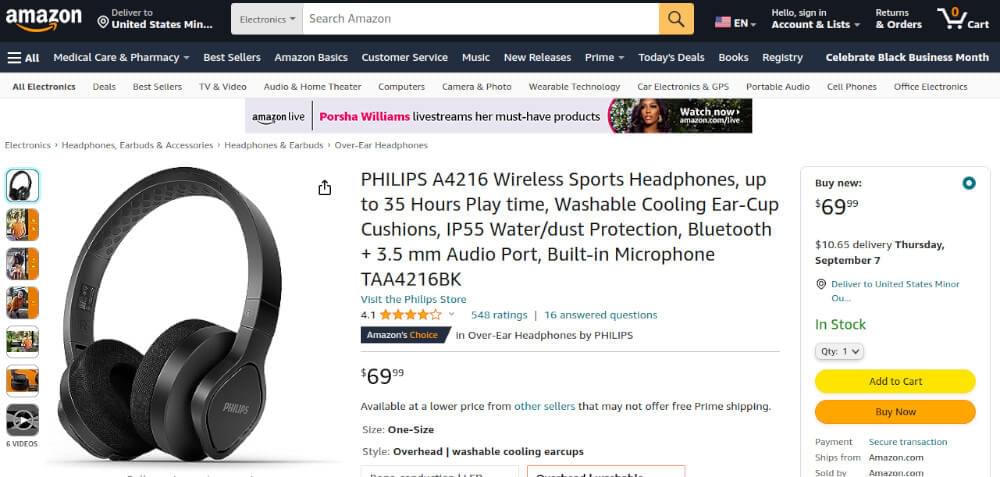

An Amazon product page serves as a digital storefront where customers can explore and make informed decisions about purchasing a wide range of products. These pages are carefully organized to offer vital details, attractive visuals, and a smooth shopping experience. Let’s look at the key components that make up an Amazon product page.

- Product Title and Images:

The product title is the first thing that catches a shopper’s eye. It concisely describes the item and its primary features. Alongside the title, you’ll find high-quality images displaying the product from different angles, giving potential buyers a visual sense of what they’re considering.

- Price and Purchase Options:

The price is prominently displayed, along with any available discounts or deals. Customers can also choose product variations, such as size, color, or quantity, directly from this section.

- Product Description:

In this section, a detailed product description provides valuable information about the item’s specifications, features, and benefits. It helps customers understand whether the product meets their needs and expectations.

- Customer Reviews and Ratings:

Genuine customer reviews and ratings offer insights into the product’s real-world performance and quality. Shoppers can read about others’ experiences, making it easier to make an informed decision.

- Q&A and Customer Interactions:

Customers can ask questions about the product, and both the seller and other customers can provide answers. This interactive section addresses uncertainties and provides additional information.

- Product Specifications:

Technical details such as dimensions, materials used, and compatibility are listed here. This information helps customers determine whether the product suits their specific requirements.

- Related Products and Recommendations:

Amazon often suggests related or complementary products based on the customer’s browsing and purchase history. This section encourages upselling and cross-selling.

- Add to Cart and Buy Now:

Customers can add the product to their cart or use the “Buy Now” option for immediate purchase. These actions initiate the checkout process.

- Shipping and Delivery Information:

Details about shipping options, estimated delivery times, and associated costs are provided to manage customer expectations.

Scrape Amazon Product Data with Crawlbase Crawling API

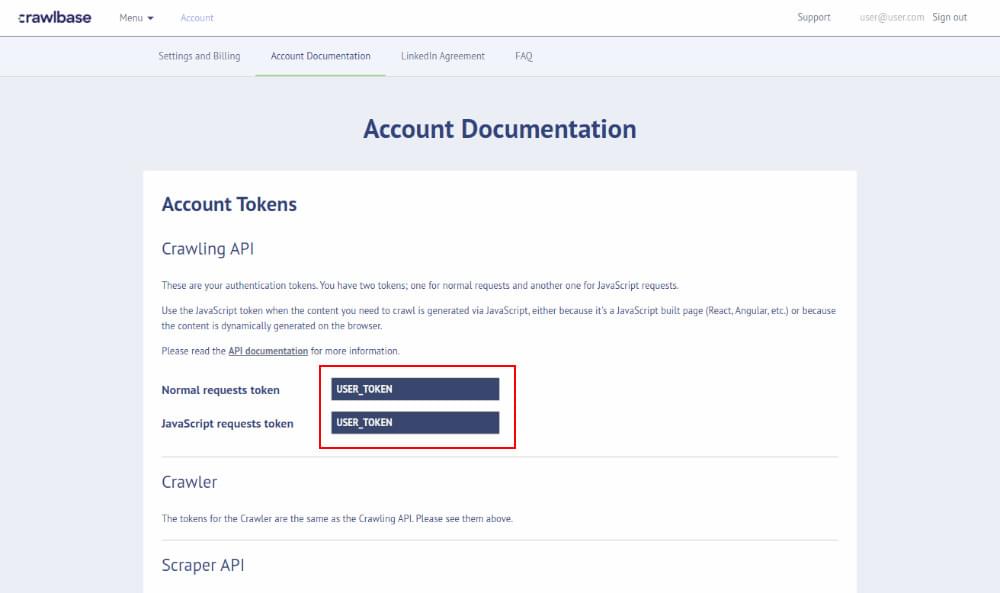

Step 1: Sign up for Crawlbase and get your private token. You can find this token in the Crawlbase account documentation section within your account.

Step 2: Choose the specific Amazon product page that you want to scrape. For this guide, we chose the Amazon product page for the PHILIPS A4216 Wireless Sports Headphones (https://www.amazon.com/dp/B099MPWPRY). Picking a page with a variety of elements helps showcase the versatility of the scraping process.

Step 3: Install the Crawlbase Node.js library.

First, confirm that Node.js is installed on your system. If it isn’t, download and install it from the official Node.js website, then install the Crawlbase Node.js library via npm:

```bash
npm i crawlbase
```

Step 4: Create the amazon-product-page-scraper.js file with the following command:

```bash
touch amazon-product-page-scraper.js
```

Step 5: Configure the Crawlbase Crawling API by supplying your token and the URL of the page you want to crawl. Paste the following script into the amazon-product-page-scraper.js file you created in Step 4, then run it by entering node amazon-product-page-scraper.js in the terminal:

```javascript
// Import the Crawling API
```
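A minimal sketch of such a script follows. It assumes the crawlbase package exports a CrawlingAPI class whose get(url) method returns a promise resolving to an object with statusCode and body, and it reads your token from a CRAWLBASE_TOKEN environment variable, a name chosen for this sketch rather than one Crawlbase requires. The fetch logic takes the client as a parameter, so it can be tried with a stand-in object.

```javascript
// Sketch: fetch the raw HTML of an Amazon product page through the Crawling API.
// Assumes the `crawlbase` npm package exposes `CrawlingAPI` with a promise-based
// `get(url)` that resolves to `{ statusCode, body }`.

const TARGET_URL = "https://www.amazon.com/dp/B099MPWPRY";

// Fetch a page with any client that has a compatible `get` method and
// return its HTML, failing loudly on a non-200 status.
async function fetchProductHtml(api, url) {
  const response = await api.get(url);
  if (response.statusCode !== 200) {
    throw new Error(`Request failed with status ${response.statusCode}`);
  }
  return response.body;
}

async function main() {
  // CRAWLBASE_TOKEN is a hypothetical variable name used for this sketch.
  const token = process.env.CRAWLBASE_TOKEN;
  if (!token) {
    console.error("Set CRAWLBASE_TOKEN to your private Crawlbase token.");
    return;
  }
  // Loaded lazily so the helper above works even without the package installed.
  const { CrawlingAPI } = require("crawlbase");
  const api = new CrawlingAPI({ token });
  const html = await fetchProductHtml(api, TARGET_URL);
  console.log(html); // raw, unformatted HTML of the product page
}

main().catch((error) => console.error(error));
```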

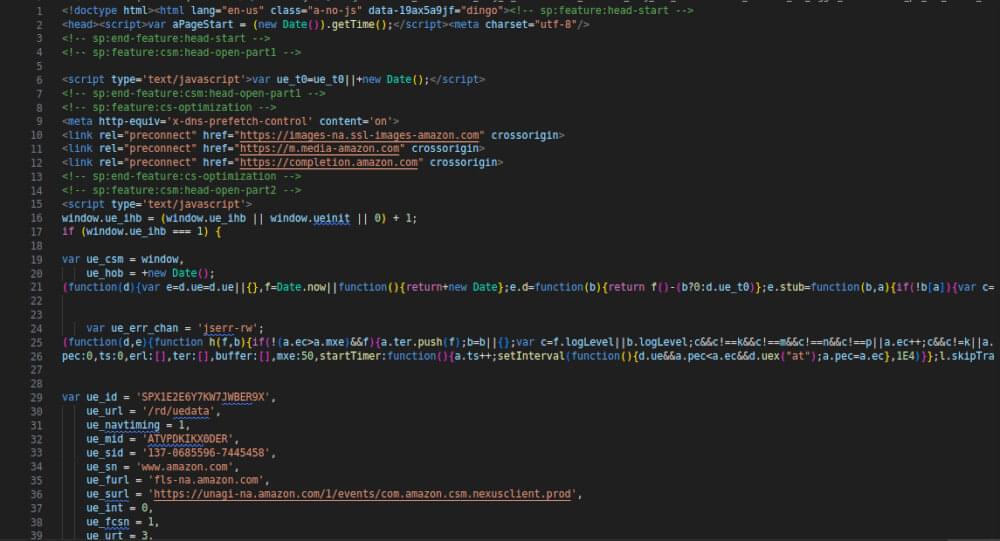

The above script shows how to use Crawlbase’s Crawling API to access and retrieve data from an Amazon product page: it sets up the API token, defines the target URL, and makes a GET request. The output of this code is the raw HTML content of the specified Amazon product page (https://www.amazon.com/dp/B099MPWPRY), which the console.log(response.body) line prints to the console as unformatted HTML.

Scrape Key Amazon Product Data Content with Crawlbase Scrapers

In the example above, we only retrieved the basic structure of the Amazon product page (the raw HTML). Often we don’t need this raw data; instead, we want the important content from the page. No worries! The Crawlbase Crawling API has built-in Amazon scrapers that extract the key content from Amazon pages. To use them, we add a “scraper” parameter to the Crawling API request, and the API returns the important parts of the page in JSON format. We’ll keep editing the same amazon-product-page-scraper.js file. Let’s look at an example to get a better picture:

```javascript
// Import the Crawling API
```
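A minimal sketch of the same script with the scraper parameter added. The scraper name amazon-product-details is an assumption based on Crawlbase’s naming for its Amazon scrapers, so check it against your Crawlbase documentation; the sketch also assumes that get(url, options) forwards options such as scraper to the API and that the response body is a JSON string. CRAWLBASE_TOKEN is again a hypothetical environment variable name.

```javascript
// Sketch: request parsed product data (JSON) instead of raw HTML.
// Assumptions: `get(url, options)` forwards `scraper` to the Crawling API,
// and "amazon-product-details" is the name of the product-page scraper.

const TARGET_URL = "https://www.amazon.com/dp/B099MPWPRY";
const SCRAPER = "amazon-product-details"; // assumed scraper name

// Request a page with the scraper enabled and return the parsed JSON.
async function fetchProductJson(api, url) {
  const response = await api.get(url, { scraper: SCRAPER });
  if (response.statusCode !== 200) {
    throw new Error(`Request failed with status ${response.statusCode}`);
  }
  return JSON.parse(response.body);
}

async function main() {
  const token = process.env.CRAWLBASE_TOKEN; // hypothetical variable name
  if (!token) {
    console.error("Set CRAWLBASE_TOKEN to your private Crawlbase token.");
    return;
  }
  const { CrawlingAPI } = require("crawlbase");
  const api = new CrawlingAPI({ token });
  const data = await fetchProductJson(api, TARGET_URL);
  // Pretty-print the structured product details.
  console.log(JSON.stringify(data, null, 2));
}

main().catch((error) => console.error(error));
```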

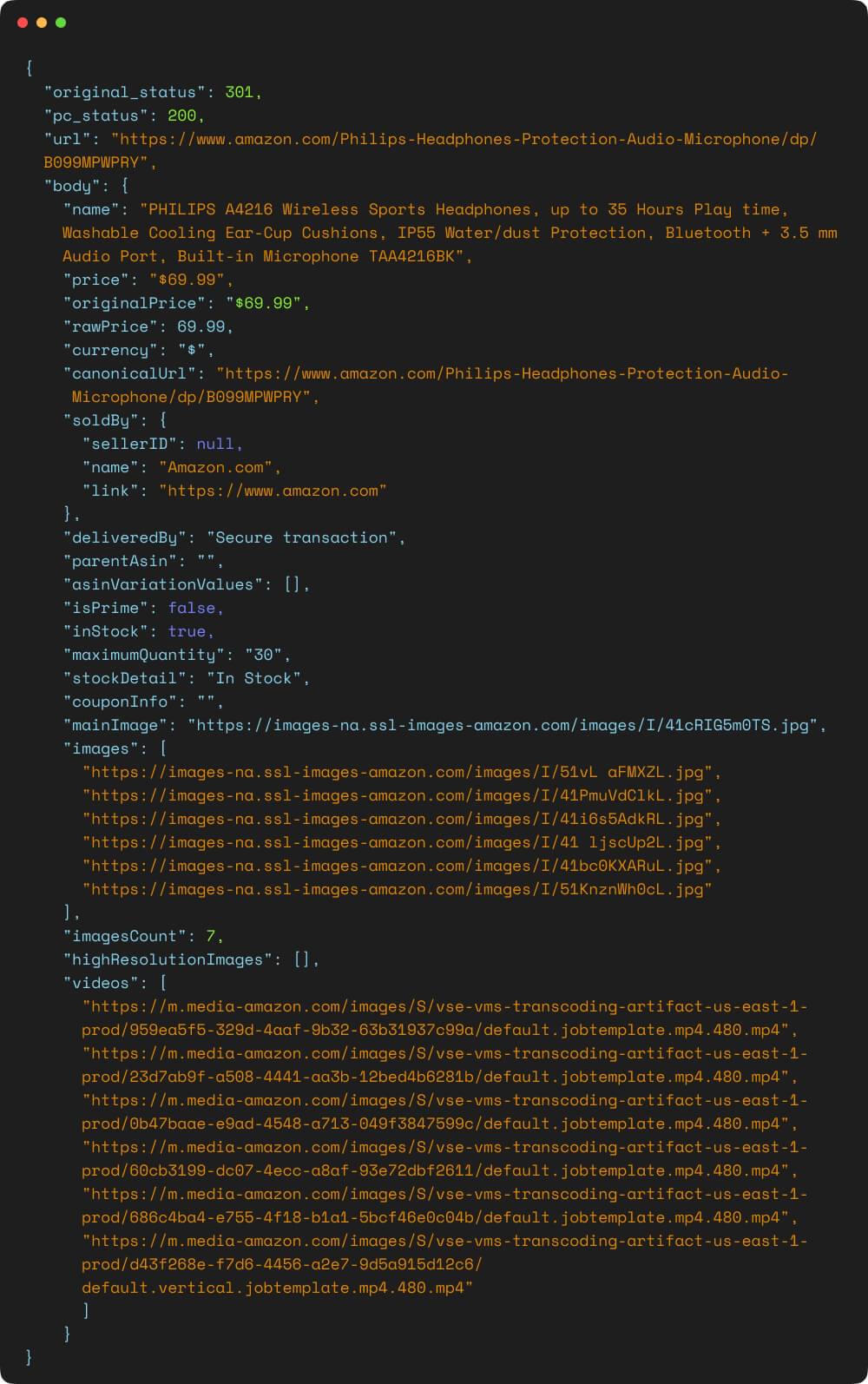

The output of the above code block will be the parsed JSON response containing specific Amazon product details such as the product’s name, description, price, currency, parent ASIN, seller name, stock information, and more. This data will be displayed on the console, showcasing organized information extracted from the specified Amazon product page.

We will now retrieve the Amazon product’s name, price, rating, and image from the JSON response described above. First, we store the JSON response in a file named "amazon-product-scraper-response.json" by running the following script with Node.js:

```javascript
// Import the required modules
```
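Here is one way such a script might look, as a sketch under the same assumptions as before: the crawlbase package exports CrawlingAPI, get(url, options) forwards the scraper option, amazon-product-details is the scraper name, and CRAWLBASE_TOKEN is a hypothetical environment variable. Parsing the body before writing it means malformed responses are reported instead of being saved.

```javascript
// Sketch: crawl the product page with the scraper enabled and save the JSON
// response to amazon-product-scraper-response.json.
// Assumes `crawlbase` exports `CrawlingAPI`, `get(url, options)` forwards
// `scraper`, and "amazon-product-details" is the scraper name.
const fs = require("fs");

const TARGET_URL = "https://www.amazon.com/dp/B099MPWPRY";
const OUTPUT_FILE = "amazon-product-scraper-response.json";

// Validate that the response body is JSON, then write it pretty-printed.
function saveJsonResponse(filePath, responseBody) {
  const data = JSON.parse(responseBody); // throws on malformed JSON
  fs.writeFileSync(filePath, JSON.stringify(data, null, 2));
  return data;
}

async function main() {
  const token = process.env.CRAWLBASE_TOKEN; // hypothetical variable name
  if (!token) {
    console.error("Set CRAWLBASE_TOKEN to your private Crawlbase token.");
    return;
  }
  const { CrawlingAPI } = require("crawlbase");
  const api = new CrawlingAPI({ token });
  const response = await api.get(TARGET_URL, { scraper: "amazon-product-details" });
  if (response.statusCode !== 200) {
    throw new Error(`Request failed with status ${response.statusCode}`);
  }
  saveJsonResponse(OUTPUT_FILE, response.body);
  console.log(`JSON response has been saved to '${OUTPUT_FILE}'`);
}

main().catch((error) => console.error("Error:", error.message));
```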

This code crawls the Amazon product page, retrieves the JSON response, and saves it to the file. A console message confirms that the JSON response has been saved to 'amazon-product-scraper-response.json'. If any step fails, an appropriate error message is printed to the console instead.

Scrape Product Name

```javascript
// Import fs module
```
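A minimal sketch of this step, assuming the saved file has the shape described in this guide (a top-level "body" object containing "name"). The helper name readBodyField is hypothetical; it throws a descriptive error for a missing file, malformed JSON, or a missing property, and the caller prints that error.

```javascript
// Sketch: read the saved response and print the product name.
// Assumes the file contains a top-level "body" object with a "name" property.
const fs = require("fs");

const INPUT_FILE = "amazon-product-scraper-response.json";

// Read a JSON file and return body[key], throwing a descriptive error
// if the file is missing, the JSON is malformed, or the key is absent.
function readBodyField(filePath, key) {
  const data = JSON.parse(fs.readFileSync(filePath, "utf8"));
  const body = data && data.body;
  if (body === null || typeof body !== "object" || !(key in body)) {
    throw new Error(`Property "${key}" not found in "body"`);
  }
  return body[key];
}

try {
  console.log("Name:", readBodyField(INPUT_FILE, "name"));
} catch (error) {
  console.error("Error:", error.message);
}
```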

The above code block reads data from a JSON file named "amazon-product-scraper-response.json" using the fs (file system) module in Node.js. It then attempts to parse the JSON data, extracts a specific value (in this case, the "name" property of the "body" object), and prints it to the console. If there are any errors, such as malformed JSON or a missing property, an error message is displayed instead.

Scrape Product Price

```javascript
// Import fs module
```

This code uses the Node.js fs module to interact with the file system and read the contents of a JSON file named "amazon-product-scraper-response.json". Upon reading the file, it attempts to parse the JSON data contained within it. If the parsing is successful, it extracts the "price" property from the "body" object of the JSON data. This extracted price value is then printed to the console.
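The price value is printed exactly as the scraper returns it. If it comes back as a formatted string such as "$29.99" (an assumption; check your own response file), a small hypothetical helper like parsePrice below turns it into a number you can compare or chart. It assumes US-style formatting, with commas as thousands separators and a period as the decimal point, so other locales would need a different parser.

```javascript
// Sketch: read the saved response and print the price as a number.
// Assumes body.price is a number or a US-style string such as "$1,299.00".
const fs = require("fs");

// Hypothetical helper: strip thousands separators and keep the first number found.
function parsePrice(raw) {
  if (typeof raw === "number") return raw;
  const match = String(raw).replace(/,/g, "").match(/\d+(?:\.\d+)?/);
  return match ? Number(match[0]) : null;
}

try {
  const data = JSON.parse(fs.readFileSync("amazon-product-scraper-response.json", "utf8"));
  console.log("Price:", parsePrice(data.body.price));
} catch (error) {
  console.error("Error:", error.message);
}
```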

Scrape Product Rating

```javascript
// Import fs module
```

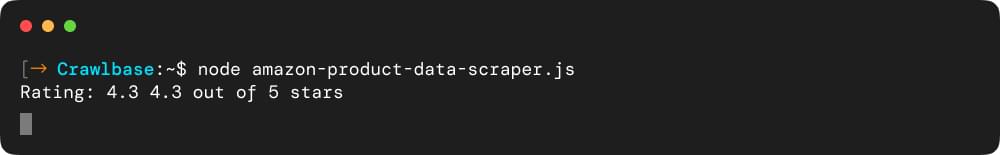

The code reads the contents of a JSON file named "amazon-product-scraper-response.json". It then attempts to parse the JSON data and extract the value stored under the key "customerReview" in the "body" object. The extracted value, which represents the product’s rating, is printed as “Rating:” followed by the value.
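If you want to sort or filter products by rating, the customerReview value needs to be numeric. A minimal sketch, assuming the value is either a number or a string that begins with the rating (for example "4.5 out of 5 stars"); the helper parseRating is hypothetical, and a string that does not start with a number yields null.

```javascript
// Sketch: turn the scraped customerReview value into a numeric rating.
// Assumes a number or a string that starts with the rating, e.g. "4.5 out of 5 stars".
const fs = require("fs");

function parseRating(raw) {
  if (typeof raw === "number") return raw;
  const value = parseFloat(String(raw)); // reads the leading number, ignores the rest
  return Number.isNaN(value) ? null : value;
}

try {
  const data = JSON.parse(fs.readFileSync("amazon-product-scraper-response.json", "utf8"));
  console.log("Rating:", parseRating(data.body.customerReview));
} catch (error) {
  console.error("Error:", error.message);
}
```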

Scrape Product Image

```javascript
// Import fs module
```

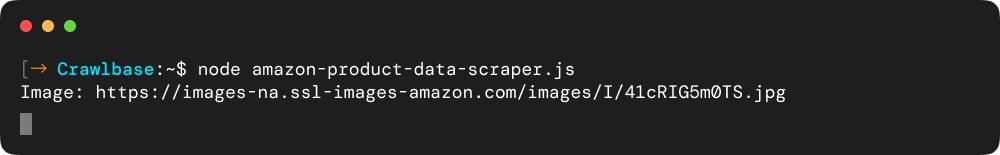

The above script attempts to parse the JSON data and extract the value stored under the key "mainImage" in the "body" object, which holds the product’s main image. The value is printed to the console as "Image:" followed by the value.
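To go one step further and save the image locally, here is a sketch that assumes mainImage holds an absolute image URL and that you run Node.js 18 or later, where fetch is available globally. The helpers imageFileName and downloadImage are hypothetical names; the file name is simply the last segment of the URL path.

```javascript
// Sketch: download the image referenced by body.mainImage.
// Assumes mainImage is an absolute image URL and Node.js 18+ (global fetch).
const fs = require("fs");
const path = require("path");

// Derive a local file name from the last segment of the URL path.
function imageFileName(imageUrl) {
  const name = path.posix.basename(new URL(imageUrl).pathname);
  return name || "image";
}

async function downloadImage(imageUrl, destination) {
  const response = await fetch(imageUrl);
  if (!response.ok) {
    throw new Error(`Image request failed with status ${response.status}`);
  }
  fs.writeFileSync(destination, Buffer.from(await response.arrayBuffer()));
}

async function main() {
  const data = JSON.parse(fs.readFileSync("amazon-product-scraper-response.json", "utf8"));
  const imageUrl = data.body.mainImage;
  const file = imageFileName(imageUrl);
  await downloadImage(imageUrl, file);
  console.log(`Saved ${imageUrl} as ${file}`);
}

main().catch((error) => console.error("Error:", error.message));
```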

Scrape Amazon Product Reviews with Crawlbase’s Integrated Scraper

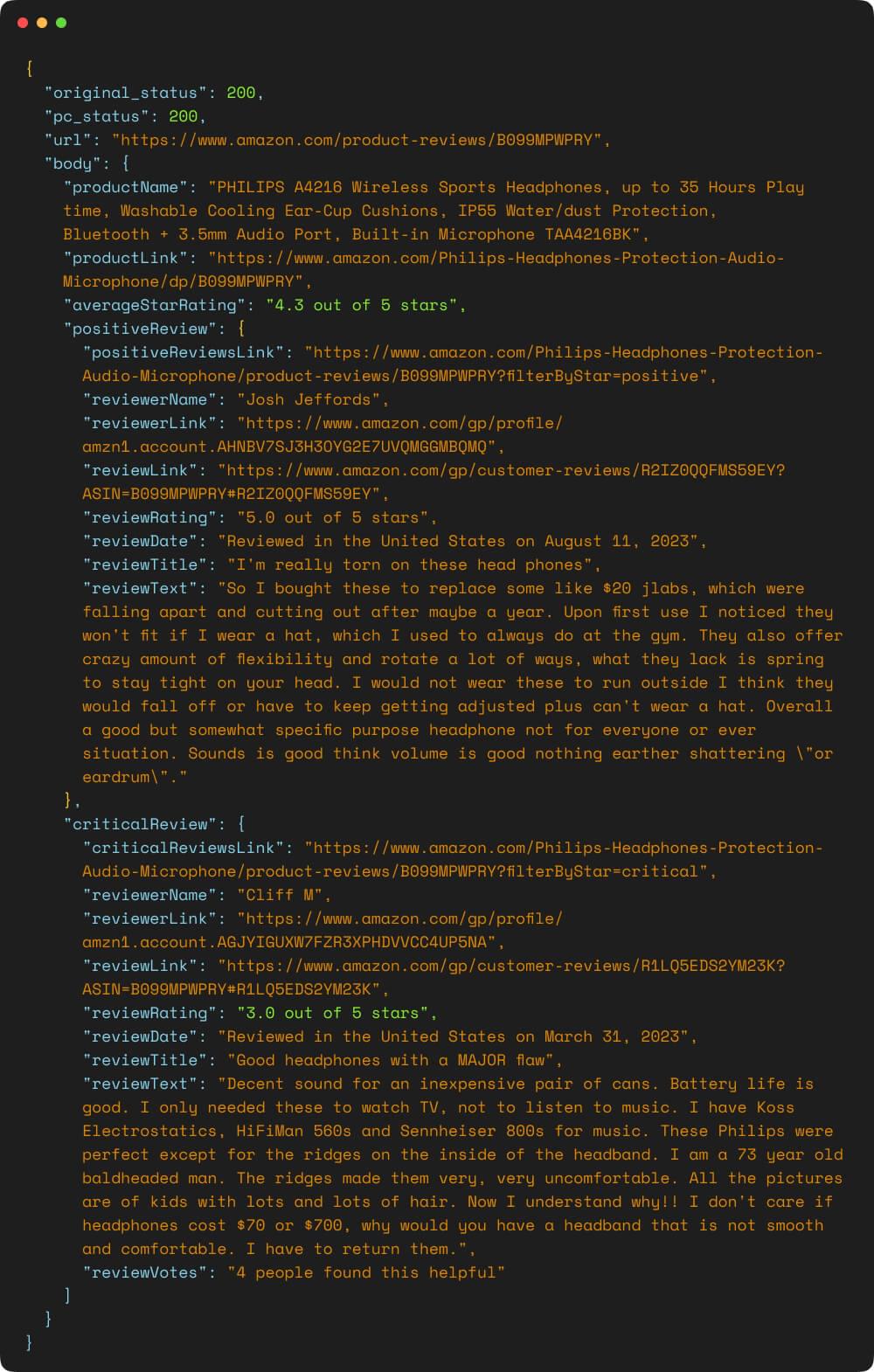

In this example, we’ll scrape the customer reviews of the same Amazon product. The target URL is https://www.amazon.com/product-reviews/B099MPWPRY. Crawlbase’s Crawling API has an integrated scraper designed for Amazon product reviews, which retrieves the customer reviews of an Amazon product. To use it, all we need to do is add a “scraper” parameter with the value "amazon-product-reviews" to our Crawling API request. Let’s explore an example to get a clearer picture:

```javascript
// Import the Crawling API
```
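A minimal sketch of the reviews request. The scraper value "amazon-product-reviews" and the /product-reviews/ URL pattern come from this guide; the sketch additionally assumes that the crawlbase package exports CrawlingAPI, that get(url, options) forwards the scraper option and resolves to { statusCode, body } with a JSON body, and that the token is in a hypothetical CRAWLBASE_TOKEN environment variable.

```javascript
// Sketch: fetch structured review data with the "amazon-product-reviews" scraper.
// Assumes `crawlbase` exports `CrawlingAPI` and that `get(url, options)` forwards
// `scraper` to the Crawling API, returning `{ statusCode, body }` with a JSON body.

// Build the reviews page URL for an ASIN (same pattern as the URL in this guide).
function reviewsUrl(asin) {
  return `https://www.amazon.com/product-reviews/${encodeURIComponent(asin)}`;
}

async function fetchReviews(api, asin) {
  const response = await api.get(reviewsUrl(asin), { scraper: "amazon-product-reviews" });
  if (response.statusCode !== 200) {
    throw new Error(`Request failed with status ${response.statusCode}`);
  }
  return JSON.parse(response.body);
}

async function main() {
  const token = process.env.CRAWLBASE_TOKEN; // hypothetical variable name
  if (!token) {
    console.error("Set CRAWLBASE_TOKEN to your private Crawlbase token.");
    return;
  }
  const { CrawlingAPI } = require("crawlbase");
  const api = new CrawlingAPI({ token });
  const reviews = await fetchReviews(api, "B099MPWPRY");
  console.log(JSON.stringify(reviews, null, 2));
}

main().catch((error) => console.error(error));
```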

Running the above script extracts Amazon product review data through the Crawlbase Crawling API. The code fetches information about the reviews of the specified Amazon product and prints it to the console as JSON, offering valuable insights into customers’ experiences and opinions. The structured output covers various aspects of the reviews, including reviewer names, ratings, review dates, review titles, and more.

This step-by-step guide has shown how to extract data with the Crawlbase Crawling API. We started by using its integrated Amazon scrapers to collect complex product information such as descriptions, prices, sellers, and stock availability. We then used the same API to extract customer reviews, including reviewer names, ratings, dates, and review texts.

Overcoming Amazon Product Data Scraping Challenges with Crawlbase Crawling API

Crawlbase Crawling API is designed to address the challenges associated with web scraping, particularly in scenarios where scraping Amazon product data is concerned. Here’s how the Crawlbase Crawling API can help mitigate these challenges:

- Anti-Scraping Measures: Crawlbase Crawling API utilizes advanced techniques to bypass anti-scraping mechanisms like CAPTCHAs, IP blocking, and user-agent detection. This enables seamless data collection without triggering alarms.

- Dynamic Website Structure: The API is equipped to adapt to changes in website structure by utilizing smart algorithms that automatically adjust scraping patterns to match the evolving layout of Amazon’s pages.

- Legal and Ethical Concerns: Crawlbase states that it respects the terms of use of websites like Amazon and supports scraping in a responsible and ethical manner. This helps minimize the risk of legal actions and ethical dilemmas.

- Data Volume and Velocity: The API efficiently manages large data volumes by distributing scraping tasks across multiple servers, enabling fast and scalable data extraction.

- Complexity of Product Information: Crawlbase’s Crawling API employs intelligent data extraction techniques that accurately capture complex product information, such as reviews, pricing, images, and specifications.

- Rate Limiting and IP Blocking: The API manages rate limits and IP blocking by intelligently throttling requests and rotating IP addresses, ensuring data collection remains uninterrupted.

- CAPTCHA Challenges: Crawlbase’s Crawling API can handle CAPTCHAs through automated solving mechanisms, eliminating the need for manual intervention and speeding up the scraping process.

- Data Quality and Integrity: The API offers data validation and cleansing features to ensure that scraped data is accurate and up-to-date, reducing the risk of using outdated or incorrect information.

- Robustness of Scraping Scripts: The API’s robust architecture is designed to handle various scenarios, errors, and changes in the website’s structure, reducing the need for constant monitoring and adjustments.
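Even with these measures in place, an individual request can still fail, for example with a timeout or a non-200 status. A common client-side pattern, sketched here as a generic technique rather than a Crawlbase feature, is to retry a failed request a few times with exponential backoff. The names withRetry, backoffDelay, maxAttempts, and baseDelayMs are hypothetical.

```javascript
// Sketch: client-side retry with exponential backoff.
// This is a generic pattern, not part of the Crawlbase library.

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Wait time after the given failed attempt (0-based): base, 2x base, 4x base, ...
function backoffDelay(attempt, baseDelayMs) {
  return baseDelayMs * 2 ** attempt;
}

// Run `task` up to `maxAttempts` times, sleeping longer after each failure,
// and rethrow the last error if every attempt fails.
async function withRetry(task, { maxAttempts = 3, baseDelayMs = 1000 } = {}) {
  let lastError;
  for (let attempt = 0; attempt < maxAttempts; attempt += 1) {
    try {
      return await task();
    } catch (error) {
      lastError = error;
      if (attempt < maxAttempts - 1) {
        await sleep(backoffDelay(attempt, baseDelayMs));
      }
    }
  }
  throw lastError;
}
```

To retry a Crawling API call, wrap it in a function that throws when statusCode is not 200 and pass that function to withRetry. Keeping maxAttempts small avoids hammering the API when a page is genuinely unavailable.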

Crawlbase Crawling API provides a comprehensive solution that addresses the complexities and challenges of scraping Amazon product data. By offering intelligent scraping techniques, robust architecture, and adherence to ethical standards, the API empowers businesses to gather valuable insights without the typical hurdles associated with web scraping.

Applications of Scraped Amazon Product Data

- One of the key uses of scraped data is analyzing customer reviews for product improvement. By carefully examining feedback, businesses can identify areas where their products can be improved, leading to enhanced customer satisfaction.

- Another valuable application of scraped data is identifying market trends and demand patterns. By analyzing patterns and trends in customer behavior, businesses can anticipate consumer needs and adapt their offerings accordingly. This allows them to stay ahead of the competition and offer high-demand products or services.

- Monitoring competitor pricing strategies is another important use of scraped data. By closely examining how competitors are pricing their products, businesses can make informed decisions regarding their pricing adjustments. This ensures that they stay competitive in the market and can adjust their pricing strategies in real-time.

- E-commerce businesses can use scraped product data to generate website content, such as product descriptions, features, and specifications. This can improve search engine optimization (SEO) and enhance the online shopping experience.

- Brands can monitor Amazon for unauthorized or counterfeit products by scraping product data and comparing it with their genuine offerings.

Read more: How To Scrape Amazon Data In Ruby?

Conclusion

In conclusion, the world of Amazon data scraping offers businesses an invaluable opportunity to unlock hidden insights and strategic advantages. This step-by-step Amazon data scraping guide has illuminated the significance of Amazon product data and its potential for driving business growth. Companies can make informed decisions across various operational facets by efficiently extracting and analyzing this data.

Understanding the value of Amazon product data is foundational. This data is a treasure trove of market trends, competitor strategies, and customer preferences. With this knowledge, businesses can optimize pricing, fine-tune marketing campaigns, streamline inventory management, and shape overall business strategies to meet consumer demand.

Frequently Asked Questions

Q: Is it possible to scrape public data from Amazon?

Scraping public data from Amazon, such as product listings, prices, descriptions, and customer reviews, is possible due to the open nature of the content. This data is accessible to website users and can be gathered through web scraping techniques.

However, it’s important to note that Amazon’s Terms of Use prohibit certain types of automated data collection, so anyone interested in scraping Amazon should review and comply with their terms to avoid any legal or ethical issues.

Q: What are the different types of Amazon product data?

Sales rank and category information provide valuable insights into the popularity and competitiveness of products on Amazon. By analyzing sales rank data, you can identify high-demand items and strategically position your own offerings. Additionally, understanding the product’s category allows you to gauge market trends and tailor your marketing strategies accordingly.

Product descriptions and features play a crucial role in attracting potential customers. A detailed product description with compelling language helps consumers understand a particular item’s benefits and unique selling points. Similarly, highlighting key features clarifies what sets the product apart from others in its category.

Customer questions and answers offer valuable social proof for prospective buyers. By scraping this data, you gain access to real-time feedback from customers who have already purchased or are considering purchasing the product. This insight enables you to address common concerns or misconceptions, improving customer satisfaction while boosting sales conversion rates.

Q: What are Amazon ASINs?

Amazon Standard Identification Numbers (ASINs) are unique 10-character alphanumeric identifiers assigned to each product listed on Amazon’s platform. They are crucial for cataloging and differentiating products, making them essential for many data analysis and scraping tasks.
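Because the product and review URLs used in this guide embed the ASIN (/dp/B099MPWPRY and /product-reviews/B099MPWPRY), a small helper can pull it out of a URL. The hypothetical extractAsin below is a sketch that only handles the /dp/, /gp/product/, and /product-reviews/ path shapes; other URL formats would need extra patterns.

```javascript
// Sketch: pull the 10-character ASIN out of common Amazon URL shapes.
// Covers /dp/ASIN, /gp/product/ASIN and /product-reviews/ASIN paths only.
function extractAsin(productUrl) {
  const { pathname } = new URL(productUrl);
  const match = pathname.match(/\/(?:dp|gp\/product|product-reviews)\/([A-Z0-9]{10})(?=\/|$)/i);
  return match ? match[1].toUpperCase() : null;
}

console.log(extractAsin("https://www.amazon.com/dp/B099MPWPRY")); // B099MPWPRY
```

Working from the URL path rather than the full string keeps query parameters such as ref tags from interfering with the match.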

Read more: Scrape Amazon ASIN at Scale: The Power of Crawlbase’s Smart Proxy

Q: Is scraping Amazon product data legal?

Scraping publicly available data on the internet, including Amazon, is generally legal. This covers information such as product descriptions, details, ratings, prices, or the number of reactions to a certain product. Just be careful with personal information and copyright protection.

For example, when scraping product reviews, you must account for potential personal data like the reviewer’s name and avatar, which require careful handling. Additionally, the text of reviews might be subject to copyright protection in some instances. It’s advisable to take extra care and potentially seek legal advice when dealing with such data.

Q: Is it possible to detect web scraping activities?

Yes, anti-bot software can detect scraping by checking your IP address, browser settings, user agent, and other characteristics. Once scraping is detected, the website may display a CAPTCHA, and if it isn’t solved, your IP address may be blocked.

Q: How to bypass CAPTCHA while scraping Amazon product data?

To overcome CAPTCHAs, one of the most challenging hurdles when collecting public data, you should avoid triggering them as much as possible, although that can be difficult. Here are some tips to help:

- Use a headless browser.

- Make use of trustworthy proxies and rotate your IP addresses.

- Reduce the speed of scraping by inserting random breaks between requests.
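The last tip is straightforward to apply in code. A minimal sketch, assuming you supply your own async fetchOne function (for example, one that calls the Crawling API); the 2 to 6 second default range is an arbitrary illustration, not a value recommended by Amazon or Crawlbase, and randomDelayMs and crawlWithRandomPauses are hypothetical names.

```javascript
// Sketch: fetch URLs one at a time with a random pause between requests.
// `fetchOne` is any async function you supply (for example, a Crawling API call).

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Random whole number of milliseconds in [minMs, maxMs]; `rng` is injectable for testing.
function randomDelayMs(minMs, maxMs, rng = Math.random) {
  return minMs + Math.floor(rng() * (maxMs - minMs + 1));
}

async function crawlWithRandomPauses(urls, fetchOne, { minMs = 2000, maxMs = 6000 } = {}) {
  const results = [];
  for (let i = 0; i < urls.length; i += 1) {
    if (i > 0) {
      // Pause before every request except the first.
      await sleep(randomDelayMs(minMs, maxMs));
    }
    results.push(await fetchOne(urls[i]));
  }
  return results;
}
```

Requests run sequentially on purpose: firing them in parallel would defeat the point of spacing them out.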