In this blog, we will learn how to scrape TikTok comments. If you want a detailed tutorial on scraping TikTok data, check out our guide on ‘How to Scrape TikTok’.

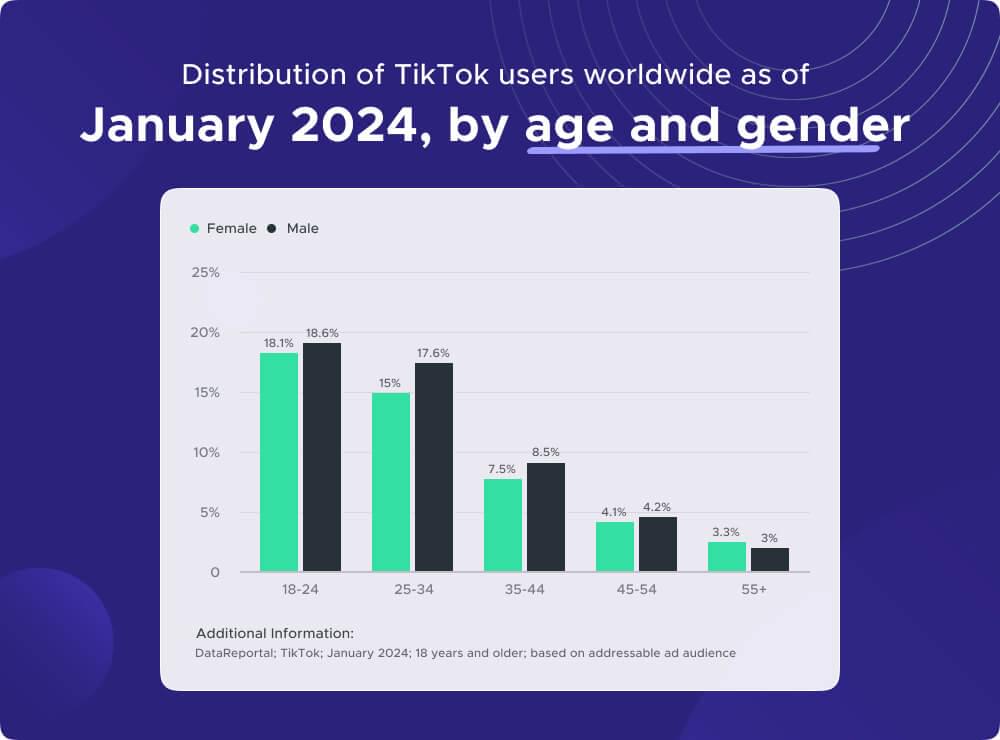

As of 2024, TikTok has been downloaded more than 4 billion times worldwide, making it one of the fastest-growing social media platforms. People of all ages, from teens and young adults to older users, are on it, which adds to its popularity and cultural influence.

Comments on TikTok videos provide insights into user engagement, sentiments, trends, and much more. TikTok comment data enables researchers, marketers, and data enthusiasts to delve into user interactions, identify trending content, and gain a better understanding of TikTok’s vibrant community.

So let’s start scraping TikTok comments in Python.

Table of Contents

- TikTok Comment Scraper Essentials

- Setup the Python Environment and Install Necessary Libraries

- Extract TikTok Video Comments HTML

- Extract TikTok Comments in JSON format

- Handle Pagination in TikTok comments Scraping

- Saving Scraped TikTok Comments Data

- Complete Code with Pagination & Saving

- Frequently Asked Questions (FAQs)

1. TikTok Comment Scraper Essentials

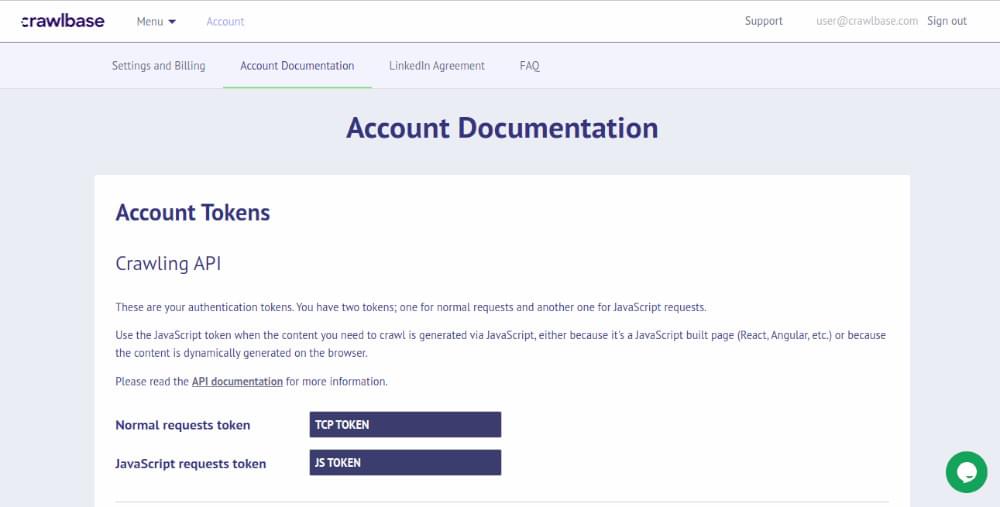

This tutorial assumes basic knowledge of Python, HTML, and CSS. You will also need to set up the Crawlbase Crawling API to scrape comments from TikTok. Sign up for Crawlbase and you’ll get 1,000 free requests to start scraping TikTok comments.

Head to Account Documentation to get your token.

In your dashboard you’ll find two types of tokens. The Normal Token is for regular websites, and the JS Token is for websites with a lot of dynamic or JavaScript content. Since TikTok uses a lot of JavaScript, we’ll use the JS Token.

Now for the Python setup, make sure you have Python installed. You can download and install Python from the official Python website based on your operating system. Additionally, make sure you have pip, the Python package manager, installed to install necessary libraries and dependencies.

Now you’re well-equipped to start scraping TikTok video comments using Python and the Crawlbase Crawling API.

2. Setup the Python Environment and Install Necessary Libraries

To begin scraping TikTok video comments, follow these steps to set up your project environment:

Python Installation: If you haven’t already, download and install Python from the official Python website. Make sure to add Python to your system’s PATH during installation. You can verify the installation by opening a command prompt or terminal and running the following command:

    python --version

If Python is installed correctly, you’ll see the installed version number.

Create a New Python Environment: It’s recommended to work within a virtual environment to manage project dependencies. Create a new virtual environment using the following commands:

    # Create a new virtual environment
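For readers who prefer to stay in Python, the standard library's `venv` module can create the environment programmatically. This is only a sketch: the directory name `tiktok_env` is an arbitrary placeholder and `create_virtual_env` is a hypothetical helper, not something the tutorial prescribes. The usual command-line equivalent is `python -m venv tiktok_env`.

```python
import venv
from pathlib import Path


def create_virtual_env(env_dir: str, with_pip: bool = True) -> Path:
    """Create a virtual environment in env_dir and return its absolute path."""
    target = Path(env_dir).resolve()
    # venv.create builds the directory layout and writes pyvenv.cfg;
    # with_pip=True also bootstraps pip into the new environment.
    venv.create(target, with_pip=with_pip)
    return target


# Example usage ("tiktok_env" is a placeholder name):
# create_virtual_env("tiktok_env")
```

After creation, the environment is typically activated with `source tiktok_env/bin/activate` on macOS or Linux, or `tiktok_env\Scripts\activate` on Windows.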

Install Necessary Libraries: Install the required Python libraries for web scraping and data extraction. Key libraries include requests, beautifulsoup4, and crawlbase. You can install these libraries using pip, the Python package manager:

    pip install requests beautifulsoup4 crawlbase

Initialize Project Files: Create a new Python script file tiktok_comments_scraper.py for your TikTok comments scraping project. You can use any text editor or integrated development environment (IDE) of your choice to write your Python code.

With your project environment set up and libraries installed, you’re ready to start scraping TikTok video comments. Let’s move on to the next step.

3. Extract TikTok Video Comments HTML

To begin scraping TikTok video comments, we need to retrieve the HTML content of the TikTok page where the comments are located. There are two common approaches to accomplish this: using a standard HTTP request library like requests or using the Crawlbase Crawling API.

Extracting TikTok Comments HTML using Common Approach

In the common approach, we use Python libraries such as requests to fetch the HTML content of the TikTok video page. Here’s a basic example of how you can achieve this:

    import requests

Copy the code above into your tiktok_comments_scraper.py file and run the following command in the directory where the file is located.

    python tiktok_comments_scraper.py

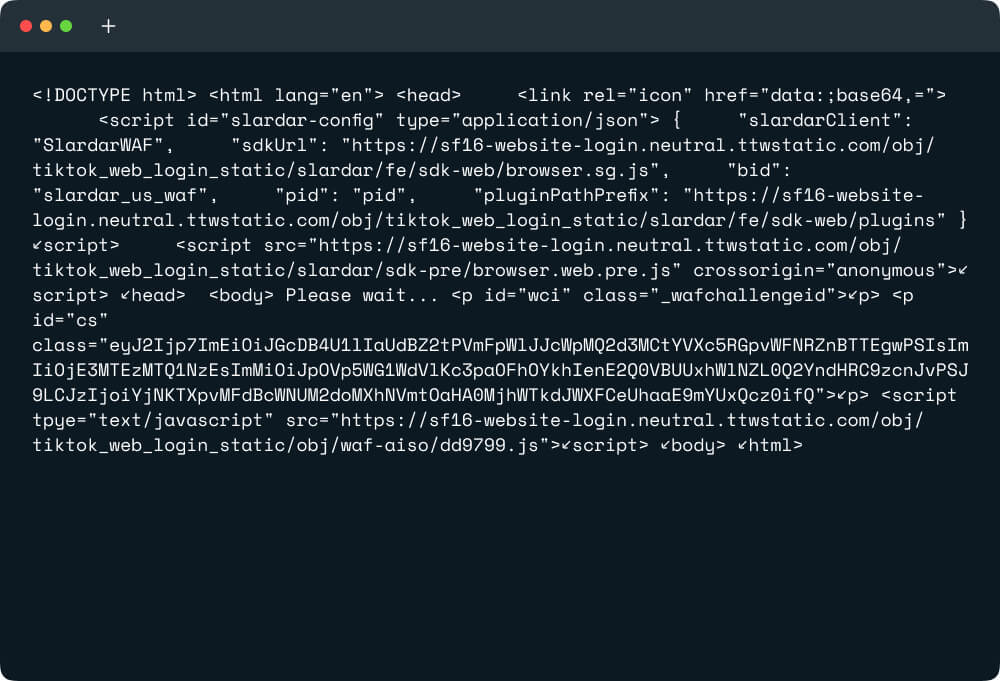

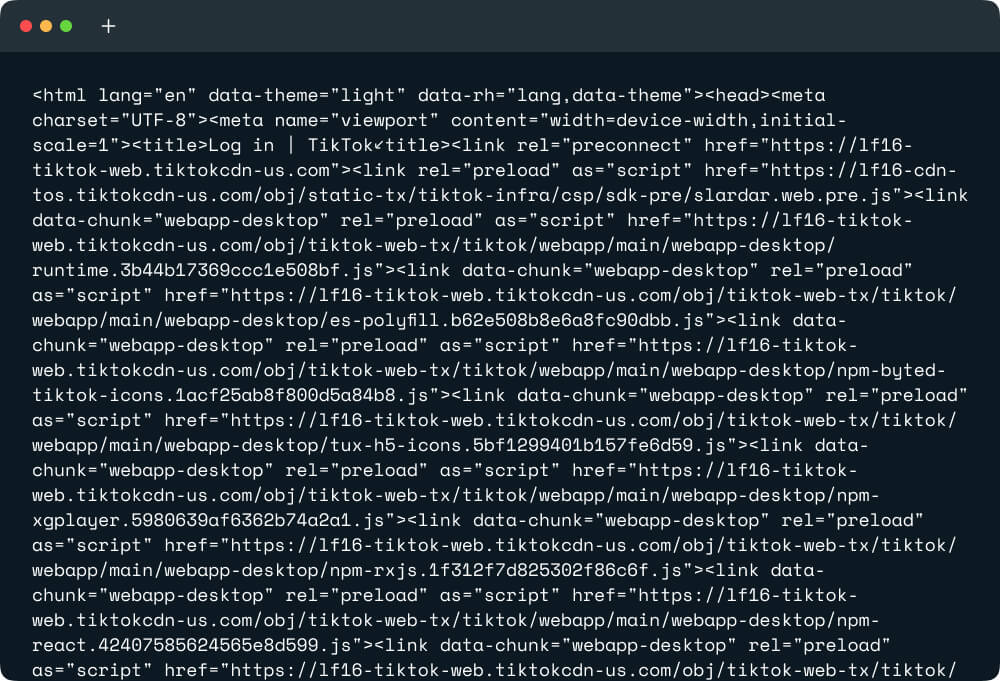

You will see the HTML of the page printed in the terminal.

But why is there no useful information in the HTML? It’s because TikTok relies on JavaScript rendering to load essential data dynamically, so a plain HTTP request only receives the initial page shell and not the comments themselves. With conventional scraping methods, accessing this data can be challenging.
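To make the plain HTTP approach concrete, here is a minimal sketch. It assumes a `session` object that behaves like `requests.Session`; the function name `fetch_page_html`, the User-Agent string, and the example URL are illustrative placeholders rather than values from the tutorial.

```python
def fetch_page_html(url, session, timeout=30):
    """Fetch a page with a plain HTTP GET and return the response body as text.

    `session` is expected to behave like requests.Session (an assumption of
    this sketch); passing it in keeps the function easy to test.
    """
    # Placeholder User-Agent; some sites reject requests that send none.
    headers = {"User-Agent": "Mozilla/5.0 (compatible; tutorial-sketch)"}
    response = session.get(url, headers=headers, timeout=timeout)
    # Raise an exception for 4xx/5xx responses instead of parsing an error page.
    response.raise_for_status()
    return response.text


# Example usage (placeholder URL; requires the requests package):
# import requests
# html = fetch_page_html("https://www.tiktok.com/@user/video/123", requests.Session())
# print(html[:500])
```

Because no JavaScript runs here, the returned text is whatever the server sends before any client-side rendering takes place.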

Common Scraping Approaches Limitations

The common approach of fetching HTML using libraries like requests may encounter limitations when scraping TikTok video comments. Some of the issues with this approach include:

- Limited JavaScript Execution: Standard HTTP requests do not execute JavaScript, which means that dynamically loaded content, such as comments on TikTok videos, may not be captured accurately.

- Incomplete Data Retrieval: TikTok pages often load comments asynchronously or through AJAX requests, which may not be fully captured by a single HTTP request. As a result, the fetched HTML may lack certain elements or contain placeholders instead of actual comments.

- Rate Limiting and IP Blocking: Continuous scraping using traditional methods may trigger rate limits or IP blocking mechanisms on the TikTok servers, leading to restricted access or temporary bans.

To overcome these issues and ensure accurate scraping of TikTok video comments, alternative methods such as headless browsers or dynamic rendering APIs may be necessary. One such API is the Crawlbase Crawling API.

Extracting HTML Using Crawlbase Crawling API

To overcome the limitations of the common approach and efficiently fetch TikTok video comments HTML, we can utilize the Crawlbase Crawling API. This API allows us to fetch the rendered HTML content of TikTok pages, including dynamically loaded content.

Here’s how you can use the Crawlbase Crawling API to retrieve TikTok video comments HTML:

    from crawlbase import CrawlingAPI

Using the Crawlbase Crawling API allows us to fetch the HTML content of TikTok pages efficiently, ensuring that JavaScript-rendered content is captured accurately. This approach is particularly useful for scraping dynamic content like TikTok video comments.
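A rough sketch of this step follows. It assumes the client's `get` method returns a dictionary with `headers` and `body` keys and reports the crawl status in a `pc_status` header, as in Crawlbase's Python client; the helper name `fetch_rendered_html`, the token placeholder, and the option values in the usage comment are illustrative and should be checked against the Crawling API documentation.

```python
def fetch_rendered_html(api, url, options=None):
    """Return rendered HTML for url via a Crawlbase-style client, or None on failure.

    `api` is any object exposing get(url, options) -> dict with 'headers' and
    'body' keys (assumed to match crawlbase.CrawlingAPI).
    """
    response = api.get(url, options or {})
    status = response["headers"].get("pc_status")
    if status == "200":
        body = response["body"]
        # The body may arrive as bytes; decode it so it can be parsed as text.
        return body.decode("utf-8") if isinstance(body, bytes) else body
    print(f"Request failed, pc_status={status}")
    return None


# Example usage (token and option values are placeholders):
# from crawlbase import CrawlingAPI
# api = CrawlingAPI({"token": "YOUR_CRAWLBASE_JS_TOKEN"})
# html = fetch_rendered_html(api, video_url, {"ajax_wait": "true", "page_wait": 10000})
```

Passing the client in as a parameter keeps the function independent of any particular token and makes it straightforward to exercise with a stub.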

4. Extract TikTok Comments in JSON format

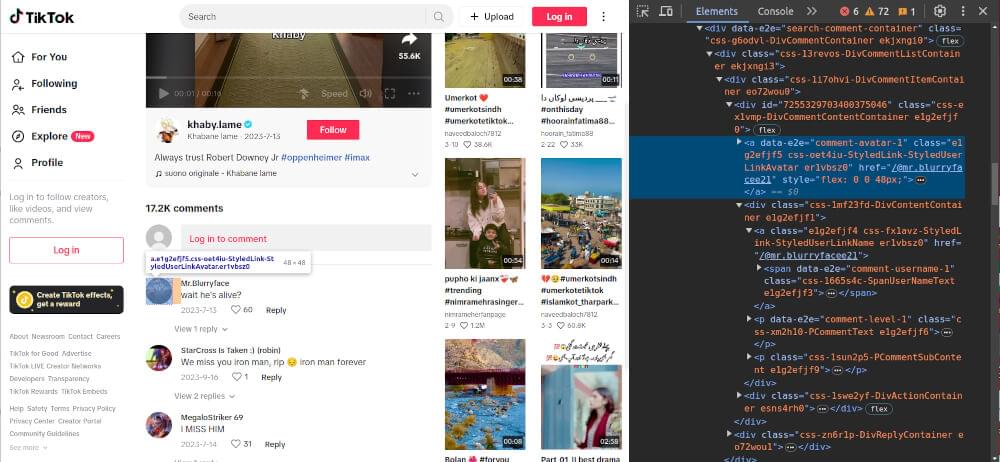

Scraping TikTok comments involves extracting various components such as video author information, the comments listing, comment content, and commenter details. Let’s walk through each step with code examples.

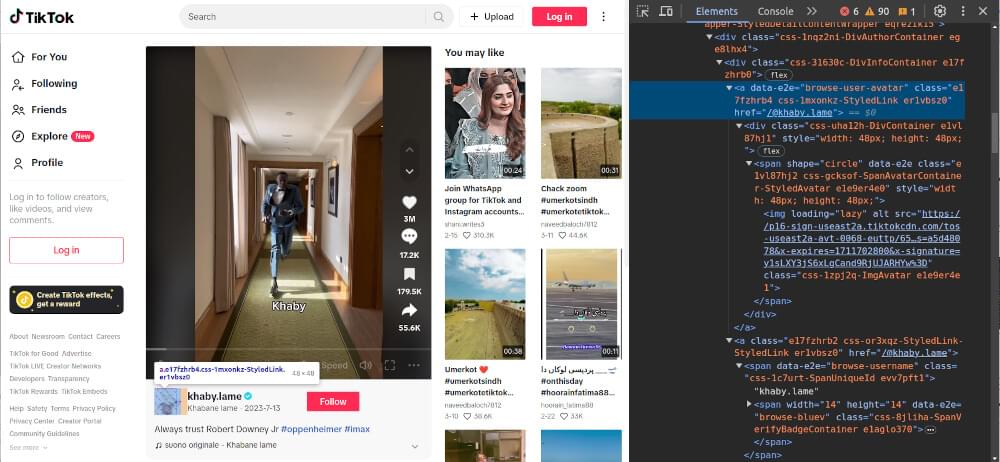

Scraping Video Author Information

When scraping comments from the video page, it’s crucial to maintain accurate records of both the corresponding video and its uploader for comprehensive analysis and attribution. To scrape the video author information from a TikTok video page, we can extract details such as the username, profile URL, and profile picture.

Here’s a code example demonstrating how to accomplish this:

    def scrape_video_author_info(soup):
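As a rough sketch of such a function, the version below pulls the username, profile URL, and profile picture from a BeautifulSoup object. The CSS selectors are hypothetical placeholders: TikTok's markup changes often, so they should be replaced with selectors confirmed in the browser's developer tools. The `selectors` parameter and the `_text` and `_attr` helpers are additions for illustration.

```python
# Hypothetical selectors: verify against the live page before relying on them.
AUTHOR_SELECTORS = {
    "username": 'span[data-e2e="browse-username"]',
    "profile_link": 'a[data-e2e="browse-user-avatar"]',
    "avatar_img": 'a[data-e2e="browse-user-avatar"] img',
}


def _text(node):
    """Return the stripped text of a node, or None if the node was not found."""
    return node.get_text(strip=True) if node is not None else None


def _attr(node, name):
    """Return an attribute of a node, or None if the node was not found."""
    return node.get(name) if node is not None else None


def scrape_video_author_info(soup, selectors=AUTHOR_SELECTORS):
    """Extract the video author's username, profile URL, and picture URL."""
    return {
        "username": _text(soup.select_one(selectors["username"])),
        "profile_url": _attr(soup.select_one(selectors["profile_link"]), "href"),
        "picture_url": _attr(soup.select_one(selectors["avatar_img"]), "src"),
    }


# Example usage (requires beautifulsoup4):
# from bs4 import BeautifulSoup
# soup = BeautifulSoup(html, "html.parser")
# print(scrape_video_author_info(soup))
```

Returning `None` for missing elements, rather than raising, lets the scraper keep running when a selector stops matching.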

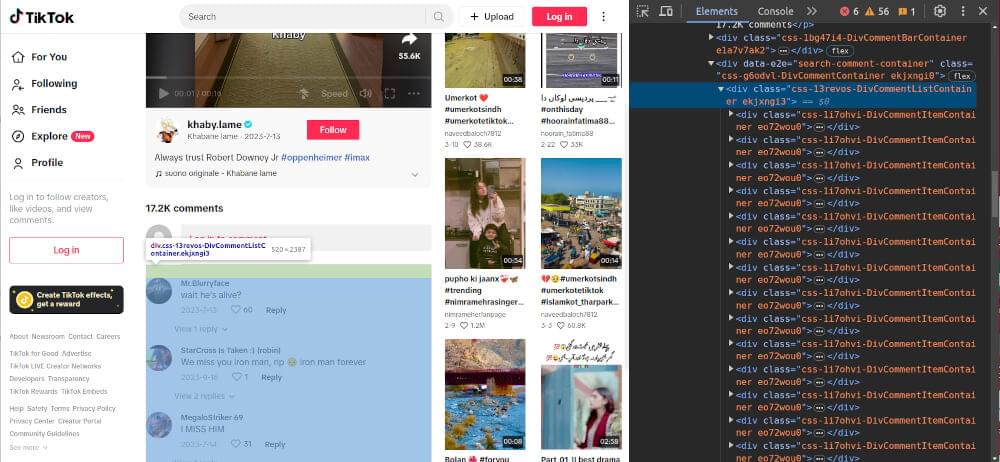

Scraping Comments Listing

To scrape the comments listing from a TikTok video page, we can extract the HTML elements containing the comments.

Here’s a code example demonstrating how to accomplish this:

    def scrape_comments_listing(soup):

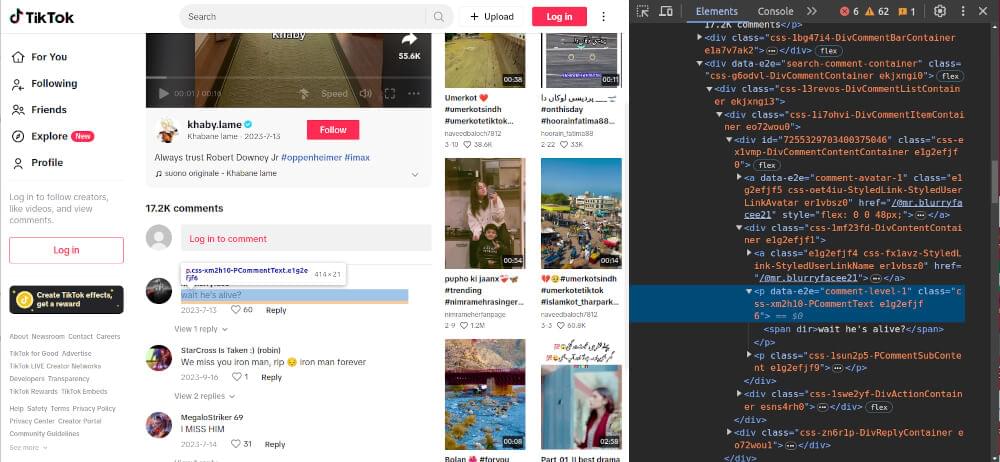

Scraping Comment Content

To scrape the content of each comment, we can extract the text content of the comment elements.

Here’s a code example demonstrating how to accomplish this:

    def scrape_comment_content(comment):

Scraping Commenter Details

To scrape details about the commenter, such as their username and profile URL, we can extract relevant information from the comment elements.

Here’s a code example demonstrating how to accomplish this:

    def scrape_commenter_details(comment):
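The three comment-level steps (listing, content, and commenter details) might fit together as in the sketch below. All four CSS selectors are hypothetical placeholders that need to be confirmed against the live page, and `scrape_comments` is an added helper that is not part of the tutorial's function list.

```python
# Hypothetical selectors: replace after inspecting the rendered page.
COMMENT_ITEM_SELECTOR = 'div[class*="DivCommentItemContainer"]'
COMMENT_TEXT_SELECTOR = 'p[data-e2e="comment-level-1"]'
COMMENTER_NAME_SELECTOR = 'span[data-e2e="comment-username-1"]'
COMMENTER_LINK_SELECTOR = 'a[href^="/@"]'


def scrape_comments_listing(soup):
    """Return the list of elements that each wrap one comment."""
    return soup.select(COMMENT_ITEM_SELECTOR)


def scrape_comment_content(comment):
    """Return the text of a single comment element, or None if not found."""
    node = comment.select_one(COMMENT_TEXT_SELECTOR)
    return node.get_text(strip=True) if node is not None else None


def scrape_commenter_details(comment):
    """Return the commenter's username and profile URL for one comment element."""
    name = comment.select_one(COMMENTER_NAME_SELECTOR)
    link = comment.select_one(COMMENTER_LINK_SELECTOR)
    return {
        "username": name.get_text(strip=True) if name is not None else None,
        "profile_url": link.get("href") if link is not None else None,
    }


def scrape_comments(soup):
    """Hypothetical helper: combine the steps above into a list of dicts."""
    return [
        {
            "content": scrape_comment_content(comment),
            "commenter": scrape_commenter_details(comment),
        }
        for comment in scrape_comments_listing(soup)
    ]
```

Keeping the selectors as module-level constants means a markup change on TikTok's side only requires updating one place.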

Complete Code

Now, let’s combine these scraping functions into a complete code example that extracts video author information, comments listing, comment content, and commenter details:

    from crawlbase import CrawlingAPI

Example Output:

    {

5. Handle Pagination in TikTok comments Scraping

TikTok often employs infinite scrolling to load more comments dynamically. To handle pagination, we can use the Crawlbase Crawling API’s scroll parameter. By default, the scroll interval is set to 10 seconds, but you can change it with the scroll_interval parameter. Here’s an example of how to handle pagination using the Crawlbase Crawling API:

    # Function to fetch HTML content with scroll pagination
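A minimal sketch of scroll-based fetching is shown here. The `scroll` and `scroll_interval` parameter names come from the text above; passing `"true"` as a string, the response shape (`headers`, `body`, `pc_status`), and the helper names `build_scroll_options` and `fetch_html_with_scroll` are assumptions of this sketch.

```python
def build_scroll_options(scroll_interval=None):
    """Build Crawling API options that ask the service to scroll the page."""
    options = {"scroll": "true"}
    # Only override the interval when asked; otherwise the API default applies.
    if scroll_interval is not None:
        options["scroll_interval"] = scroll_interval
    return options


def fetch_html_with_scroll(api, url, scroll_interval=None):
    """Fetch rendered HTML after scrolling, or None if the crawl failed.

    `api` is any object exposing get(url, options) -> dict with 'headers' and
    'body' keys (assumed to match crawlbase.CrawlingAPI).
    """
    response = api.get(url, build_scroll_options(scroll_interval))
    if response["headers"].get("pc_status") == "200":
        body = response["body"]
        return body.decode("utf-8") if isinstance(body, bytes) else body
    return None


# Example usage (token is a placeholder):
# from crawlbase import CrawlingAPI
# api = CrawlingAPI({"token": "YOUR_CRAWLBASE_JS_TOKEN"})
# html = fetch_html_with_scroll(api, video_url, scroll_interval=20)
```

A longer interval gives the page more time to load additional comments, at the cost of a slower request.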

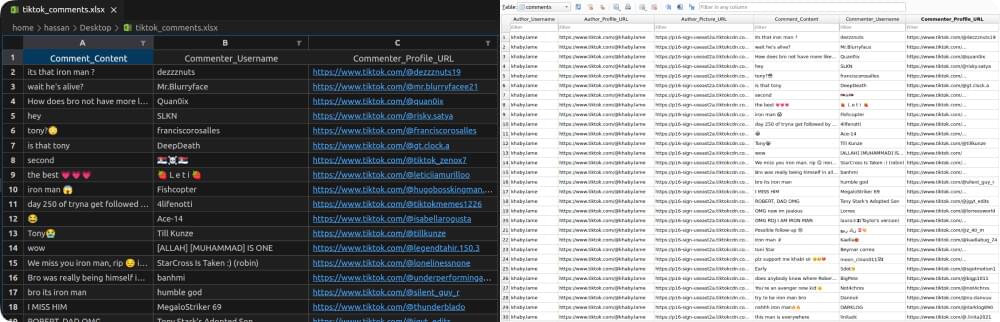

6. Saving Scraped TikTok Comments Data

After successfully scraping TikTok comments, it’s crucial to save the extracted data for further analysis and future reference. Here, we’ll explore two common methods for storing scraped TikTok comments data: saving to an Excel file and saving to an SQLite database.

Saving to Excel File

Excel files provide a convenient way to organize and analyze scraped data, making them a popular choice for storing structured information like TikTok comments. Python offers libraries like pandas for efficiently handling dataframes and openpyxl for writing data to Excel files.

    import pandas as pd

save_to_excel(data, filename):

This function saves comment data along with author information to an Excel file. It takes two parameters: data, a dictionary containing both author information and comments, and filename, the name of the Excel file to which the data will be saved.

Inside the function:

- Author information and comments are extracted from the data dictionary.

- Author information is flattened into a dictionary flat_author_info.

- Comments are flattened into a list of dictionaries flat_comments.

- Two DataFrames are created: one for author information (author_df) and one for comments (comments_df).

- The DataFrames are written to different sheets in the Excel file using pd.ExcelWriter.

- Finally, a success message is printed indicating that the data has been saved to the Excel file.
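The steps above can be sketched as follows. The shape of `data` (an `author_info` dictionary plus a `comments` list whose items carry `content` and a nested `commenter` dictionary), the column names, the sheet names, and the `flatten_for_excel` helper are assumptions made for illustration.

```python
def flatten_for_excel(data):
    """Flatten scraped data into one author row and a list of comment rows."""
    author = data.get("author_info") or {}
    flat_author_info = {
        "author_username": author.get("username"),
        "author_profile_url": author.get("profile_url"),
        "author_picture_url": author.get("picture_url"),
    }
    flat_comments = []
    for comment in data.get("comments") or []:
        commenter = comment.get("commenter") or {}
        flat_comments.append({
            "content": comment.get("content"),
            "commenter_username": commenter.get("username"),
            "commenter_profile_url": commenter.get("profile_url"),
        })
    return flat_author_info, flat_comments


def save_to_excel(data, filename):
    """Write author info and comments to separate sheets of an Excel file."""
    # Imported here so the flattening helper works without pandas installed;
    # writing .xlsx files additionally requires the openpyxl package.
    import pandas as pd

    flat_author_info, flat_comments = flatten_for_excel(data)
    author_df = pd.DataFrame([flat_author_info])
    comments_df = pd.DataFrame(flat_comments)
    with pd.ExcelWriter(filename, engine="openpyxl") as writer:
        author_df.to_excel(writer, sheet_name="Author", index=False)
        comments_df.to_excel(writer, sheet_name="Comments", index=False)
    print(f"Data saved to {filename}")
```

Keeping the flattening separate from the file writing makes it possible to reuse the same rows for other output formats.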

Saving to SQLite Database

SQLite databases offer a lightweight and self-contained solution for storing structured data locally. Python provides the sqlite3 module for interacting with SQLite databases. We can create a table to store TikTok comments data and insert the scraped data into the table.

    import sqlite3

create_table_if_not_exists(db_filename, table_name):

This function ensures that a table exists in the SQLite database with the specified name. It takes two parameters: db_filename, the filename of the SQLite database, and table_name, the name of the table to be created or checked for existence.

Inside the function:

- A connection is established to the SQLite database.

- A SQL query is executed to create the table if it does not already exist. The table consists of fields for author information (username, profile URL, and picture URL) and comment information (content, commenter username, and commenter profile URL).

- If an error occurs during table creation, an error message is printed.

- Finally, the connection to the database is closed.
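A minimal sketch of the table-creation step is shown below. The column names and the `id` primary key are assumptions chosen to match the fields listed above, and the identifier check is an added safeguard, since SQLite cannot bind a table name as a query parameter.

```python
import sqlite3


def create_table_if_not_exists(db_filename, table_name):
    """Create the comments table in the SQLite database if it is missing."""
    # Table names cannot be passed as SQL parameters, so validate before
    # interpolating the name into the statement.
    if not table_name.isidentifier():
        raise ValueError(f"Unsafe table name: {table_name!r}")
    conn = sqlite3.connect(db_filename)
    try:
        conn.execute(
            f"""
            CREATE TABLE IF NOT EXISTS {table_name} (
                id INTEGER PRIMARY KEY AUTOINCREMENT,
                author_username TEXT,
                author_profile_url TEXT,
                author_picture_url TEXT,
                comment_content TEXT,
                commenter_username TEXT,
                commenter_profile_url TEXT
            )
            """
        )
        conn.commit()
    except sqlite3.Error as exc:
        print(f"Error creating table: {exc}")
    finally:
        # Always release the connection, even if table creation failed.
        conn.close()
```

Because of `IF NOT EXISTS`, calling the function repeatedly is harmless, so it can run at the start of every scrape.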

save_to_sqlite(data, db_filename, table_name):

This function saves comment data along with author information to a SQLite database. It takes three parameters: data, a dictionary containing both author information and comments, db_filename, the filename of the SQLite database, and table_name, the name of the table to which the data will be saved.

Inside the function:

- Author information and comments are extracted from the data dictionary.

- Author information is flattened into a tuple flat_author_info.

- For each comment, the author information and comment data are combined into a tuple flat_comment.

- An SQL query is executed to insert the data into the SQLite table.

- If an error occurs during data insertion, an error message is printed.

- Finally, the connection to the database is closed.
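The insertion steps above might look like the sketch below. It assumes the target table already exists with the six columns named in the INSERT statement, and that `data` holds an `author_info` dictionary plus a `comments` list with nested `commenter` dictionaries; both the column names and the data shape are assumptions of this sketch.

```python
import sqlite3


def save_to_sqlite(data, db_filename, table_name):
    """Insert one row per comment, each carrying the video author's details."""
    # Table names cannot be bound as parameters, so validate before use.
    if not table_name.isidentifier():
        raise ValueError(f"Unsafe table name: {table_name!r}")

    author = data.get("author_info") or {}
    flat_author_info = (
        author.get("username"),
        author.get("profile_url"),
        author.get("picture_url"),
    )

    rows = []
    for comment in data.get("comments") or []:
        commenter = comment.get("commenter") or {}
        flat_comment = flat_author_info + (
            comment.get("content"),
            commenter.get("username"),
            commenter.get("profile_url"),
        )
        rows.append(flat_comment)

    conn = sqlite3.connect(db_filename)
    try:
        # Values go through ? placeholders so comment text is never
        # interpreted as SQL.
        conn.executemany(
            f"""
            INSERT INTO {table_name} (
                author_username, author_profile_url, author_picture_url,
                comment_content, commenter_username, commenter_profile_url
            ) VALUES (?, ?, ?, ?, ?, ?)
            """,
            rows,
        )
        conn.commit()
        print(f"Saved {len(rows)} comments to {db_filename}")
    except sqlite3.Error as exc:
        print(f"Error saving data: {exc}")
    finally:
        conn.close()
```

Repeating the author columns on every row keeps the schema to a single table, which matches the table layout described earlier.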

7. Complete Code with Pagination & Saving

Now, let’s extend the complete code example from section 4 to handle pagination and save the comment data to an Excel file and an SQLite database:

    from crawlbase import CrawlingAPI

This extended code example demonstrates how to handle pagination while scraping TikTok comments and save the scraped comment data to both an Excel file and an SQLite database for further analysis.

tiktok_comments.xlsx File & comments table snapshot:

Congratulations on successfully setting up your TikTok comment scraper using the Crawlbase Crawling API and Python! I hope this guide was useful in scraping TikTok comments.

Do explore our curated list of TikTok scrapers to enhance your scraping capabilities:

📜 Best TikTok Scrapers

📜 How to Scrape TikTok Search Results

📜 How to Scrape Facebook

📜 How to Scrape Linkedin

📜 How to Scrape Twitter

📜 How to Scrape Instagram

📜 How to Scrape Youtube

For further customization options and advanced features, refer to the Crawlbase Crawling API documentation. If you have any questions or feedback, our support team is always available to assist you on your web scraping journey.

8. Frequently Asked Questions (FAQs)

Q. Why Scrape TikTok Comments?

Scraping TikTok comments allows users to extract valuable insights, trends, and sentiments from user-generated content. By analyzing comments, businesses can gain a better understanding of their audience’s preferences, opinions, and feedback. Researchers can also use comment data for social studies, sentiment analysis, and trend analysis.

Q. Is It Legal to Scrape TikTok Comments?

While scraping TikTok comments is technically against TikTok’s terms of service, the legality of web scraping depends on various factors, including the purpose of scraping, compliance with data protection laws, and respect for website terms of service. It’s essential to review and adhere to TikTok’s terms of service and data protection regulations before scraping comments from the platform.

Q. What Can You Learn from Scraping TikTok Comments?

Scraping TikTok comments can provide valuable insights into user engagement, sentiment analysis, content trends, and audience demographics. By analyzing comment data, users can identify popular topics, assess audience reactions to specific content, and understand user sentiment towards brands, products, or services.

Q. How to Handle Dynamic Content While Scraping TikTok Comments?

Handling dynamic content while scraping TikTok comments means rendering the page fully before extracting the comments, for example with headless browsers or automation tools. Alternatively, users can utilize web scraping APIs like the Crawlbase Crawling API, which provide JavaScript rendering capabilities to scrape dynamic content accurately. These methods ensure that all comments, including dynamically loaded ones, are captured during the scraping process.