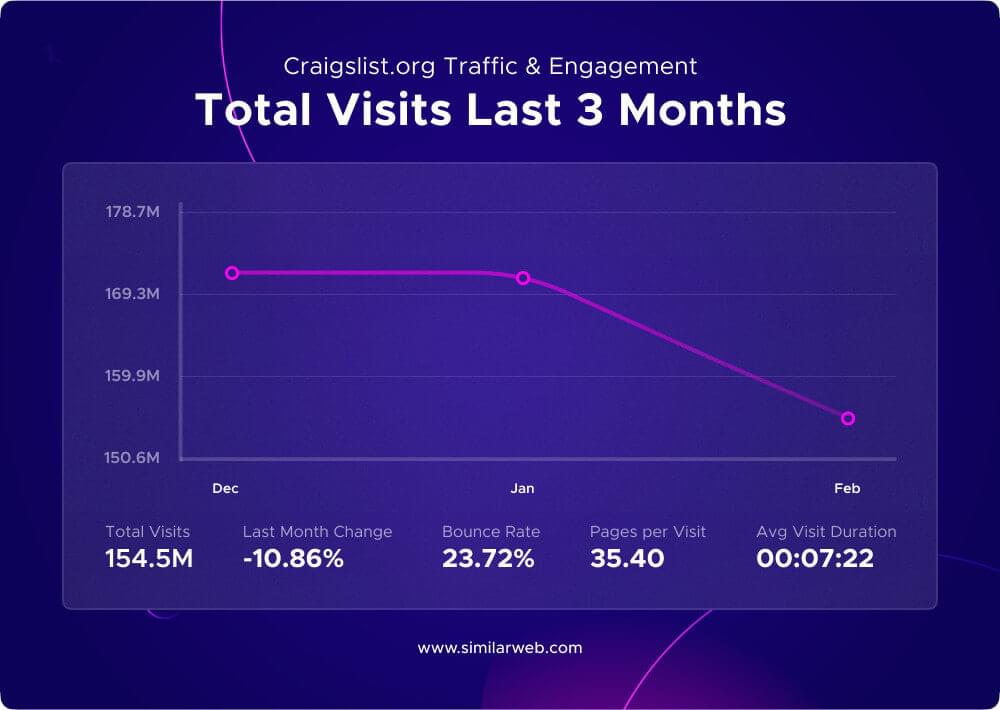

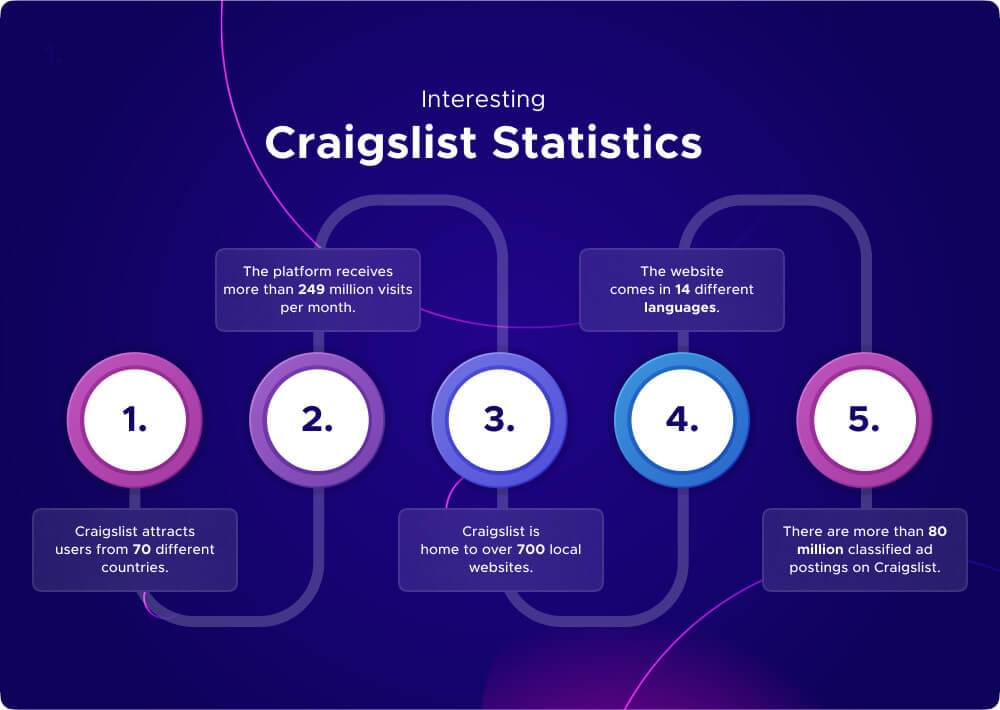

In this guide, we will show you how to scrape Craigslist. Launched in 1995 by Craig Newmark, Craigslist started as an email list for sharing events happening in the San Francisco Bay Area. By 1996, it had evolved into a website, and it has since grown into the largest classifieds platform in the United States. It currently serves users in 70 countries and receives more than 20 billion page views every month.

Every month, Craigslist receives over 80 million classified advertisements in a variety of categories, including employment, housing, items for sale, services, and community events. Although it started in the United States and Canada, Craigslist quickly went global. Today, it’s a popular place to search for everything from jobs and housing to services and local activities.

In this blog, we will show you how to use JavaScript and the Crawlbase Crawling API to scrape product listings from Craigslist. You’ll learn how to scrape essential data like product prices, titles, locations and URLs without encountering any blocks or restrictions.

Table of Contents

- Install Necessary Libraries

- Setup the Project

- Extract Craigslist HTML data from Search Listings

- Scrape Craigslist Product Title in JSON

- Scrape Craigslist Product Price

- Scrape Craigslist Product Location

- Scrape Craigslist Product URL

- Finally, Complete the code

- Save Craigslist Data to CSV File

1. Install Necessary Libraries

Make sure you have Node.js installed on your computer to run JavaScript for web scraping. You should also know the basics of JavaScript, such as variables, functions, loops, and simple web page manipulation, as they are essential for our scraping script. Beginners can start with tutorials on websites like Mozilla Developer Network (MDN) or W3Schools.

To efficiently scrape data from Craigslist, you will need an API token from Crawlbase. Create a free account by signing up on their website, go to your account settings, and find your API tokens. These tokens work like keys, granting access to the features of Crawling API, which makes data scraping smooth and efficient.
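Because the token works like a key, it is usually safer to keep it out of your source file. The sketch below reads it from an environment variable; the variable name CRAWLBASE_TOKEN and the helper name getCrawlbaseToken are our own choices for this example, not something Crawlbase requires.

```javascript
// Read the Crawlbase token from the environment instead of hard-coding it.
// CRAWLBASE_TOKEN is a name picked for this example (an assumption).
function getCrawlbaseToken(env = process.env) {
  const token = (env.CRAWLBASE_TOKEN || '').trim();
  if (!token) {
    throw new Error('Set the CRAWLBASE_TOKEN environment variable first.');
  }
  return token;
}

// Usage from a terminal:
//   CRAWLBASE_TOKEN=your_token node index.js
// and inside index.js:
//   const crawlbaseToken = getCrawlbaseToken();
```

Passing the environment in as a parameter keeps the helper easy to try out with a plain object.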

2. Setup the Project

Step 1: Create a New Project Folder:

Open your terminal and type mkdir craigslist-scraper to create a new folder for your project.

    mkdir craigslist-scraper

Step 2: Navigate to the Project Folder:

Enter cd craigslist-scraper to move into the newly created folder, making it easier to manage your project files.

    cd craigslist-scraper

Step 3: Generate a JavaScript File:

Type touch index.js to create a new file named index.js within your project folder. You can choose a different name if you prefer.

    touch index.js

Step 4: Add the Crawlbase Package:

Install the Crawlbase Node library for your project by running npm install crawlbase in your terminal. This library simplifies connecting to the Crawlbase Crawling API, facilitating the scraping of Craigslist data.

    npm install crawlbase

Step 5: Install JSdom and Json2Csv:

Install jsdom for HTML parsing and json2csv for JSON to CSV conversion. The fs module used for file system interaction is built into Node.js, so it does not need to be installed. Together, these modules give your Craigslist data scraper file operations, DOM manipulation, and data format conversion.

    npm install jsdom json2csv

Once you’ve completed these steps, you’ll be ready to start building your Craigslist data scraper!

3. Extract Craigslist HTML data from Search Listings

Once you have obtained your API credentials and installed the Crawlbase Node.js library for web scraping, it’s time to begin working on the “index.js” file. Select the Craigslist search listing page from which you wish to scrape data. Within the “index.js” file, use the Crawlbase Crawling API to fetch your chosen Craigslist page and the fs library to save the returned HTML. Make sure to replace the placeholder URL in the code with the actual URL of the page you intend to scrape.

    const { CrawlingAPI } = require('crawlbase'),

Code Explanation:

This JavaScript code demonstrates how to use the Crawlbase Crawling API to fetch a page from a specific URL via an HTTP GET request, and how to use the fs library to save the result. Routing the request through the Crawling API is what makes large-scale web scraping practical. Now, let’s dissect the code further.

Required Libraries:

The script requires the following libraries:

- crawlbase: This library facilitates web scraping using the Crawlbase Crawling API.

- fs: This is the Node.js file system module used for file operations.

    const { CrawlingAPI } = require('crawlbase'),

Initialization:

- CrawlingAPI and fs are imported from their respective libraries.

- A token for accessing the Crawlbase API is provided as crawlbaseToken.

- An instance of CrawlingAPI is created with the provided token.

- The URL of the Craigslist search listing page to be scraped is stored in craigslistPageURL.

    const crawlbaseToken = 'YOUR_CRAWLBASE_TOKEN',

Crawling Process:

The api.get() method is called with the Craigslist page URL as an argument to initiate the scraping process.

- If the request is successful, the handleCrawlResponse function is invoked to handle the response.

- If there’s an error during the crawling process, the handleCrawlError function is invoked to handle the error.

    api.get(craigslistPageURL).then(handleCrawlResponse).catch(handleCrawlError);

Handling Response:

In the handleCrawlResponse function:

- It checks if the response status code is 200 (indicating a successful request).

- If successful, it writes the HTML response body to a file named “response.html” using fs.writeFileSync().

- A success message is logged to the console indicating that the HTML has been saved.

    function handleCrawlResponse(response) {

Error Handling:

In the handleCrawlError function:

- Any errors that occur during the crawling process are logged to the console for debugging purposes.

    function handleCrawlError(error) {
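The snippets in this section are abbreviated, so here is a minimal end-to-end sketch of the flow they describe: create the API client, request the page, save the HTML on a 200 response, and log any error. The search URL and the CRAWLBASE_TOKEN environment variable are assumptions made for this example, and the extra writeFile parameter is our own addition so the handler can be tried without touching the disk.

```javascript
const fs = require('fs');

// Assumptions for this sketch: the token comes from an environment variable,
// and the URL is only an example of a Craigslist search page.
const crawlbaseToken = process.env.CRAWLBASE_TOKEN || '';
const craigslistPageURL = 'https://sfbay.craigslist.org/search/sss?query=bicycle';

// Save the HTML body when the request succeeded; report any other status.
// writeFile defaults to fs.writeFileSync and can be replaced for a dry run.
function handleCrawlResponse(response, writeFile = fs.writeFileSync) {
  if (response.statusCode === 200) {
    writeFile('response.html', response.body);
    console.log('HTML saved to response.html');
    return true;
  }
  console.error(`Request failed with status code ${response.statusCode}`);
  return false;
}

// Log errors raised while crawling so they can be debugged.
function handleCrawlError(error) {
  console.error('Crawl failed:', error);
}

// Only contact the Crawling API when a token has been configured.
if (crawlbaseToken) {
  const { CrawlingAPI } = require('crawlbase'); // npm install crawlbase
  const api = new CrawlingAPI({ token: crawlbaseToken });
  api.get(craigslistPageURL).then(handleCrawlResponse).catch(handleCrawlError);
}
```

Run it with `CRAWLBASE_TOKEN=your_token node index.js`. Without a token the script defines the handlers and exits without making a request.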

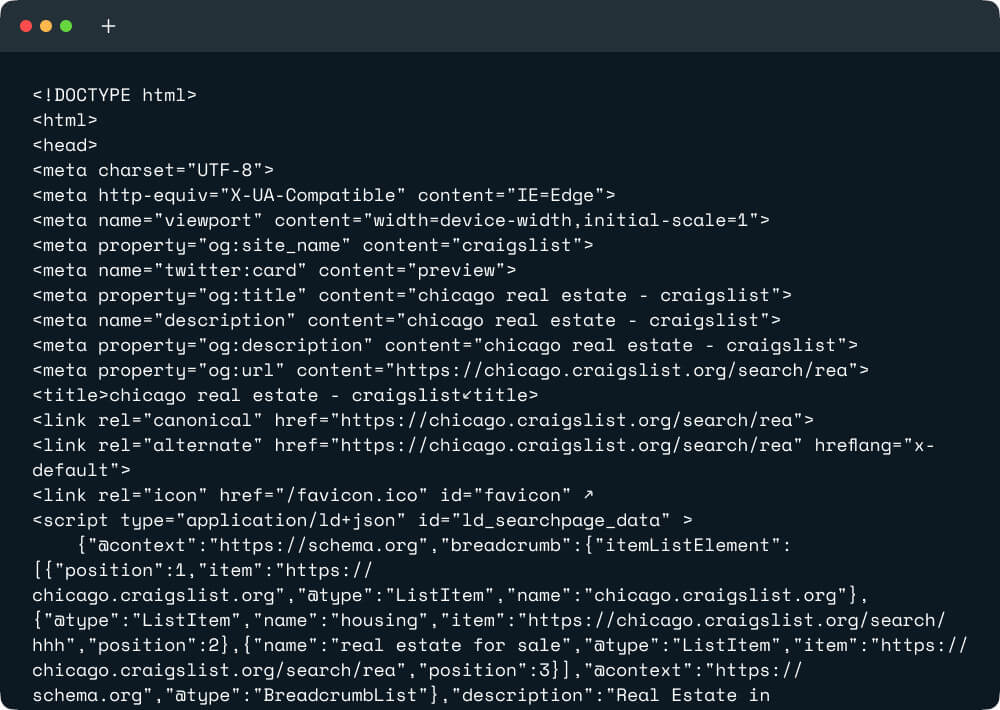

HTML Output:

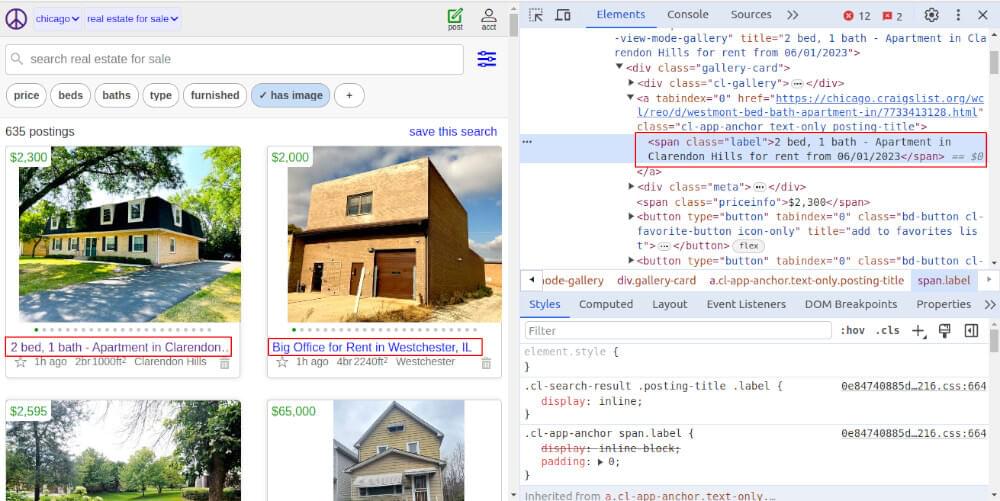

4. Scrape Craigslist Product Title in JSON

In this section, we will look at how to scrape valuable data from Craigslist search listing pages. The data we want to scrape includes product titles, prices, locations, and URLs. To complete this task, we’ll create a custom JavaScript scraper using two key libraries: jsdom, which is commonly used for parsing and manipulating HTML documents, and fs, which simplifies file operations. The script below will parse the HTML of the Craigslist search result page (which we saved to the response.html file in the previous example), scrape the relevant information, and organize it into a JSON array.

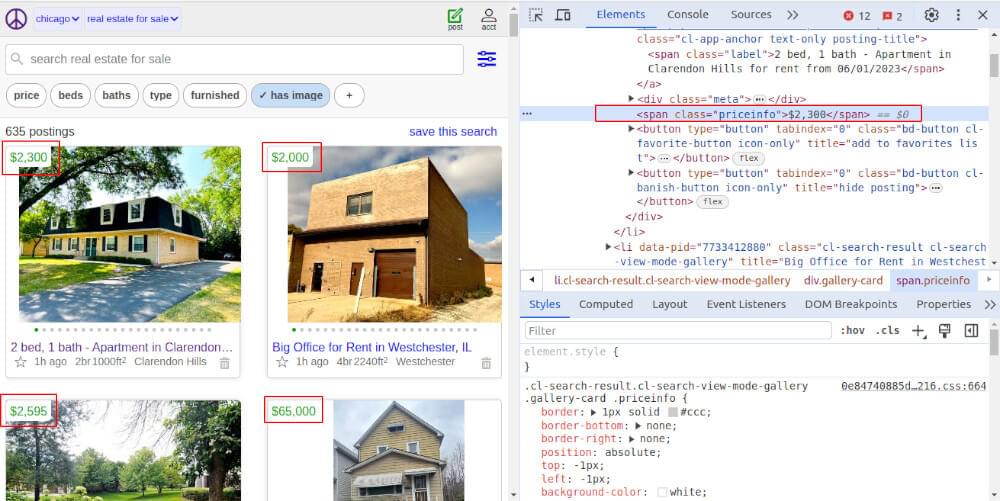

To scrape the product title, use your browser’s developer tools to find the element in which each listing is rendered. Identify the specific part of that element that contains the product title. After that, use jsdom selectors like .querySelector to target this element based on its class. Read the .textContent property to extract the text, and call .trim() to remove surrounding whitespace.

    const fs = require('fs'),
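As a small illustration of the querySelector, textContent, and trim() sequence described above, the sketch below wraps it in a helper that returns null when nothing matches. The helper names and the '.title' class are assumptions for this example; check the real class name in your browser’s developer tools.

```javascript
// Return the trimmed text of the first element matching `selector`
// inside `element`, or null when nothing matches.
function textOf(element, selector) {
  const node = element.querySelector(selector);
  return node ? node.textContent.trim() : null;
}

// '.title' is an assumed class name for the listing title.
function extractTitle(listingElement) {
  return textOf(listingElement, '.title');
}

// With jsdom, `listingElement` would be one of the elements returned by
// document.querySelectorAll(...) on the parsed response.html.
```

Returning null instead of throwing means one listing with unusual markup does not stop the whole run.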

5. Scrape Craigslist Product Price

In this section, we’ll look at how to scrape product prices from the crawled HTML of the Craigslist listing page.

    product.price = currentElement.querySelector('.price').textContent.trim();
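The one-liner above throws if a listing has no price element, and it keeps the price as text such as "$1,250". If you want numbers for later analysis, a guarded variant might look like the following sketch; parsePrice and extractPrice are hypothetical helper names, not part of jsdom.

```javascript
// Convert a price label such as "$1,250" into a number. Returns null when
// the text contains no usable digits (for example "free" or "").
function parsePrice(text) {
  if (typeof text !== 'string') return null;
  const digits = text.replace(/[^0-9.]/g, '');
  if (digits === '' || digits === '.') return null;
  const value = Number(digits);
  return Number.isNaN(value) ? null : value;
}

// Guarded lookup: a listing without a '.price' element yields null
// instead of raising a TypeError.
function extractPrice(listingElement) {
  const node = listingElement.querySelector('.price');
  return node ? parsePrice(node.textContent.trim()) : null;
}
```

Whether to store the raw label or the parsed number depends on what you plan to do with the data; the CSV step works with either.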

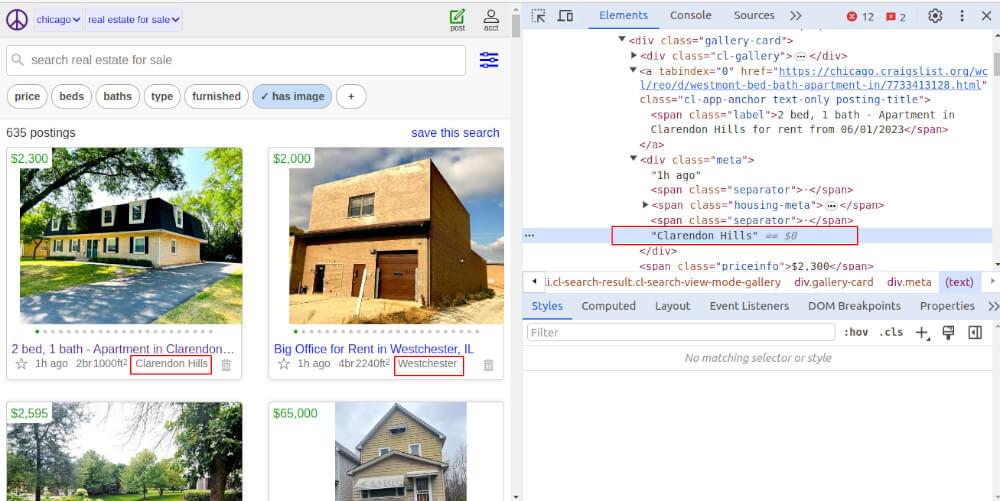

6. Scrape Craigslist Product Location

The product location tells you where an item is being offered, which matters for regional market analysis and other commercial purposes. To scrape it, we’ll use the jsdom package to parse the HTML and find the required element.

    product.location = currentElement.querySelector('.location').textContent.trim();
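Some listings may leave the location empty or omit the element entirely. A defensive variant of the lookup, sketched below under that assumption, collapses stray whitespace and returns null when no location text is present. The function name extractLocation is our own.

```javascript
// Read the '.location' text, collapse runs of whitespace into single
// spaces, and return null when the element is missing or empty.
function extractLocation(listingElement) {
  const node = listingElement.querySelector('.location');
  const text = node ? node.textContent.replace(/\s+/g, ' ').trim() : '';
  return text === '' ? null : text;
}
```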

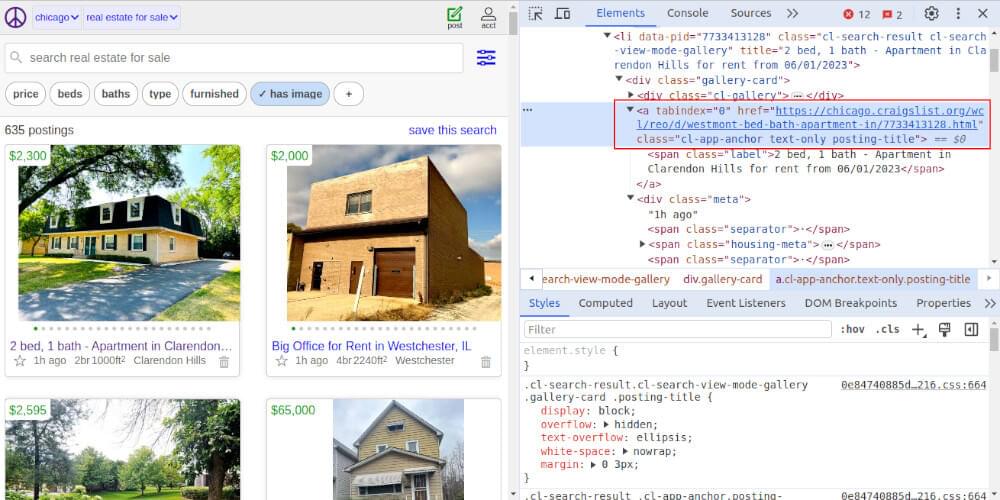

7. Scrape Craigslist Product URL

As in the earlier steps, we’ll use the jsdom package to parse the HTML content. The listing URL is stored in the href attribute of the listing’s anchor element.

    product.url = currentElement.querySelector('a').getAttribute('href');
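An href can be relative to the page it was found on. If that happens in your data, Node’s built-in URL class can resolve it against the search page address. The helper name and the example URLs below are illustrative assumptions.

```javascript
// Resolve a listing href against the URL of the search page so that
// relative links become absolute. Returns null for a missing or
// unparsable href.
function resolveListingUrl(href, pageUrl) {
  if (!href) return null;
  try {
    return new URL(href, pageUrl).href;
  } catch {
    return null;
  }
}

// Example (hypothetical paths):
// resolveListingUrl('/sfc/bik/d/road-bike/123.html',
//                   'https://sfbay.craigslist.org/search/sss');
```

Absolute links pass through unchanged, so the helper is safe to apply to every listing.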

8. Finally, Complete the code

    const fs = require('fs'),

JSON Response:

    [
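Putting sections 4 to 7 together, the parsing step can be sketched roughly as follows. This is an illustration rather than the exact script used for this guide: the listing selector 'li.cl-static-search-result' and the '.title' class are assumptions about the saved HTML, so confirm both in your browser’s developer tools before relying on them.

```javascript
const fs = require('fs');

// Trimmed text of the first match inside `element`, or null if absent.
function textOf(element, selector) {
  const node = element.querySelector(selector);
  return node ? node.textContent.trim() : null;
}

// Build one product record per listing element found in `document`.
// The default listing selector and '.title' are assumed, not confirmed.
function extractProducts(document, listingSelector = 'li.cl-static-search-result') {
  const products = [];
  document.querySelectorAll(listingSelector).forEach((currentElement) => {
    const link = currentElement.querySelector('a');
    products.push({
      title: textOf(currentElement, '.title'),
      price: textOf(currentElement, '.price'),
      location: textOf(currentElement, '.location'),
      url: link ? link.getAttribute('href') : null,
    });
  });
  return products;
}

// Parse response.html (saved in section 3) when it is present.
if (fs.existsSync('response.html')) {
  const { JSDOM } = require('jsdom'); // npm install jsdom
  const html = fs.readFileSync('response.html', 'utf8');
  const { document } = new JSDOM(html).window;
  const products = extractProducts(document);
  fs.writeFileSync('products.json', JSON.stringify(products, null, 2));
  console.log(`Saved ${products.length} products to products.json`);
}
```

Keeping extractProducts separate from the file handling makes it easy to point the same function at a different page or selector.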

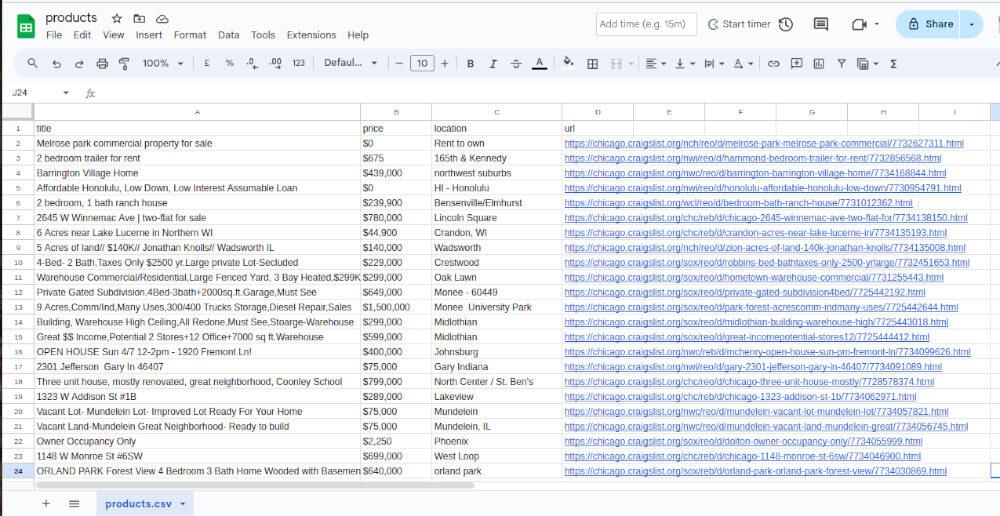

9. Save Craigslist Data to CSV File

Before running the code below, make sure you have installed the json2csv package by running npm install json2csv. This script reads the products.json file that you created in the previous step, specifies the fields to include in the CSV, converts the JSON data to CSV format, and then writes the CSV data to a file named products.csv.

    const fs = require('fs'),
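To make the conversion itself less of a black box, here is a dependency-free sketch of what a JSON to CSV step does: write a header row, then one line per record, quoting values that contain commas, quotes, or line breaks. This is a hand-written stand-in for illustration, not json2csv’s implementation, and json2csv’s exact quoting may differ. The function names are our own.

```javascript
// Quote a value when it contains a comma, a double quote, or a line
// break, doubling any embedded double quotes. null/undefined become ''.
function escapeCsvValue(value) {
  const text = value === null || value === undefined ? '' : String(value);
  return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
}

// Turn an array of objects into CSV text with a header row.
function toCsv(rows, fields) {
  const lines = [fields.join(',')];
  for (const row of rows) {
    lines.push(fields.map((field) => escapeCsvValue(row[field])).join(','));
  }
  return lines.join('\n');
}

// Usage, assuming products.json from the previous step exists:
// const fs = require('fs');
// const products = JSON.parse(fs.readFileSync('products.json', 'utf8'));
// fs.writeFileSync('products.csv', toCsv(products, ['title', 'price', 'location', 'url']));
```

For real projects, a maintained library such as json2csv is usually the better choice because it also covers nested fields and other edge cases.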

Snippet of the output CSV file:

Scraping Craigslist data is useful for studying the market, identifying possible leads, analyzing competitors, and collecting datasets. However, Craigslist uses strict security measures such as IP bans and CAPTCHA screens, which make scraping difficult. The Crawlbase Crawling API addresses this issue by allowing you to scrape Craigslist’s public listings at scale without running into IP bans or CAPTCHA challenges. This guide demonstrated how to scrape product listing data from Craigslist and store it as JSON and CSV files.

Check out our additional guides for similar methods on Trulia, Realtor.com, Zillow, and Target.com. These resources are valuable for improving your data scraping abilities across different platforms.

Extra Guides:

How to Scrape Websites with ChatGPT

How to Build Wayfair Price Tracker

How to Scrape TripAdvisor using Smart Proxy

10. Frequently Asked Questions

To conclude this guide, let’s address common FAQs about scraping Craigslist data.

What is the history of Craigslist?

Craig Newmark founded Craigslist back in 1995. It started as an email list to share local events and classified advertising in San Francisco. By 1996, it had evolved into a website that developed quickly to become a top classifieds destination worldwide. Even after it started making money, it remained focused on helping individuals, charging minor fees for job and apartment listings. It extended to other countries, including Canada and the United Kingdom. Craigslist maintains its popularity despite a few issues by keeping things straightforward and supporting local communities especially with Craig Newmark’s charitable contributions.

Does Craigslist allow scraping?

Scraping publicly available information is generally legal, but laws vary by jurisdiction, so professional legal counsel is advisable before starting any Craigslist scraping project. Craigslist prohibits automated scraping, while manual access for personal use is allowed. Unauthorized scraping may lead to access blocks or legal consequences. Copyright and privacy are two further ethical and legal considerations, and how they apply also depends on the jurisdiction. To understand more about scraping, read the “Is Web Scraping Legal?” article.

Does Craigslist have an official API?

Craigslist does not provide an official API for accessing its data. While some sections offer RSS feeds for limited data access, there is no comprehensive API available. As a result, developers often resort to web scraping techniques to scrape data from Craigslist.

How to avoid Craigslist CAPTCHAs?

To avoid Craigslist CAPTCHAs, consider using a reliable API like the Crawlbase Crawling API. This API protects web crawlers from blocked requests, proxy failures, IP leaks, browser crashes, and CAPTCHAs. Crawlbase’s artificial intelligence algorithms and engineering team continually optimize these techniques to offer the best crawling experience. With a tool like this, users can get past CAPTCHAs and collect data from Craigslist and other websites without delays or access limitations.

Why Scrape Craigslist?

Craigslist is a helpful resource that offers a wide variety of postings in several categories, including employment, real estate, services, and products for sale. Craigslist holds a lot of information, but security features like IP filtering and CAPTCHA challenges make it difficult to scrape. The Crawlbase Crawling API helps overcome these challenges, giving businesses and researchers access to valuable data for various purposes.

Here’s how scraping Craigslist with Crawlbase can be beneficial:

- Market Research and Competitive Analysis: Companies can learn about pricing trends, consumer demand, and competitor strategies by scraping Craigslist listings. Understanding market trends, spotting gaps, and making wise decisions to keep ahead of the competition are all made easier by analyzing this data.

- Lead Generation: Craigslist scraping facilitates lead generation by gathering contact details from potential consumers or clients. This includes phone numbers and email addresses, which can be used for targeted marketing campaigns and sales outreach activities.

- Real Estate Insights: Craigslist scraping offers real estate experts and property investors valuable insight into the housing market. Tracking rental prices, property listings, and area trends allows them to make more informed investment decisions and adjust pricing tactics in response to market conditions.

- Job Market Analysis: Job seekers and employers can leverage Craigslist scraping to access real-time data on job postings. Analyzing job trends, skill requirements, and salary expectations enables job seekers to tailor their resumes and helps employers optimize recruitment strategies.

- E-Commerce Optimization: Craigslist scraping is a useful tool for e-commerce companies to track competitor prices, spot product trends, and improve their own listings. By using data-driven strategies to provide relevant products at competitive pricing, businesses can stay competitive in the online marketplace.