Crawling websites is not easy, especially once you are sending thousands or millions of requests. Your server will start to struggle, and sooner or later it will get blocked.

As you probably know, Crawlbase can help you avoid this situation, but that is not the focus of this article. Instead, we are going to look at how you can easily scrape and crawl any website.

This is a hands-on tutorial, so if you want to follow along, make sure that you have a working Crawlbase account. It’s free, so go ahead and create one.

Extracting the URL

The first thing you will notice when registering with Crawlbase is that there is no fancy interface where you add the URLs you want to crawl. That is deliberate: we want you to have complete freedom, so we created an API that you can call.

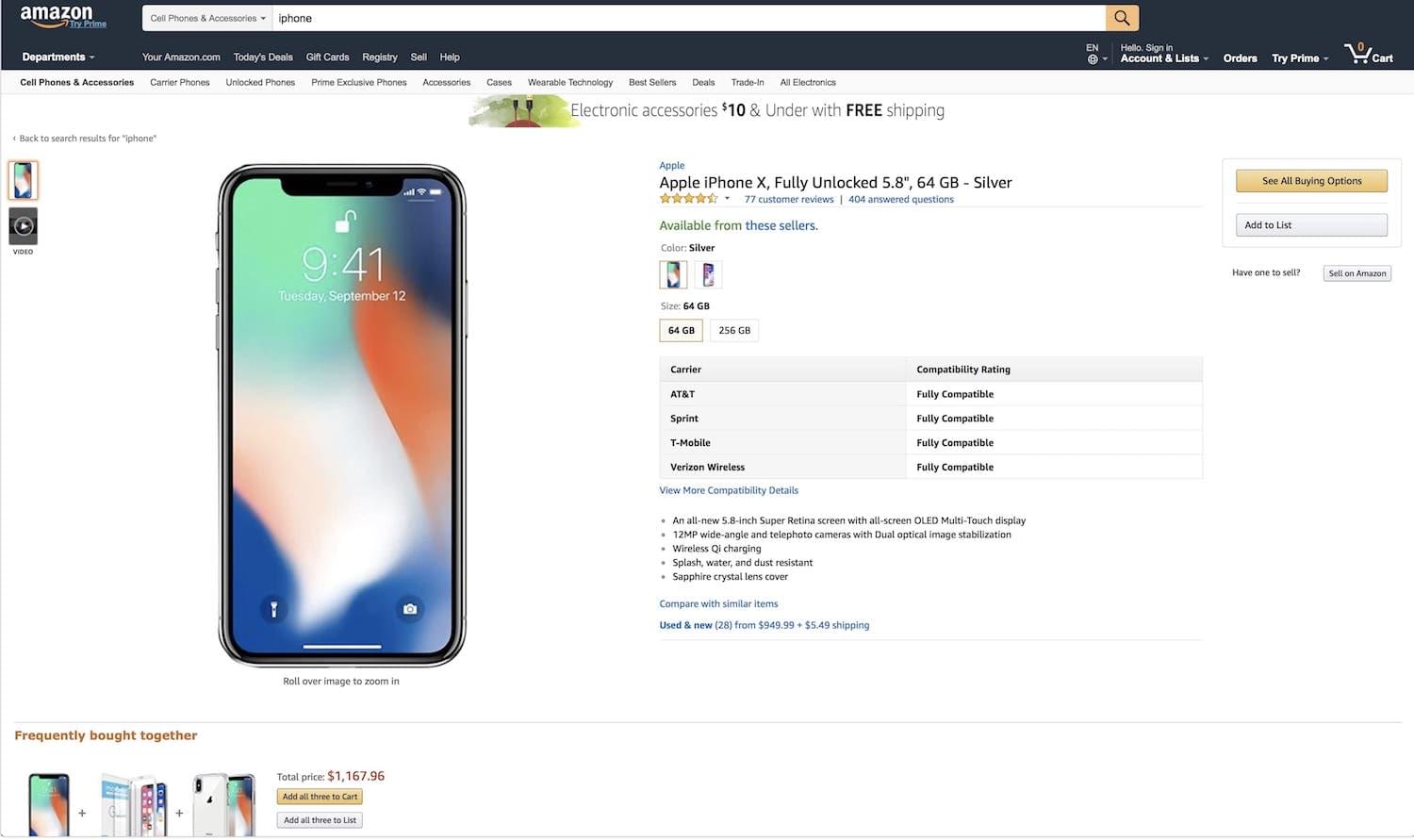

So let’s say we want to crawl and scrape the information for the iPhone X on Amazon.com. At the time of writing, the product URL is: https://www.amazon.com/Apple-iPhone-Fully-Unlocked-5-8/dp/B075QN8NDH/ref=sr_1_6?s=wireless&ie=UTF8&sr=1-6

How can we crawl Amazon securely with Crawlbase?

First, we go to the My Account page, where we will find two tokens: the regular token and the JavaScript token.

Amazon’s website is not generated with JavaScript; that is, its pages are not rendered on the client side the way some sites built with React or Vue are. Therefore, we will use the regular token.

For this tutorial, we will use the following demo token: caA53amvjJ24. If you are following along, make sure to get your own from the My Account page.

The Amazon URL has some special characters, so we have to make sure that we encode it properly. For example, if we are using Ruby, we could do the following:

    require 'cgi'

This will return the following:

    https%3A%2F%2Fwww.amazon.com%2FApple-iPhone-Fully-Unlocked-5-8%2Fdp%2FB075QN8NDH%2Fref%3Dsr_1_6%3Fs%3Dwireless%26ie%3DUTF8%26sr%3D1-6
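The Ruby listing above is cut off after its first line in this copy. As a minimal sketch, assuming the standard library's `CGI.escape` is the call that produced the encoded string shown, the encoding step could look like this (the variable names are illustrative):

```ruby
require 'cgi'

# Product URL from the tutorial text.
product_url = 'https://www.amazon.com/Apple-iPhone-Fully-Unlocked-5-8/dp/B075QN8NDH/ref=sr_1_6?s=wireless&ie=UTF8&sr=1-6'

# CGI.escape percent-encodes reserved characters such as ":", "/", "?",
# "=" and "&", so the whole URL can be passed as a single query value.
encoded_url = CGI.escape(product_url)
puts encoded_url
```

Encoding matters here because the product URL carries its own `?` and `&` characters, which would otherwise be read as part of the API request's query string.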

Great! We have our URL ready to be scraped with Crawlbase.

Scraping the content

The next thing that we have to do is to make the actual request.

The Crawlbase API will help us with that. We just have to make a request to the following URL: https://api.crawlbase.com/?token=YOUR_TOKEN&url=THE_URL

So we just have to replace YOUR_TOKEN with our token (which is caA53amvjJ24 for demo purposes) and THE_URL with the URL we just encoded.

Let’s do it in Ruby!

    require 'net/http'
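The request listing above is also truncated in this copy. Here is a minimal sketch of the call using Ruby's standard `Net::HTTP`, with the endpoint and the `token` and `url` parameters taken from the text. The helper names `crawlbase_uri` and `fetch_html` are illustrative and not part of any Crawlbase library:

```ruby
require 'net/http'
require 'uri'

API_ENDPOINT = 'https://api.crawlbase.com/'

# Builds the API request URI. URI.encode_www_form percent-encodes the
# target URL itself, so the URL is passed in unencoded here.
def crawlbase_uri(token, target_url)
  uri = URI(API_ENDPOINT)
  uri.query = URI.encode_www_form(token: token, url: target_url)
  uri
end

# Performs the GET request and returns the response body (the page HTML).
# Raises if the API does not answer with a 2xx status.
def fetch_html(token, target_url)
  response = Net::HTTP.get_response(crawlbase_uri(token, target_url))
  unless response.is_a?(Net::HTTPSuccess)
    raise "Request failed with status #{response.code}"
  end
  response.body
end
```

With your own token, `html = fetch_html('YOUR_TOKEN', product_url)` would perform the request described above. Nothing is sent until `fetch_html` is called.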

Done. We have made our first request to Amazon via Crawlbase. Secure, anonymous and without getting blocked!

Now we should have the HTML from Amazon back. It should look something like this:

    <!doctype html><html class="a-no-js" data-19ax5a9jf="dingo"><!-- sp:feature:head-start -->

Scraping website content

Now only one part is missing: extracting the actual content.

This can be done in a million different ways, and it always depends on the language you are programming in. We always suggest using one of the many libraries that are available.

Here are some resources that can help you scrape the returned HTML:

- Scraping with Ruby

- Scraping with Node

- Scraping with Python
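As a dependency-free illustration of the extraction step, the sketch below pulls the page title out of returned HTML with a regular expression. This is a simplification chosen to avoid extra gems. For real pages, a proper HTML parser (Nokogiri is a common choice in Ruby) is more robust. The `extract_title` helper and the sample HTML are hypothetical:

```ruby
require 'cgi'

# Returns the text of the first <title> element, or nil if there is none.
def extract_title(html)
  match = html.match(%r{<title[^>]*>(.*?)</title>}mi)
  return nil unless match

  # Collapse runs of whitespace, then decode entities such as &amp;
  CGI.unescapeHTML(match[1].gsub(/\s+/, ' ').strip)
end

# Sample document standing in for the HTML returned by the API.
sample_html = <<~HTML
  <!doctype html>
  <html><head><title>
    Apple iPhone X &amp; Accessories
  </title></head><body></body></html>
HTML

puts extract_title(sample_html)
```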

Overview of the Crawlbase API Features and Functionalities

The Crawlbase API is an exceptional tool designed to streamline the process of crawling a website. It provides users with comprehensive features to simplify and enhance website crawling.

Strong Crawling Capabilities

The API’s strength lies in the variety of data it can retrieve when crawling a website:

- Textual Data Extraction: Extracts textual content, including articles, descriptions, and any text-based information available on web pages.

- Image Extraction: Retrieves images present on web pages, offering access to visuals or graphical content.

- Link Collection: Gathers links present on web pages, facilitating navigation and access to related content.
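To make link and image collection concrete, here is a small client-side sketch that works on HTML you have already fetched. It uses a regular expression for brevity, which is an assumption of this example and not how the API itself extracts data. `collect_urls`, the sample markup, and the base URL are hypothetical:

```ruby
require 'uri'

# Collects the values of `attribute` on `tag` elements and resolves them
# against the page URL. Regex matching is a simplification; a real HTML
# parser handles unquoted attributes and malformed markup better.
def collect_urls(html, base_url, tag, attribute)
  pattern = /<#{Regexp.escape(tag)}\b[^>]*?\b#{Regexp.escape(attribute)}\s*=\s*["']([^"']+)["']/i
  urls = html.scan(pattern).flatten.filter_map do |ref|
    URI.join(base_url, ref).to_s
  rescue URI::InvalidURIError
    nil # skip references that are not valid URIs
  end
  urls.uniq
end

sample_html = <<~HTML
  <a href="/dp/B075QN8NDH">iPhone X</a>
  <a href="https://example.com/help">Help</a>
  <img src="images/phone.png" alt="phone">
HTML

base = 'https://www.example.com/shop/'
links  = collect_urls(sample_html, base, 'a', 'href')
images = collect_urls(sample_html, base, 'img', 'src')
```

Resolving against the page URL turns relative references such as `/dp/B075QN8NDH` into absolute URLs that can be crawled next.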

Customizable Configuration

One of its notable aspects is the flexibility it offers in customizing the crawling process to your specific requirements:

- Crawl Depth Adjustment: Allows users to set the depth of the crawl, defining how many levels deep the API should crawl into a website.

- Crawl Frequency Control: Provides options to adjust the frequency of crawls, ensuring timely and periodic updates as needed.

- Data Type Selection: Enables users to specify the types of data to extract, ensuring precise extraction based on user preferences.
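The text does not say how crawl depth is configured, so the following is a client-side sketch of the idea only: a breadth-first crawl that stops following links past a given depth. The `crawl` function and its `fetch_links` argument are hypothetical. `fetch_links` is any callable that returns the links found on a page, which in practice would wrap an API request plus link extraction:

```ruby
# Breadth-first crawl that stops following links beyond max_depth.
# fetch_links: callable taking a URL and returning an array of linked URLs.
# Returns a hash of visited URL => depth at which it was first found.
def crawl(start_url, max_depth, fetch_links)
  visited = { start_url => 0 }
  queue = [start_url]

  until queue.empty?
    url = queue.shift
    depth = visited[url]
    next if depth >= max_depth # do not expand pages at the depth limit

    fetch_links.call(url).each do |link|
      next if visited.key?(link) # already scheduled or crawled

      visited[link] = depth + 1
      queue << link
    end
  end

  visited
end

# In-memory stand-in for a website, so the sketch runs without network access.
site = {
  'home' => %w[a b],
  'a'    => %w[c],
  'b'    => %w[home],
  'c'    => %w[d]
}
result = crawl('home', 2, ->(url) { site.fetch(url, []) })
```

With a depth of 2, the page `d` is never reached because `c` sits at the depth limit and is not expanded.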

Structured Data Retrieval

The Crawlbase API can return crawled data as organized, structured output:

- Formatted Output: Provides data in structured formats like JSON or XML, easing integration into various applications or systems.

- Data Organization: Ensures data retrieval in an organized manner, making it easily interpretable and ready for integration into your workflow.
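To illustrate why structured output eases integration, this sketch parses a JSON payload with Ruby's standard `json` library. The payload shape and its field names (`url`, `status`, `body`) are made up for the example and are not taken from the Crawlbase documentation, so check the API reference for the actual response format:

```ruby
require 'json'

# Hypothetical JSON payload; the field names are assumptions for illustration.
payload = <<~JSON
  {
    "url": "https://www.example.com/product/1",
    "status": 200,
    "body": "<html><head><title>Example</title></head></html>"
  }
JSON

# symbolize_names gives symbol keys, which read naturally in Ruby code.
page = JSON.parse(payload, symbolize_names: true)

if page[:status] == 200
  puts "Fetched #{page[:url]} (#{page[:body].bytesize} bytes)"
else
  puts "Crawl of #{page[:url]} failed with status #{page[:status]}"
end
```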

Additional Features

- Error Handling: Offers robust error handling mechanisms, providing detailed reports on failed crawls or inaccessible data.

- Secure Authentication: Ensures secure access to the API through authentication protocols, maintaining data privacy and integrity.

The Crawlbase API’s multifaceted capabilities serve as a powerful resource for businesses and developers, enabling efficient and structured extraction of diverse data types from websites.

Benefits of Using the Crawlbase API for Website Crawling

The Crawlbase API presents a range of advantages that significantly enhance the website crawling process:

1. Efficiency and Speed

The API’s streamlined approach accelerates the data extraction process, swiftly gathering information from web pages. It employs efficient algorithms, ensuring optimal performance and faster retrieval of data.

2. Data Accuracy and Reliability

It guarantees accurate and reliable data extraction, minimizing errors and inaccuracies in the gathered information. Moreover, it ensures comprehensive data capture, covering various types of content available on websites.

3. Scalability and Flexibility

The Crawlbase API scales from individual websites to extensive networks of sites. It also offers flexibility in customizing the crawling process to meet unique data extraction requirements.

4. Customization for Tailored Solutions

The Crawlbase API offers customizable settings, allowing users to crawl a website according to specific data requirements. It also enables selective data extraction, so that only pertinent, targeted information is retrieved.

5. Improved Decision-Making and Insights

It facilitates access to comprehensive data sets, supporting better decision-making based on the insights gathered. The data collected by crawling websites can also be used for market analysis, trend identification, and business intelligence.

The Crawlbase API improves your website crawling by providing a streamlined, reliable, and customizable approach to data extraction, equipping you with valuable insights and information for informed decision-making.

Advanced Techniques with the Crawlbase API

The Crawlbase API offers advanced techniques that can optimize the process of crawling websites and cater to diverse use cases:

- Parallel Crawling: Utilize parallel crawling techniques to simultaneously extract data from multiple web pages, enhancing the speed and efficiency of the crawling process.

- Incremental Crawling: Implement incremental crawling strategies to identify and extract only new or updated content, reducing redundancy and optimizing data retrieval.

- Dynamic Loading Handling: Employ methods to handle dynamic loading of content on websites, ensuring comprehensive data crawling despite dynamically generated elements.

- Customized Selectors: Use customized selectors to precisely target and extract specific elements from web pages, allowing focused data crawling.
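Parallel crawling, the first technique in the list above, can be sketched on the client side with a fixed pool of worker threads pulling URLs from a shared queue. This is one possible approach and not a description of how the API works internally. `crawl_in_parallel` and its `fetch` argument are hypothetical, and the demo uses a stand-in fetcher so that it runs offline:

```ruby
# Fetches all URLs using a fixed number of worker threads.
# fetch: callable taking a URL and returning the page content.
# Returns a hash of URL => content.
def crawl_in_parallel(urls, worker_count, fetch)
  jobs = Queue.new
  urls.each { |url| jobs << url }
  jobs.close # once drained, pop returns nil and the workers stop

  results = {}
  lock = Mutex.new

  workers = Array.new(worker_count) do
    Thread.new do
      while (url = jobs.pop)
        content = fetch.call(url)
        lock.synchronize { results[url] = content } # guard the shared hash
      end
    end
  end

  workers.each(&:join)
  results
end

urls = %w[https://example.com/1 https://example.com/2 https://example.com/3]
# Stand-in fetcher; a real one would request each URL through the API.
pages = crawl_in_parallel(urls, 2, ->(url) { "content of #{url}" })
```

Threads suit this workload because the workers spend most of their time waiting on network responses. Keeping the pool small also limits how many requests are in flight at once.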

Versatile Use Cases of the Crawlbase API

The Crawlbase API has applications that extend beyond the simple process of crawling websites. Its capabilities go past conventional web crawling, serving a spectrum of data-related needs across diverse industries.

Let’s explore the multifaceted use cases wherein the Crawlbase API becomes a valuable tool, offering applications across various domains and businesses:

- Market Research: Conduct extensive market research by crawling websites for product information, pricing data, and consumer reviews.

- Competitor Analysis: Gather data about competitors by crawling and analyzing their websites to identify trends and strategies.

- Content Aggregation: Aggregate content from diverse sources such as news websites or blogs, creating comprehensive databases or curated feeds.

- SEO: Crawl a website to extract data for search engine optimization, including backlink analysis, keyword research, and website performance metrics, to improve search rankings.

- Business Intelligence: Crawl websites to collect industry-specific data such as financial information, company profiles, or industry trends for informed decision-making.

The Crawlbase API’s advanced techniques help you optimize website crawling and cater to various use cases, from market research and competitor analysis to content aggregation and SEO.

Strategies for Efficient Crawling using the Crawlbase API

Getting the most out of the Crawlbase API takes a few smart strategies. These techniques help you crawl the most valuable and relevant data efficiently:

- Optimize Your Queries: Refine your queries by specifying the exact data needed, which reduces unnecessary load and time.

- Leverage Crawl Scheduling: Use the API’s scheduling feature to execute crawls during off-peak hours, ensuring smooth performance.

- Implement Incremental Crawling: Focus on new or modified data by employing incremental crawling techniques, reducing redundant data collection.

- Utilize Custom Extractors: Use custom features while crawling a website to precisely target and retrieve specific data points, enhancing efficiency and accuracy.

- Handle Rate Limiting Gracefully: Strategically manage rate limits by ensuring a steady crawl pace, preventing disruptions due to excessive requests.
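One simple way to approach the incremental crawling strategy above is to remember a digest of each page and process a page only when its digest changes. The sketch below assumes this content-hash approach and is not a built-in API feature. The `ChangeTracker` class is hypothetical and keeps its state in memory, whereas a real crawler would persist it:

```ruby
require 'digest'

# Remembers a SHA-256 digest per URL to detect new or modified pages.
class ChangeTracker
  def initialize
    @digests = {}
  end

  # Records the content's digest for the URL. Returns true if the page is
  # new or differs from the previously recorded version, false otherwise.
  def record(url, content)
    digest = Digest::SHA256.hexdigest(content)
    return false if @digests[url] == digest

    @digests[url] = digest
    true
  end
end

tracker = ChangeTracker.new
url = 'https://example.com/product'
first_seen = tracker.record(url, '<html>price: 10</html>') # new page
unchanged  = tracker.record(url, '<html>price: 10</html>') # same content
modified   = tracker.record(url, '<html>price: 12</html>') # content changed
```

Only pages for which `record` returns true need to be parsed and stored again, which avoids collecting the same data twice.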

Applying these strategies helps you optimize your crawls. When you follow them, the Crawlbase API can help you crawl a website smoothly and fetch the desired data efficiently.

Handling Diverse Challenges in the Crawling Process

Navigating the complexities that often arise during website crawling can be challenging, but with the right approach, you can overcome these hurdles seamlessly.

Here are some recommendations to handle various complexities:

- Dynamic Content: Employ dynamic rendering techniques to capture content loaded through JavaScript, ensuring comprehensive data extraction even from dynamic pages.

- CAPTCHA and Anti-Scraping Mechanisms: Use strategies such as proxies or CAPTCHA solvers to get past anti-scraping measures and keep the crawl running without interruption.

- Robust Error Handling: Develop a robust error-handling mechanism to manage connection timeouts, server errors, or interruptions, ensuring smoother crawling.

- Handling Complex Page Structures: Customize crawlers to interpret and navigate complex page structures, ensuring accurate data extraction from intricate layouts.

- Avoiding IP Blocking: Rotate IP addresses to prevent websites from blocking or restricting your crawler during crawling operations.
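For the error-handling recommendation above, a common pattern is to retry failed requests with exponential backoff. The sketch below is one way to do that in plain Ruby. The `with_retries` helper, its parameter names, and the default values are all assumptions of this example, and the demo simulates failures so that it runs without network access:

```ruby
# Runs the block, retrying on the listed error classes with exponential
# backoff. Re-raises the last error once max_attempts is reached.
def with_retries(max_attempts: 3, base_delay: 0.5, retry_on: [StandardError])
  attempt = 0
  begin
    attempt += 1
    yield attempt
  rescue *retry_on
    raise if attempt >= max_attempts

    sleep(base_delay * (2**(attempt - 1))) # delay doubles after each failure
    retry
  end
end

# Demo: the first two calls fail, the third succeeds.
calls = 0
result = with_retries(max_attempts: 4, base_delay: 0.01, retry_on: [IOError]) do
  calls += 1
  raise IOError, 'simulated timeout' if calls < 3

  'page content'
end
```

In a real crawler, `retry_on` might list classes such as `Net::OpenTimeout` and `Net::ReadTimeout`, so that only transient network failures are retried and genuine bugs still surface immediately.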

Bottom Line!

In this post, we have explored the capabilities of the Crawlbase API for crawling websites. It offers a dynamic and comprehensive approach to the task.

We have also covered various techniques and strategies for crawling a website efficiently. If you are just getting started, we have described the complexities you might face and the best ways to handle them. With all this information, you now have an idea of the importance and use cases of careful website crawling.

Data is the oxygen of modern businesses, so it is important to learn how to crawl websites to gather accurate and reliable data. The Crawlbase API, with its strong features, customizable solutions, and expert handling of complexities, is a vital tool for businesses, researchers, and data enthusiasts alike. Its agility and efficiency in extracting data and handling crawling challenges show its value for gaining useful data and staying ahead of the competition.

We hope you enjoyed this tutorial, and we hope to see you soon on Crawlbase. Happy crawling!