Web scraping is an efficient way to gather data from multiple websites quickly. You can do it in several ways: through cloud-based online services, through dedicated APIs, or by writing your own scraping code from scratch.

Web scraping is a method of automatically obtaining large amounts of information from websites. Most of this data is unstructured HTML that is converted into structured data in a file or database before being used in different applications.
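As a rough illustration of turning HTML into structured data, the sketch below parses a small fragment into rows and serializes them as CSV using only the Python standard library. The markup, the `product`/`name`/`price` class names, and the `ProductParser` helper are assumptions made up for this example; a real page needs its own parsing rules.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical markup standing in for a downloaded page.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Keyboard</span><span class="price">$49.99</span></li>
  <li class="product"><span class="name">Mouse</span><span class="price">$19.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects one {'name', 'price'} dict per <li class="product">."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._field = None  # field the parser is currently inside, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class", "")
        if tag == "li" and css_class == "product":
            self.rows.append({})  # start a new record
        elif tag == "span" and css_class in ("name", "price") and self.rows:
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self.rows[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def html_to_rows(html):
    """Parse the HTML and return a list of row dictionaries."""
    parser = ProductParser()
    parser.feed(html)
    return parser.rows


def rows_to_csv(rows):
    """Serialize the rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = html_to_rows(SAMPLE_HTML)
print(rows_to_csv(rows))
```

In practice a library such as Beautiful Soup usually replaces the hand-written parser; the standard-library version is used here only to keep the sketch free of dependencies.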

Web scraping with Python and Selenium can save you both time and effort because it automates browsing web pages for information. Web scrapers use HTML parsing techniques to extract data from standard internet pages, such as social media posts, news articles, product listings, or other content found on public-facing websites, typically to populate databases or generate reports. Web scraper applications are used by people in various industries, ranging from marketing research firms to small business owners who want more targeted advertising options.

Data acquired from websites like e-commerce portals, job portals, and social media platforms can be used to better understand customer buying trends, employee attrition behavior, customer attitudes, and so on. Beautiful Soup, Scrapy, and Selenium are the most prominent libraries and frameworks for web scraping with Python.

How to Scrape Data from Websites?

- Using Web Scraping Software: There are two types of web scraping software. The first is installed locally on your computer; the second is a cloud-based data extraction service such as Crawlbase, ParseHub, or OctoParse.

- By Writing Code or Hiring a Developer: You can get a developer to create custom data extraction software tailored to your needs. The developer can then use web scraping APIs or libraries. Apify.com, for example, makes it simple to obtain APIs for scraping data from any website. Beautiful Soup is a Python module that allows you to extract data from a web page’s HTML code.

How Selenium and Python Drive Web Scraping?

Python provides libraries catering to a wide range of tasks, including web scraping. Selenium, a suite of open-source projects, facilitates browser automation across different platforms. It’s compatible with various popular programming languages.

Initially designed for cross-browser testing, Selenium with Python has evolved to encompass creative applications like web scraping.

Selenium uses the WebDriver protocol to automate browsers such as Firefox, Chrome, and Safari. The automated browser can run locally, for example when testing a web page, or remotely, as is common in web scraping.

Why is Python a Great Web Scraping Programming Language?

Python is a high-level, general-purpose programming language widely used in web development, machine learning applications, and cutting-edge software technologies. Python is an excellent programming language for beginners and experienced programmers who have worked with other programming languages.

Python is the most widely used language for web scraping since it can easily handle most scraping procedures, and it offers several libraries designed explicitly for the task. Scrapy is a Python-based open-source web crawling framework with a large user base. Python is also great for getting data from APIs. Beautiful Soup is another Python library that is well suited to scraping. It generates a parse tree from a page's HTML, from which data can be extracted, and it supports navigating, searching, and modifying that tree.

On the other hand, web scraping can be tricky since some websites restrict scraping attempts or even ban your IP address. If you repeatedly send requests from the same or an untrusted IP address, you are likely to get blocked. Routing requests through a trusted pool of proxies mitigates the problem, because requests arrive from many different addresses and are far more likely to be accepted by the target website.
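The idea of spreading requests over a pool of proxies can be sketched as follows. This is a minimal illustration under stated assumptions: the proxy addresses are placeholders, `fetch_with_rotation` and `urllib_fetch` are hypothetical helper names, and a managed service would normally handle rotation for you.

```python
import itertools
import urllib.error
import urllib.request

# Hypothetical proxy addresses; replace with proxies you are allowed to use.
PROXIES = [
    "http://10.0.0.1:8080",
    "http://10.0.0.2:8080",
    "http://10.0.0.3:8080",
]


def urllib_fetch(url, proxy, timeout=10):
    """Fetch `url` through `proxy`; return (status_code, body)."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    opener = urllib.request.build_opener(handler)
    try:
        with opener.open(url, timeout=timeout) as resp:
            return resp.status, resp.read()
    except urllib.error.HTTPError as err:
        return err.code, b""  # e.g. 403 or 429 when the request is refused
    except urllib.error.URLError:
        return None, b""  # proxy unreachable or connection failed


def fetch_with_rotation(url, fetch=urllib_fetch, proxies=PROXIES, max_attempts=3):
    """Try `url` through successive proxies until one returns HTTP 200.

    `fetch(url, proxy)` must return (status_code, body). It is injectable
    so the rotation logic stays independent of the HTTP library in use.
    """
    pool = itertools.cycle(proxies)
    last_status = None
    for _ in range(max_attempts):
        proxy = next(pool)
        status, body = fetch(url, proxy)
        if status == 200:
            return proxy, body
        last_status = status
    raise RuntimeError(
        f"all {max_attempts} attempts failed (last status: {last_status})"
    )
```

Calling `fetch_with_rotation("https://example.com")` would try each placeholder proxy in turn; passing a different `fetch` function lets you reuse the same loop with another HTTP client.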

Without proxies, a standard scraper written in Python may not be adequate. To scrape relevant data reliably, you can use Crawlbase's Crawling API, which lets you scrape most websites without having to deal with banned requests or CAPTCHAs.

Setups and tools

The following are the requirements for our simple scraping tool:

- Crawlbase account

- Any IDE

- Python 3

- Crawlbase Python Library

- Selenium Framework

Scraping Websites with the Scraper API in Python

Let’s begin by downloading and installing the library we’ll be using for this task. On your console, type the command:

    pip install crawlbase

It’s time to start writing code now that everything is in place. To begin, import the Crawlbase API:

    from crawlbase import ScraperAPI

Then initialize the API with your authentication token:

    api = ScraperAPI({'token': 'USER_TOKEN'})

Next, choose the target URL of the website you want to scrape. We will be using Amazon as an example in this guide.

    targetURL = 'https://www.amazon.com/AMD-Ryzen-3800XT-16-Threads-Processor/dp/B089WCXZJC'

The following section of our code allows us to download the URL’s whole HTML source code and, if successful, display the output on your console or terminal:

    response = api.get(targetURL)

As you’ll see, Crawlbase responds to every request it receives. Our code shows the crawled HTML only if the status is 200 (success). Any other result, such as 503 or 404, indicates that the crawl was unsuccessful. The API routes requests through thousands of proxies around the world, which keeps the success rate high.
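The status check described above might look like the following sketch. It assumes the client's `get` call returns a dictionary with `status_code` and `body` keys; `crawl_html` is a hypothetical helper, and it accepts any object with a compatible `get` method so the logic can be tried without a token.

```python
def crawl_html(api, url):
    """Return the page HTML as text when the crawl succeeds, else None.

    `api` is any object whose get(url) returns a dict with 'status_code'
    and 'body' keys (the response shape assumed here for the Crawlbase
    client).
    """
    response = api.get(url)
    if response["status_code"] != 200:
        # Anything other than 200 (e.g. 404 or 503) counts as a failed crawl.
        print(f"Crawl failed with status {response['status_code']}")
        return None
    body = response["body"]
    # The body may arrive as bytes; decode so callers always receive str.
    if isinstance(body, bytes):
        return body.decode("utf-8", errors="replace")
    return body


# Usage, assuming `pip install crawlbase` and a valid token:
#   from crawlbase import CrawlingAPI
#   html = crawl_html(CrawlingAPI({'token': 'USER_TOKEN'}), targetURL)
```

Returning `None` on failure keeps the caller in charge of what happens next, such as retrying later or logging the URL.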

Simply include it in our GET request as a parameter. Our complete code should now look as follows:

    from crawlbase import CrawlingAPI

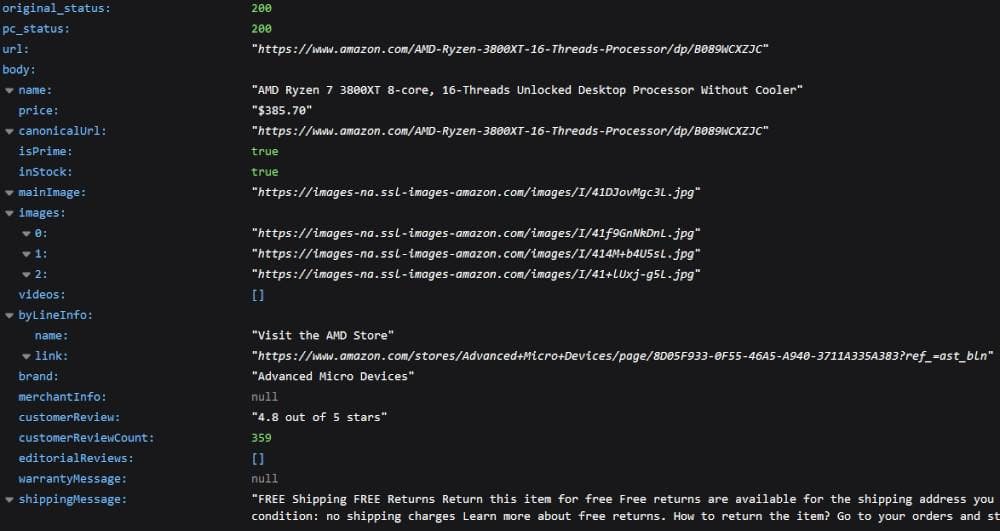

If everything goes properly, you should receive a response similar to the one below:

What is Selenium Web Scraping?

Selenium serves as a handy tool for automating web browsing tasks. It was created back in 2004 and initially focused on automating website and app testing across different browsers. However, Selenium has gained popularity as a web scraping tool over time. With Selenium, you can automate browser actions like clicking, typing, and scrolling. Plus, it supports multiple programming languages like Python, Java, and C#.

When you engage in Selenium web scraping, you’re essentially using Selenium in combination with Python to extract data from websites. This involves programmatically controlling a web browser to interact with websites just like a human user would.

Why Use Selenium for Web Scraping?

When you’re considering web scraping, Selenium offers some clear advantages over other methods:

- Dynamic Websites: If you’re dealing with websites that use a lot of JavaScript or other scripting languages to create dynamic content, Selenium can handle it. It’s great for scraping data from pages that change or update based on user interactions.

- User Interactions: Scraping with Selenium can mimic human interactions with a webpage, such as clicking buttons, filling out forms, and scrolling. This means you can scrape data from websites that require user input, like login forms or interactive elements.

- Debugging: With Selenium web scraping, you can run your scraping scripts in debug mode. This lets you step through each part of the scraping process and see exactly what’s happening at each step. It’s invaluable for troubleshooting and fixing issues when they arise.
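The interactions listed above (waiting for dynamic content, typing, scrolling) can be sketched roughly as follows. This is an illustration under stated assumptions, not a definitive implementation: `search_and_collect` and `collect_texts` are hypothetical helpers, the selectors are whatever your target page needs, and the Selenium imports sit inside the function only so the module can be loaded on a machine without Selenium installed.

```python
def collect_texts(elements):
    """Return the stripped, non-empty .text of each element."""
    return [text for text in (el.text.strip() for el in elements) if text]


def search_and_collect(driver, url, query, box_selector, result_selector, timeout=10):
    """Type `query` into a search box and return the text of the results.

    `driver` is an already created Selenium WebDriver. `box_selector` and
    `result_selector` are CSS selectors supplied by the caller.
    """
    from selenium.webdriver.common.by import By
    from selenium.webdriver.common.keys import Keys
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    driver.get(url)
    wait = WebDriverWait(driver, timeout)

    # User interaction: wait until the input is usable, then type and submit.
    box = wait.until(EC.element_to_be_clickable((By.CSS_SELECTOR, box_selector)))
    box.send_keys(query, Keys.ENTER)

    # Dynamic content: block until JavaScript has rendered the results.
    wait.until(
        EC.presence_of_all_elements_located((By.CSS_SELECTOR, result_selector))
    )

    # Scroll to the bottom in case the page lazy-loads more items.
    driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")

    return collect_texts(driver.find_elements(By.CSS_SELECTOR, result_selector))
```

Explicit waits are used instead of fixed sleeps so the script proceeds as soon as the page is ready and fails with a timeout when it is not.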

Scraping with Selenium and Crawlbase

Selenium is a free, open-source browser automation tool. It is mainly used for testing but can also be used for web scraping.

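Install Selenium using pip:

    pip install selenium

Install Selenium using conda:

    conda install -c conda-forge selenium

Create the Chrome driver. ChromeDriverManager comes from the separate webdriver-manager package, and Selenium 4 expects the driver path to be wrapped in a Service object:

    # Requires: from selenium.webdriver.chrome.service import Service
    driver = webdriver.Chrome(service=Service(ChromeDriverManager().install()))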

The complete Selenium documentation is available on the project's website. It is largely self-explanatory, so read it to learn how to use Selenium with Python.
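Recent Selenium releases (4.6 and later) bundle Selenium Manager, which locates or downloads a matching driver automatically, so a driver can also be created without webdriver-manager. A minimal sketch, assuming Chrome is installed; `build_chrome_args` and `make_chrome_driver` are illustrative names, not part of Selenium:

```python
def build_chrome_args(headless=True, window_size=(1280, 800)):
    """Return the command-line switches to pass to Chrome."""
    width, height = window_size
    args = [f"--window-size={width},{height}"]
    if headless:
        # Chrome's newer headless mode (Chrome 109+); no visible window.
        args.append("--headless=new")
    return args


def make_chrome_driver(headless=True):
    """Create a Chrome WebDriver, letting Selenium Manager find the driver."""
    # Imported here so this module loads even where Selenium is not installed.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    for arg in build_chrome_args(headless=headless):
        options.add_argument(arg)
    return webdriver.Chrome(options=options)


# Usage (requires Chrome and `pip install selenium`):
#   driver = make_chrome_driver()
#   driver.get("https://example.com")
#   print(driver.title)
#   driver.quit()
```

Headless mode suits servers and scheduled jobs; running with `headless=False` is handy while debugging because you can watch what the browser does.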

Web Scraping using Selenium Python

Import libraries:

    import os

Install Driver:

    # Install Driver

API call:

    curl 'https://api.crawlbase.com/scraper?token=TOKEN&url=https%3A%2F%2Fwww.amazon.com%2Fdp%2FB00JITDVD2'
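The same request can be made from Python. The sketch below rebuilds the URL from the curl command using only the standard library; `build_scraper_url` and `call_scraper` are hypothetical helper names, and `TOKEN` is a placeholder for your own token.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Endpoint taken from the curl command above.
API_ENDPOINT = "https://api.crawlbase.com/scraper"


def build_scraper_url(token, target_url):
    """Return the API URL with the token and the percent-encoded target."""
    query = urlencode({"token": token, "url": target_url})
    return f"{API_ENDPOINT}?{query}"


def call_scraper(token, target_url, timeout=90):
    """Send the GET request and return (status_code, raw_body)."""
    with urlopen(build_scraper_url(token, target_url), timeout=timeout) as resp:
        return resp.status, resp.read()


print(build_scraper_url("TOKEN", "https://www.amazon.com/dp/B00JITDVD2"))
```

Letting `urlencode` handle the escaping avoids hand-encoding characters such as `:` and `/` in the target URL, which is an easy place to introduce mistakes.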

Applications of Web Scraping with Selenium and Python

- Sentiment Analysis: When you’re starting sentiment analysis, platforms like social media usually provide APIs to access data. However, sometimes you need more. Selenium Python web scraping can fetch real-time data on conversations, trends, and research, offering a deeper insight into sentiment analysis.

- Market Research: For eCommerce businesses, keeping an eye on product trends and pricing across various platforms is very important. Web scraping with Selenium and Python enables efficient monitoring of competitors and pricing strategies, providing valuable insight into consumer sentiment and market dynamics.

- Technological Research: Innovative technologies like driverless cars and facial recognition heavily rely on data. Web scraping extracts important data from trustworthy websites, serving as a convenient and widely used method for gathering data needed for technological advancements.

- Machine Learning: Machine learning algorithms need extensive datasets for training. Web scraping with Selenium and Python helps in gathering vast amounts of accurate and reliable data, fueling research, technological innovation, and overall growth across various fields. Whether it’s sentiment analysis or other machine learning algorithms, web scraping ensures access to the necessary data with precision and dependability.

Conclusion

Our scraping tool is now complete and ready to use, with just a few lines of code for web scraping with Python and Selenium. Of course, you may apply what you’ve learned here in any way you choose, and it will offer you a lot of material that has already been processed. Using the Scraping API, you won’t have to worry about website restrictions or CAPTCHAs, allowing you to focus on what matters most to your project or business.

Web scraping with Python and Selenium can be used in several different ways and on a much grander scale. Try it with other applications and features if you want to. Perhaps you’d like to search and collect Google photos, keep track of product pricing on retail sites for daily changes, or even provide data extraction solutions to your company.