Web crawling (or data crawling) is used to copy data and refers to gathering information from the internet or, in data crawling cases, from any report, document, and so forth. It is usually done in large quantities, although small workloads are possible too, and it is therefore typically carried out by a crawler agent. Data crawling services are an important part of any SEO strategy: they help us discover information that isn’t readily visible in the public domain, and we can use this information for the benefit of the customer or business. Data crawling means dealing with large data sets, where you develop your own crawlers (or bots) that crawl down to the deepest pages of a site. Data scraping, by contrast, refers to retrieving data from any source (not necessarily the web).

Tips for Data Crawling Services:

There are a few main approaches that will help you reach your data crawling goal. The first is to use the built-in APIs of the websites whose data you want to crawl. For example, many large sites such as Facebook, Twitter, Instagram, and Stack Overflow provide APIs that let clients access their data. Where such an official API exists, you can use it to get structured data.

If instead you want to crawl data from web pages, websites, or any other online source, you can do so with one of the APIs built for data crawling. For example, Crawlbase’s data crawler API provides data crawling services and has everything you need to crawl data. Because many data crawling APIs are available, the tips below will help you choose the service that meets the specific needs of your business or development work:

1. Synchronous Vs Asynchronous Web Crawlers

Synchronous web crawling means we crawl just one website at a time and start scraping the next one only when the first has finished processing. The thing to remember here is that the biggest time sink is the network request. Most of the time is spent waiting for the web server to respond to our request and return the content of a web page. During this idle time, the machine is effectively doing no work. There are many better things it could be doing in the meantime, and two of them are sending additional requests and processing data it has already received.

There are plenty of libraries that make sending asynchronous requests simple and quick, for instance Python’s grequests and aiohttp, as well as the concurrent package or requests combined with threading from the standard Python library. Scrapy’s default behavior is to schedule and process requests asynchronously, so if you need to start and scale your project quickly, this framework may be a great tool for the job.
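As a rough illustration of the difference, the sketch below compares the two approaches using only the standard library's `concurrent.futures`. The `fake_fetch` function is a hypothetical stand-in that merely sleeps to imitate network latency; in a real crawler you would pass in a function that performs the HTTP request.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def crawl_sequentially(urls, fetch):
    """Fetch each URL one after another (synchronous crawling)."""
    return [fetch(url) for url in urls]


def crawl_concurrently(urls, fetch, max_workers=5):
    """Fetch URLs in worker threads so that the network waits overlap."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in the same order as the input URLs.
        return list(pool.map(fetch, urls))


def fake_fetch(url, delay=0.2):
    """Hypothetical stand-in for a network request: waits, then returns a page."""
    time.sleep(delay)
    return f"<html>content of {url}</html>"


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(5)]
    for crawl in (crawl_sequentially, crawl_concurrently):
        start = time.perf_counter()
        crawl(urls, fake_fetch)
        print(f"{crawl.__name__}: {time.perf_counter() - start:.2f}s")
```

With five simulated pages, the sequential version has to sit through every wait in turn, while the threaded version lets the waits overlap, which is the effect described above.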

2. Web Crawling While Rotating User-agents

We can rotate user agents either by changing the headers manually or by writing a function that refreshes the user-agent list each time we start the web crawling script. You can implement this in the same way as a function that retrieves IP addresses for rotation (see the IP address rotation tip below). Many websites publish lists of user-agent strings you can draw from.

Try to keep your user-agent strings up to date, either manually or by automating the process. Because new browser releases are becoming more frequent, it is easier than ever for servers to spot an outdated user-agent string and block requests that carry it.
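A minimal sketch of such a rotation function might look like this. The strings in `USER_AGENTS` are illustrative examples only and would need to be refreshed regularly; the function name `random_headers` is an assumption made for this example.

```python
import random

# Illustrative pool only; real crawlers should refresh these strings regularly.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def random_headers(user_agents=USER_AGENTS):
    """Return request headers carrying a randomly chosen user-agent string."""
    return {"User-Agent": random.choice(user_agents)}


if __name__ == "__main__":
    for _ in range(3):
        print(random_headers())
```

The returned dictionary can be passed as the headers of each outgoing request, so that consecutive requests do not all present the same user agent.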

3. Don’t Flood The Website With Many Parallel Requests, Take It Slow

Large sites have algorithms to detect web scraping. A large number of parallel requests from the same IP address can look like a Denial-of-Service attack on the site and get your IPs blacklisted right away. The safest idea is to space your requests out one after another, so that they resemble human behavior. Of course, crawling like that would take ages. So, balance your requests against the site's average response time, and experiment with the number of parallel requests to find the right number.
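One way to balance requests against the site's response time is sketched below. The class name `AdaptiveThrottle`, the smoothing weights, and the default delays are all assumptions chosen for illustration, not values recommended by any particular service.

```python
import time


class AdaptiveThrottle:
    """Pause between requests in proportion to how slowly the site responds."""

    def __init__(self, factor=2.0, min_delay=0.5, max_delay=30.0):
        self.factor = factor          # delay = factor * average response time
        self.min_delay = min_delay    # never wait less than this (seconds)
        self.max_delay = max_delay    # never wait more than this (seconds)
        self.avg_response = None      # running average of response times

    def record(self, response_time):
        """Update the running average with the latest response time."""
        if self.avg_response is None:
            self.avg_response = response_time
        else:
            # Exponential moving average: recent responses count for 20%.
            self.avg_response = 0.8 * self.avg_response + 0.2 * response_time

    def delay(self):
        """Return how long to wait before sending the next request."""
        if self.avg_response is None:
            return self.min_delay
        wanted = self.factor * self.avg_response
        return min(self.max_delay, max(self.min_delay, wanted))

    def wait(self, sleep=time.sleep):
        """Sleep for the current delay; `sleep` can be swapped out in tests."""
        sleep(self.delay())
```

In a crawl loop, you would time each request, pass the elapsed seconds to `record()`, and call `wait()` before the next request. A slow site then automatically gets more breathing room, and the number of parallel workers can be tuned separately.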

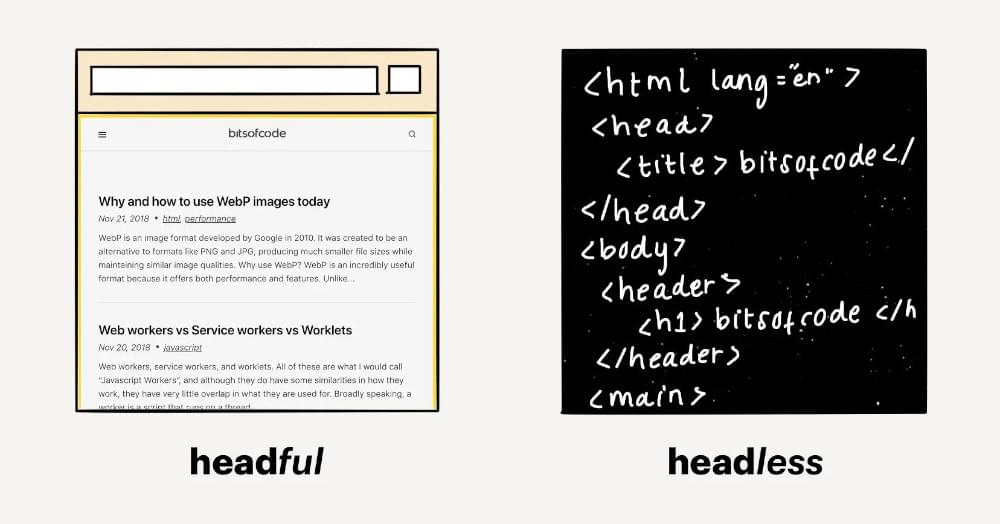

4. Use Headless Browsers

When it comes to data crawling, using headless browsers is a valuable tip. A headless browser is a web browser without a user interface, which means it can operate in the background and interact with websites just like a regular browser. This technique is particularly useful for automating web scraping tasks or performing tasks that require JavaScript rendering.

Popular tools for driving headless browsers include Puppeteer (for Node.js), Selenium WebDriver, and Playwright, among others. These tools provide APIs for automating browser actions, interacting with web pages, and extracting the desired data.

However, it’s important to note that while headless browsers offer significant advantages for data crawling, it’s crucial to adhere to ethical and legal considerations. Always make sure to respect website terms of service, robots.txt guidelines, and any applicable laws and regulations related to web scraping and data usage.

5. Crawl During Off-Peak Hours

This refers to the practice of scheduling your data crawling or web scraping activities during periods when website traffic is relatively low. Doing so can help optimize your crawling process and reduce potential disruptions or limitations imposed by high user activity on the target website.

Here’s why crawling during off-peak hours is beneficial:

- Reduced Server Load: Popular websites often experience heavy traffic during peak hours, which can strain their servers and result in slower response times. By crawling during off-peak hours when fewer users are accessing the website, you can avoid adding additional load to the server. This can lead to faster and more reliable crawling, as the website’s resources are more readily available for your data retrieval.

- Increased Crawling Speed: During off-peak hours, the website’s response times tend to be faster due to lower user activity. This means your crawler can retrieve data more quickly, resulting in a faster overall crawling process. This is especially advantageous when dealing with large datasets or time-sensitive scraping tasks.

- Reduced IP Blocking or Rate Limiting: Websites may implement security measures to protect against aggressive or abusive scraping activities. These measures can include IP blocking or rate limiting, where requests from a particular IP address or user agent are restricted after surpassing a certain threshold. By crawling during off-peak hours, you decrease the likelihood of triggering such security measures since there are fewer users and requests on the website. This reduces the risk of encountering IP blocks or being subjected to restrictive rate limits.

- Improved Data Consistency: Websites that rely on user-generated content, such as forums or social media platforms, may have a higher volume of updates or changes during peak hours when user activity is at its highest. Crawling during off-peak hours allows you to capture data in a more consistent and stable state since there are fewer ongoing updates or modifications. This can be particularly important when you require accurate and up-to-date information from the website.

- Enhanced User Experience: If your data crawling activity puts a significant strain on a website’s resources during peak hours, it can negatively impact the experience of regular users trying to access the site. Crawling during off-peak hours demonstrates consideration for the website’s users by minimizing disruptions and ensuring that they can access the website smoothly.

It’s worth noting that the definition of “off-peak hours” may vary depending on the website and its target audience. It’s a good practice to monitor website traffic patterns and identify periods of reduced activity for optimal crawling times. Additionally, be mindful of any website-specific guidelines or limitations related to crawling, as outlined in their terms of service or robots.txt file.

By timing your crawling activities strategically, you can maximize efficiency, minimize disruptions, and ensure a smoother data retrieval process.
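As a small sketch of how a crawler could gate its work on the time of day, the helper below checks whether a timestamp falls inside an off-peak window. The 01:00 to 05:00 default is purely an assumption; the real window depends on the target site's traffic pattern and time zone.

```python
import datetime as dt


def is_off_peak(now, start=dt.time(1, 0), end=dt.time(5, 0)):
    """Return True if `now` falls inside the off-peak window [start, end)."""
    current = now.time()
    if start <= end:
        return start <= current < end
    # The window wraps past midnight, e.g. 23:00 to 04:00.
    return current >= start or current < end


if __name__ == "__main__":
    if is_off_peak(dt.datetime.now()):
        print("Off-peak: start crawling")
    else:
        print("Peak hours: wait before crawling")
```

Note that `now` should be expressed in the time zone of the target site's audience, not necessarily the time zone of the machine running the crawler.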

6. Don’t Violate Copyright

Legal compliance is of utmost importance in data crawling and web scraping if you want to avoid copyright infringement. Copyright laws exist to protect the rights of content creators and regulate the use and distribution of their intellectual property. As a data crawler, it is crucial to respect these rights and ensure that you do not infringe upon the copyrights of others.

When crawling websites, it is important to be mindful of the content you are accessing and extracting. Copying or redistributing copyrighted materials without permission can lead to legal consequences. Therefore, it is recommended to focus on publicly available and non-copyrighted content or obtain proper authorization from the content owners before crawling or scraping their data.

It is also essential to be aware of website terms of service, usage policies, and robots.txt guidelines. These documents may specify the permissions and restrictions regarding crawling activities. Adhering to these guidelines demonstrates ethical behavior and helps maintain a positive relationship with website owners and administrators.
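Checking robots.txt can be automated with Python's standard `urllib.robotparser` module. The sketch below parses a made-up robots.txt (the `SAMPLE_ROBOTS_TXT` content and the `my-crawler` agent name are hypothetical) and asks whether given URLs may be fetched.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used here instead of a live download.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt):
    """Parse robots.txt text and return a ready-to-query parser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    parser = build_parser(SAMPLE_ROBOTS_TXT)
    for url in ("https://example.com/blog/post.html",
                "https://example.com/private/data.html"):
        allowed = parser.can_fetch("my-crawler", url)
        print(url, "->", "allowed" if allowed else "disallowed")
    print("Requested crawl delay:", parser.crawl_delay("my-crawler"))
```

Against a live site, you would instead call `set_url()` with the site's robots.txt address followed by `read()`. Keep in mind that robots.txt covers crawling permissions only; it does not settle copyright or terms-of-service questions.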

By respecting copyright laws and obtaining the necessary permissions, you can ensure that your data crawling activities are conducted in an ethical and legal manner. This not only protects the rights of content creators but also safeguards your own reputation and credibility as a responsible data crawler.

7. Using Custom Headers For A Web Crawler

When thinking about how to crawl the web, we often don’t consider what data we send to the server when making requests. Depending on the tool we use to crawl, leaving the ‘headers’ field unmodified makes it fairly easy for a server to identify us as a bot.

Whenever you access a web page, two things happen. First, a Request object is built, and second, a Response object is created once the server responds to the initial Request.
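To make the Request side concrete, here is a minimal sketch that builds a request with custom headers using the standard library's `urllib.request.Request`, without sending it. The header values and the `build_request` helper are illustrative assumptions. Left unmodified, tools announce themselves: `urllib`, for example, sends a user agent of the form `Python-urllib/3.x`.

```python
from urllib.request import Request


def build_request(url, user_agent, extra_headers=None):
    """Build (but do not send) a request carrying browser-like headers."""
    headers = {
        "User-Agent": user_agent,
        "Accept": "text/html,application/xhtml+xml;q=0.9,*/*;q=0.8",
        "Accept-Language": "en-US,en;q=0.9",
    }
    headers.update(extra_headers or {})
    return Request(url, headers=headers)


if __name__ == "__main__":
    request = build_request(
        "https://example.com/",
        "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
    )
    # Inspect exactly what would be sent to the server.
    for name, value in request.header_items():
        print(f"{name}: {value}")
```

Passing the resulting object to `urllib.request.urlopen()` would send it; the Response object mentioned above is what that call returns.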

8. Easy Integration

An ideal data crawling service lets you integrate its web crawler API into your app seamlessly, so that you can crawl any online web page. Adding the crawling service to the app should not involve any complicated procedure. Many data crawling services offer easy, hands-on integration so that users have no trouble using them.

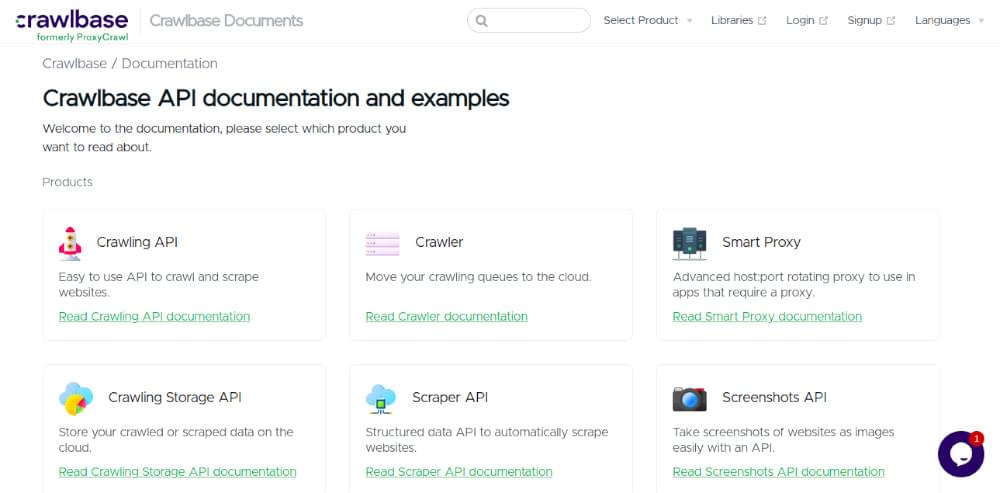

9. Follow The Official Documentation

The official documentation is the basis for integrating a web crawling service into your app. Before you commit to a data crawling service, check that the provider offers comprehensive documentation with easy-to-follow guidelines. Simplicity is key, so the more detailed yet approachable the documentation is, the stronger the case for using that service. Good documentation will help you whenever you get stuck.

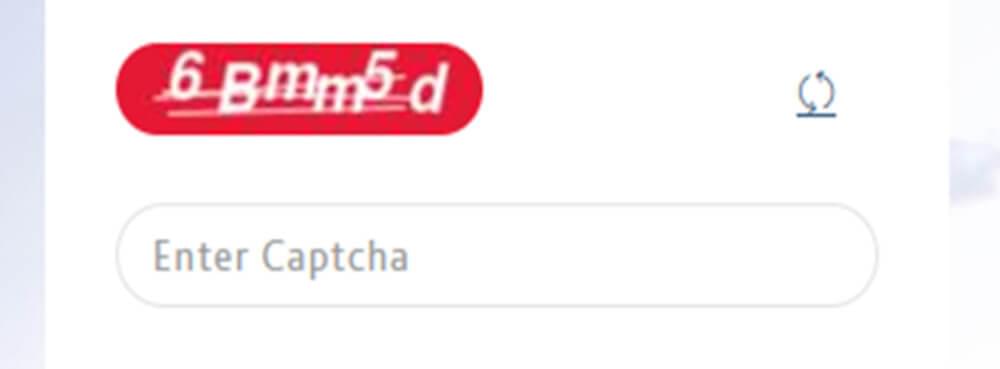

10. Solving CAPTCHAs

An effective way of blocking basic scripts is to track the user’s behavior and store it in cookies. If the behavior looks suspicious, a Completely Automated Public Turing test to tell Computers and Humans Apart (CAPTCHA) is presented for the user to solve. This way, the site does not block a possibly genuine user, while most web crawlers are stopped, since the test rests on the assumption that only a human can complete the CAPTCHA.

If you need to crawl data from sites that test their users with CAPTCHAs, put a solving script in place from the start. Data crawling succeeds only when website CAPTCHAs are handled smartly. The best data crawling service providers constantly tweak their approach and keep alternative ways of handling CAPTCHAs, using algorithms built on the latest technologies to solve the challenge right away. These scripts solve CAPTCHAs for you and help you avoid blocks, because an ideal data crawling service cares above all about its users’ crawling success.

11. Crawling Of All Kinds Of Web Pages

Data crawling APIs help you crawl using real web browsers. They handle regular web pages as well as dynamic JavaScript pages. If a page was built with React, Angular, Vue, Ember, Meteor, etc., they crawl the data from that page and return the resulting HTML, so that you can easily use it for further scraping or any other relevant purpose.

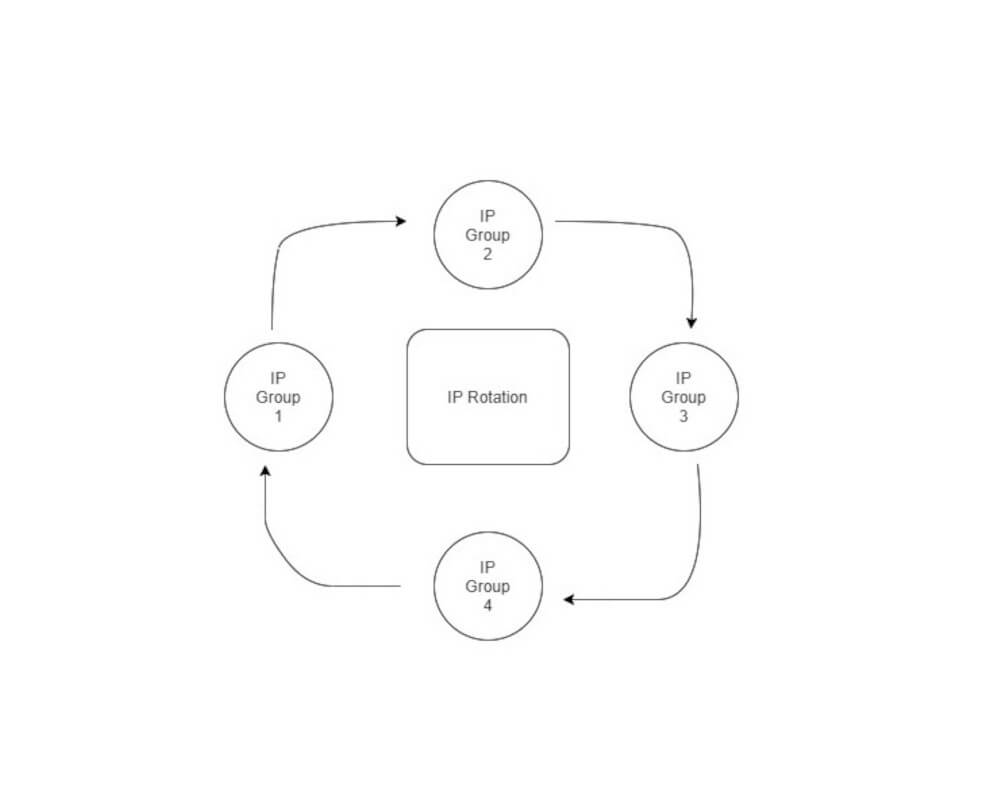

12. IP Address Rotation

A fairly straightforward way of implementing IP rotation is to use ready-made Scrapy middleware. Scrapy is a Python framework developed specifically for web data crawling and scraping. A useful Scrapy tool for rotating IP addresses is the scrapy-proxies middleware.

Another way of rotating IP addresses is to use a proxy service. Depending on the plan or proxy gateways you purchase, or on the data crawling service you use, you’ll get a set number of IPs in a location of your choice. We then send all our data crawling requests through these. Use elite proxies if you can, as they send the most user-like headers to the server you’re trying to reach.
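Outside Scrapy, a simple round-robin rotation can be sketched with `itertools.cycle`. The addresses in `PROXIES` are placeholders from a documentation-only IP range, and `proxy_rotation` is a hypothetical helper; you would substitute the endpoints your proxy provider gives you.

```python
from itertools import cycle

# Placeholder addresses (documentation-only range); replace with real proxies.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def proxy_rotation(proxies):
    """Yield a proxy mapping for each successive request, round-robin."""
    for proxy in cycle(proxies):
        # Scheme-to-proxy mapping, the shape accepted by e.g. requests' `proxies=`.
        yield {"http": proxy, "https": proxy}


if __name__ == "__main__":
    rotation = proxy_rotation(PROXIES)
    for page in range(5):
        print(f"page {page} would go through {next(rotation)['http']}")
```

Each call to `next(rotation)` hands back the mapping for the next proxy in the list, so consecutive requests leave from different IP addresses.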

13. Dealing With Cookies

A cookie is a mechanism by which the web server remembers the HTTP state of a user’s browsing session. Put simply, it tracks the user’s activity and remembers the language and other preferred settings the user chose on earlier visits. For instance, if you are shopping online and add items to your shopping cart, you expect them to still be there when you go to checkout. A session cookie is what enables the web page to do this.

In web data crawling, a typical use of cookies is keeping a logged-in state when you need to crawl data that is password-protected. If you’re wondering how to crawl a site that uses persistent cookies, one way of having parameters and cookies persist across requests is to use the Session object of Python’s requests module. A Session can also speed up crawling: when you access the same site repeatedly, it reuses the same Transmission Control Protocol (TCP) connection, so we simply reuse the existing HTTP connection and save time.
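The cookie-persistence half of this idea can also be sketched with the standard library alone, as below; `make_session_opener` and the `my-crawler/0.1` agent string are assumptions made for the example. With the requests module, a `requests.Session()` object plays the same role and additionally reuses connections, which this standard-library sketch does not do.

```python
from http.cookiejar import CookieJar
from urllib.request import HTTPCookieProcessor, build_opener


def make_session_opener(user_agent="my-crawler/0.1"):
    """Return an opener that stores cookies and sends them on later requests."""
    jar = CookieJar()
    opener = build_opener(HTTPCookieProcessor(jar))
    # Headers listed here are attached to every request made via this opener.
    opener.addheaders = [("User-Agent", user_agent)]
    return opener, jar


if __name__ == "__main__":
    opener, jar = make_session_opener()
    # A login request made with opener.open(...) would store the session
    # cookie in `jar`, and later opener.open(...) calls would send it back.
    print("cookies stored so far:", len(jar))
```

Because every request goes through the same opener, a cookie set by the login response is sent automatically with the requests that follow, which is what keeps the crawler logged in.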

Step-by-Step Process to use Web Crawling Service:

1) Go to the website of the data crawling service and click “Create a free account”.

2) The following form opens when the “Create a free account” button is clicked.

3) Fill in this form and register your account for free. After you submit the information for creating the account, the following screen appears, asking you to check your email.

4) Go to your email inbox, which will contain the “Confirm Account” email from the data crawling service provider, and click the “Confirm Account” button. If you don’t find the confirmation email in your inbox, check your spam folder.

5) After confirming your account, you will land on the login screen.

6) After entering the login credentials you chose during registration, you will land on the main dashboard.

7) From the services that the provider lists on the dashboard, click the one you need. In our case, it is “Crawling API”.

8) After you click “Crawling API” on your dashboard, you will be directed to the following page.

9) This page highlights the initial 1,000 crawling requests. Now, click the “Start crawling now” button. This will give you a document

Conclusion

As web data crawling rapidly gains popularity thanks to business intelligence and research tools, it is all the more important to do it properly. Whether you are crawling e-commerce websites or any other kind of site, there are a few things everyone should keep in mind while crawling data, as discussed in detail above.

Web crawling with the Crawlbase Crawling API can power businesses in areas such as social media monitoring, travel sites, lead generation, e-commerce, event listings, price comparison, finance, and reputation monitoring, and the list goes on.

Every business faces competition today, so organizations regularly scrape their competitors’ data to monitor developments. In the era of big data, the uses of web scraping are endless. Depending on your business, you can find many areas where web data can be of great use. Web scraping is thus a craft for making data gathering automated and fast.