Crawling the web is essential for public data gathering. This data is later used to improve business and marketing strategies. Getting blacklisted while scraping data is a common issue for those who don’t know how to crawl a website without getting blocked. We gathered a list of tips to stay hidden while crawling the web.

Websites detect web crawlers and scraping tools by checking their IP addresses, user agents, browser parameters, and general behavior. If a website finds your traffic suspicious, you start receiving CAPTCHAs. Eventually, once your crawler is detected, your requests get blocked.

Been there? Need some practical anonymous scraping techniques? You are at the right place! Let’s begin!

A Little About Web Crawling

Web crawling, in essence, revolves around the process of cataloging information found on the internet or within specific data repositories. This task is carried out by web crawlers, often referred to as “spiders,” which methodically navigate the World Wide Web to index the available information.

A prime illustration of web crawling in action is the operations conducted by popular search engines like Google, Yahoo, or Bing. These search engines systematically access websites one after another, carefully indexing all the content contained within these webpages.

From a business standpoint, any web-related attempts that prioritize information indexing rather than data extraction fall under the category of web crawling.

11 Secret Tips For Anonymous Web Crawling

Let’s dive deep into anonymous web crawling, finding the best techniques and privacy tips to ensure you stay hidden while crawling.

We have collected some really amazing crawler privacy tips for safeguarding your identity, respecting website policies, and conducting your crawls ethically and anonymously:

1. Make the crawling slower

Websites usually limit how many actions a single IP address can perform within a given period of time. When a human accesses a web page, the speed is understandably lower than what happens in web crawling. To stay hidden while crawling and mitigate the risk of being blocked, you should slow down your crawling speed. For instance, you can add random breaks between requests or initiate wait commands before performing a specific action.

Web scraping bots fetch data very fast, which makes them easy for a site to detect, since humans cannot browse that fast. The faster you crawl, the worse it is for everyone: if a website gets more requests than it can handle, it might become unresponsive. Make your spider look real by mimicking human actions. Put some random programmatic sleep calls in between requests, add some delays after crawling a small number of pages, and choose the lowest number of concurrent requests possible. Ideally, put a delay of 10-20 seconds between clicks and avoid putting much load on the website; treat the website nicely.
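As a rough illustration of the random sleep calls described above, here is a minimal Python sketch. The names `polite_pause` and `crawl` are hypothetical helpers made up for this example, and `fetch` stands for whatever function you already use to download a page. The 10-20 second default simply mirrors the delay suggested in this tip.

```python
import random
import time


def polite_pause(min_seconds=10.0, max_seconds=20.0, sleep=time.sleep):
    """Sleep for a random duration between min_seconds and max_seconds.

    The sleep function is injectable so the helper can be tested
    without actually waiting. Returns the chosen delay.
    """
    delay = random.uniform(min_seconds, max_seconds)
    sleep(delay)
    return delay


def crawl(urls, fetch, pause=polite_pause):
    """Fetch URLs one at a time (no concurrency), pausing between requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            pause()  # wait before every request except the first one
        results.append(fetch(url))
    return results
```

Because the pause is random rather than fixed, the gaps between requests do not form an obvious machine-like rhythm.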

2. Avoid image scraping

Images are data-heavy objects that are often protected by copyright. Not only do they take additional bandwidth and storage space, but there’s also a higher risk of infringing on someone else’s rights.

Plus, images are often hidden in JavaScript elements (for example, behind lazy loading), which significantly increases the complexity of the data acquisition process and slows down the web scraper itself. So, it is best to avoid JavaScript as well.

3. Use a Crawling API

The Crawlbase Crawling API offers peace of mind by automating most things that cause headaches. You may either do everything on your own or use the API to do as much crawling as you need.

Undoubtedly, it’s one of the best crawler privacy tips. Our Crawling API protects your web crawler against blocked requests, proxy failure, IP leak, browser crashes, and CAPTCHAs.

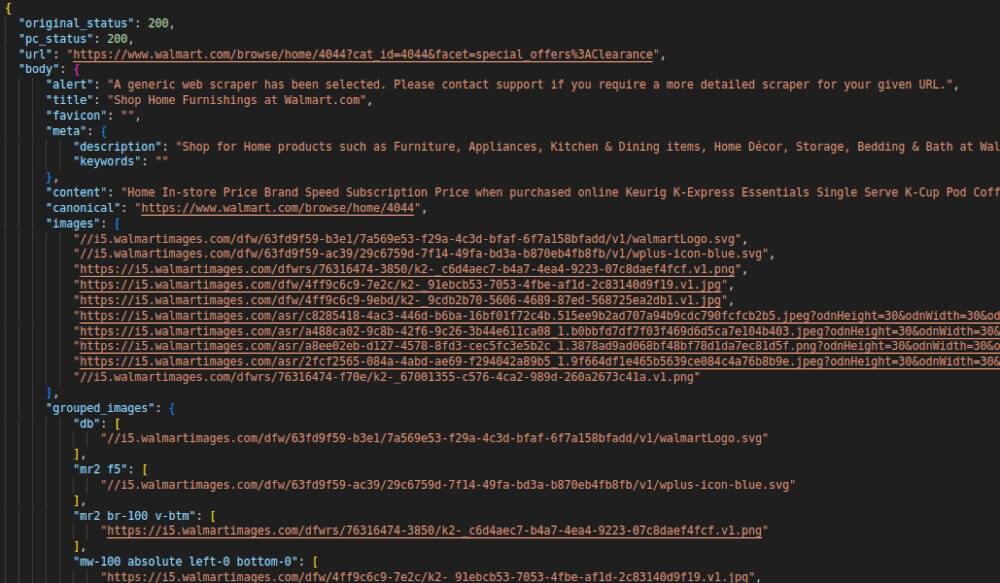

Now, we will see how you can use Python 3 to scrape Walmart. To get started with running the script, we first need to gather some information like:

- The Crawling API URL: https://api.crawlbase.com

- Your Crawlbase token

- Walmart URL (Clearance Sale in our case)

We then need to import some relevant modules.

    from urllib.request import urlopen
    from urllib.parse import quote_plus

Now that we have imported the modules and gathered the relevant information, we need to pass the URL we want to scrape to the Crawling API, together with the required parameters such as the token.

    url = quote_plus('https://www.walmart.com/browse/home/4044?cat_id=4044&facet=special_offers%3AClearance')

The full code will look something like this where we will format the end result to look pretty.

    from urllib.request import urlopen
    from urllib.parse import quote_plus
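To show how these pieces might fit together, here is a minimal sketch rather than the exact script. It assumes the API accepts the token and the percent-encoded target URL as the `token` and `url` query-string parameters, and that the response body is the page HTML. `YOUR_TOKEN` is a placeholder, and `build_api_url` and `fetch_page` are hypothetical helper names made up for this example.

```python
from urllib.parse import quote_plus
from urllib.request import urlopen

API_ENDPOINT = "https://api.crawlbase.com/"
# Placeholder: replace with your own Crawlbase token.
TOKEN = "YOUR_TOKEN"
WALMART_URL = (
    "https://www.walmart.com/browse/home/4044"
    "?cat_id=4044&facet=special_offers%3AClearance"
)


def build_api_url(token, target_url):
    """Build the request URL (assumed format: ?token=...&url=<encoded target>)."""
    return f"{API_ENDPOINT}?token={quote_plus(token)}&url={quote_plus(target_url)}"


def fetch_page(token, target_url, timeout=90):
    """Send the request and return the response body as text."""
    with urlopen(build_api_url(token, target_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


# Example call (performs a real network request, so it is left commented out):
# print(fetch_page(TOKEN, WALMART_URL)[:500])
```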

The final output will be displayed in the output panel when you press the Control + F10 key (on Windows).

We also offer a Python library that you may use instead.

4. Respect The Robots.txt File

First of all, you have to understand what a robots.txt file is and how it works. The robots.txt file sits in the root of the website. It sets the rules for crawling: which parts of the website should not be scraped and how frequently the site can be scraped. Some websites do not allow anyone to scrape them. Basically, it tells search engine crawlers which pages or files they can or can’t request from the site. A bot that is programmed to respect robots.txt follows these rules by default when it retrieves data. The file is used mainly to avoid overloading a website with requests.

For example, this robots.txt instructs all search engine robots not to crawl any of the website’s content. This is done by disallowing the root / of the website.

    User-agent: *
    Disallow: /

In some cases, you will find a robots.txt file that allows only Bingbot to scrape data, while all other bots are banned from extracting anything. As this is decided by the website owner, there is nothing you can do to get around this rule.
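To check these rules in code, Python’s standard library ships `urllib.robotparser`. The sketch below parses a made-up robots.txt that lets only Bingbot in and asks whether a given crawler may fetch a page. The `Crawl-delay` line, the `MyCrawler` user agent, and the example.com URL are assumptions added purely for illustration.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules: Bingbot may crawl everything, everyone else nothing.
ROBOTS_TXT = """\
User-agent: Bingbot
Disallow:

User-agent: *
Crawl-delay: 10
Disallow: /
"""


def load_rules(robots_txt):
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)
page = "https://www.example.com/products"

bingbot_allowed = rules.can_fetch("Bingbot", page)       # allowed by its own group
my_crawler_allowed = rules.can_fetch("MyCrawler", page)  # falls under "*", so banned
my_crawler_delay = rules.crawl_delay("MyCrawler")        # Crawl-delay from the "*" group
```

For a live site, you could instead call `set_url()` with the address of the site’s robots.txt followed by `read()`, and then use `can_fetch()` in the same way before each request.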

You should also note that when site owners cannot find another way to stop crawling on their sites, they simply put CAPTCHAs and/or text message validation in place for all the links, which will surely bother any human or bot accessing them. However, if your reason for crawling is legitimate, you can still proceed.

5. Beware of Honeypot Traps

Honeypots are links planted in a page’s HTML code. These links are invisible to normal users, but web scrapers can detect them. They are used to identify and block web crawlers, because only robots would follow such a link. More generally, honeypots are systems set up to lure hackers and detect any hacking attempts that try to gain information.

Some honeypot links meant to detect spiders have the CSS style “display: none” or are colored to blend in with the page’s background. Setting up this kind of detection properly is not easy and requires a significant amount of programming work, which is why the technique is not commonly used.
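One way a crawler might steer clear of such links is to skip anchors that are hidden with inline styles. The following is a simplified sketch using Python’s built-in `html.parser`. The class name `VisibleLinkCollector` is hypothetical, and the check only looks at inline `style` and `hidden` attributes, so links hidden through external stylesheets or background-matching colors would not be caught.

```python
from html.parser import HTMLParser


class VisibleLinkCollector(HTMLParser):
    """Collect hrefs, separating links that are hidden via inline attributes."""

    def __init__(self):
        super().__init__()
        self.visible_links = []
        self.suspect_links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if not href:
            return
        # Normalize the inline style so "display: none" and "display:none" match.
        style = (attr_map.get("style") or "").replace(" ", "").lower()
        hidden = (
            "display:none" in style
            or "visibility:hidden" in style
            or "hidden" in attr_map
        )
        (self.suspect_links if hidden else self.visible_links).append(href)


sample_html = """
<a href="/products">Products</a>
<a href="/trap" style="display: none">secret</a>
<a href="/trap2" hidden>secret</a>
"""

collector = VisibleLinkCollector()
collector.feed(sample_html)
```

After feeding the sample page, only `/products` ends up in `collector.visible_links`, and the crawler would follow that list alone.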

6. Avoid Session URL Trap

Sessions store visitor data for the current visit only, and most frameworks use them. Each session usually gets a unique id (12345zxcv in the example “https://www.example.com/?session=12345zxcv”). Session data is normally stored in cookies. A session id appears in the URL when the session data cannot be stored in a cookie, for instance because of a server misconfiguration.

Each visit from a crawler constitutes a ‘new visit’ and gets a new session id. The same URL crawled twice will get 2 different session ids and 2 different URLs. Each time a crawler crawls a page, all the links with the new session id will look like new pages resulting in an explosion of URLs ready to crawl.

To check for the session URL trap, visit the website with cookies disabled and click a few links. If a session id appears in the URL, the site is vulnerable to the session URL trap. The MarketingTracer on-page SEO crawler is built to detect this crawl trap. Just check your crawl index and filter on ‘session.’
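On the crawler side, one possible safeguard is to normalize URLs before queueing them, so that two links differing only in their session id count as the same page. This is a minimal sketch: the parameter names in `SESSION_PARAMS` are assumptions (only `session` comes from the example above), and `strip_session_params` is a hypothetical helper.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed names of session parameters; adjust this set for the target site.
SESSION_PARAMS = {"session", "sessionid", "sid", "phpsessid", "jsessionid"}


def strip_session_params(url):
    """Return the URL with session-like query parameters removed."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in SESSION_PARAMS
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment)
    )


seen = set()
for link in (
    "https://www.example.com/?session=12345zxcv",
    "https://www.example.com/?session=67890qwer",
):
    seen.add(strip_session_params(link))
```

Both links collapse to `https://www.example.com/`, so the crawl queue holds one entry instead of two.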

7. Check If The Website Changes Layouts

Anonymous web crawling can be tricky on websites that know scrapers well, because they may use several layouts. For instance, some sets of pages may have a different layout than the rest. To handle this, you can prepare different XPaths or CSS selectors to scrape the data, or add a condition in your code to scrape those pages differently.
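A simple way to cope with several layouts is to try a list of selectors in order and use the first one that matches. The sketch below does this with the limited XPath support in Python’s standard `xml.etree.ElementTree`. The selectors and sample markup are invented for illustration, and because real pages are often not well-formed XML, you would likely use a dedicated HTML parser in practice.

```python
import xml.etree.ElementTree as ET

# Hypothetical selectors, one per known layout, tried in order.
PRICE_XPATHS = [
    ".//span[@class='price']",
    ".//div[@id='product-price']",
]


def extract_first(markup, xpaths):
    """Return the text of the first element matched by any of the XPaths."""
    root = ET.fromstring(markup)
    for xpath in xpaths:
        node = root.find(xpath)
        if node is not None and node.text:
            return node.text.strip()
    return None  # no known layout matched


layout_a = "<html><body><span class='price'>$19.99</span></body></html>"
layout_b = "<html><body><div id='product-price'> $24.50 </div></body></html>"

price_a = extract_first(layout_a, PRICE_XPATHS)
price_b = extract_first(layout_b, PRICE_XPATHS)
```

When `extract_first` returns `None`, that is a useful signal that the site has introduced a layout you have not seen yet.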

8. How do websites detect and block web scraping?

Websites use different techniques to differentiate a web spider/scraper from a real person. Some of these mechanisms are specified below:

- Unusually High Download Rate/Traffic: particularly from a single client or IP address within a brief period.

- Repetitive Tasks Completed In The Same Browsing Pattern: based on the assumption that a human user will not perform the same repetitive tasks every time.

- Checking If Your Browser Is Real: a simple check is to try to execute JavaScript. More intelligent tools can go the extra mile by checking your GPUs and CPUs to make sure you are using a real browser.

- Detection With Honeypots: honeypots are usually links that are invisible to a normal user but visible to a spider. When a web spider/scraper tries to access the link, the alarms are tripped.

You may have to spend some time upfront investigating the website’s scraping risks to avoid triggering any anti-scrape mechanism and build a spider bot accordingly.

9. Use Captcha Solving APIs

As you begin to scrape websites on a large scale, you will eventually get blocked. You will start to see CAPTCHA pages before the regular web pages. Services like Anticaptcha or 2Captcha can help you get past these restrictions.

For anonymous web crawling of websites that use CAPTCHAs, it is better to rely on CAPTCHA-solving services, as they are comparatively cheaper than custom CAPTCHA solvers, which matters when performing large-scale scrapes.

10. Avoid scraping behind a login form

Private platforms like Facebook, Instagram, and LinkedIn sit behind login forms. To view a protected page, a scraper has to send login information or cookies along with every request, which makes it easier for the target website to see that the incoming requests come from the same source. This could expose your credentials or get your account blocked, which in turn blocks your web scraping efforts.

It’s recommended to avoid scraping websites with login forms so that you don’t get blocked easily and can stay hidden while crawling. If authentication is required to get your target data, one way to reduce the risk is to imitate human-like behavior with a real browser.

11. Change Your Crawling Behavior

One of the best anonymous scraping techniques to prevent a website from automatically blocking your crawler due to a repetitive crawling pattern is to introduce some diversity in your approach. If your crawler follows the same pattern consistently, it becomes easy for the website to identify it based on its predictable actions.

It is recommended to add some occasional changes into your crawler’s behavior. This could involve random clicks or scrolling actions to mimic the behavior of a regular user on the site. It’s also a good idea to take the time to explore the website’s structure by visiting it manually before starting an extensive scraping session. This way, you can better understand how to blend in seamlessly with the site’s natural flow.

How do you know if a website has banned or blocked you?

The following signs show that you have been blocked from scraping a certain website:

- Frequent HTTP 404, 301 or 50x error responses

- CAPTCHA pages

- Unusual delays in content delivery

Some of the common error responses to check for are:

- 503 - Service Unavailable

- 429 - Too Many Requests

- 408 - Request Timeout

- 403 - Requested Resource Forbidden

- 404 - Not Found

- 401 - Unauthorized Client

- 301 - Moved Permanently
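These signals can be turned into a small check that your crawler runs on every response. The following is only a sketch: `block_signal` and `block_ratio` are hypothetical helpers, the status codes are the ones listed above, and looking for the word “captcha” in the body is a crude heuristic that you would tune for the target site.

```python
# Status codes from the list above that may indicate blocking.
BLOCK_STATUS_CODES = {301, 401, 403, 404, 408, 429, 503}


def block_signal(status_code, body=""):
    """Return a short reason if the response looks like a block, else None."""
    if "captcha" in body.lower():
        return "captcha page"
    if status_code in BLOCK_STATUS_CODES:
        return f"HTTP {status_code}"
    return None


def block_ratio(responses):
    """Share of (status_code, body) pairs that look like block signals."""
    if not responses:
        return 0.0
    flagged = sum(1 for status, body in responses if block_signal(status, body))
    return flagged / len(responses)
```

A single 404 or 301 is normal on any website, so it is the ratio over many recent responses, not one response, that suggests you have been blocked. When the ratio climbs, slow down or stop the crawl.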

Is Website Crawling Against the Law?

Crawling or scraping a website is generally not illegal, as long as you’re not breaking the website’s Terms of Service (ToS). It’s important that the web scraper you use doesn’t try to log in or access areas of the site that are restricted, as this would violate the ToS of the website.

However, there’s a common misunderstanding that scraping all types of public data is always legal. Some content like images, videos, and articles can be considered creative works and are protected by copyright laws. You can’t freely use your web scraper to collect and use such copyrighted material.

In some cases, the ToS of certain websites explicitly state that scraping is not allowed, and in those situations, it can be considered illegal. So, it’s crucial to respect the rules set by each website to ensure you’re scraping data legally.

Conclusion

Hopefully, you have learned new anonymous scraping techniques by reading this article. I must remind you to keep respecting the robots.txt file. Also, try not to send a large number of requests to smaller websites. Alternatively, you could use the Crawlbase Scraper API, which handles all of that on its own, so you can save your precious time and focus on getting things done on time and in a scalable way.