One major issue when crawling and scraping thousands of web pages is getting blocked. If you send repeated requests to a single website, bot detection will likely kick in, and the next thing you know, your IP address has been banned.

If you are already familiar with web scraping, you probably know that the best way to avoid such roadblocks is to use proxies. The more quality proxies you can use, the better your chance of bypassing blocks. As web scraping has grown in popularity, more and more companies have started offering quality proxies for web crawlers. With so many choices out there, though, how do you pick the right tool? Some providers offer products that look like they serve the same purpose but were actually designed to specialize in something else.

Here at Crawlbase, we see this confusion firsthand: some of our clients are unsure which product is best for them. We wrote this article to give clear answers and help you decide which product to use for your project. We will focus on one of the most common questions we receive: “Should we use the Crawling API or the Smart backconnect proxy?”

What is the Smart backconnect proxy?

So, let us start with the textbook definition. Backconnect proxies, also known as rotating or reverse proxies, are servers that connect you to a collection of thousands or even millions of proxies. In essence, that collection is a pool of proxies managed by a single proxy network. Instead of manually sending your requests to different proxies, you send all of them to one proxy host, identified either by authentication or by the port you use, and the service rotates the IPs for you on the back end.
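
To make "rotates the IPs for you on the back end" concrete, here is a toy Python model of what a backconnect gateway does internally. It is a sketch under stated assumptions, not how Crawlbase or any other provider actually implements rotation: the class name `BackconnectGateway` is hypothetical, the pool uses reserved documentation addresses (203.0.113.0/24), and plain round-robin stands in for whatever selection strategy a real proxy network uses.

```python
from __future__ import annotations


class BackconnectGateway:
    """Toy model of a backconnect gateway (hypothetical, for illustration only).

    Clients always send requests to this single entry point; the gateway
    picks an exit IP from its pool for each request (round-robin here).
    """

    def __init__(self, pool: list[str]) -> None:
        self.pool = list(pool)  # copy so callers cannot mutate our pool
        self._counter = 0

    def next_exit_ip(self) -> str:
        if not self.pool:
            raise RuntimeError("proxy pool is empty")
        ip = self.pool[self._counter % len(self.pool)]
        self._counter += 1
        return ip

    def route(self, url: str) -> tuple[str, str]:
        """Return (exit IP, URL) to show which IP would carry the request."""
        return self.next_exit_ip(), url

    def retire(self, bad_ip: str, replacement: str | None = None) -> None:
        """Drop a blocked IP and optionally swap in a fresh one."""
        self.pool.remove(bad_ip)
        if replacement is not None:
            self.pool.append(replacement)


gateway = BackconnectGateway(["203.0.113.10", "203.0.113.11", "203.0.113.12"])
for path in ("a", "b", "c", "d"):
    print(gateway.route(f"https://example.com/{path}"))
```

The client only ever talks to `gateway`; the fourth request wraps around to the first exit IP, and `retire` shows how a bad IP can be swapped for a fresh one without the client changing anything.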

Different companies may offer one or two types of proxies with added functionality. For example, some only provide residential proxies, while others let you choose between residential and data center proxies, or even mix both in the same pool. The quality of the proxies and how the IPs are rotated are essential to ensuring that your requests do not get blocked and your IP does not get banned by the target website.

Features and options also vary between backconnect proxy providers. In Crawlbase’s case, username and password authentication is not needed. Instead, users connect with a proxy host and port, and identification is done by whitelisting your server’s IP address.
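
Because identification happens by whitelisting your server's IP, the client side needs nothing more than the proxy host and port. A minimal sketch using only Python's standard `urllib.request` might look like this; `backconnect.example.com` and port `8000` are placeholders, not Crawlbase's real values, and the helper names are hypothetical.

```python
import urllib.request

# Placeholder values (hypothetical): use the host and port from your account.
PROXY_HOST = "backconnect.example.com"
PROXY_PORT = 8000


def backconnect_proxy_url(host: str, port: int) -> str:
    # No "user:password@" part: the provider recognizes you by your
    # whitelisted source IP, not by credentials sent with each request.
    return f"http://{host}:{port}"


def build_backconnect_opener(host: str, port: int) -> urllib.request.OpenerDirector:
    proxy_url = backconnect_proxy_url(host, port)
    # Route plain HTTP and HTTPS (tunneled via CONNECT) through the same proxy.
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)
```

Calling `build_backconnect_opener(PROXY_HOST, PROXY_PORT).open(url)` would then send the request through the proxy host, which picks the exit IP; requests from a server whose IP is not whitelisted would be rejected by the provider.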

Below are some of the key features of Crawlbase’s Smart backconnect proxy:

- IP rotation: Integral to any backconnect proxy service, IP rotation keeps your requests on quality proxies, since a bad IP can easily be replaced with a fresh one. These proxy pools are especially effective against bot detection, CAPTCHAs, and blocked requests.

- Static IP: Locking in a static IP is required if you plan to maintain sessions between requests. This is especially useful when you submit form data, contact forms, trackbacks, or any other posting-related requests.

- Geolocalization: Used together with Static IP, this lets you send your requests through the static port of any specified country.

- Multiple threads: Threads represent the number of connections allowed to the proxy network at any given time. The more threads you have, the more requests you can send simultaneously.
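
Since the thread count caps how many connections you may hold open to the proxy network at once, a natural approach is to size your crawler's worker pool to match it. The sketch below assumes a hypothetical plan with 5 threads and takes the fetch function as a parameter (for example, the `open` method of a proxy-configured opener), so the concurrency logic can be shown without any network access. The name `crawl_all` is hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")

PLAN_THREADS = 5  # hypothetical thread allowance; use your plan's actual value


def crawl_all(urls: Iterable[str], fetch: Callable[[str], T], threads: int = PLAN_THREADS) -> List[T]:
    """Fetch every URL with at most `threads` calls to `fetch` in flight at once.

    max_workers matches the proxy plan's thread limit, so the crawler never
    opens more simultaneous connections than the plan allows.
    """
    with ThreadPoolExecutor(max_workers=threads) as pool:
        # map() returns results in input order, even though the
        # fetches themselves run concurrently.
        return list(pool.map(fetch, urls))
```

Keeping `threads` at or below the plan's limit means extra URLs simply wait in the executor's queue instead of being refused by the proxy network.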

What is the Crawling API?

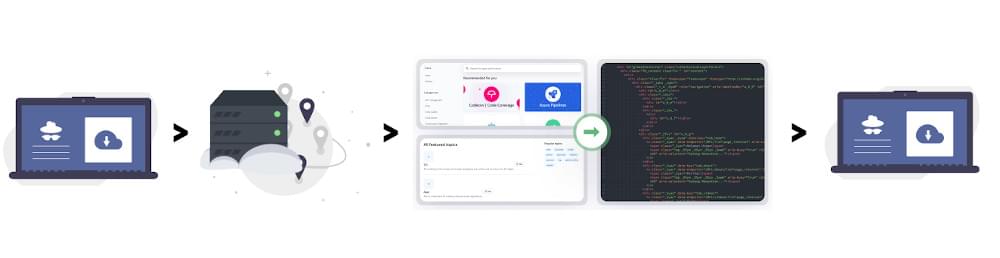

As the name implies, the Crawling API is a web crawler exposed through an application programming interface (API). A web crawler, or spider bot, is an internet bot that browses the web systematically. The Crawling API lets you easily crawl and scrape data from any target website. It is also built on top of thousands of residential and data center proxies to bypass blocks, bot detection, and CAPTCHAs. Unlike the Smart backconnect proxy, however, the Crawling API is built as an all-in-one solution for your scraping needs: it is essentially a web crawler whose rotating proxies are managed by artificial intelligence.
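
Because the Crawling API is an HTTP API rather than a proxy, a crawl is simply a request to the API endpoint with your token and the target URL in the query string. The Python sketch below assumes the endpoint `https://api.crawlbase.com/` and the `token` and `url` query parameters; treat both as assumptions to verify against the official Crawling API documentation. The token value and helper names are placeholders.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed endpoint; confirm against the official Crawling API documentation.
API_ENDPOINT = "https://api.crawlbase.com/"


def crawling_api_url(token: str, target_url: str) -> str:
    # urlencode percent-encodes the target URL so its own "?" and "&"
    # do not collide with the API's query string.
    return API_ENDPOINT + "?" + urlencode({"token": token, "url": target_url})


def crawl(token: str, target_url: str, timeout: float = 90) -> bytes:
    """Fetch the target page through the API (performs a real network call)."""
    with urlopen(crawling_api_url(token, target_url), timeout=timeout) as response:
        return response.read()
```

Note that the client has no proxy configuration at all: selecting and rotating proxies happens on the API side.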

Web crawlers like the Crawling API also typically offer more scalable features for web scraping than Smart backconnect proxies do. Below are the key features of the API:

- Data Scrapers: This feature lets you get the parsed content of any supported website instead of the full HTML source code. This is useful if you do not want to build your own scraper from scratch.

- Various parameters: The Crawling API has many optional parameters that greatly complement your scraping projects. For example, you can pass the country parameter if you want your request to come from a specific country, or specify a user_agent that the API servers will pass along to the requested URL. Those are just two examples; many more are available.

- Supports all HTTP request methods: All request types can be performed with the API. Send GET requests to crawl and scrape URLs, POST requests if you need to send form data, or even PUT requests if required.

- Headless browsers: The API can crawl and scrape content generated by JavaScript, so each request returns accurate results even when the page is dynamically generated in the browser.

- Rotating IP: Just like the Smart backconnect proxy, the Crawling API uses rotating proxies to bypass blocks and avoid CAPTCHAs. Although its proxy pool may not be as vast as a backconnect proxy's, it is sufficient to crawl most websites.
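
The optional parameters and request methods listed above can be combined in one request builder. This is a sketch under several assumptions: the same endpoint and `token`/`url` parameters as Crawlbase's documentation describes (verify them there), only the two optional parameters this article names (`country` and `user_agent`), and an API that forwards a POST body to the target URL. The helper name `build_api_request` is hypothetical, and the country code format ("US") should be checked against the docs.

```python
from typing import Dict, Optional
from urllib.parse import urlencode
from urllib.request import Request

API_ENDPOINT = "https://api.crawlbase.com/"  # assumed; confirm in the official docs


def build_api_request(
    token: str,
    target_url: str,
    method: str = "GET",
    form: Optional[Dict[str, str]] = None,
    country: Optional[str] = None,
    user_agent: Optional[str] = None,
) -> Request:
    query = {"token": token, "url": target_url}
    # Optional parameters are only added to the query string when set.
    if country is not None:
        query["country"] = country
    if user_agent is not None:
        query["user_agent"] = user_agent
    # Form data (e.g. for POST) is sent as an urlencoded request body.
    data = urlencode(form).encode() if form is not None else None
    headers = {"Content-Type": "application/x-www-form-urlencoded"} if data is not None else {}
    return Request(API_ENDPOINT + "?" + urlencode(query), data=data, headers=headers, method=method)
```

The returned `Request` is not sent until you pass it to `urllib.request.urlopen`, which makes it easy to inspect the final URL and body before spending a request.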

Which one is for you?

Now that we have described each product, their differences are easier to see. Below, we list the pros and cons of each product relative to the other to help you pick the right tool for the job.

Crawling API

| Pros | Cons |
|---|---|
| Best for extracting raw or parsed data | Default rate limit, which can be adjusted on request |
| Faster API response time | No static IP option |
| Better success rate | Some apps or software may not be able to use an API |
| More built-in options for crawling and scraping | |
| Supports all websites | |
| Compatible with other Crawlbase products like the Crawler, Screenshot API, and Storage API | |

Smart backconnect proxy

| Pros | Cons |
|---|---|
| Option to get huge pools of proxies | Can be slower than the Crawling API |
| Option to get static IPs | No built-in scraping capabilities |
| Compatible with all browsers, software, and custom apps that need proxies | Not compatible with websites like Google, LinkedIn, or Amazon |
| No rate limit (dependent on the number of threads) | Not compatible with other Crawlbase services |

It is also worth mentioning that in some cases both the Smart backconnect proxy and the Crawling API may be viable options for you, since both provide the anonymity you need and are very effective at avoiding blocks, CAPTCHAs, and IP bans when sending repeated requests to a website. In such cases, the decision may come down to cost.

Crawlbase’s Smart backconnect proxy service is subscription-based, so the monthly cost is fixed and recurring. The Crawling API’s pricing, by contrast, is tiered, and you pay at the end of each month based on the number of requests you made. With this payment model, there is no commitment, and you can control your expenses by paying only for what you use.
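
To see how the two pricing models compare at your own volume, you can do the arithmetic directly. The sketch below uses made-up numbers (a $99 monthly subscription and three per-request tiers); they are not Crawlbase's actual prices. It also assumes graduated tiers, where each request is billed at the rate of the bracket it falls into. Substitute the figures from the current pricing pages before drawing any conclusions.

```python
from typing import List, Optional, Tuple

# Hypothetical prices for illustration only; NOT actual Crawlbase pricing.
SUBSCRIPTION_PER_MONTH = 99.00

# Graduated tiers: (requests in this bracket, price per 1,000 requests).
# None means "all remaining requests".
TIERS: List[Tuple[Optional[int], float]] = [
    (100_000, 3.00),  # first 100,000 requests
    (900_000, 2.00),  # next 900,000 requests
    (None, 1.00),     # everything beyond 1,000,000
]


def pay_per_request_cost(requests: int) -> float:
    """Monthly cost under graduated tiers: each bracket is billed at its own rate."""
    cost = 0.0
    remaining = requests
    for bracket_size, price_per_thousand in TIERS:
        billed = remaining if bracket_size is None else min(remaining, bracket_size)
        cost += billed / 1000 * price_per_thousand
        remaining -= billed
        if remaining == 0:
            break
    return cost


def cheaper_option(requests: int) -> str:
    """Pick the cheaper model for a given monthly request volume (ties go to pay per request)."""
    if pay_per_request_cost(requests) <= SUBSCRIPTION_PER_MONTH:
        return "Crawling API (pay per request)"
    return "Smart backconnect proxy (subscription)"
```

With these made-up figures, the crossover sits at 33,000 requests per month (33 × $3 = $99). A real comparison should also account for the subscription's thread count and the API's rate limit, not just the price.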

Conclusion

At the end of the day, both products are built with quality proxies at their core. Each has its own strengths, weaknesses, and capabilities. This article should give you a good idea of which one will work best for you. If you still have questions, send us a message; our support team is always ready to help.